After reading Design Meets Disability, I noticed how it sheds light on an ongoing issue with how society views disabilities. The author explains that when design and disability meet, the results are often among the most intricate and interesting. It’s an opportunity for designers to test their skills in both creativity and functionality.

I loved the section where he criticizes designers for making many assistive technology (AT) devices, like hearing aids and prosthetics, as hidden as possible. I also believe that disabilities should not be a source of shame, but should be embraced. By prioritizing the concealment of these devices, we discourage disabled individuals rather than empower them, and we make them feel like outsiders instead of supporting them. By making AT more visible and beautifully designed, I believe designers can help change how people perceive disabilities.

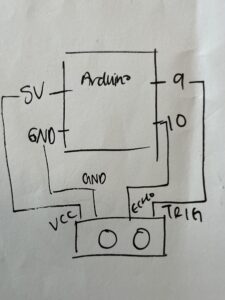

The idea of bringing engineers and designers together (‘solving’ and ‘exploring’) to create AT devices is brilliant because the result will be assistive technologies that are both useful and inspiring. I also think designers will be very useful in making these devices comfortable, since users will be wearing or using them all day (and sometimes all night).

I absolutely agree with the push for universal design, which means designing products that are usable by as many people as possible, including people with disabilities. The author mentions that rather than making a device for only one very specific disability, we could make devices with designs that are simple enough to be broadly adapted but smart enough to serve people with different needs. Overall, I believe this approach to design makes devices more accessible and more human.