Russian Roulette Final Project

To summarize, this project was about making a game like Russian Roulette but without a real gun. Two players stand opposite each other, and on each turn a player has two options: shoot the opponent or shoot themselves. The “gun” exists only in the p5.js sketch; it is loaded with a certain number of live rounds, and the rest are blanks. If a player shoots themselves and it’s a blank, they get another turn. Since one more blank is now out of the chamber, the next round is more likely to be live, which improves their chances of winning. If they shoot the other player and it’s a blank, their turn ends, the players swap, and the cycle continues. Each player has 3 lives, and a player who runs out of lives has to use a shock pen. The purpose of the shock pen was to create some kind of stakes, just like real Russian Roulette but more sane.

To me, this project represents more than a fun creation. I genuinely enjoyed it, but it was also a huge learning experience, and not just in coding, soldering, and the other physical work I did. I’ll explain how as I go from beginning to end.

I originally had this idea before the midterm and thought about making a Russian Roulette game then. I chose a different path and made a Mexican Standoff game instead. It was arguably slightly harder to make, and going into it I had little idea what I was doing. I learned how to switch between scenes (thanks to Pi telling me about a scene management class), but more importantly I learned a lot about planning: halfway through, I had to sit down and sketch a diagram of what I wanted the game to be like on a whiteboard in a library study room.

So the midterm was where I first began to plan things properly, and I wasn’t very efficient at it. Going into this project I planned again, but this time I also had to consider the physical side: making and wiring all the components I would need. Even the planning was difficult, to be honest, because I didn’t really know where to start and I didn’t know what was available in the Intro to IM lab. I was very fortunate to learn more about what was available through our lessons, where we learned about renting equipment and saw the different sections of the lab, like the consumables or the blue closet (I don’t know if it has a name).

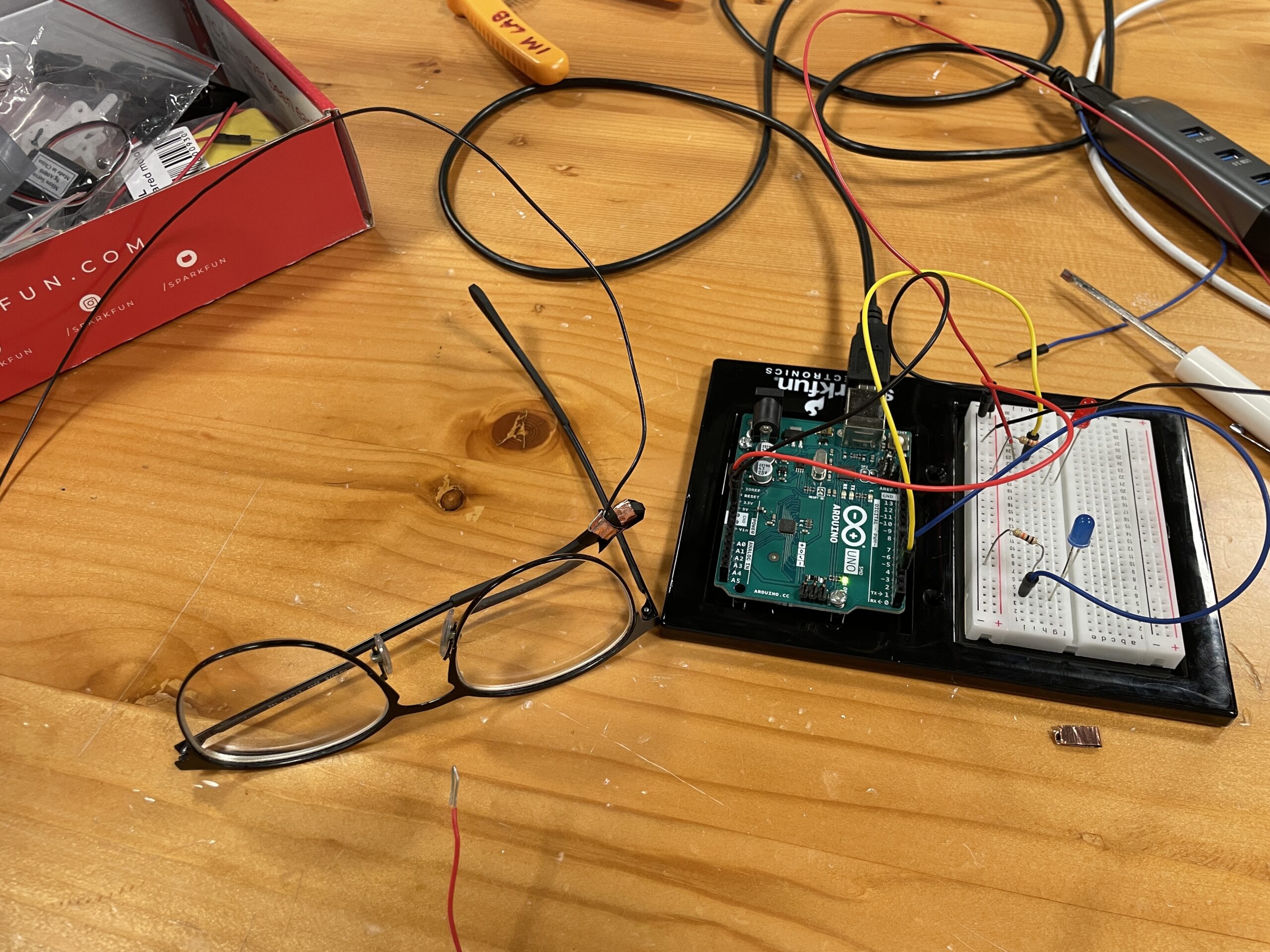

Then, to get started, I had to learn serial communication. I think a lot of people would agree that it was very confusing, and I would argue it remained a problem in my project from beginning to end, even though I thought I understood it at the start. It was by far the biggest mental hurdle and had been bothering me for a couple of days: whenever I tried to understand Professor Aaron’s code and change it to fit my project (4 buttons, a potentiometer, and 2 LEDs), it wouldn’t work, even though I felt I understood it when working with Snehil and Khalifa on the previous assignment.

The way my project works is that the Arduino first detects presses from each of the four buttons as well as the input from the potentiometer. Then, using serial communication, the Arduino sends the button and potentiometer readings to the p5.js sketch, and the sketch sends a message back in response to complete the handshake.
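To make the handshake a bit more concrete, here is a minimal sketch of what the p5.js side of that exchange could look like. The message format is an assumption for illustration, not the format my actual sketch uses: one comma-separated line per reading (four button states as 0/1, then the potentiometer value), answered by a short reply carrying the two LED states. The function names `parseSerialLine` and `buildReply` are hypothetical.

```javascript
// Assumed line format (hypothetical): "b1,b2,b3,b4,pot\n"
// b1..b4 are button states (0 or 1), pot is the raw potentiometer reading.
function parseSerialLine(line) {
  const parts = line.trim().split(",").map((p) => p.trim());
  // Reject incomplete or garbled lines instead of guessing at their meaning.
  if (parts.length !== 5) return null;
  if (!parts.every((p) => /^\d+$/.test(p))) return null;
  const [b1, b2, b3, b4, pot] = parts.map(Number);
  return {
    buttons: [b1, b2, b3, b4].map((b) => b === 1),
    pot,
  };
}

// Reply sent back after every valid line, so the Arduino knows it was read
// and can send the next one. Assumed format: the two LED states as 0/1.
function buildReply(led1On, led2On) {
  return `${led1On ? 1 : 0},${led2On ? 1 : 0}\n`;
}
```

Returning `null` for a bad line means the sketch can simply skip that frame and wait for the next reading, which is one way to avoid acting on half-received data.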

The p5.js code is slightly more complex. First, I have multiple scenes, all managed by a scene management class: depending on the number the class receives, it calls a different function, and each of those functions is a scene. For example, the disclaimer scene is just a function that draws all of its shapes and so on, and it is only called when the scene number is 2. The same goes for the other scenes.

As for the game itself: after the animation, there is an array of 6 booleans. If an element is true, that round is live; if it is false, the round is blank. Another global variable called selected_round is incremented after each shot to track which round in the chamber is being fired. The players have two options, represented as two buttons. The red button means the player shoots themselves, and the blue button means the player shoots the person in front of them. If the player chooses to shoot themselves and it’s a blank, it remains their turn, and the next round is now more likely to be live, so they are more likely to win the round. However, if a player chooses to shoot the other and it’s a blank, they switch turns, which shifts the odds toward the other player, since it’s now more likely for them that the next round is live.

Furthermore, I added difficulties, which change the number of live rounds: the easiest difficulty, “Standard”, has only one live round, and the hardest, “Masochist”, can have between 3 and 5. Each player has 3 lives, and at the end of it all, if they choose to, the loser has to use a shock pen so that there are stakes and the game is more interesting.
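The chamber and turn rules described above can be sketched roughly like this. It is a simplified illustration rather than my actual code: `createChamber` and `takeShot` are hypothetical names, the shuffle is my own choice, and I am assuming that the turn passes to the other player after any live round. What happens when the chamber runs out is left out.

```javascript
// Build a chamber of `size` rounds with `liveCount` live ones
// (true = live, false = blank), then shuffle it with Fisher-Yates.
function createChamber(liveCount, size = 6, random = Math.random) {
  const chamber = Array.from({ length: size }, (_, i) => i < liveCount);
  for (let i = size - 1; i > 0; i--) {
    const j = Math.floor(random() * (i + 1));
    [chamber[i], chamber[j]] = [chamber[j], chamber[i]];
  }
  return chamber;
}

// state:  { chamber, selectedRound, turn (0 or 1), lives: [n, n] }
// target: "self" (red button) or "other" (blue button)
// Returns a new state plus `live`, telling the caller what was fired.
function takeShot(state, target) {
  const live = state.chamber[state.selectedRound];
  const shooter = state.turn;
  const victim = target === "self" ? shooter : 1 - shooter;

  const lives = state.lives.slice();
  if (live) lives[victim] -= 1;

  // Only a blank fired at yourself keeps your turn.
  // Assumption: after a live round the turn always passes.
  const keepTurn = target === "self" && !live;

  return {
    chamber: state.chamber,
    selectedRound: state.selectedRound + 1,
    turn: keepTurn ? shooter : 1 - shooter,
    lives,
    live,
  };
}
```

With this shape, “Standard” would be something like `createChamber(1)`, and “Masochist” would pick a live count between 3 and 5 before calling it.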

I’m particularly proud of the UI elements, specifically holding down a button to go to the next page. To me, that felt the most satisfying.
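For anyone curious how a hold-to-continue button can work, here is one possible way to do it, written as a small sketch and not copied from my project. The name `createHoldTracker` is hypothetical. The idea is to call `update` once per frame with the button state and the current time in milliseconds (for example from `millis()` in p5.js), and use the returned progress to draw a fill bar.

```javascript
// Tracks how long a button has been held and reports when the hold completes.
function createHoldTracker(holdMs) {
  let heldSince = null; // time the current hold started, or null if released
  let fired = false;    // true once this hold has already triggered

  return {
    update(isPressed, nowMs) {
      if (!isPressed) {
        // Releasing the button resets everything.
        heldSince = null;
        fired = false;
        return { progress: 0, completed: false };
      }
      if (heldSince === null) heldSince = nowMs;
      const progress = Math.min((nowMs - heldSince) / holdMs, 1);
      // `completed` is true on exactly one update per hold.
      const completed = progress >= 1 && !fired;
      if (completed) fired = true;
      return { progress, completed };
    },
  };
}
```

Because `completed` only fires once per hold, keeping the button pressed does not skip through several pages in a row.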

For the future, there are a lot of things I would change. I would start by building the game itself first; ironically, I made the mistake of starting with the start page. That would have made my life a lot easier, but at the same time I am somewhat glad I did what I did, because the UI was a large part of what I liked, and I hope what others liked, about the game.

The sketch on p5.js may behave a bit oddly because I coded it in VS Code and sized the canvas to fit my Mac’s screen, so it may take a while to load and/or be hard to use.