Automated Trash Sorting System: A Sustainable Waste Solution

Concept:

The project’s main goal is to develop a trash sorting system that can help the environment in several ways. The system uses an Arduino together with a capacitive sensor and an IR sensor to automatically detect objects placed in a trash bin and sort them into plastic and general waste.

The automatic trash sorting system helps make sure that things we can recycle, like plastic, don’t get thrown away in the trash and sent to landfills. When plastic items are sorted out from regular trash, we can recycle them correctly, which saves important materials and reduces the amount of new plastic things we have to make. This is good for the environment because it means less pollution from plastic and less energy used to make new plastic stuff.

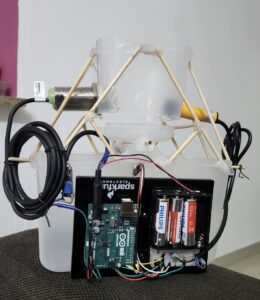

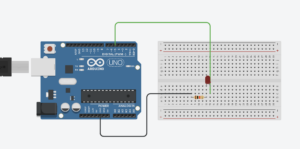

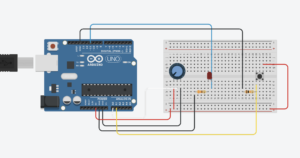

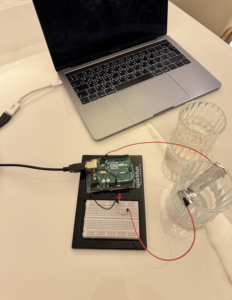

Images of the project:

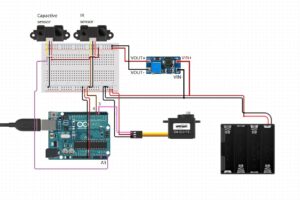

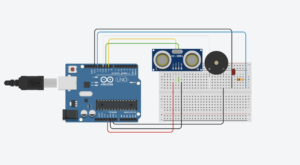

Components:

Arduino Uno

6 V battery

IR sensor: E18-D80NK Adjustable Infrared Sensor

Capacitive sensor: Autonics Proximity Sensors Capacitive Sensors CR30-15DN

DC-to-DC boost converter: uses a switching circuit and energy-storage components (inductors and capacitors) to raise the input voltage to a higher level through controlled switching and energy transfer.
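To make the boost converter's role concrete, here is a rough C++ sketch of the textbook ideal relation Vout = Vin / (1 - D), where D is the switching duty cycle. It ignores losses and assumes continuous conduction. The 12 V target is an illustrative assumption, not a value measured in this project, and the function names are made up for this example.

```cpp
// Ideal (lossless) boost converter relation: Vout = Vin / (1 - D).
// D is the fraction of each switching period the switch is closed (0 <= D < 1).
constexpr double idealBoostOutput(double inputVolts, double dutyCycle) {
    return inputVolts / (1.0 - dutyCycle);
}

// Inverse of the relation above: the duty cycle an ideal converter
// would need to reach a given output voltage.
constexpr double idealBoostDuty(double inputVolts, double outputVolts) {
    return 1.0 - inputVolts / outputVolts;
}
```

Under these assumptions, stepping the 6 V battery up to a hypothetical 12 V rail needs a duty cycle of 0.5. A real module needs a somewhat higher duty cycle because of losses, which is why boost modules are usually adjusted by measuring the output.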

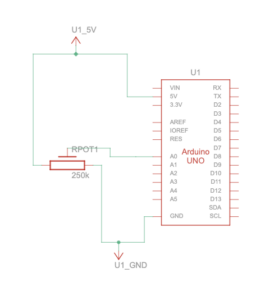

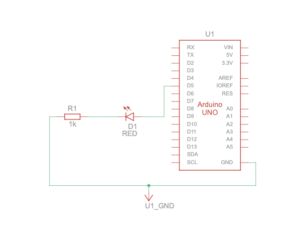

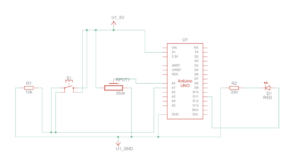

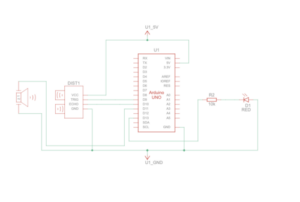

Schematic:

Videos:

User testing

How does the implementation work?

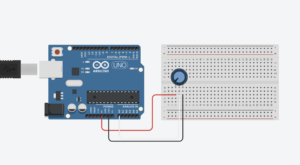

The implementation combines an Arduino with a p5.js web interface. The Arduino reads a capacitive sensor, which indicates what kind of material something is made of (for example, whether it’s plastic or something else), and an IR sensor, which detects whether there is something in the trash bin or not. To make the system more useful, the project also has a web interface made with p5.js, where you can control and change how the system works. It’s like having a remote control for your TV, but for this trash sorting system. With the web interface, you can tell the system what to do, like sorting the trash in different ways or turning it on and off. This makes it easier for people to use and control the system the way they want.

Sensors:

IR Sensor: (Digital)

The infrared sensor has two LEDs: one emits infrared light and the other receives it. If there is an object in front of the sensor, the light is reflected from the object back to the sensor. The IR sensor detects whether an object is present, but it cannot tell what the object is made of. Its output is digital: a high signal (5 volts) means there is no object, and a low signal (0 volts) means there is an object.

Capacitive sensor: (Digital)

A capacitive sensor detects an object through the change it causes in the sensor’s electric field; whether it triggers also depends on the sensitivity setting and the distance. In this project, the capacitive sensor is used to detect objects that are not plastic, since it responds to conductive objects. When the IR sensor detects an object, the system checks the capacitive sensor: if its output is high (10 volts), the object is treated as general waste (conductive); if it is low (0 volts), the object is treated as plastic (non-conductive).
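The decision described in this section can be sketched as a small pure function. This is host-side C++ for illustration, not the project's actual Arduino code. The names `Material`, `classify`, and `gateAngleFor` are hypothetical; the angles (85, 11, 150) are the values defined in the Arduino snippet further down.

```cpp
// Outcome of one sensor check.
enum class Material { None, Plastic, General };

// Servo angles taken from the variables in the write-up's Arduino code.
constexpr int kAngleClosed  = 85;
constexpr int kAnglePlastic = 11;
constexpr int kAngleGeneral = 150;

// irSignalHigh:  IR sensor output level (HIGH means no object is present).
// capSignalHigh: capacitive sensor output level (HIGH means a conductive object).
constexpr Material classify(bool irSignalHigh, bool capSignalHigh) {
    if (irSignalHigh) return Material::None;  // nothing in front of the IR sensor
    return capSignalHigh ? Material::General : Material::Plastic;
}

// Maps the classification to the gate angle the servo should move to.
constexpr int gateAngleFor(Material material) {
    switch (material) {
        case Material::Plastic: return kAnglePlastic;
        case Material::General: return kAngleGeneral;
        case Material::None:    break;
    }
    return kAngleClosed;
}
```

Checking the IR sensor first means the capacitive reading only matters once an object is known to be present, which matches the order described above.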

Description of interaction design:

The interaction design of the system is all about how people can use and communicate with it. In this case, users can interact with the system through the p5.js web interface, which is like a control panel on a computer screen.

Inside this interface, there are buttons that you can click on. These buttons allow you to do different things. For example, there are buttons that let you choose how the system works, like whether it should do things automatically by itself (automatic mode) or if you want to control it yourself (manual mode). This is similar to choosing between letting a robot do a task for you or doing it with your own hands.

There are also buttons to tell the system what kind of material you’re putting in the trash. You can say if it’s plastic or something else (general waste). This helps the system know what to do with the trash.

To make things even clearer, the interface shows you text on the screen. This text tells you what mode the system is in and what kind of material it’s looking for. This way, you can always see and understand what the system is doing.

Description of Arduino code + code snippets:

The Arduino code uses the Servo library and defines the pins for the capacitive and IR sensors. It reads incoming serial data, processes the angle value, and adjusts the servo accordingly. Modes (automatic/manual) and sensor readings are also handled.

Include the Servo library and define the pins and the required variables:

#include <Servo.h>

Servo GateServo;

#define capSensor A3 // Pin A3 (analog)

#define irSensor 4 // Pin 4 (digital)

#define GateServoPin 3 // GateServo pin

bool openTrash = false;

bool autoMode = true;

// Plastic goes to angle 11

// General goes to angle 158

// The closing angle of the gate, which is 85 degrees

int initialServoPosition = 85;

int anglePlastic = 11;

int angleGeneral = 150;

int capValue;

unsigned long GateServoReturnTime = 0;

const unsigned long GateServoReturnDelay = 1000; // 1 second in milliseconds

Receive data from p5.js via the serial connection and extract the angle for plastic and general:

void loop()

{

while (Serial.available() > 0) {

// Read the angle value from serial

String incomingData = Serial.readStringUntil('\n');

//If the mode is manual enable controlling by the p5js panel

if (!autoMode) {

// Check if the incoming data starts with "angle:"

if (incomingData.startsWith("angle:")) {

// Extract the angle value from the incoming data

int angleValue = incomingData.substring(6).toInt();

// Process the angle value if it is within the valid range

if (angleValue >= 11 && angleValue <= 158) {

// Serial.println("Received angle: " + String(angleValue));

// Set the GateServo angle

GateServo.write(angleValue);

// Reset the timer each time a valid angle is received

openTrash = true;

GateServoReturnTime = millis();

}

}

}
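The angle handling in the snippet above can be approximated on a desktop compiler with the following C++17 sketch. It is an illustration rather than the code that runs on the board: `parseAngleCommand` is a hypothetical helper, and unlike Arduino's `String::toInt()` it rejects a missing number instead of returning 0. The 11 to 158 limits are the ones used in the range check above.

```cpp
#include <string_view>

// Limits taken from the range check in the write-up's loop().
constexpr int kMinAngle = 11;
constexpr int kMaxAngle = 158;

// Returns the angle carried by a line such as "angle:90", or -1 if the line
// is not an angle command or the value lies outside [kMinAngle, kMaxAngle].
constexpr int parseAngleCommand(std::string_view line) {
    constexpr std::string_view prefix = "angle:";
    if (line.substr(0, prefix.size()) != prefix) return -1;
    line.remove_prefix(prefix.size());

    int value = 0;
    bool sawDigit = false;
    for (char c : line) {
        if (c < '0' || c > '9') break;     // stop at '\r', spaces, etc.
        value = value * 10 + (c - '0');
        sawDigit = true;
        if (value > kMaxAngle) return -1;  // out of range; also prevents overflow
    }
    if (!sawDigit || value < kMinAngle) return -1;
    return value;
}
```

For example, `parseAngleCommand("angle:90")` yields 90, while `"angle:159"`, `"angle:"`, and `"Auto"` all yield -1, so the servo would only ever be driven to a validated angle.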

Get the working mode from the p5.js panel and control the servo:

if (incomingData.startsWith("Auto")) {

autoMode = true;

// Serial.println("Auto mode: " + String(autoMode));

}

else if (incomingData.startsWith("Manual")){

autoMode = false;

// Serial.println("Manual mode: " + String(autoMode));

}

}

// Check if it's time to return the GateServo to 85 degrees

if (millis() - GateServoReturnTime >= GateServoReturnDelay && openTrash) {

openTrash = false;

GateServo.write(initialServoPosition);

// Serial.println("Trash Closed");

}
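The timed return of the gate relies on comparing elapsed time rather than calling `delay()`, so the loop keeps reading serial data while the gate is open. The check can be sketched as a standalone function in host-side C++. `gateReturnDue` is a hypothetical name, and `std::uint32_t` stands in for the 32-bit `unsigned long` that `millis()` returns on an Arduino Uno.

```cpp
#include <cstdint>

// True when the gate is open and at least delayMs have passed since it opened.
// Unsigned subtraction wraps modulo 2^32, so the comparison stays correct
// even after the millisecond counter overflows and restarts from zero.
constexpr bool gateReturnDue(std::uint32_t nowMs,
                             std::uint32_t openedAtMs,
                             std::uint32_t delayMs,
                             bool gateOpen) {
    const std::uint32_t elapsedMs = static_cast<std::uint32_t>(nowMs - openedAtMs);
    return gateOpen && elapsedMs >= delayMs;
}
```

Writing the condition as `now - start >= delay` instead of `now >= start + delay` is what makes it safe across the counter rollover, which happens roughly every 49.7 days on a 32-bit millisecond counter.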

Description of p5.js code + Embedded p5.js sketch:

The p5.js code controls the web interface, buttons, and communication with Arduino. It displays the current mode and material type and provides buttons for user interaction.

Description of communication between Arduino and p5.js:

Communication between Arduino and p5.js is achieved through the serial port. The p5.js script sends commands for mode setting and material type selection, and the Arduino reads and processes these commands, adjusting the servo and handling sensors accordingly.

The breakdown of the bidirectional communication:

1. p5.js to Arduino:

- Sending Commands & Data: In p5.js, the serial.write(data) function is used to send data to the Arduino. This data could be commands, sensor readings, or any other information that needs to be sent to the Arduino.

- Receiving in Arduino: On the Arduino side, Serial.available() is used to check whether there is data in the serial buffer. If data is available, Serial.readStringUntil('\n') reads the incoming line so it can be processed.

2. Arduino to p5.js:

- Sending Data from Arduino: In the Arduino code, Serial.print() and Serial.println() send data back to the p5.js sketch over the serial port. This could be sensor readings or any other information that needs to be visualized or processed in p5.js.

- Receiving in p5.js: In the p5.js sketch, serial.on('data', gotData) registers a function (gotData) that is called whenever new data is received. The incoming data is processed inside this function.
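On the p5.js-to-Arduino side, the messages are newline-terminated text lines, and the Arduino code shown earlier recognizes three prefixes: "Auto", "Manual", and "angle:". A minimal sketch of that dispatch step, written as host-side C++17 for illustration (the `Command` enum and function names are hypothetical, not part of the project's code):

```cpp
#include <string_view>

// The kinds of line the Arduino snippet in this write-up reacts to.
enum class Command { SetAuto, SetManual, SetAngle, Unknown };

// Mirrors Arduino's String::startsWith for a string_view.
constexpr bool startsWith(std::string_view text, std::string_view prefix) {
    return text.substr(0, prefix.size()) == prefix;
}

// Classifies one received line (without its trailing '\n').
constexpr Command commandOf(std::string_view line) {
    if (startsWith(line, "Auto"))   return Command::SetAuto;
    if (startsWith(line, "Manual")) return Command::SetManual;
    if (startsWith(line, "angle:")) return Command::SetAngle;
    return Command::Unknown;
}
```

Because matching is by prefix and case-sensitive, a trailing carriage return is tolerated, but a line such as "auto" would be classified as unknown. In the original code an angle line only moves the servo while manual mode is active.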

What are some aspects of the project that you’re particularly proud of?

The seamless integration of hardware components, the user-friendly p5.js interface, and the efficient sorting mechanism underscore the project’s success in creating a functional and user-centric waste disposal system. These achievements not only enhance the user experience but also promote environmentally responsible waste management practices by automating the segregation process based on detected material types.

Challenges faced and how you tried to overcome them:

Challenges included sensor calibration, ensuring real-time communication between Arduino and p5.js, and optimizing the sorting algorithm.

- Sensor Calibration and Accuracy: One of the primary challenges encountered during the project was ensuring accurate sensor readings for object detection. The capacitive and IR sensors required precise calibration to reliably distinguish between plastic and general waste. To address this, an extensive calibration process was undertaken, involving systematic adjustments to sensor thresholds, distances, and environmental conditions. Regular testing with a variety of objects helped fine-tune the sensor parameters, improving the overall accuracy of material classification.

- Real-time Communication: Achieving seamless and real-time communication between the Arduino and the p5.js web interface was another significant challenge. Ensuring that commands were sent and received promptly without delays was crucial for responsive user interaction.

What are some areas for future improvement?

There are several exciting possibilities for future improvements to make the system even better and more efficient.

One area of improvement could focus on enhancing the sorting algorithm. Right now, the system can distinguish between plastic and general waste, but in the future, it could be trained to recognize and sort a wider range of materials. For example, it could learn to separate paper, glass, and metal items, increasing the effectiveness of recycling and reducing waste even further.

Another exciting advancement could involve implementing machine learning techniques. By integrating machine learning, the system could become even smarter when it comes to recognizing different objects accurately. This means it could become better at identifying and sorting items that might be tricky to distinguish using only sensors. Machine learning can help the system adapt and improve its performance over time, making it more reliable in sorting various materials.

IM Showcase:

The interactive media showcase was engaging and educational, and I gained a lot from everyone presenting there. It was a fun and enlightening experience. The highlight for me was a conversation with Professor Eva Mansour. She encouraged me to present my project to the Ministry of Climate Change and Environment in the UAE. She believes they would appreciate my project and sees potential for its implementation in the UAE.

Professor Mansour advised me on several key steps: firstly, to publish a paper outlining my project’s concept and findings; then, to seek funding for further development; and finally, to conduct extensive testing. She also emphasized the importance of design, suggesting improvements to enhance its appeal. Her insights have given me much to consider, and I’m hopeful about the possibility of implementing this project in the UAE.

Finally, a huge shoutout to Professor Aya for running such an incredible course. Thank you for your guidance and support; I appreciate it. Every step of the way, you were there, making the whole learning journey not just educational but really enjoyable too. Thank you! <3

testing students projects

Links to resources used: