Concept:

Whenever I step into an art gallery, I am captivated by the stories each artwork tells, the vibrant strokes on the canvas, and the interplay of light and shadow. Inspired by these immersive experiences, I wanted to recreate a similar atmosphere, merging the tranquility of a gallery with the excitement of interaction. My vision for the p5.js Art Gallery centered around creating a dynamic and immersive digital space that embodies the essence of generative art. Influenced by the interplay of light and space, I aimed to design an environment that encourages exploration and creative expression. Extensive research into contemporary digital art installations and interactive galleries inspired the overall concept, guiding me in the creation of an engaging and visually captivating experience for visitors.

The central idea behind my project is to encourage users to explore the art at their own pace and immerse themselves in the diverse creations on display. To achieve this, I plan to incorporate interactive elements such as virtual paintings that users can admire, virtual sculptures they can examine from all angles, and informational pop-ups that offer insights into each artwork. By subtly integrating these interactive features, I aim to evoke curiosity and invite users to engage with the art, thereby fostering a unique and personalized gallery experience for each visitor.

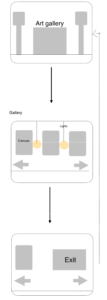

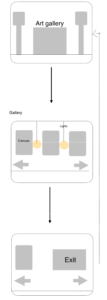

Wireframes I drew for my project:

In planning the sketch, I paid close attention to user experience and interaction design. By integrating logical operations, including conditional statements, loops, and rendering functions, I aimed to ensure a seamless and engaging user journey within the gallery. Hand sketches and diagrams were instrumental in mapping out the flow of user interactions and visualizing the logical structure of the code, which made development more systematic.

The pictures of the art gallery are all drawn by me using online tools. I carefully made the inside and outside parts, paying close attention to the lights, light switch, floor, and the way out. Using my creativity and computer skills, I worked hard to create a detailed and accurate representation of the gallery.

Parts I’m Proud of and Challenges Overcome:

- Figuring out when the mouse touches the artwork:

I’m proud of this code because it lets the artwork respond when I click the mouse near it. This part specifically deals with showing the spiral artwork on the screen and checking if I’m clicking near it.

    // Function to render the spiral
    function renderSpiral(x, y, r) {
      push(); // Save the current drawing style settings and transformations
      translate(x + sposX, y); // Move the origin to the artwork's position, shifted by sposX
      image(frameIMG[1], -r / 1.8, -r / 1.8, r * 1.1, r * 1.1); // Display the frame image with the specified dimensions
      pop(); // Restore the previous drawing style settings and transformations

      // If the mouse is pressed inside the artwork's bounding box
      if (mouseX < x + sposX + r / 2 && // Mouse is left of the box's right edge
          mouseX > x + sposX - r / 2 && // Mouse is right of the box's left edge
          mouseY < y + r / 2 &&         // Mouse is above the box's bottom edge
          mouseY > y - r / 2 &&         // Mouse is below the box's top edge
          mouse) {                      // Check if the mouse is pressed
        rect(0, 0, width, height); // Draw a rectangle covering the entire canvas
        for (var i = 0; i < spiral.length; i++) {
          push(); // Save the current drawing style settings and transformations
          spiral[i].render(width / 2.5, height / 3, r / 5); // Call the render function for the spiral object
          pop(); // Restore the previous drawing style settings and transformations
        }
      }
    }

This code checks if I’m clicking near the artwork by looking at where I click and comparing it with the artwork’s position and size. If I click close to the artwork, it shows more spirals on the screen, giving a cool effect that changes when I interact. Making this work well was a bit tough. I had to make sure the program could understand where I’m clicking and when it’s close to the artwork. Getting the right balance between showing the spirals and detecting my clicks accurately was important to make sure the interaction feels smooth and fun.
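The position-and-size comparison described above can be pulled out into a small helper. This is only a sketch: `isInsideSquare` is a hypothetical name that does not appear in the original project, and the usage comment relies on p5.js's built-in `mouseIsPressed` rather than the sketch's own `mouse` flag.

```javascript
// Hypothetical helper (not part of the original sketch): returns true when
// the point (px, py) lies strictly inside the square of side `size`
// centred on (cx, cy).
function isInsideSquare(px, py, cx, cy, size) {
  const half = size / 2;
  return px > cx - half && px < cx + half &&
         py > cy - half && py < cy + half;
}

// Possible use inside renderSpiral(x, y, r), assuming sposX is the
// horizontal offset already used by the sketch:
//   if (mouseIsPressed && isInsideSquare(mouseX, mouseY, x + sposX, y, r)) {
//     // show the enlarged spirals
//   }
```

Keeping the test in one function means the same check could be reused for every artwork instead of repeating the four comparisons each time.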

- Switching the user perspective from scene-to-scene.

I’m proud of this code because it helps smoothly switch what I see in the program, going from one scene to another without any sudden jumps. This part, called ‘Screen,’ takes care of showing different pictures and managing the sounds in the background.

    // Check if the screen is outside or inside
    if (screen == 0) {
      push(); // Save the current drawing style settings and transformations
      // Display image outside screen
      image(sIMG[0], 0, 0, width, height);
      pop(); // Restore the previous drawing style settings and transformations

      // Display principal text
      push();
      translate(width / 2, height / 1.1);
      image(sIMG[2], -100, -15, 200, 30); // Display the image with specified dimensions and position
      pop();

      // Render Art Gallery
      agRender(); // Call function to render the art gallery
    } else {
      push(); // Save the current drawing style settings and transformations
      // Display image inside the screen with dynamic x-position
      image(sIMG[1], sposX, 0, width * 2.5, height);
      pop(); // Restore the previous drawing style settings and transformations
    }

This part makes sure that the sound keeps playing smoothly in the background while I move between scenes, making the whole experience more immersive and enjoyable. When I’m outside in the program (screen == 0), it shows the right picture and puts the main text in the correct place, making it look nice and organized. Making this work well was a bit tricky. I had to make sure that the images changed in a way that didn’t feel sudden or strange, and that the background sound kept playing without any interruptions. Finding the right balance between the pictures and the sound was important to make the whole experience feel smooth and natural.
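One way to keep a background track from restarting on every scene change is to start it only when it is not already playing. The helper below is a minimal sketch under that assumption, not the project's actual code: `ensureLooping` is a hypothetical name, and `track` is assumed to expose `isPlaying()` and `loop()`, as a `p5.SoundFile` does.

```javascript
// Hypothetical helper (not from the original sketch): start looping a track
// only if it is not already playing, so calling it from any scene is safe.
// `track` is assumed to provide isPlaying() and loop(), like p5.SoundFile.
function ensureLooping(track) {
  if (!track.isPlaying()) {
    track.loop();
    return true; // playback was started by this call
  }
  return false; // already playing, left untouched
}

// Possible use in draw(), with a hypothetical `bgMusic` loaded in preload():
//   ensureLooping(bgMusic);
```

Because the call does nothing while the track is already playing, it can sit in both branches of the scene check without causing overlaps or gaps.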

- I made sure to focus on small details. For instance, when you hover over the door, it plays a knocking sound, but the sound does not repeat for as long as you keep hovering. The knock only happens again if you move your cursor off the door and then back onto it. I also made sure that the initial music only plays the first time the door is opened and not every time after that, so the music doesn’t overlap with itself. Finally, I added sound effects for the light switch, for moving to the right and left, and so on.
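The knock-once-per-hover and play-once behaviours described above can both be sketched with a small amount of remembered state. The helpers below are illustrative only: `createEnterDetector`, `once`, `mouseOverDoor`, `knockSound`, and `introMusic` are hypothetical names, not taken from the original sketch.

```javascript
// Hypothetical helper: returns a function that reports true only on the
// frame where the pointer enters a region, not while it stays inside.
function createEnterDetector() {
  let wasInside = false;
  return function (isInside) {
    const entered = isInside && !wasInside;
    wasInside = isInside;
    return entered;
  };
}

// Hypothetical helper: wraps an action so that it runs at most once.
// The wrapper returns true on the call that ran the action, false afterwards.
function once(action) {
  let done = false;
  return function () {
    if (done) return false;
    done = true;
    action();
    return true;
  };
}

// Possible use in a p5.js sketch (all names below are assumptions):
//   const doorEntered = createEnterDetector();
//   const playIntro = once(() => introMusic.play());
//   // in draw():        if (doorEntered(mouseOverDoor)) knockSound.play();
//   // on door opening:  playIntro();
```

Tracking the previous frame's hover state is what lets the knock fire again only after the cursor has left the door and come back.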

Areas for Improvement and Future Work:

Moving forward, there are several areas in my project that could be refined to enhance the overall user experience and elevate the sophistication of the artworks. One key area for improvement is enriching the interactive elements within the gallery. For instance, I plan to incorporate more diverse and dynamic interactions with the displayed artworks, enabling users to manipulate shapes, colors, and other visual elements to create a more engaging and immersive experience. I would also like to introduce additional interactive features, such as the ability for users to leave virtual notes or comments on specific art pieces.

Looking ahead, my future work on the project will revolve around integrating advanced generative art techniques to create more intricate and visually captivating artworks. I am particularly interested in exploring the application of complex algorithms and procedural generation methods to produce intricate patterns, textures, and visual effects, thereby adding depth and sophistication to the displayed artworks. I am also keen on delving into the use of machine learning algorithms to develop art that dynamically adapts and evolves in response to user interactions or external stimuli, thereby creating a highly immersive and personalized art experience.

Final Project:

References:

- https://editor.p5js.org/mk7592/sketches/Q3_SYFuO6