Concept

As I mentioned in a previous post, I wanted to make a Mastermind game. Mastermind is a two-player board game in which one person, the code maker, creates a code using colored pegs. The other person, the code breaker, has to figure out the code in a limited number of moves. The fewer moves you take to figure out the code, the higher your score.

In the version that I made, the game is actually a single-player game: the code is randomly generated, and the player has to guess it. Players enter their guesses through a physical system of colored pegs and holes.
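To make the guessing rule concrete, here is a minimal sketch of how a guess could be scored against the secret code. The function name `scoreGuess` and the `[exact, partial]` return shape are assumptions for illustration (the sketch's own `Board` class is not shown in this post); it follows the standard Mastermind rule of counting exact matches first and then color-only matches among the remaining pegs.

```javascript
// Hypothetical helper, not taken from the actual sketch.
// Returns [exact, partial]:
//   exact   = right color in the right position
//   partial = right color in the wrong position
function scoreGuess(secret, guess) {
  let exact = 0;
  const secretLeft = [];
  const guessLeft = [];

  // First pass: count exact matches and set aside everything else.
  for (let i = 0; i < secret.length; i++) {
    if (guess[i] === secret[i]) {
      exact++;
    } else {
      secretLeft.push(secret[i]);
      guessLeft.push(guess[i]);
    }
  }

  // Second pass: each leftover guess peg can match at most one leftover secret peg.
  let partial = 0;
  for (const g of guessLeft) {
    const idx = secretLeft.indexOf(g);
    if (idx !== -1) {
      partial++;
      secretLeft.splice(idx, 1);
    }
  }

  return [exact, partial];
}

console.log(scoreGuess([0, 1, 2, 3], [0, 2, 1, 4])); // [ 1, 2 ]
```

A guess wins when the first number equals the code length, which is 4 here.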

Final Game

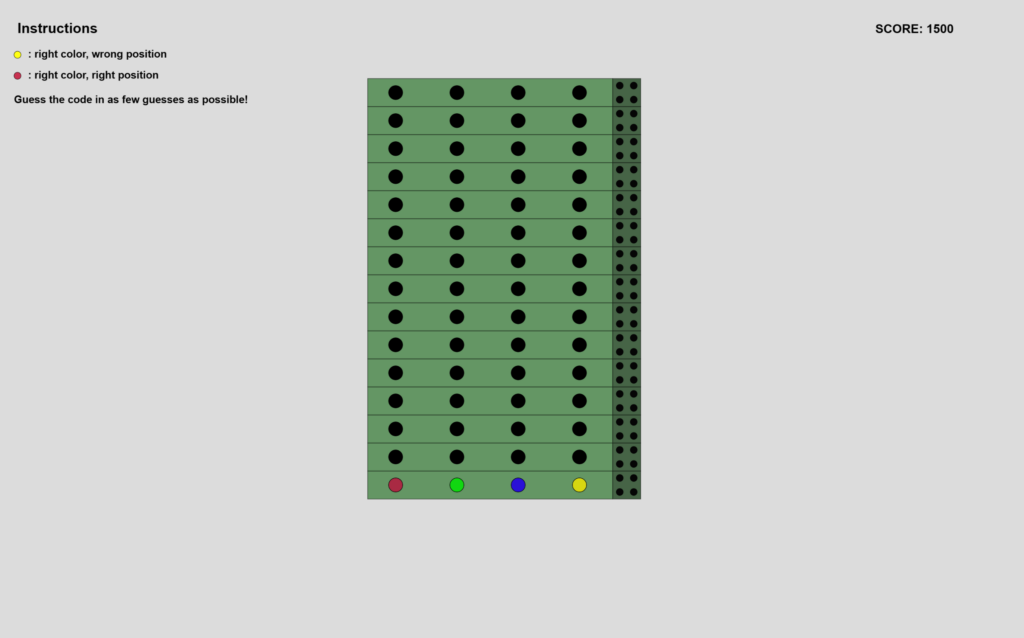

I’m very happy with the final P5Js interface for the game. It’s very minimalistic, which matches the style that I’ve chosen to go with for all of my projects. The instructions are in the upper left corner, as I didn’t want to clutter the interface by adding buttons to other pages. Also, I wanted to keep interaction with the laptop minimal if not zero, so I opted to have a static screen and focus on the physical input interface.

P5Js Sketch

The sketch does require a serial connection with Arduino. However, I enabled skipping the serial connection check so that the sketch can be viewed without an Arduino connection. You can press the “space” key to skip to the game screen. I have also adjusted the aspect ratio so that it displays properly within the Intro to IM blog post. It’s best viewed in fullscreen mode.

The Physical System

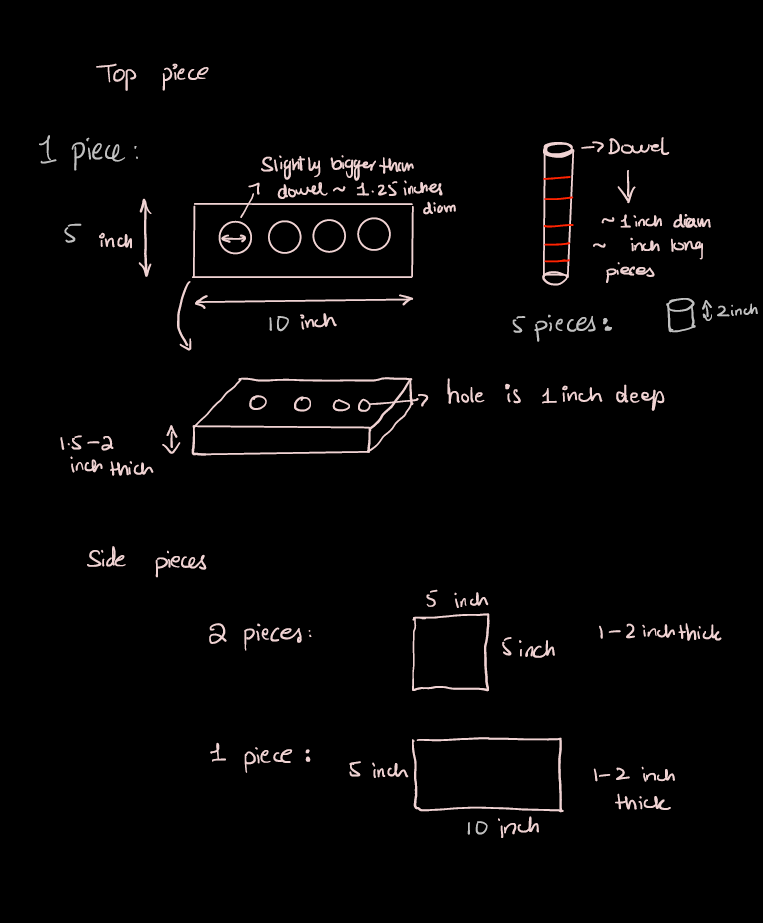

The control interface consists of a box with 5 colored pegs. In the picture below, you can see how the pegs fit into the console, and how the order can be changed to alter the input.

There is also a button on the left side of the interface, which can be pressed to confirm your choice. I used an LED momentary button because it reminded me of retro gaming consoles.

The color detection mechanism is rather naive, and it became a pain later on during the showcase as well. Each peg has an embedded LED, and each LED is set to a specific level of brightness. Each of the 4 holes contains a single light sensor (LDR), which measures the brightness once a peg is placed into the hole. Depending on the brightness of the LED, a value corresponding to the respective color is generated. In the case of yellow, this would be the value “3”: counting from 0, this is its index in the array that contains the color values, as well as its position in the order in which the pegs are fixed into the console.
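The mapping from a brightness reading to a color index can be sketched as a simple range lookup. This is an illustrative JavaScript version rather than the Arduino function itself; the range values mirror the constants in the Arduino code later in this post, while the names `COLOR_RANGES`, `NONE`, and `colorIndexFromReading` are made up for this example.

```javascript
// Ranges copied from the Arduino constants (minRed, maxRed, ...).
// The array order matches the color indexes: RED = 0 ... TURQUOISE = 4.
const COLOR_RANGES = [
  { name: "RED", min: 980, max: 1023 },
  { name: "GREEN", min: 610, max: 940 },
  { name: "BLUE", min: 220, max: 460 },
  { name: "YELLOW", min: 120, max: 220 },
  { name: "TURQUOISE", min: 0, max: 70 },
];

const NONE = 5; // value used when the reading matches no color

// Returns the index of the first range that contains the reading.
function colorIndexFromReading(reading) {
  for (let i = 0; i < COLOR_RANGES.length; i++) {
    const { min, max } = COLOR_RANGES[i];
    if (reading >= min && reading <= max) {
      return i;
    }
  }
  return NONE;
}

console.log(colorIndexFromReading(150)); // 3 (yellow)
console.log(colorIndexFromReading(500)); // 5 (no color)
```

Because the first matching range wins, the order of the ranges matters whenever two ranges touch or overlap.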

To ensure the fit was tight for consistent readings, I wrapped electrical tape around the pegs until there was a snug fit.

User Testing

I asked my friend to test the game for me. I told her nothing about how to use the interface, but she already knew about Mastermind.

She found the interface intuitive to use, but I think that it wasn’t as clear for people who didn’t know the game beforehand.

In the end, my friend won the game:

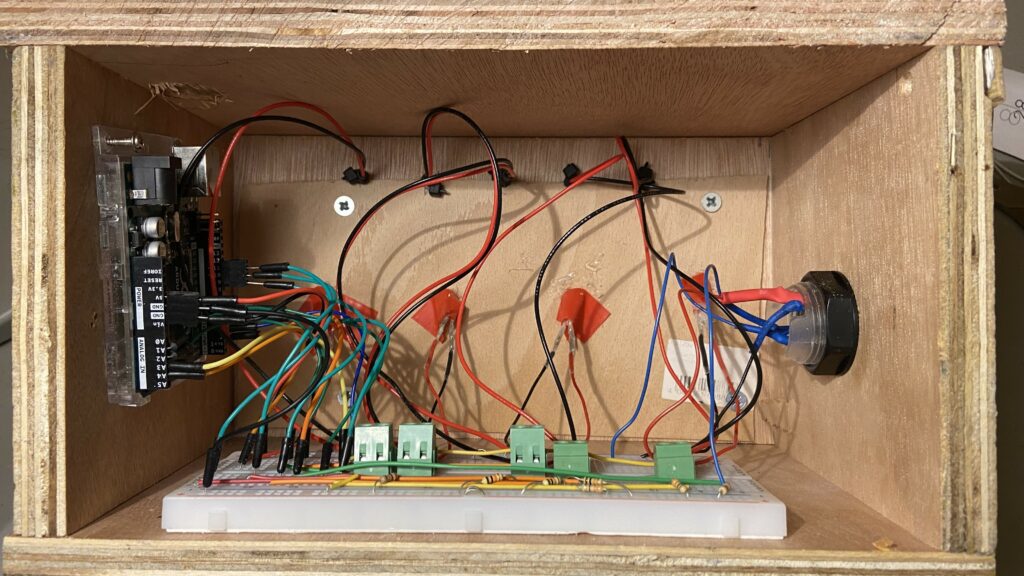

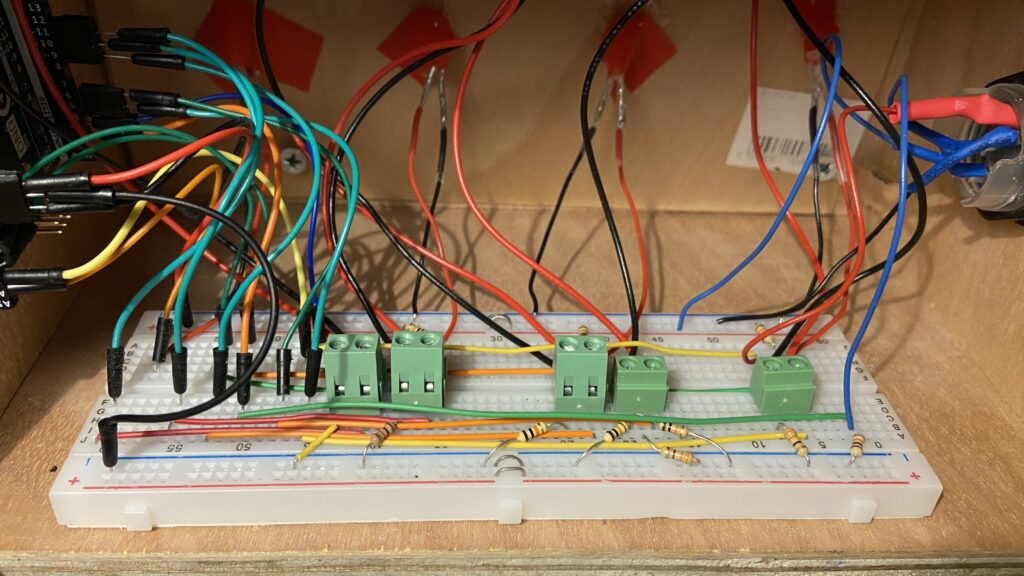

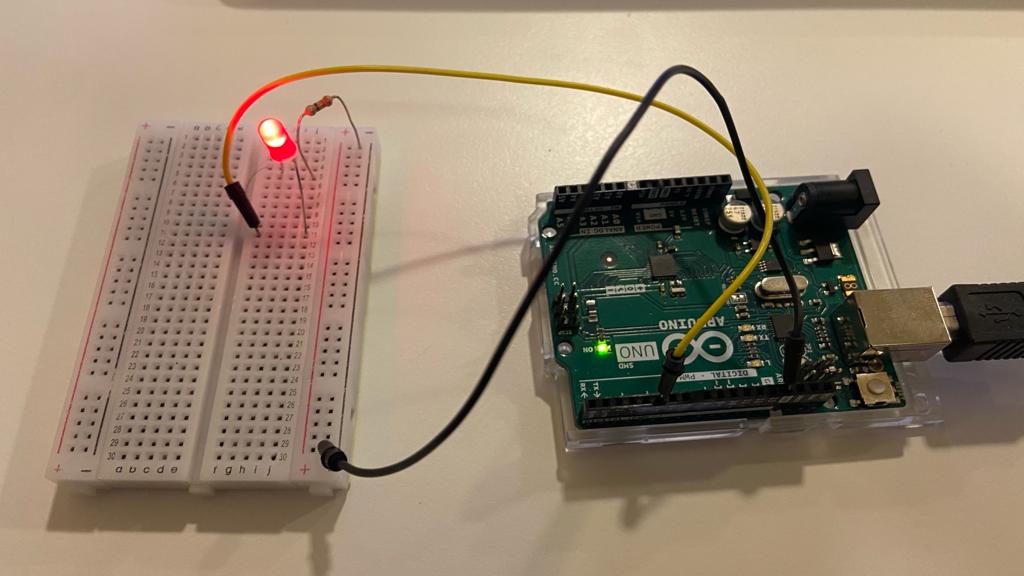

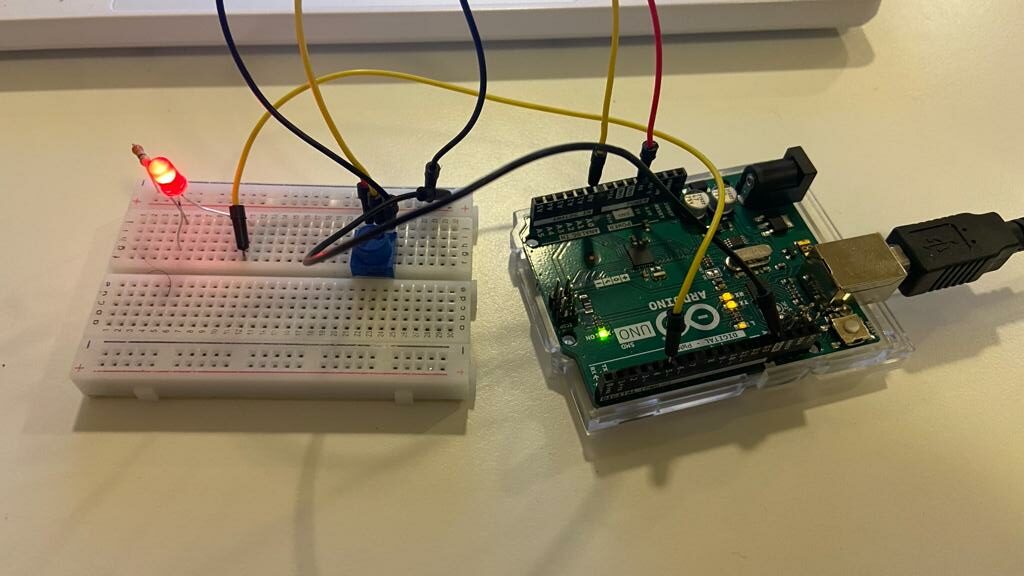

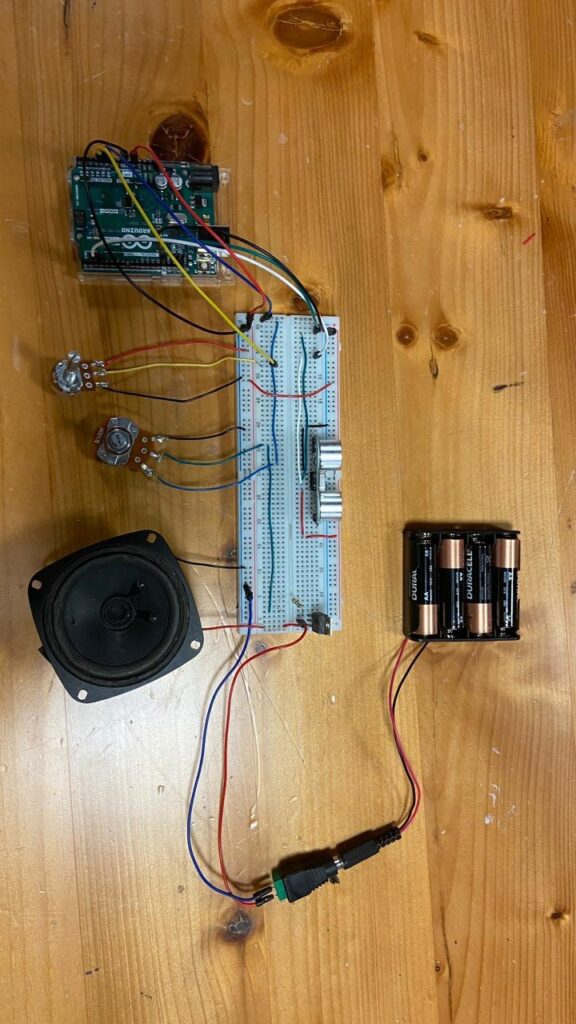

Circuit

I wanted to keep the circuit as neat as possible, so I kept the Arduino on one side of the box, placed a long breadboard in the middle, and ran the wires from each piece of hardware to the closest part of the breadboard. I then used jumper wires to complete the circuit on the breadboard.

I soldered the wires onto the LDRs and LEDs, and then used screw mounts to connect the other ends to the breadboard. I did the same for the LED button as well.

Communication Between P5Js and Arduino

I only implemented one-way communication, as the goal was to have the Arduino serve only as an input device. The Arduino therefore just sends the state of the current row of the game as four comma-separated integer color codes, followed by a binary value showing whether or not the button to lock in the choice is pressed.
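As an illustration of this message format, the sketch below parses one line such as `3,1,0,4,1` into a guess and a button flag. The function name `parseSerialLine` and the returned object shape are assumptions made for this example; the actual sketch does the equivalent inline with `split(",")`.

```javascript
// Hypothetical parser for one serial line: four color indexes plus the button state.
// Returns null for malformed lines (for example, a partial line at startup).
function parseSerialLine(line) {
  const parts = line.trim().split(",");
  const allDigits = parts.every((p) => /^\d+$/.test(p));
  if (parts.length !== 5 || !allDigits) {
    return null;
  }
  const values = parts.map(Number);
  return {
    guess: values.slice(0, 4), // color index for each of the 4 holes
    buttonPressed: values[4] === 1, // the sketch treats 1 as "pressed"
  };
}

console.log(parseSerialLine("3,1,0,4,1\r\n"));
// { guess: [ 3, 1, 0, 4 ], buttonPressed: true }
console.log(parseSerialLine("3,1,")); // null
```

Rejecting malformed lines keeps a half-received message from being treated as a guess.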

Challenges

The most challenging aspect was calibrating the LDRs so that each color mapped to its own range of readings. Because the LDRs are so sensitive, it was hard to keep the ranges for different colors from intersecting. There was a tradeoff: if I chose to spread out the ranges for each color, some colors would end up with the same brightness as the ambient light, and that color would then show up throughout the board. I chose to keep this in for the sake of more consistent input, but I realize that the decision made the game confusing for some people.
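A quick way to see whether a set of calibrated ranges intersect is to compare every pair. The sketch below is an illustration only; `findOverlaps` is a made-up helper, and the sample values are the constants from the Arduino code later in this post.

```javascript
// Hypothetical helper: returns every pair of color names whose ranges share a value.
function findOverlaps(ranges) {
  const overlaps = [];
  for (let i = 0; i < ranges.length; i++) {
    for (let j = i + 1; j < ranges.length; j++) {
      const a = ranges[i];
      const b = ranges[j];
      // Two inclusive ranges intersect when each one starts before the other ends.
      if (a.min <= b.max && b.min <= a.max) {
        overlaps.push([a.name, b.name]);
      }
    }
  }
  return overlaps;
}

const ranges = [
  { name: "RED", min: 980, max: 1023 },
  { name: "GREEN", min: 610, max: 940 },
  { name: "BLUE", min: 220, max: 460 },
  { name: "YELLOW", min: 120, max: 220 },
  { name: "TURQUOISE", min: 0, max: 70 },
];

console.log(findOverlaps(ranges)); // [ [ 'BLUE', 'YELLOW' ] ]
```

With these values, blue and yellow share the single reading 220. In the Arduino code, blue is checked before yellow, so a reading of exactly 220 is reported as blue.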

Aspects that I’m proud of

I really like the aesthetics of the game overall, and I think that the P5Js aspect of the project was done very well. There are definitely some features that I would have liked to implement, but the things that I chose to keep in were well done.

I’m also proud of the way I handled an issue that occurred. Since I’m using light sensors, the mechanism needed recalibration whenever the ambient brightness changed. To tackle this, I took two approaches.

Firstly, I took some plastic nuts from the large LED buttons and fixed them on top of the knobs to block out any light from the cracks around the knob. To make sure that there was absolutely no light coming through from around the peg, I wrapped it in tape until it fit snugly. These were the physical steps that I took.

Secondly, I created a calibration script within Arduino. When I needed to calibrate the settings, I would run this, and it would tell me what settings I needed for the current environment based on the minimum and maximum readings for each color across all the light sensors. The code for that is below:

void calibrateColorRanges()

{

Serial.println("Calibration started. Please follow the instructions to calibrate each color.");

const int numColors = 5; // Assuming 5 colors: Red, Green, Blue, Yellow, Turquoise

const int numLDRs = 4; // Assuming 4 LDRs

int calibrationValues[numColors][2]; // Array to store min and max values for each color across all LDRs

for (int color = 0; color < numColors; color++)

{

Serial.print("Calibrating ");

switch (color)

{

case 0:

Serial.print("RED");

break;

case 1:

Serial.print("GREEN");

break;

case 2:

Serial.print("BLUE");

break;

case 3:

Serial.print("YELLOW");

break;

case 4:

Serial.print("TURQUOISE");

break;

}

Serial.println("...");

// Initialize min and max values

calibrationValues[color][0] = 1023; // Initial min value

calibrationValues[color][1] = 0; // Initial max value

for (int ldr = 0; ldr < numLDRs; ldr++)

{

Serial.print("Move ");

switch (color)

{

case 0:

Serial.print("RED");

break;

case 1:

Serial.print("GREEN");

break;

case 2:

Serial.print("BLUE");

break;

case 3:

Serial.print("YELLOW");

break;

case 4:

Serial.print("TURQUOISE");

break;

}

Serial.print(" to LDR ");

Serial.print(ldr + 1);

Serial.println(" and press Enter.");

// Wait for user input (press Enter in the Serial Monitor)

while (!Serial.available())

{

delay(100);

}

// Discard any existing input

while (Serial.available())

{

Serial.read();

}

// Perform readings and find min and max values

for (int i = 0; i < 100; i++)

{

int ldrValue = analogRead(LDR_1 + ldr);

// Update min and max values

calibrationValues[color][0] = min(calibrationValues[color][0], ldrValue);

calibrationValues[color][1] = max(calibrationValues[color][1], ldrValue);

delay(10); // Delay between readings

}

}

// Display the min and max values for the current color across all LDRs

Serial.print("Min value: ");

Serial.print(calibrationValues[color][0]);

Serial.print(", Max value: ");

Serial.println(calibrationValues[color][1]);

}

Serial.println("Calibration complete. Use the following values in getColorFromLdrVal function:");

for (int color = 0; color < numColors; color++)

{

Serial.print("Color ");

switch (color)

{

case 0:

Serial.print("RED");

break;

case 1:

Serial.print("GREEN");

break;

case 2:

Serial.print("BLUE");

break;

case 3:

Serial.print("YELLOW");

break;

case 4:

Serial.print("TURQUOISE");

break;

}

Serial.print(": Min - ");

Serial.print(calibrationValues[color][0]);

Serial.print(", Max - ");

Serial.println(calibrationValues[color][1]);

}

}

Although some other approach might have been more elegant, I think that I was able to manage even with the drawbacks of the design that I selected.

Future Ideas

- I think it would be fun to add a leaderboard. Since I have score metrics already, it would be more fun if people competed against people who played the game previously.

- It would be nice to have the option for players to play as codemakers as well, since the original game is a two-player game. That would make my project true to the original.

- It would be better if I had the calibration embedded into the P5Js sketch, so that there is no need to reflash the Arduino. Currently, the way that I do it is to reflash the Arduino with the calibration command, and then flash it again after calibrating it, which is a nuisance.
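As a rough sketch of what the calibration idea above could look like on the P5Js side, the helper below turns a batch of raw readings for one color into a min/max range. It assumes the Arduino would send raw LDR readings (0 to 1023) during calibration, which the current protocol does not do; `rangeFromSamples` and its `margin` parameter are hypothetical.

```javascript
// Hypothetical helper: derive a [min, max] range for one color from raw LDR samples.
// `margin` widens the range slightly to tolerate noise; the result is clamped to 0..1023.
function rangeFromSamples(samples, margin = 0) {
  if (samples.length === 0) {
    throw new Error("at least one sample is required");
  }
  let min = 1023;
  let max = 0;
  for (const s of samples) {
    min = Math.min(min, s);
    max = Math.max(max, s);
  }
  return {
    min: Math.max(0, min - margin),
    max: Math.min(1023, max + margin),
  };
}

console.log(rangeFromSamples([130, 150, 142, 210], 5)); // { min: 125, max: 215 }
```

This mirrors the min/max tracking in the Arduino calibration script, but it would let the ranges be updated without reflashing.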

Arduino Code

#include <Arduino.h>

// colors as ints

const int RED = 0;

const int GREEN = 1;

const int BLUE = 2;

const int YELLOW = 3;

const int TURQUOISE = 4;

// 5 LEDS with variable brightness on Arduino UNO

// LEDs are connected to pins 5, 6, 9, 10, 11

const int LED_PINS[] = {5, 6, 9, 10, 11};

const String colors[] = {"R", "G", "B", "Y", "T", "N"}; // red, green, blue, yellow, turquoise, none

// different brightness levels for LEDS

const int brightnessLevels[] = {LOW, HIGH, HIGH, HIGH, HIGH};

// const int brightnessLevels[] = {10, 0, 4, 153, 255};

// 4 LDRS connected to pins A1, A2, A3, A4

const int LDR_1 = A1; // 15

const int LDR_2 = A2; // 16

const int LDR_3 = A3; // 17

const int LDR_4 = A4; // 18

// 4 variables to store the values from the LDRs

int ldrValue1 = 0;

int ldrValue2 = 0;

int ldrValue3 = 0;

int ldrValue4 = 0;

// button LED

const int buttonLED = 3;

const int buttonPin = 2;

void setup()

{

// initialize serial communication at 9600 bits per second:

Serial.begin(9600);

// initialize the LED pins as an output:

for (int i = 0; i < 5; i++)

{

pinMode(LED_PINS[i], OUTPUT);

}

// initialize the LDR pins as an input:

pinMode(LDR_1, INPUT);

pinMode(LDR_2, INPUT);

pinMode(LDR_3, INPUT);

pinMode(LDR_4, INPUT);

pinMode(buttonLED, OUTPUT);

pinMode(buttonPin, INPUT_PULLUP);

}

const int minRed = 980;

const int maxRed = 1023;

const int minGreen = 610;

const int maxGreen = 940;

const int minBlue = 220;

const int maxBlue = 460;

const int minYellow = 120;

const int maxYellow = 220;

const int minTurquoise = 0;

const int maxTurquoise = 70;

int ambientLight[] = {0, 0, 0, 0};

int getColorFromLdrVal(int ldrVal, int ambientLight)

{

if (ldrVal >= minRed && ldrVal <= maxRed) // range of

{

return RED;

}

if (ldrVal >= minGreen && ldrVal <= maxGreen) // range of 330

{

return GREEN;

}

if (ldrVal >= minBlue && ldrVal < maxBlue) // range of 240

{

return BLUE;

}

if (ldrVal >= minYellow && ldrVal <= maxYellow) // range of 100

{

return YELLOW;

}

if (ldrVal >= minTurquoise && ldrVal <= maxTurquoise) // range of 70

{

return TURQUOISE;

}

else

{

return 5;

}

}

void calibrateColorRanges()

{

Serial.println("Calibration started. Please follow the instructions to calibrate each color.");

const int numColors = 5; // Assuming 5 colors: Red, Green, Blue, Yellow, Turquoise

const int numLDRs = 4; // Assuming 4 LDRs

int calibrationValues[numColors][2]; // Array to store min and max values for each color across all LDRs

for (int color = 0; color < numColors; color++)

{

Serial.print("Calibrating ");

switch (color)

{

case 0:

Serial.print("RED");

break;

case 1:

Serial.print("GREEN");

break;

case 2:

Serial.print("BLUE");

break;

case 3:

Serial.print("YELLOW");

break;

case 4:

Serial.print("TURQUOISE");

break;

}

Serial.println("...");

// Initialize min and max values

calibrationValues[color][0] = 1023; // Initial min value

calibrationValues[color][1] = 0; // Initial max value

for (int ldr = 0; ldr < numLDRs; ldr++)

{

Serial.print("Move ");

switch (color)

{

case 0:

Serial.print("RED");

break;

case 1:

Serial.print("GREEN");

break;

case 2:

Serial.print("BLUE");

break;

case 3:

Serial.print("YELLOW");

break;

case 4:

Serial.print("TURQUOISE");

break;

}

Serial.print(" to LDR ");

Serial.print(ldr + 1);

Serial.println(" and press Enter.");

// Wait for user input (press Enter in the Serial Monitor)

while (!Serial.available())

{

delay(100);

}

// Discard any existing input

while (Serial.available())

{

Serial.read();

}

// Perform readings and find min and max values

for (int i = 0; i < 100; i++)

{

int ldrValue = analogRead(LDR_1 + ldr);

// Update min and max values

calibrationValues[color][0] = min(calibrationValues[color][0], ldrValue);

calibrationValues[color][1] = max(calibrationValues[color][1], ldrValue);

delay(10); // Delay between readings

}

}

// Display the min and max values for the current color across all LDRs

Serial.print("Min value: ");

Serial.print(calibrationValues[color][0]);

Serial.print(", Max value: ");

Serial.println(calibrationValues[color][1]);

}

Serial.println("Calibration complete. Use the following values in getColorFromLdrVal function:");

for (int color = 0; color < numColors; color++)

{

Serial.print("Color ");

switch (color)

{

case 0:

Serial.print("RED");

break;

case 1:

Serial.print("GREEN");

break;

case 2:

Serial.print("BLUE");

break;

case 3:

Serial.print("YELLOW");

break;

case 4:

Serial.print("TURQUOISE");

break;

}

Serial.print(": Min - ");

Serial.print(calibrationValues[color][0]);

Serial.print(", Max - ");

Serial.println(calibrationValues[color][1]);

}

}

unsigned long timeSinceLastSerial = 0;

int valFromP5 = 0;

float sum1 = 0, sum2 = 0, sum3 = 0, sum4 = 0; // running sums of LDR readings

unsigned int ldrVal1 = 0, ldrVal2 = 0, ldrVal3 = 0, ldrVal4 = 0; // averaged LDR readings

unsigned int iterator = 0;

unsigned long buttonLEDTimer = 0;

unsigned long buttonSendTimer = 0;

int buttonBrightness = 0;

int buttonDirection = 1;

int buttonState = LOW; // if button is not pressed

void loop()

{

// fade in and out button LED

if (millis() - buttonLEDTimer > 5)

{

buttonLEDTimer = millis();

if (buttonBrightness >= 255 || buttonBrightness <= 0)

{

buttonDirection = buttonDirection * -1;

}

buttonBrightness = (buttonBrightness + 1 * buttonDirection);

if (buttonBrightness >= 255)

{

buttonBrightness = 255;

}

if (buttonBrightness <= 0)

{

buttonBrightness = 0;

}

analogWrite(buttonLED, buttonBrightness);

}

// turn on the LEDs to the corresponding brightness

digitalWrite(LED_PINS[RED], brightnessLevels[RED]);

digitalWrite(LED_PINS[GREEN], brightnessLevels[GREEN]);

digitalWrite(LED_PINS[BLUE], brightnessLevels[BLUE]);

digitalWrite(LED_PINS[YELLOW], brightnessLevels[YELLOW]);

digitalWrite(LED_PINS[TURQUOISE], brightnessLevels[TURQUOISE]);

// uncomment to calibrate color ranges

// calibrateColorRanges();

// send to p5.js

if (millis() - timeSinceLastSerial < 10) // if less than 10 ms have passed since the last reading

{

return; // do nothing

}

timeSinceLastSerial = millis(); // update the time since last serial message

if (iterator == 10)

{

ldrVal1 = int(sum1 / 10);

ldrVal2 = int(sum2 / 10);

ldrVal3 = int(sum3 / 10);

ldrVal4 = int(sum4 / 10);

// print the values if they are not nan

if (!isnan(ldrVal1) && !isnan(ldrVal2) && !isnan(ldrVal3) && !isnan(ldrVal4))

{

// get the color from the LDR value

int color1 = getColorFromLdrVal(ldrVal1, ambientLight[0]);

int color2 = getColorFromLdrVal(ldrVal2, ambientLight[1]);

int color3 = getColorFromLdrVal(ldrVal3, ambientLight[2]);

int color4 = getColorFromLdrVal(ldrVal4, ambientLight[3]);

int buttonState = digitalRead(buttonPin);

Serial.print(color1);

Serial.print(",");

Serial.print(color2);

Serial.print(",");

Serial.print(color3);

Serial.print(",");

Serial.print(color4);

Serial.print(",");

Serial.println(buttonState);

}

iterator = 0;

sum1 = 0;

sum2 = 0;

sum3 = 0;

sum4 = 0;

}

// read and add values from the LDRs

sum1 = sum1 + analogRead(LDR_1);

sum2 = sum2 + analogRead(LDR_2);

sum3 = sum3 + analogRead(LDR_3);

sum4 = sum4 + analogRead(LDR_4);

iterator++;

}

P5Js Code

let board;

let WIDTH = 2800;

let HEIGHT = 1500;

let colorsArray;

const RED = 0;

const GREEN = 1;

const BLUE = 2;

const YELLOW = 3;

const TURQUOISE = 4;

const BLACK = 5;

let canvas;

let port;

let highScores = [];

function generateCode() {

let code = [];

let array = [0, 1, 2, 3 , 4, 5];

//select random subset of 4 colorsArray

let colorsArray = shuffleArray(array);

for (let i = 0; i < 4; i++) { // select only 4 colorsArray

code.push(colorsArray[i]);

}

return code;

}

// Helper function to shuffle an array

function shuffleArray(array) {

//copy the array

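const array_copy = array.slice();

// Starting at length - 2 leaves the last element (5, BLACK) in place,

// so only the five peg colors are shuffled and BLACK never enters the code.

for (let i = array_copy.length - 2; i > 0; i--) {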

const j = Math.floor(Math.random() * (i + 1));

[array_copy[i], array_copy[j]] = [array_copy[j], array_copy[i]];

}

return array_copy;

}

function calculateScore(numGuesses) {

return 1500 - 100 * numGuesses;

}

let r, g, b, y, t, bl;

let currentGuess;

let numberOfGuesses;

let lastButtonPressed;

let isButtonPressed;

let buttonClock;

let board_score;

let state;

function setup() {

canvas = createCanvas(WIDTH, HEIGHT);

board = new Board();

r = color(200, 50, 80);

g = color(20, 255, 20);

b = color(50, 20, 255);

y = color(255, 255, 20);

t = color(20, 255, 255);

bl = color(0, 0, 0);

// colorsArray are red, green, blue, yellow, turquoise, black

colorsArray = [r, g, b, y, t, bl];

//generate the code

code = generateCode();

port = createSerial();

//initialize important variables

numberOfGuesses = 0;

lastButtonPressed = 0;

isButtonPressed = 0;

buttonClock = 0;

board_score = [0, 0];

state = "AUTH_STATE";

}

function resetGame()

{

board.reset();

numberOfGuesses = 0;

score = 0;

state = "AUTH_STATE";

code = generateCode();

}

function getNameInput()

{

let name = prompt("Please enter your name ");

if (name == null || name == "") {

name = "Anonymous";

}

return name;

}

let delayClock = 0;

function draw() {

if (state == "GAME_STATE") {

background(220);

board_score = board.score;

print("score: " + board_score);

if (board_score[0] == 4) {

print("GAME WON")

state = "GAME_WON_STATE";

delayClock = millis();

}

if (board.currentRow == 15) {

print("GAME LOST")

state = "GAME_LOST_STATE";

delayClock = millis();

}

// if the port is open, read the data

if (port.available() > 0) {

let data = port.readUntil("\n");

split_data = int(data.split(","));

// first 4 are the current guess

// last is the button press

currentGuess = split_data.slice(0, 4);

isButtonPressed = split_data[4];

board.update(currentGuess);

}

//if the button is pressed, finalize the guess

if (isButtonPressed == 1 && lastButtonPressed == 0) {

if (board.finalizeChoices(code))

{

numberOfGuesses++;

};

lastButtonPressed = 1;

}

else if (isButtonPressed == 0 && lastButtonPressed == 1) {

lastButtonPressed = 0;

}

board.show();

//show the score at the top right

fill(0);

textSize(35);

push();

textStyle(BOLD);

text("SCORE: " + calculateScore(numberOfGuesses), width - 300, 100);

pop();

// print instructions to the side

fill(0);

textSize(40);

push();

textStyle(BOLD);

text("Instructions", 50, 100);

pop();

textSize(30);

push();

fill(y);

circle(50, 162, 20);

pop();

text(": right color, wrong position", 80, 170);

// red circle

push();

fill(r);

circle(50, 222, 20);

pop();

text(": right color, right position", 80, 230);

text("Guess the code in as few guesses as possible!", 40, 300);

}

else if (state == "GAME_WON_STATE") {

background(220, 200);

fill(0);

textSize(60);

textStyle(BOLD);

text("YOU WON!", width / 2 - 200, height / 2);

//SHOW SCORE

fill(0);

textSize(35);

push();

textStyle(BOLD);

text("SCORE: " + calculateScore(numberOfGuesses), width / 2 - 200, height / 2 + 100);

pop();

if (millis() - delayClock < 2000) {

return;

}

//read input from the serial port

if (port.available() > 0) {

let data = port.readUntil("\n");

data = int(data.split(","));

let buttonval = data[4];

if (buttonval == 1) {

print("resetting");

board.reset();

numberOfGuesses = 0;

state = "GAME_STATE";

board_score = [0, 0];

code = generateCode(); // generate a new code so the next round does not reuse the old one

}

}

}

else if (state == "GAME_LOST_STATE") {

background(220, 200);

fill(0);

textSize(60);

textStyle(BOLD);

text("YOU LOST!", width / 2 - 200, height / 2);

text("The code was: ", width / 2 - 600, height / 2 + 200);

//print the right code

for (let i = 0; i < 4; i++) {

push();

fill(colorsArray[code[i]]);

circle(width/2 - 100 + i * 100, height/2 + 185, 50);

pop();

}

//push the button to reset

push();

textSize(30);

text("Press the button to reset", width / 2 - 200, height / 2 + 400);

pop();

if (millis() - delayClock < 2000) {

return;

}

//read input from the serial port

if (port.available() > 0) {

let data = port.readUntil("\n");

data = int(data.split(","));

let buttonval = data[4];

if (buttonval == 1) {

print("resetting");

resetGame();

}

}

}

else if (state == "AUTH_STATE") {

//ask to connect to the device

background(220, 200);

fill(0);

textSize(60);

textStyle(BOLD);

text("PRESS SPACE TO START", width / 2 - 300, height / 2);

if(port.opened()) {

state = "GAME_STATE";

}

}

}

/**

* Handles key press events and performs corresponding actions.

*/

function keyPressed() {

/**

* If the key pressed is "c" and the state is "GAME_STATE",

* finalize the choices on the board using the provided code.

*

* @returns {void}

*/

if (key == "c") {

if (state != "GAME_STATE") {

return;

}

board.finalizeChoices(code);

}

/**

* If the key pressed is "n", update the current color of the current row on the board.

* The color is updated by incrementing the current color index by 1 and wrapping around to 0 if it exceeds 5.

*

* @returns {void}

*/

if (key == "n") {

board.rows[board.currentRow].currentColor = (board.rows[board.currentRow].currentColor + 1) % 6;

}

/**

* If the key pressed is " " (space) and the state is "AUTH_STATE",

* open a port at 9600 baud using the port object.

*

* @returns {void}

*/

if (key == " ") {

if (state != "AUTH_STATE") {

return;

}

port.open(9600);

}

/**

* If the key pressed is "r", reset the game.

*

* @returns {void}

*/

if (key == "r") {

resetGame();

}

}