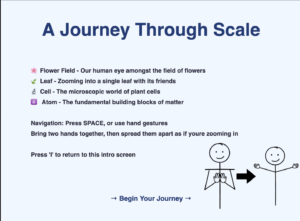

I had already made a game controlled with keyboard input, so this time I wanted to create a game that used a different kind of input.

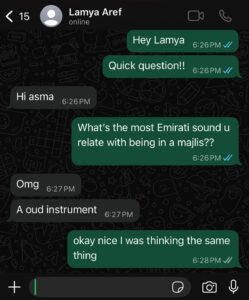

As I was scrolling through YouTube Shorts looking for inspiration for my project, I came across a simple game that was played with the pitch of the user’s voice. In the video, the character moved up and forward depending on how high the pitch was. With this in mind, I tried making simple programs that took sound as user input.

First, I built a program that detected a specific pitch, in this case “C”. If the user sings that pitch, the block moves upwards, and if the user holds it for a certain amount of time, the block stays up permanently. I made this because my initial plan was an adventure game where the character travels a 2D map and triggers interactions with sound, such as lifting a boulder by singing a certain note. This little exercise helped me get familiar with sound input and how I could use it later.
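The "sing a C and hold it" check can be sketched in a few lines. This is a minimal illustration, not the code from the exercise: it assumes some pitch detector already supplies a frequency in Hz each frame, and the names `noteNameFromFrequency` and `createHoldDetector`, along with the hold duration, are hypothetical.

```javascript
// Hypothetical helpers; names and the hold duration are illustrative assumptions.
const NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"];

// Convert a frequency in Hz to the nearest note name (equal temperament, A4 = 440 Hz).
function noteNameFromFrequency(freqHz) {
  const midi = Math.round(69 + 12 * Math.log2(freqHz / 440));
  return NOTE_NAMES[((midi % 12) + 12) % 12];
}

// Returns an update function that reports whether the target note is being
// sung right now, and whether it has been held long enough to "lock" the block.
function createHoldDetector(targetNote, holdMs) {
  let heldSince = null; // timestamp when the current unbroken hold started
  return function update(freqHz, nowMs) {
    const onTarget = freqHz > 0 && noteNameFromFrequency(freqHz) === targetNote;
    if (!onTarget) {
      heldSince = null; // any other note (or silence) resets the hold
      return { singing: false, locked: false };
    }
    if (heldSince === null) heldSince = nowMs;
    return { singing: true, locked: nowMs - heldSince >= holdMs };
  };
}
```

Because the note name ignores the octave, any C counts, which is forgiving for different voice ranges.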

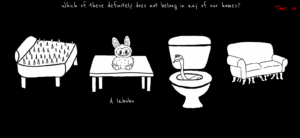

For my midterm, I decided to create a simple game with paddles that move left and right. The goal of the game is to catch falling objects with these moving paddles. The hardest part was moving the paddles according to the user’s pitch. At first, the paddles were so sensitive that they jumped all over the place with even a slight sound. Taming that and making the movement smooth was the key to my game.

While I was testing the movement, I realized that I was making sounds that resembled a monkey. I was making OOO sounds for the low pitches and EEE sounds for the high pitches. So I came up with the idea to make the game monkey-themed, with bananas as the falling objects and monkey hands as the paddles. It made me laugh to think that the users would have to imitate monkeys in order to play the game. I also added a little feature at the end that replays the sounds the players made during the game, so that they can feel a bit humiliated afterwards. I thought this was a great way to bring some humor into my game. Since I had to test it many times while making the game, I got to experience that embarrassment first.

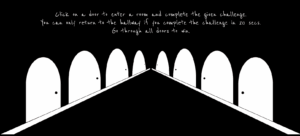

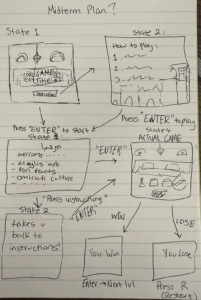

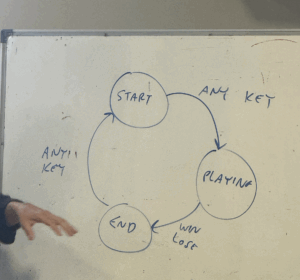

My game is divided into four major stages: start screen, instructions, gameplay, and gameover screen. As explained in class, I used separate stages so that resetting the game was easier.
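A stage-based structure like this can be sketched as a small state machine. The version below is an assumption about how such a setup might look, not the game's actual code: `STAGES`, `freshRound`, `createGame`, and `goTo` are hypothetical names. The three lives match the three misses that end a round.

```javascript
// Hypothetical stage names; the real sketch may label them differently.
const STAGES = {
  START: "start",
  INSTRUCTIONS: "instructions",
  PLAY: "play",
  GAME_OVER: "gameover",
};

// Everything that must be reset between rounds lives in one object.
function freshRound() {
  return { score: 0, lives: 3, bananas: [] };
}

function createGame() {
  return { stage: STAGES.START, round: freshRound() };
}

// Switch stages; entering gameplay always starts from a clean round,
// so "play again" needs no extra reset logic.
function goTo(game, stage) {
  if (stage === STAGES.PLAY) game.round = freshRound();
  game.stage = stage;
  return game;
}
```

The draw loop can then branch on `game.stage` and call one drawing function per stage, which keeps the reset in a single place.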

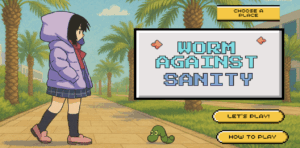

The start screen is the title screen. It has three buttons: instructions, play, and fullscreen. Clicking a button makes a clicking sound. That is the only sound effect I have, since my gameplay is heavily affected by sound. Any background music or sound effects would affect how the game is played, so I kept them to a minimum. Making the game fullscreen also affects the gameplay, so in fullscreen mode I keep the game area the same and fill everything around it with black.

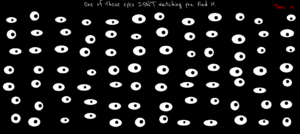

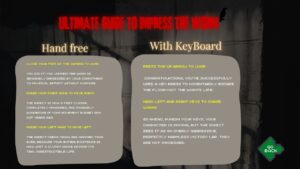

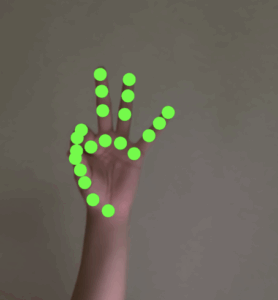

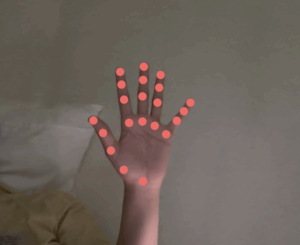

Before playing, the users can open the instructions page to learn the controls and calibrate their pitch range. I deliberately tell them to use OOOs and EEEs for the pitches so that they sound like monkeys. The pitch ranges are adjustable with the up and down arrows and are stored in local storage, so the settings remain even after the game is reset. I also show a live paddle so that the users can see how their voice will move the hands.
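Persisting the calibrated range could look roughly like the sketch below. It is an assumption-heavy illustration: the key name `pitchRange`, the default range, and the helper names are all hypothetical. The functions take a `storage` argument with the same `getItem`/`setItem` interface as the browser's `localStorage`, so in the browser `window.localStorage` can be passed in directly.

```javascript
// Hypothetical key and defaults; the real game may use different values.
const STORAGE_KEY = "pitchRange";
const DEFAULT_RANGE = { minHz: 120, maxHz: 500 };

// Read the saved range, falling back to defaults if nothing valid is stored.
function loadRange(storage) {
  try {
    const raw = storage.getItem(STORAGE_KEY);
    if (raw === null) return { ...DEFAULT_RANGE };
    const parsed = JSON.parse(raw);
    if (
      Number.isFinite(parsed.minHz) &&
      Number.isFinite(parsed.maxHz) &&
      parsed.minHz < parsed.maxHz
    ) {
      return { minHz: parsed.minHz, maxHz: parsed.maxHz };
    }
  } catch (err) {
    // Corrupt or unreadable data: ignore it and use the defaults.
  }
  return { ...DEFAULT_RANGE };
}

function saveRange(storage, range) {
  storage.setItem(STORAGE_KEY, JSON.stringify(range));
}

// Apply one arrow-key adjustment; reject changes that would make the
// range too narrow or push the low end below 1 Hz.
function nudgeRange(range, key, deltaHz, minGapHz = 20) {
  const next = { ...range, [key]: range[key] + deltaHz };
  if (next.minHz < 1 || next.maxHz - next.minHz < minGapHz) return range;
  return next;
}
```

Validating on load matters because stored values outlive code changes; a bad entry should never leave the paddle with an empty range.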

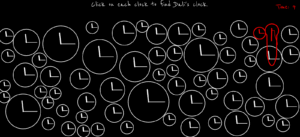

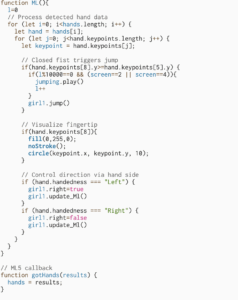

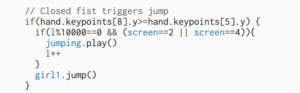

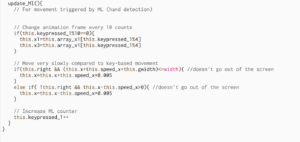

Once they hit play, the core loop is simple: bananas spawn at the top and fall, and the goal is to catch them with the monkey “hands” (the paddle) at the bottom. I map the detected pitch to an x-position using the calibrated min/max from the instructions page: I clamp the raw frequency into that window, map it to the screen’s left/right bounds (so the hands never leave the canvas), then smooth it. To keep the control stable I added a small noise gate (ignore very quiet input), a frequency deadzone (ignore tiny wiggles), linear smoothing with lerp, and a max step cap so sudden jumps don’t overshoot. The result feels responsive without the jitter I had early on. The player scores when a banana touches the hands and loses a life on a miss; three misses end the round.
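The pipeline described above (noise gate, deadzone, clamp, map, lerp, max step) can be sketched as one small controller. This is a minimal sketch under stated assumptions, not the game's code: `createPaddleController` and every option name are hypothetical, and the order of the steps is one reasonable reading of the description.

```javascript
function clamp(v, lo, hi) {
  return Math.min(hi, Math.max(lo, v));
}

function lerp(a, b, t) {
  return a + (b - a) * t;
}

// opts (all assumed names): minHz/maxHz = calibrated range, leftX/rightX =
// screen bounds for the hands, noiseGate = minimum volume, deadzoneHz =
// smallest pitch change that counts, smoothing = lerp factor (0..1),
// maxStep = largest movement allowed per frame in pixels.
function createPaddleController(opts) {
  let x = (opts.leftX + opts.rightX) / 2; // start centered
  let lastFreq = null; // last frequency that passed the deadzone

  return function update(freqHz, volume) {
    // Noise gate: too quiet (or no pitch detected) means the hands stay put.
    if (volume < opts.noiseGate || !(freqHz > 0)) return x;

    // Deadzone: tiny wiggles reuse the previously accepted frequency.
    if (lastFreq !== null && Math.abs(freqHz - lastFreq) < opts.deadzoneHz) {
      freqHz = lastFreq;
    } else {
      lastFreq = freqHz;
    }

    // Clamp into the calibrated window, then map to the screen bounds.
    const f = clamp(freqHz, opts.minHz, opts.maxHz);
    const t = (f - opts.minHz) / (opts.maxHz - opts.minHz);
    const targetX = opts.leftX + t * (opts.rightX - opts.leftX);

    // Smooth toward the target, then cap how far one frame may move.
    const smoothed = lerp(x, targetX, opts.smoothing);
    x += clamp(smoothed - x, -opts.maxStep, opts.maxStep);
    return x;
  };
}
```

Calling `update(freq, volume)` once per frame returns the new x-position for the hands. Because the target is clamped before smoothing, the hands can approach the edges but never pass them.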

When the run ends, the gameover screen appears with the background art, a big line like “you got x bananas!”, and two buttons: “play again” and “did you sound like a monkey?”. During gameplay I record the same mic that powers pitch detection; on gameover I stop recording and let the player play or stop that clip. It’s a tiny feature, but it adds a fun (and slightly embarrassing) payoff that matches the monkey concept.

I’m especially proud of how I handled pitch jumps. Early on, tiny jitters made the hands twitchy, but big interval jumps still felt sluggish. I fixed this by combining a few tricks: a small deadzone to ignore micro-wiggles, smoothing with lerp for steady motion, and a speed boost that scales with the size of the pitch change. When the detected frequency jumps a lot in one frame (like an “ooo” to a sharp “eee”), I temporarily raise the max movement per frame, then let it settle back down. That way, small fluctuations don’t move the paddle, normal singing is smooth, and deliberate leaps produce a satisfying snap across the screen without overshooting. Getting this balance right made the controls feel musical.
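The temporary speed boost can be sketched as a step limiter whose cap rises with the size of a pitch jump and then decays back. The sketch below is an illustration under assumptions: `createStepLimiter`, its parameter names, and the linear boost and exponential decay are hypothetical choices, not confirmed details of the game.

```javascript
// baseStep: normal max movement per frame (px)
// jumpThresholdHz: frame-to-frame pitch change that counts as a deliberate leap
// boostPerHz: extra px of allowed movement per Hz beyond the threshold
// maxBoostedStep: hard ceiling on the boosted cap
// decay: fraction of the extra boost kept each frame (0..1)
function createStepLimiter({ baseStep, jumpThresholdHz, boostPerHz, maxBoostedStep, decay }) {
  let currentMax = baseStep;
  let prevFreq = null;

  // Call once per frame with the detected frequency; returns the max step
  // the paddle may take this frame.
  return function maxStepFor(freqHz) {
    const jump = prevFreq === null ? 0 : Math.abs(freqHz - prevFreq);
    prevFreq = freqHz;

    // Let any earlier boost settle back toward the base step.
    currentMax = baseStep + (currentMax - baseStep) * decay;

    // A large jump raises the cap in proportion to its size.
    if (jump > jumpThresholdHz) {
      const boosted = baseStep + (jump - jumpThresholdHz) * boostPerHz;
      currentMax = Math.min(maxBoostedStep, Math.max(currentMax, boosted));
    }
    return currentMax;
  };
}
```

The returned value would replace a fixed max step when clamping the paddle's per-frame movement, so normal singing keeps the base cap and only real leaps unlock the faster snap.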

For future improvements on the game itself, I want to smooth the frustration without losing the funny chaos. Bananas don’t stack, but several can arrive in different lanes at the same moment, and with smoothing plus a max step on the hands, some patterns are effectively unreachable. I kept a bit of that because the panic is part of the joke, but I’d like the spawner to reason about landing time instead of just spawn time, spacing arrivals so that at least one of the simultaneous drops is realistically catchable. I can still sprinkle in deliberate “double-arrival” moments as set pieces, but the baseline should feel fair.
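One way the landing-time idea might work is sketched below. This is purely a hypothetical design for the proposed improvement: `scheduleSpawn`, the config fields, and the rule itself (delay a banana until the hands could travel from the previous landing spot) are assumptions. Times are in seconds and speeds in pixels per second.

```javascript
// When a banana spawned at spawnTime reaches the hands.
function landingTime(spawnTime, fallDistance, fallSpeed) {
  return spawnTime + fallDistance / fallSpeed;
}

// prev: the previously scheduled banana ({ x, landingTime }) or null.
// candidate: { spawnTime, x, fallSpeed } proposed by the random spawner.
// cfg: { fallDistance, paddleSpeed } where paddleSpeed is the fastest the
// hands can realistically move across the screen.
function scheduleSpawn(prev, candidate, cfg) {
  const land = landingTime(candidate.spawnTime, cfg.fallDistance, candidate.fallSpeed);
  if (prev === null) return { ...candidate, landingTime: land };

  // Time the hands need to travel from the previous landing spot to this one.
  const travel = Math.abs(candidate.x - prev.x) / cfg.paddleSpeed;
  const earliest = prev.landingTime + travel;

  // Delay the spawn just enough that this banana lands when it is reachable.
  const delay = Math.max(0, earliest - land);
  return {
    ...candidate,
    spawnTime: candidate.spawnTime + delay,
    landingTime: land + delay,
  };
}
```

Deliberate "double-arrival" set pieces could bypass this function and be spawned directly, keeping the fair baseline separate from the scripted chaos.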