Initial Concept:

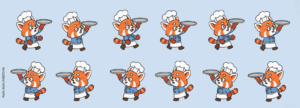

The core concept of my project is a game in which a panda chef stacks pancakes on its tray as they fall from random positions in the sky. The gameplay is intentionally simple: the player moves the panda left and right to catch each pancake before it hits the ground. I wanted the concept to be both whimsical and approachable, something that could appeal to all ages while still leaving room for engaging mechanics like timers, scoring, and fun visuals.

Design:

The panda is animated from a sprite sheet using a frame-based system that cycles through images depending on movement, while background elements like clouds and grass are drawn with simple loops and p5 shapes for efficiency. Pancakes are handled as objects using a dedicated class, which keeps the code modular and easy to expand. I also separated core functions, such as drawing the welcome screen, updating the game state, and spawning pancakes, so the program remains organized and readable. This approach keeps the design playful on-screen and manageable under the hood.
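
To make the structure above more concrete, here is a rough sketch of what a pancake class, a spawn function, and a frame picker for the panda could look like. It is not the code from my actual sketch: the names `Pancake`, `spawnPancake`, and `pandaFrame`, along with every size and speed, are assumptions chosen for illustration. The logic is written in plain JavaScript so it can run outside p5; the drawing calls would live in `draw()`.

```javascript
// Hypothetical Pancake class: names, sizes, and speeds are assumptions,
// not taken from the actual sketch.
class Pancake {
  constructor(x, y, speed) {
    this.x = x;         // horizontal centre of the pancake
    this.y = y;         // vertical centre of the pancake
    this.speed = speed; // pixels fallen per frame
    this.w = 60;        // assumed width in pixels
    this.h = 14;        // assumed height in pixels
  }

  // Move the pancake down by one frame's worth of falling.
  update() {
    this.y += this.speed;
  }

  // True when the pancake's bottom edge has reached (or passed) the tray's
  // top edge while its centre is horizontally within the tray.
  // `tray` is assumed to be an object with x (centre), y (top), and w (width).
  isCaughtBy(tray) {
    const withinTray = Math.abs(this.x - tray.x) <= tray.w / 2;
    const touchingTop = this.y + this.h / 2 >= tray.y;
    return withinTray && touchingTop;
  }

  // True once the whole pancake has fallen below the ground line.
  hasHitGround(groundY) {
    return this.y - this.h / 2 > groundY;
  }
}

// Create a pancake at a random x position just above the canvas.
// `random` defaults to Math.random so the function also runs outside p5.
function spawnPancake(canvasWidth, random = Math.random) {
  const x = random() * canvasWidth;
  return new Pancake(x, -20, 3);
}

// Pick which sprite-sheet frame to show: hold the first frame when idle,
// otherwise advance one frame every `holdFrames` draw calls.
function pandaFrame(frameCount, isMoving, totalFrames, holdFrames = 6) {
  if (!isMoving) return 0;
  return Math.floor(frameCount / holdFrames) % totalFrames;
}
```

In a sketch like this, `draw()` would call `update()` on every pancake each frame, then check `isCaughtBy()` and `hasHitGround()` to decide whether to stack the pancake or remove it.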

Potential Struggles:

The most frightening part of the project was integrating sound into the game. I know that audio is essential to making the experience immersive, but I was unsure about the technical steps required to implement it smoothly, especially since I have had issues in the past with overlapping sounds and with starting and stopping them accurately. Questions like when to trigger sounds, how to loop background music, and how to balance audio levels so they are not distracting added to the challenge.

How I Plan to Tackle:

To reduce this risk, I turned to existing resources and examples, especially tutorials and breakdowns on YouTube. Seeing other creators demonstrate how to load and trigger sounds in p5.js gave me both practical code snippets and creative inspiration. By learning step by step through videos, I hope to integrate audio gradually without it feeling overwhelming.
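
As a starting point for the overlapping and start/stop issues, here is a small sketch of helper functions I could wrap around my sounds. The helper names (`startMusic`, `stopMusic`, `playEffect`) and the volume values are my own assumptions, not something from a tutorial. They only rely on the `play()`, `loop()`, `stop()`, `isPlaying()`, and `setVolume()` methods that a p5.sound `SoundFile` provides.

```javascript
// Hypothetical helpers: names and default volumes are assumptions.
// Each `music` / `effect` argument is expected to behave like a p5.SoundFile.

// Start looping the background music at a low volume,
// unless it is already playing (avoids stacking a second loop).
function startMusic(music, volume = 0.3) {
  if (music.isPlaying()) return false;
  music.setVolume(volume);
  music.loop();
  return true;
}

// Stop the background music only if it is currently playing.
function stopMusic(music) {
  if (!music.isPlaying()) return false;
  music.stop();
  return true;
}

// Play a short effect (for example, a catch sound). If the effect is
// still playing, restart it instead of layering a second copy on top.
function playEffect(effect, volume = 0.8) {
  if (effect.isPlaying()) effect.stop();
  effect.setVolume(volume);
  effect.play();
}
```

In the actual sketch, the sound objects would be loaded with `loadSound()` inside `preload()`, and the music would be started from a user action such as a key press or click, since browsers generally block audio until the player interacts with the page.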

Embedded Sketch:

Reading Response:

Computer vision, I would say, is different from how humans see. We naturally understand depth, context, and meaning, but computers just see a grid of pixels with no built-in knowledge or ability to infer and make connections like humans do. They need strict rules, so even small changes in lighting or background can throw a computer off.

To help computers “see” better, we often set up the environment in their favor, meaning that we cater to their capabilities. Simple techniques like frame differencing (spotting motion), background subtraction (comparing to an empty scene), and brightness thresholding (using contrast) go a long way. Artists and designers also use tricks like infrared light, reflective markers, or special camera lenses to make tracking more reliable.
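
To see how simple these techniques really are, here is a minimal sketch of brightness thresholding and of the comparison behind frame differencing and background subtraction. It is my own illustration, not code from the reading: the function names and the averaging formula for brightness are assumptions, and the pixel arrays are assumed to be flat RGBA arrays like the `pixels` array p5.js fills after `loadPixels()`.

```javascript
// Approximate brightness (0-255) of the pixel that starts at index i
// in a flat RGBA array, using a plain average of red, green, and blue.
function brightnessAt(pixels, i) {
  return (pixels[i] + pixels[i + 1] + pixels[i + 2]) / 3;
}

// Brightness thresholding: return one boolean per pixel,
// true where the pixel is brighter than the threshold.
function thresholdMask(pixels, threshold) {
  const mask = [];
  for (let i = 0; i < pixels.length; i += 4) {
    mask.push(brightnessAt(pixels, i) > threshold);
  }
  return mask;
}

// Count the pixels whose brightness differs from a reference image by
// more than `tolerance`. With the previous frame as the reference this is
// frame differencing; with a stored image of the empty scene it is
// background subtraction.
function countChangedPixels(current, reference, tolerance) {
  let changed = 0;
  for (let i = 0; i < current.length; i += 4) {
    const diff = Math.abs(brightnessAt(current, i) - brightnessAt(reference, i));
    if (diff > tolerance) changed++;
  }
  return changed;
}
```

The tolerance matters for the reason mentioned earlier: small lighting changes shift every pixel a little, so without it the computer would report motion everywhere.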

What’s interesting in art is how this power of tracking plays out. Some projects use computer vision to make playful, interactive experiences where people’s bodies become the controller. Others use it to critique surveillance, showing how uncomfortable or invasive constant tracking can be. So, in interactive art, computer vision can both entertain and provoke; it depends on how it’s used.