Concept

Memory Dial is a desk object that listens to the room and turns what it senses into a living visual field.

Three inputs drive everything:

1. Light from a photoresistor

2. Proximity from an ultrasonic sensor

3. Sound from the laptop microphone

These values animate a layered visualization in p5.js. Over time, the system also selects short poems that reflect how the room behaved during the last interval.

The project is both physical and digital: the Arduino gathers the signals, and the browser visualizes the “aura” of the space.

Process

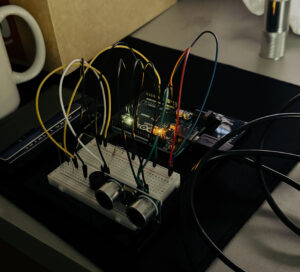

Hardware

The electronics sit inside or beside the desk object.

- Photoresistor (LDR): measures brightness in the room

- HC-SR04 Ultrasonic Sensor: detects how close a person stands

- Piezo sensor: present, but not used in the final logic

- Arduino Uno: reads the sensors and sends the values over USB serial, which the browser reads through the Web Serial API

The Arduino prints comma-separated values in this format:

lightValue,distanceValue,piezoValue
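On the browser side, each line has to be split back into numbers before it can be smoothed. The following is a minimal sketch. It assumes the Arduino ends every reading with a newline (for example via `Serial.println()`) and that incoming text has already been buffered into whole lines. `parseSensorLine` is a hypothetical helper, not the project's actual code.

```javascript
// Hypothetical helper: parse one serial line of the form
// "lightValue,distanceValue,piezoValue" into numbers.
// Returns null for partial or malformed lines.
function parseSensorLine(line) {
  const parts = line.trim().split(",");
  // A partial line from the stream may have too few fields or empty fields.
  if (parts.length !== 3 || parts.some((p) => p.trim() === "")) return null;
  const values = parts.map(Number);
  if (values.some((v) => !Number.isFinite(v))) return null; // non-numeric text
  const [light, distance, piezo] = values;
  return { light, distance, piezo };
}

// Example: a line as the Arduino might print it (with a trailing CR/LF).
console.log(parseSensorLine("512,37.5,0\r\n"));
// → { light: 512, distance: 37.5, piezo: 0 }
```

Returning `null` for a malformed line lets the sketch keep its previous values when the serial stream delivers a partial reading.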

Software Flow

The browser-based sketch (p5.js) opens the serial port and receives the sensor stream.

Then it does five main jobs:

1. Signal Smoothing

Raw sensor data is jittery.

To make the animation feel organic, I smooth every input with lerp(). On each update, the smoothed value moves a fixed fraction of the way toward the latest reading. This prevents flickering and gives the visuals a “breathing” quality.

    smoothLight = lerp(smoothLight, currentLight, 0.18);
    smoothDist = lerp(smoothDist, currentDist, 0.18);
    smoothMicLevel = lerp(smoothMicLevel, rawMicLevel, 0.2);
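Each line above is an exponential moving average. Every update closes a fixed fraction (0.18 or 0.2) of the gap between the smoothed value and the new reading. After n updates, the remaining gap from a sudden jump is (1 − 0.18)^n of the jump.

The sketch below feeds a jump from 0 to 100 through the same formula. `lerp` is re-implemented here to match p5's `lerp(start, stop, amt)` so the example runs outside p5. `simulateStepResponse` is a hypothetical helper.

```javascript
// Same formula as p5's lerp(start, stop, amt).
function lerp(start, stop, amt) {
  return start + (stop - start) * amt;
}

// Feed a sudden jump (0 -> target) through the smoother and record each update.
function simulateStepResponse(target, updates, amt) {
  let smoothed = 0;
  const history = [];
  for (let i = 0; i < updates; i++) {
    smoothed = lerp(smoothed, target, amt);
    history.push(smoothed);
  }
  return history;
}

const trace = simulateStepResponse(100, 10, 0.18);
console.log(trace.map((v) => v.toFixed(1)).join(" "));
// → 18.0 32.8 44.9 54.8 62.9 69.6 75.1 79.6 83.2 86.3
```

After 10 updates, about 86% of the jump has been absorbed. A smaller amount would smooth more strongly, but the visuals would lag further behind the room.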

2. Normalization

Each smoothed value is then mapped into a range of roughly 0 to 1 so it can drive the animation:

- lightNorm: 0 = very dim, 1 = very bright

- presenceNorm: 0 = far away, 1 = very close

- micNorm: 0 = quiet, 1 = loud

These normalized values drive different visual layers.
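The following is a minimal sketch of this normalization step. The calibration ranges (`LIGHT_MIN`, `LIGHT_MAX`, `DIST_NEAR`, `DIST_FAR`, `MIC_MAX`) are assumptions, not the project's values. `mapRange` and `clamp` re-implement p5's `map()` and `constrain()` so the example runs on its own. Note that presence is inverted: a smaller distance gives a larger `presenceNorm`.

```javascript
// Re-implementations of p5's map() and constrain() so this runs outside p5.
function mapRange(value, start1, stop1, start2, stop2) {
  return start2 + ((value - start1) / (stop1 - start1)) * (stop2 - start2);
}
function clamp(value, low, high) {
  return Math.min(Math.max(value, low), high);
}

// ASSUMED calibration ranges; real values depend on the room and wiring.
const LIGHT_MIN = 100, LIGHT_MAX = 900; // raw analogRead() counts (0–1023 on an Uno)
const DIST_NEAR = 10, DIST_FAR = 150;   // centimetres from the HC-SR04
const MIC_MAX = 0.3;                    // assumed mic level of a loud room

function normalize(smoothLight, smoothDist, smoothMicLevel) {
  const lightNorm = clamp(mapRange(smoothLight, LIGHT_MIN, LIGHT_MAX, 0, 1), 0, 1);
  // Inverted mapping: near (DIST_NEAR) -> 1, far (DIST_FAR) -> 0.
  const presenceNorm = clamp(mapRange(smoothDist, DIST_NEAR, DIST_FAR, 1, 0), 0, 1);
  const micNorm = clamp(smoothMicLevel / MIC_MAX, 0, 1);
  return { lightNorm, presenceNorm, micNorm };
}

console.log(normalize(500, 80, 0.15));
// → { lightNorm: 0.5, presenceNorm: 0.5, micNorm: 0.5 }
```

Clamping matters because the raw ranges are only estimates. Without it, a reading outside the calibration window would push a norm below 0 or above 1 and overdrive a visual layer.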

Visual System: What Each Sensor Controls

The sketch builds a three-layer aura that reacts in real time.

1. Light → Affective Basefield (Background)

- Low light = blue / cool hues

- Medium = muted purples

- Bright room = warm oranges

The background is made of hundreds of fading circles with a slow, noise-driven texture (“veins”) that gives it a soft, atmospheric motion.
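The light-to-hue mapping can be sketched as a two-segment color gradient driven by `lightNorm`. The RGB anchor values and the 0.5 split point are assumptions. In p5 itself, `lerpColor()` would do the blending between two `color` objects.

```javascript
// ASSUMED anchor colors (RGB); the post names the hues, not exact values.
const COOL = [40, 80, 200];   // low light: blue
const MUTED = [120, 90, 150]; // medium light: muted purple
const WARM = [240, 140, 60];  // bright room: warm orange

// Linear blend between two RGB triples, rounded to whole channel values.
function lerpRGB(a, b, t) {
  return a.map((channel, i) => Math.round(channel + (b[i] - channel) * t));
}

// Two-segment gradient: 0–0.5 blends blue→purple, 0.5–1 blends purple→orange.
function baseColor(lightNorm) {
  const t = Math.min(Math.max(lightNorm, 0), 1);
  return t < 0.5
    ? lerpRGB(COOL, MUTED, t / 0.5)
    : lerpRGB(MUTED, WARM, (t - 0.5) / 0.5);
}

console.log(baseColor(0), baseColor(0.5), baseColor(1));
// → [ 40, 80, 200 ] [ 120, 90, 150 ] [ 240, 140, 60 ]
```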

2. Distance → Flowfield and Moving Rings

When someone approaches:

- Flowfield particles move faster and glow more

- Radial rings become thicker and more defined

- Blobs orbiting the center grow and multiply

Distance affects the energy level of the whole system.
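One way to express “distance affects the energy level” is to fan a single `presenceNorm` value out to every parameter in this layer. The following is a minimal sketch; `energyParams` and all of its numeric ranges are hypothetical.

```javascript
// Hypothetical ranges; the post describes the direction of each effect, not the numbers.
function energyParams(presenceNorm) {
  const p = Math.min(Math.max(presenceNorm, 0), 1);
  return {
    particleSpeed: 0.5 + p * 2.5,     // flowfield particles move faster...
    particleAlpha: 40 + p * 160,      // ...and glow more (higher opacity)
    ringWeight: 1 + p * 5,            // radial rings become thicker
    blobCount: 3 + Math.round(p * 9), // orbiting blobs multiply...
    blobSize: 20 + p * 40,            // ...and grow
  };
}

console.log(energyParams(0));
console.log(energyParams(1));
```

Driving every parameter from the same normalized value keeps particles, rings, and blobs rising and falling together. As a result, someone approaching reads as one coherent change in energy.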

3. Sound → Breathing Halo (Distorted Circles)

Microphone amplitude controls:

- Speed of breathing

- Amount of wobble

- Thickness and brightness of the halo

Louder sound → faster breathing, more distortion

Quiet room → slow, smooth pulse

This halo behaves like the room’s heartbeat.
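The following is a minimal sketch of the breathing halo. It assumes that breathing is a phase that advances faster when `micNorm` is higher, and that the wobble is a distortion scaled by `micNorm`. `BreathingHalo`, its constants, and the use of sine harmonics (instead of, say, Perlin noise) are all assumptions for illustration.

```javascript
// Hypothetical tuning constants; the post describes the behaviour, not the numbers.
const BASE_SPEED = 0.02; // phase step per frame (radians) in a quiet room
const EXTRA_SPEED = 0.1; // extra phase step at full volume

class BreathingHalo {
  constructor(baseRadius) {
    this.baseRadius = baseRadius;
    this.phase = 0;
  }

  // Advance one frame: louder sound means faster breathing.
  update(micNorm) {
    this.phase += BASE_SPEED + EXTRA_SPEED * micNorm;
  }

  // Radius at a given angle: a slow pulse, plus a wobble that grows with volume.
  // Sine harmonics stand in for whatever distortion the real sketch uses.
  radiusAt(angle, micNorm) {
    const pulse = 1 + 0.08 * Math.sin(this.phase);
    const wobble =
      micNorm * 0.15 *
      (Math.sin(3 * angle + 2 * this.phase) + 0.5 * Math.sin(5 * angle - this.phase));
    return this.baseRadius * (pulse + wobble);
  }

  // Louder sound also makes the halo thicker and brighter.
  style(micNorm) {
    return { weight: 2 + 6 * micNorm, alpha: 80 + 175 * micNorm };
  }
}

// Usage: trace the outline for one frame (in p5 these points would go to vertex()).
const halo = new BreathingHalo(150);
halo.update(0.4);
const outline = [];
for (let i = 0; i < 64; i++) {
  const a = (i / 64) * 2 * Math.PI;
  const r = halo.radiusAt(a, 0.4);
  outline.push([r * Math.cos(a), r * Math.sin(a)]);
}
```

With `micNorm` at 0, the wobble term vanishes. A quiet room therefore produces a perfect circle that only pulses slowly.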

4. Timed Sparks

Every few seconds, spark particles are released from the center:

- They drift, swirl, and fade over ~10 seconds

- They create a subtle “memory trace” effect

This gives the visuals a slow-time texture.
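The spark layer can be sketched as a small particle system. A burst is released on a fixed timer, each spark curves slightly every frame, and its opacity falls linearly to zero over its lifetime. The post only says “every few seconds” and “~10 seconds”. The 60 fps frame rate, the 4-second release interval, the burst size, and the class names below are therefore assumptions.

```javascript
// Hypothetical timing, assuming 60 frames per second.
const FPS = 60;
const SPARK_LIFETIME = 10 * FPS; // fade over ~10 seconds
const RELEASE_EVERY = 4 * FPS;   // "every few seconds" (assumed 4 s)
const SPARKS_PER_BURST = 12;

class Spark {
  constructor(cx, cy, angle) {
    this.x = cx;
    this.y = cy;
    this.vx = Math.cos(angle) * 0.8;
    this.vy = Math.sin(angle) * 0.8;
    this.age = 0;
  }
  update() {
    // Swirl: rotate the velocity slightly each frame, then drift along it.
    const turn = 0.01;
    const vx = this.vx * Math.cos(turn) - this.vy * Math.sin(turn);
    this.vy = this.vx * Math.sin(turn) + this.vy * Math.cos(turn);
    this.vx = vx;
    this.x += this.vx;
    this.y += this.vy;
    this.age++;
  }
  get alpha() {
    return 255 * Math.max(0, 1 - this.age / SPARK_LIFETIME); // linear fade-out
  }
  get dead() {
    return this.age >= SPARK_LIFETIME;
  }
}

class SparkSystem {
  constructor(cx, cy) {
    this.cx = cx;
    this.cy = cy;
    this.sparks = [];
    this.frame = 0;
  }
  step() {
    if (this.frame % RELEASE_EVERY === 0) {
      // Release a ring of sparks from the center, evenly spaced in angle.
      for (let i = 0; i < SPARKS_PER_BURST; i++) {
        this.sparks.push(new Spark(this.cx, this.cy, (i / SPARKS_PER_BURST) * 2 * Math.PI));
      }
    }
    this.sparks.forEach((s) => s.update());
    this.sparks = this.sparks.filter((s) => !s.dead);
    this.frame++;
  }
}

const system = new SparkSystem(200, 200);
for (let f = 0; f < 600; f++) system.step();
console.log(system.sparks.length); // → 24 (the first burst has just expired)
```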

Poem System: Long-Duration Feedback

While the visual reacts instantly, a second system keeps track of behaviour over longer periods.

Every frame, the sketch adds the current lightNorm, presenceNorm, and micNorm to running totals.

After a set interval (currently short for testing), the program:

- Computes average light, distance, and sound

- Categorizes the ambience into a mood (quietDark, quietBright, busyClose, mixed)

- Selects a matching four-line poem from a text file (a mix of well-known poems and some of my favorites)

- Fades the poem in and out on top of the animation

- Resets the history buffer for the next cycle
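The accumulate, average, and reset cycle can be sketched as follows. The field names mirror the `histLightSum`/`histDistSum`/`histMicSum`/`histSampleCount` variables that appear later in this post. The `AmbienceHistory` and `PoemCycle` classes and the timer logic are hypothetical.

```javascript
// Running totals for one poem cycle (field names follow the post's snippet).
class AmbienceHistory {
  constructor() {
    this.reset();
  }
  add(lightNorm, presenceNorm, micValue) {
    this.histLightSum += lightNorm;
    this.histDistSum += presenceNorm;
    this.histMicSum += micValue; // the post's snippet adds breathStrength here
    this.histSampleCount++;
  }
  averages() {
    const n = Math.max(this.histSampleCount, 1); // guard against an empty cycle
    return {
      light: this.histLightSum / n,
      presence: this.histDistSum / n,
      mic: this.histMicSum / n,
    };
  }
  reset() {
    this.histLightSum = 0;
    this.histDistSum = 0;
    this.histMicSum = 0;
    this.histSampleCount = 0;
  }
}

// Hypothetical cycle timer: call tick() once per frame with the current time
// in ms (millis() in p5). It returns the averages when the interval ends
// (and starts a fresh cycle); otherwise it returns null.
class PoemCycle {
  constructor(intervalMs) {
    this.intervalMs = intervalMs;
    this.history = new AmbienceHistory();
    this.cycleStart = 0;
  }
  tick(nowMs, lightNorm, presenceNorm, micValue) {
    this.history.add(lightNorm, presenceNorm, micValue);
    if (nowMs - this.cycleStart < this.intervalMs) return null;
    const avg = this.history.averages();
    this.history.reset();
    this.cycleStart = nowMs;
    return avg;
  }
}

const cycle = new PoemCycle(1000); // short interval, as for testing
cycle.tick(0, 1, 0, 0);   // → null
cycle.tick(500, 0, 0, 0); // → null
console.log(cycle.tick(1000, 0.5, 1, 0));
// → { light: 0.5, presence: 0.3333333333333333, mic: 0 }
```

Averaging over the whole cycle is what makes the poem a summary. A single loud clap barely moves `mic` when it is one sample among thousands.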

This creates a rhythm:

instant animation → slow reflection → instant animation.
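The “slow reflection” phase amounts to an opacity envelope for the poem overlay: fade in, hold, fade out, then back to animation alone. The following is a minimal sketch with assumed durations. In p5, the returned value would be passed as the alpha argument of `fill()` before drawing the text.

```javascript
// Hypothetical durations in milliseconds.
const FADE_IN = 2000, HOLD = 8000, FADE_OUT = 2000;

// Opacity (0–255) of the poem overlay, `elapsed` ms after the poem appears.
function poemAlpha(elapsed) {
  if (elapsed < 0) return 0;
  if (elapsed < FADE_IN) return 255 * (elapsed / FADE_IN);
  if (elapsed < FADE_IN + HOLD) return 255;
  const outT = (elapsed - FADE_IN - HOLD) / FADE_OUT;
  return outT < 1 ? 255 * (1 - outT) : 0; // fully faded: animation only
}

[0, 1000, 5000, 11000, 13000].forEach((t) => console.log(t, poemAlpha(t)));
// → 0 0 / 1000 127.5 / 5000 255 / 11000 127.5 / 13000 0
```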

(Picture 2: Cinematic Photography)

Interaction Summary

When people walk by:

- Their presence wakes the field

- Their voice or music changes the breathing

- Lighting conditions tint the entire atmosphere

After a long stretch of time, the system “writes” a short poem capturing the mood of the environment.

The desk object becomes a quiet companion that listens, reacts, and eventually reflects.

What the Viewer Actually Experiences

- They see the aura pulse when sound changes

- They see the energy rise when someone approaches

- They feel the ambience shift when lights dim or brighten

- They get a poem that summarizes the vibe of the past hour

The project sits between functional sensing and artistic ambience.

It doesn’t demand interaction; it responds to whatever happens.

(Video clip 1: close-up shot of breathing)

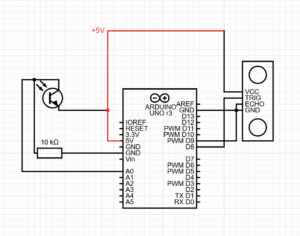

Schematic Diagram

What I’m Proud Of

One part I’m especially happy with is the poem selection system, because it isn’t just random text appearing every few minutes. It observes the room quietly, stores a rolling history of the ambience, and then chooses a poem that matches the emotional “weather” of the last cycle.

The logic only works because the sketch accumulates sensor values over time, instead of reacting to single spikes. For example, this block keeps a running history of the room’s brightness, proximity, and sound:

    histLightSum += lightNorm;
    histDistSum += presenceNorm;
    histMicSum += breathStrength;
    histSampleCount++;

Then, when it’s time to display a poem, the system computes the averages and interprets them as a mood.
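The following is a minimal sketch of that interpretation step. The mood names come from the post, but the thresholds in `classifyMood` are hypothetical. `pickPoem` assumes the text file has already been parsed into lists of poems keyed by mood.

```javascript
// Hypothetical thresholds; the post names the moods but not the cut-offs.
function classifyMood({ light, presence, mic }) {
  if (mic < 0.25 && light < 0.35) return "quietDark";
  if (mic < 0.25 && light >= 0.6) return "quietBright";
  if (mic >= 0.5 && presence >= 0.5) return "busyClose";
  return "mixed";
}

// Pick one poem for the mood, falling back to the "mixed" pool if the mood has none.
function pickPoem(poemsByMood, mood, random = Math.random) {
  const pool = poemsByMood[mood] ?? poemsByMood.mixed;
  return pool[Math.floor(random() * pool.length)];
}

// Placeholder strings stand in for the poems loaded from the text file.
const poems = {
  quietDark: ["quietDark poem 1", "quietDark poem 2"],
  mixed: ["mixed poem 1"],
};
const mood = classifyMood({ light: 0.2, presence: 0.1, mic: 0.1 });
console.log(mood, pickPoem(poems, mood));
```

Falling back to the `mixed` pool means a missing category never leaves the screen blank.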

Future Improvements

I want to expand the Memory Dial so it becomes a small personal companion. Ideas include:

- Logging poems over time into a journal page

- Adding touch sensing so the object reacts when someone taps the table

- Allowing the user to “bookmark” moments when the aura looks special

- Using AI to rewrite or remix poems based on ambience trends

- Creating a physical enclosure for the sensors so it feels like a finished artifact

Good project! There are two errors in the schematic. First, the black dot on the ECHO and GND wires indicates that these wires are connected, which looks incorrect: they should not be connected, and ECHO should only be connected to D9. Second, the photoresistor (LDR) symbol is not drawn correctly; you can see a correct symbol for an LDR / photoresistor here: https://github.com/mangtronix/IntroductionToInteractiveMedia/blob/master/lectureNotes2.md#analog-input

Your post needs to include a “How this was made” section as explained on the course main site.