Musical Instrument

Introduction:

An instrument is something that helps us humans either measure data or produce it. For this assignment, we were supposed to build a musical instrument in groups of two. Given my health challenges, I was unable to team up with anyone and decided to pursue it on my own. I started off by asking the most fundamental question: “What is a musical instrument?” Something that plays sound when triggered? Or something that plays sound on its own? What kind of sound? How many sounds? After pondering the philosophy of a musical device, I started questioning my surroundings. I don’t have a dorm mate, and I rarely get to socialize with others around campus. Sometimes my thoughts get so loud that I start second-guessing myself. This is where the eureka moment came in! A musical device that talks to me and interacts with me the way a roommate might. To start off with the basics, a greeting or a salutation would suffice. There and then, the idea of the ‘Welcominator’ was born!

Concept and design:

After deciding what to make, I got down to work. The basic logic of the ‘Welcominator’ involves three parts. The first is a trigger mechanism that recognizes the moment I settle into my room. The second is a speaker. The third is a pair of inputs: a digital switch to trigger the response and an analogue control to adjust it.

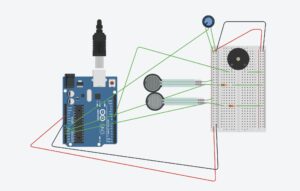

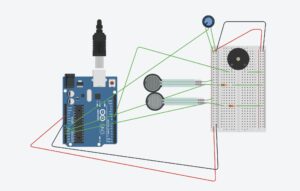

For the digital sensor/switch, I decided to use FSRs (Force Sensing Resistors). An FSR's resistance decreases as more pressure is applied, so strictly speaking it is an analogue sensor. However, the concept behind this instrument is that I put down items such as my watch as soon as I enter and settle into my dorm. All I need to know is whether something is resting on the sensor. I used two FSRs to cover a larger surface area and read each one with digitalRead(). Acting as switches, they only ever report 1 (pressed) or 0 (not pressed).
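Why can an analogue sensor act as a switch? digitalRead() only reports which side of the pin's logic thresholds the input voltage falls on. The sketch below works through that reasoning. It assumes the wiring described under Challenges, where a resistor runs from the sensor to ground. The 10 kΩ resistor value and the sample FSR resistances are illustrative assumptions, not values from the build. The thresholds are the ATmega328P's (Arduino Uno) input levels: at or below 0.3 × VCC is guaranteed LOW, and at or above 0.6 × VCC is guaranteed HIGH.

```cpp
#include <cassert>

// Assumed wiring (not specified in the post): 5 V -> FSR -> input pin -> R_PULLDOWN -> GND.
// The pin therefore sees the voltage across the pull-down resistor.
constexpr double VCC = 5.0;             // Arduino Uno supply voltage
constexpr double R_PULLDOWN = 10000.0;  // assumed 10 kOhm; the actual value is not given

// Voltage at the input pin for a given FSR resistance in ohms (voltage divider).
double pinVoltage(double rFsr) {
  assert(rFsr >= 0.0);
  return VCC * R_PULLDOWN / (R_PULLDOWN + rFsr);
}

enum class Level { Low, High, Undefined };

// ATmega328P input levels: up to 0.3 * VCC is guaranteed LOW, from 0.6 * VCC
// up is guaranteed HIGH; in between, digitalRead() may return either value.
Level logicLevel(double volts) {
  if (volts <= 0.3 * VCC) return Level::Low;
  if (volts >= 0.6 * VCC) return Level::High;
  return Level::Undefined;
}
```

Here are some illustrative numbers:

- **Unloaded pad:** this is on the order of megaohms, giving a pin voltage near 0 V, which reads LOW.
- **Firmly pressed pad:** at around 1 kΩ, the pin sits at about 4.5 V, which reads HIGH.
- **Light touch:** near 10 kΩ, the pin sits at 2.5 V, in the band where the reading is not guaranteed.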

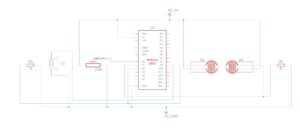

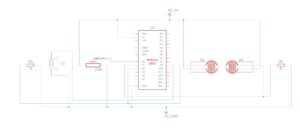

For the analogue input, I used a potentiometer. In this case it does not adjust the volume; instead, it chooses between the audio tracks. The wiper connects to pin A0 on the Arduino, where analogRead() measures its voltage, and the code uses that voltage to select which tune to play. The schematic and sketch show the connections and the logical mapping of the circuit.
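The pot-to-track decision can be written as a small function. This is a sketch that assumes the Uno's 10-bit ADC (readings from 0 to 1023) and a 5 V reference. `selectTrack` and `wiperVolts` are hypothetical names, not part of the original code. With two tracks, it reproduces the sketch's `potValue < 512` split, and the same formula extends to more tracks.

```cpp
#include <cassert>

constexpr int ADC_MAX = 1023;  // analogRead() range on an Uno: 0..1023
constexpr double VREF = 5.0;   // assumed default 5 V analogue reference

// Split the pot's range into numTracks equal bands and return the band index.
// With numTracks == 2: readings 0..511 -> track 0, 512..1023 -> track 1.
int selectTrack(int potValue, int numTracks) {
  assert(numTracks > 0);
  assert(potValue >= 0 && potValue <= ADC_MAX);
  return potValue * numTracks / (ADC_MAX + 1);
}

// Approximate wiper voltage corresponding to a raw reading.
double wiperVolts(int potValue) {
  return potValue * VREF / ADC_MAX;
}
```

For example, `selectTrack(analogRead(POT_PIN), 2)` would return 0 for the Godfather theme and 1 for the Nokia tune. The switch-over reading of 512 corresponds to roughly 2.5 V at the wiper.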

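The buzzer (speaker) is connected to pin 9, which supplies the tone signal. The two FSRs are connected to pins A1 and A2 respectively and read with digitalRead(). The tunes come from tone(), which drives pin 9 with a square wave at each note's frequency. This is what lets the buzzer play different tunes. It works best with a passive buzzer, because an active buzzer contains its own oscillator and is designed to sound a single fixed tone.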
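To make the tone() mechanism concrete, here is the arithmetic behind the square wave it generates. `halfPeriodMicros` and `cyclesInNote` are hypothetical helpers for illustration only. The real tone() uses a hardware timer, so this is not its actual code.

```cpp
#include <cassert>

// tone() outputs a 50% duty-cycle square wave, so the pin spends half of
// each period HIGH and half LOW. Returns that half period in microseconds.
unsigned long halfPeriodMicros(unsigned long frequencyHz) {
  assert(frequencyHz > 0);
  return 1000000UL / (2UL * frequencyHz);
}

// Whole cycles of the wave that fit in a note lasting durationMs.
unsigned long cyclesInNote(unsigned long frequencyHz, unsigned long durationMs) {
  return frequencyHz * durationMs / 1000UL;
}
```

Consider NOTE_A4 (440 Hz) held for an eighth note, which lasts 125 ms in this sketch. The pin toggles roughly every 1136 µs, producing 55 full cycles.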

Code:

```cpp
// crucial file: maps note names such as NOTE_A4 to their frequencies in Hz
#include "pitches.h"

#define BUZZER_PIN 9
#define POT_PIN A0
#define SENSOR1_PIN A1
#define SENSOR2_PIN A2

bool isPlaying = false;

// Forward declaration: the Arduino IDE generates this automatically,
// but other toolchains need it because playMelody() is defined after loop().
void playMelody(int melody[], int durations[], int length);

// godfather
int godfather_melody[] = {
  NOTE_E4, NOTE_A4, NOTE_C5, NOTE_B4, NOTE_A4, NOTE_C5, NOTE_A4, NOTE_B4, NOTE_A4, NOTE_F4, NOTE_G4,
  NOTE_E4, NOTE_E4, NOTE_A4, NOTE_C5,
  NOTE_B4, NOTE_A4, NOTE_C5, NOTE_A4, NOTE_C5, NOTE_A4, NOTE_E4, NOTE_DS4,
  NOTE_D4, NOTE_D4, NOTE_F4, NOTE_GS4,
  NOTE_B4, NOTE_D4, NOTE_F4, NOTE_GS4,
  NOTE_A4, NOTE_C4, NOTE_C4, NOTE_G4,
  NOTE_F4, NOTE_E4, NOTE_G4, NOTE_F4, NOTE_F4, NOTE_E4, NOTE_E4, NOTE_GS4,
  NOTE_A4
};

// note lengths as divisors of one second: 8 = eighth note, 2 = half note
int godfather_durations[] = {
  8, 8, 8, 8, 8, 8, 8, 8, 8, 8, 8,
  2, 8, 8, 8,
  8, 8, 8, 8, 8, 8, 8, 8,
  2, 8, 8, 8,
  2, 8, 8, 8,
  2, 8, 8, 8,
  8, 8, 8, 8, 8, 8, 8, 8,
  2
};

// nokia tune
int nokia_melody[] = {
  NOTE_E5, NOTE_D5, NOTE_FS4, NOTE_GS4,
  NOTE_CS5, NOTE_B4, NOTE_D4, NOTE_E4,
  NOTE_B4, NOTE_A4, NOTE_CS4, NOTE_E4,
  NOTE_A4
};

int nokia_durations[] = {
  8, 8, 8, 8,
  8, 8, 8, 8,
  8, 8, 8, 8,
  8
};

void setup() {
  pinMode(BUZZER_PIN, OUTPUT);
  pinMode(SENSOR1_PIN, INPUT);
  pinMode(SENSOR2_PIN, INPUT);
  pinMode(POT_PIN, INPUT);
}

void loop() {
  int potValue = analogRead(POT_PIN);
  bool useGodfather = potValue < 512; // left side (low) = Godfather, right side (high) = Nokia

  int sensor1Value = digitalRead(SENSOR1_PIN);
  int sensor2Value = digitalRead(SENSOR2_PIN);
  bool sensorTriggered1 = sensor1Value == HIGH;
  bool sensorTriggered2 = sensor2Value == HIGH;

  // play only if no music is playing and either sensor has been triggered
  if ((sensorTriggered1 || sensorTriggered2) && !isPlaying) {
    isPlaying = true;
    if (useGodfather) {
      playMelody(godfather_melody, godfather_durations, sizeof(godfather_melody) / sizeof(int));
    } else {
      playMelody(nokia_melody, nokia_durations, sizeof(nokia_melody) / sizeof(int));
    }
    isPlaying = false;
  }
}

// function for playing a melody
void playMelody(int melody[], int durations[], int length) {
  for (int i = 0; i < length; i++) {
    int noteDuration = 1000 / durations[i];
    tone(BUZZER_PIN, melody[i], noteDuration);
    delay(noteDuration * 1.2); // wait 1.2x the note length: the extra 20% is a short gap between notes
    noTone(BUZZER_PIN);
  }
}
```
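To see how long each greeting takes, here is playMelody()'s timing arithmetic reproduced on its own. Each divisor becomes 1000 / divisor milliseconds, so 8 gives a 125 ms eighth note and 2 gives a 500 ms half note. The loop then waits 1.2 times that, so 20% of every step is silence. The durations arrays are copied from the sketch. `stepMillis` and `melodyMillis` are hypothetical helpers that only do the arithmetic, without driving a buzzer.

```cpp
#include <cassert>
#include <cstddef>

// Duration divisors copied from the sketch (8 = eighth note, 2 = half note).
const int godfather_durations[] = {
  8, 8, 8, 8, 8, 8, 8, 8, 8, 8, 8,
  2, 8, 8, 8,
  8, 8, 8, 8, 8, 8, 8, 8,
  2, 8, 8, 8,
  2, 8, 8, 8,
  2, 8, 8, 8,
  8, 8, 8, 8, 8, 8, 8, 8,
  2
};
const int nokia_durations[] = {8, 8, 8, 8, 8, 8, 8, 8, 8, 8, 8, 8, 8};

// Time one loop iteration of playMelody() takes for a given divisor:
// the note sounds for 1000 / divisor ms, and delay() waits 1.2x that.
unsigned long stepMillis(int divisor) {
  assert(divisor > 0);
  int noteDuration = 1000 / divisor;
  return static_cast<unsigned long>(noteDuration * 1.2);
}

// Total playing time of a melody, i.e. how long loop() is blocked.
unsigned long melodyMillis(const int durations[], std::size_t length) {
  unsigned long total = 0;
  for (std::size_t i = 0; i < length; i++) {
    total += stepMillis(durations[i]);
  }
  return total;
}
```

Summing the steps gives 8850 ms for the Godfather theme and 1950 ms for the Nokia tune. This is how long loop() stays inside playMelody() after each trigger.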

```cpp
// pitches.h
#define REST 0
#define NOTE_B0 31
#define NOTE_C1 33
#define NOTE_CS1 35
#define NOTE_D1 37
#define NOTE_DS1 39
#define NOTE_E1 41
#define NOTE_F1 44
#define NOTE_FS1 46
#define NOTE_G1 49
#define NOTE_GS1 52
#define NOTE_A1 55
#define NOTE_AS1 58
#define NOTE_B1 62
#define NOTE_C2 65
#define NOTE_CS2 69
#define NOTE_D2 73
#define NOTE_DS2 78
#define NOTE_E2 82
#define NOTE_F2 87
#define NOTE_FS2 93
#define NOTE_G2 98
#define NOTE_GS2 104
#define NOTE_A2 110
#define NOTE_AS2 117
#define NOTE_B2 123
#define NOTE_C3 131
#define NOTE_CS3 139
#define NOTE_D3 147
#define NOTE_DS3 156
#define NOTE_E3 165
#define NOTE_F3 175
#define NOTE_FS3 185
#define NOTE_G3 196
#define NOTE_GS3 208
#define NOTE_A3 220
#define NOTE_AS3 233
#define NOTE_B3 247
#define NOTE_C4 262
#define NOTE_CS4 277
#define NOTE_D4 294
#define NOTE_DS4 311
#define NOTE_E4 330
#define NOTE_F4 349
#define NOTE_FS4 370
#define NOTE_G4 392
#define NOTE_GS4 415
#define NOTE_A4 440
#define NOTE_AS4 466
#define NOTE_B4 494
#define NOTE_C5 523
#define NOTE_CS5 554
#define NOTE_D5 587
#define NOTE_DS5 622
#define NOTE_E5 659
#define NOTE_F5 698
#define NOTE_FS5 740
#define NOTE_G5 784
#define NOTE_GS5 831
#define NOTE_A5 880
#define NOTE_AS5 932
#define NOTE_B5 988
#define NOTE_C6 1047
#define NOTE_CS6 1109
#define NOTE_D6 1175
#define NOTE_DS6 1245
#define NOTE_E6 1319
#define NOTE_F6 1397
#define NOTE_FS6 1480
#define NOTE_G6 1568
#define NOTE_GS6 1661
#define NOTE_A6 1760
#define NOTE_AS6 1865
#define NOTE_B6 1976
#define NOTE_C7 2093
#define NOTE_CS7 2217
#define NOTE_D7 2349
#define NOTE_DS7 2489
#define NOTE_E7 2637
#define NOTE_F7 2794
#define NOTE_FS7 2960
#define NOTE_G7 3136
#define NOTE_GS7 3322
#define NOTE_A7 3520
#define NOTE_AS7 3729
#define NOTE_B7 3951
```

The code above contains the logic, while pitches.h is a separate file that defines the note frequencies used by the program. The code for the pitches and for the ‘Godfather theme’ and ‘Nokia tune’ melodies was taken from the Arduino Project Hub website.
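The numbers in pitches.h follow a pattern. They appear to be twelve-tone equal temperament with A4 = 440 Hz, rounded to the nearest hertz: each semitone step multiplies the frequency by 2^(1/12). Here is a minimal sketch that uses MIDI note numbers (A4 = 69, C4 = 60) as an assumed indexing scheme. `noteFrequency` is a hypothetical helper and is not part of the original files.

```cpp
#include <cassert>
#include <cmath>

// Equal temperament: f = 440 * 2^((n - 69) / 12), where n is the MIDI note
// number (A4 = 69). Rounded to the nearest hertz, like the pitches.h table.
long noteFrequency(int midiNote) {
  assert(midiNote >= 0 && midiNote <= 127);
  return std::lround(440.0 * std::pow(2.0, (midiNote - 69) / 12.0));
}
```

Spot checks agree with the header:

- `noteFrequency(60)` gives 262 (NOTE_C4).
- `noteFrequency(23)` gives 31 (NOTE_B0).
- `noteFrequency(107)` gives 3951 (NOTE_B7).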

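Both FSRs report either HIGH or LOW. When either one reads HIGH, the potentiometer decides what plays. A low voltage (a reading below 512) plays the Godfather theme, and a high voltage plays the Nokia tune. Once the tune ends, isPlaying is set back to false. The flag is meant to avoid interruptions, but the real protection comes from playMelody() itself. It blocks until the last note finishes, so a new trigger cannot cut into a tune that is already playing. Last but not least, ChatGPT was used to order the pitches.h file, as coding it myself would have been impossible.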
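Because playMelody() blocks, `isPlaying` is always false by the time loop() checks it again. As a result, the tune restarts as soon as it finishes for as long as the watch stays on a pad. If the intent is one greeting per arrival, a common fix is edge detection: play only when the pads go from empty to pressed. Here is a minimal sketch of that idea. `PlacementTrigger` is a hypothetical name and is not part of the original code.

```cpp
// Hypothetical helper: reports true only on the transition from
// "nothing on the pads" to "something on the pads" (a rising edge).
class PlacementTrigger {
 public:
  // Call once per loop() with the combined sensor state,
  // e.g. sensorTriggered1 || sensorTriggered2.
  bool update(bool pressed) {
    bool rising = pressed && !wasPressed_;
    wasPressed_ = pressed;  // remember the state for the next call
    return rising;
  }

 private:
  bool wasPressed_ = false;
};
```

In the sketch, a global `PlacementTrigger trigger;` could replace `isPlaying`. The check in loop() would then become `if (trigger.update(sensorTriggered1 || sensorTriggered2))`. With this change, the tune plays once when the watch is put down and again only after it has been lifted and replaced.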

Challenges:

The one and only challenge I faced was the FSRs registering HIGH even when no pressure was applied, which led me to do some research. It turns out that a floating input pin, along with inaccuracy or wear in the sensor, can produce false positives. I therefore connected a resistor between the sensor and ground (a pull-down resistor) to drain the stray current, so the pin registers HIGH only under solid pressure. I also attached an LED before the buzzer, both for visual aesthetics and to indicate that current was flowing to the buzzer. Initially the buzzer would not work either, which led me back to the code, where I found the bug: a wrong pin assignment.
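The pull-down resistor fixes the floating input in hardware. If stray HIGH readings still slipped through, a software filter could complement it by accepting a press only after several consecutive HIGH samples. This is a swapped-in technique, not what I used in the build; `ConsecutiveFilter` and the sample count of 3 are hypothetical.

```cpp
#include <cassert>

// Hypothetical software filter: a reading only counts as "pressed" after
// `required` consecutive HIGH samples, so a single stray HIGH is ignored.
// Any LOW sample resets the count, so releases are reported immediately.
class ConsecutiveFilter {
 public:
  explicit ConsecutiveFilter(int required) : required_(required) {
    assert(required > 0);
  }

  // Feed one raw digitalRead() result (true = HIGH); returns the filtered state.
  bool update(bool rawHigh) {
    if (rawHigh) {
      if (count_ < required_) count_++;  // saturate to avoid overflow
    } else {
      count_ = 0;
    }
    return count_ >= required_;
  }

 private:
  int required_;
  int count_ = 0;
};
```

Each FSR would get its own filter, fed once per loop() with that sensor's `digitalRead()` result.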

Demo:

Future Revisions:

For future revisions, I intend to add more songs, more FSR sensors, and an array of LEDs that mimics the sound pattern.
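For the planned LED array, one simple way to make the LEDs follow the music is to light more of them for higher notes. The function below is a hypothetical sketch with several assumptions:

- **Range:** NOTE_C4 to NOTE_C6 (262 to 1047 Hz), which covers both current melodies.
- **Mapping:** linear between those two frequencies.
- **LED count:** eight LEDs.

```cpp
#include <cassert>

// Hypothetical mapping for the planned LED array (not part of the build):
// REST (0 Hz) lights no LEDs, minHz lights one, maxHz lights all numLeds,
// and frequencies outside [minHz, maxHz] are clamped to the nearest end.
int ledsForNote(int frequencyHz, int minHz, int maxHz, int numLeds) {
  assert(numLeds > 0 && maxHz > minHz);
  if (frequencyHz <= 0) return 0;  // REST
  if (frequencyHz < minHz) frequencyHz = minHz;
  if (frequencyHz > maxHz) frequencyHz = maxHz;
  // long intermediate: on AVR boards int is only 16 bits wide
  long scaled = static_cast<long>(frequencyHz - minHz) * (numLeds - 1);
  return 1 + static_cast<int>(scaled / (maxHz - minHz));
}
```

Inside playMelody(), calling `ledsForNote(melody[i], NOTE_C4, NOTE_C6, 8)` before `tone()` would give the number of LEDs to switch on for that note.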