Concept

I’ve been learning the piano for the past year, so when we were asked to make a musical instrument, I knew right away that I wanted to build something at least tangentially related to the piano.

Another goal for this week’s assignment was to put more ‘design wrapping’ around the project. I liked what the professor said about the Week 9 projects: a simple, well-wrapped project is often much nicer than a messy but technically impressive one. So before designing anything, I thought about which physical materials I could use and how they might enhance the project.

I thought of something like Nintendo’s cardboard piano, which sounded fun to implement. However, when I read the documentation for Arduino’s `tone()` function, I learned that only one tone can be played at a time, even with multiple piezo buzzers. I didn’t like the idea of a piano that can only play one note at a time, and a piano also felt like an overdone choice for an Arduino musical instrument.

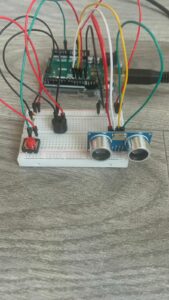

I still opted to build something piano-like, but instead of using touch as input, I used an ultrasonic sensor, which turns the instrument into a kind of theremin. Since a theremin plays only one pitch at a time anyway, the one-tone limit becomes a feature rather than a bug. During implementation, however, I found that the ultrasonic sensor isn’t very precise, so I couldn’t add the black keys without sacrificing accuracy.

Using distance to pick the note raised another question: how often should a note play? To address this, I added a potentiometer that sets the tempo, so the theremin plays one note every 400 to 1000 ms depending on the potentiometer’s position.

Without visual feedback it would be hard to dial in the tempo you want, so I also added an LED that blinks at the same rate the theremin plays. If the LED is blinking at the pace you want the song played, you’re good!
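The two mappings described above (hand distance to a white key, potentiometer position to tempo) can be sketched as plain C++ so the logic is testable off the board. This is a minimal illustration under stated assumptions, not the project's actual code: the 5 to 45 cm detection window, the C4 to C5 key range, the half-period LED duty cycle, and every function name here are hypothetical. The note frequencies are the rounded values from Arduino's `pitches.h`, and `mapRange` reproduces the integer arithmetic of Arduino's `map()`.

```cpp
#include <array>
#include <cassert>

// ASSUMPTION: hypothetical detection window for the hand, in cm.
constexpr double kMinCm = 5.0;
constexpr double kMaxCm = 45.0;
static_assert(kMaxCm > kMinCm, "window must be non-empty");

// White keys C4..C5 in Hz (rounded values as in Arduino's pitches.h).
// No black keys: the sensor is too imprecise for narrower bands.
constexpr std::array<int, 8> kWhiteKeysHz = {262, 294, 330, 349,
                                             392, 440, 494, 523};

// Convert an HC-SR04-style echo pulse width (microseconds) to cm.
// Sound travels about 0.0343 cm/us; the pulse covers the round trip, so halve it.
double echoToCm(long echoMicros) {
  return echoMicros * 0.0343 / 2.0;
}

// Same integer arithmetic as Arduino's map().
long mapRange(long x, long inMin, long inMax, long outMin, long outMax) {
  return (x - inMin) * (outMax - outMin) / (inMax - inMin) + outMin;
}

// Split the window into equal bands, one per white key.
// Returns -1 when the hand is outside the window (play nothing).
int noteIndexForDistance(double cm) {
  if (cm < kMinCm || cm >= kMaxCm) return -1;
  const int keys = static_cast<int>(kWhiteKeysHz.size());
  const double bandWidth = (kMaxCm - kMinCm) / keys;
  const int idx = static_cast<int>((cm - kMinCm) / bandWidth);
  return idx < keys ? idx : keys - 1;  // guard against floating-point edge cases
}

// Frequency to hand to tone(), or 0 meaning "stay silent".
int frequencyForDistance(double cm) {
  const int idx = noteIndexForDistance(cm);
  return idx < 0 ? 0 : kWhiteKeysHz[idx];
}

// Potentiometer reading (analogRead() returns 0..1023) -> ms between notes.
long noteIntervalMs(int potReading) {
  assert(potReading >= 0 && potReading <= 1023);
  return mapRange(potReading, 0, 1023, 400, 1000);
}

// ASSUMPTION: LED is on for the first half of each note period,
// so it blinks at exactly the tempo the theremin plays.
bool ledOn(unsigned long nowMs, long intervalMs) {
  return static_cast<long>(nowMs % intervalMs) < intervalMs / 2;
}
```

On the board, `loop()` could read the potentiometer with `analogRead()`, and once `millis()` shows that `noteIntervalMs()` has elapsed since the last note, measure the echo with `pulseIn()` and call `tone(buzzerPin, freq, duration)` (with `buzzerPin` a hypothetical pin constant) whenever `frequencyForDistance()` returns a nonzero value, while driving the LED from `ledOn(millis(), interval)`. Equal-width bands are the simplest choice; keeping each band several centimetres wide is what makes an imprecise sensor usable.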

Video