Concept:

Rujul and I collaborated on our Week 10 assignment, centered on crafting a musical instrument. Our initial concept revolved around utilizing the ultrasonic sensor and piezo speaker to create a responsive system, triggering sounds based on proximity. However, we expanded the idea by incorporating a servo motor to introduce a physical dimension to our instrument. The final concept evolved into a dynamic music box that not only moved but also emitted sound. The servo motor controlled the music box’s rotation, while the ultrasonic sensor detected proximity, prompting different tunes to play. To reset the music box, we integrated a button to return the servo motor to its initial position.

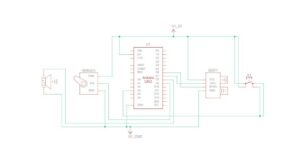

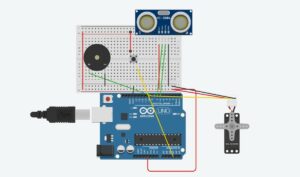

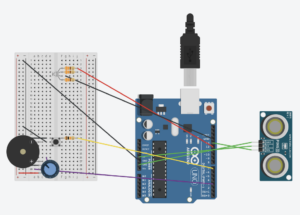

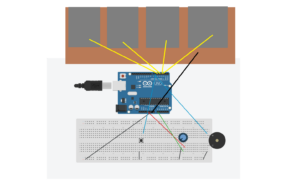

Modelling and Schematic:

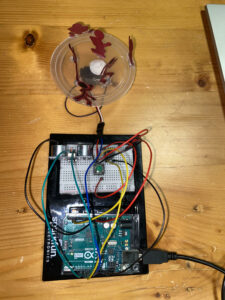

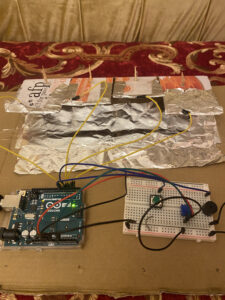

Prototype:

Code:

Our prototype came together smoothly, and the code was relatively straightforward. The most interesting part was setting the distance thresholds (up to 5 cm, 5 to 10 cm, and 10 to 20 cm) that select one of three melodies based on the object’s position within the carousel.

    // Measure the distance with the ultrasonic sensor and play the melody
    // that matches the detected range.
    void checkDistanceAndSound() {
      long duration;  // echo time in microseconds; a 16-bit int (as on AVR boards) could overflow
      int distance;   // distance in centimeters

      // Send a 10-microsecond trigger pulse
      digitalWrite(TRIG_PIN, LOW);
      delayMicroseconds(2);
      digitalWrite(TRIG_PIN, HIGH);
      delayMicroseconds(10);
      digitalWrite(TRIG_PIN, LOW);

      // The echo covers the round trip, so halve it; sound travels about 0.0343 cm per microsecond
      duration = pulseIn(ECHO_PIN, HIGH);
      distance = (duration * 0.0343) / 2;

      // Play different melodies for different distance ranges
      if (distance > 0 && distance <= 5 && !soundProduced) {
        playMelody1();
        soundProduced = true;
        delay(2000); // Adjust delay as needed to prevent continuous sound
      } else if (distance > 5 && distance <= 10 && !soundProduced) {
        playMelody2();
        soundProduced = true;
        delay(2000); // Adjust delay as needed to prevent continuous sound
      } else if (distance > 10 && distance <= 20 && !soundProduced) {
        playMelody3();
        soundProduced = true;
        delay(2000); // Adjust delay as needed to prevent continuous sound
      } else {
        // Out of range, or a melody was just played: silence the speaker and re-arm
        noTone(SPEAKER_PIN);
        soundProduced = false;
      }
    }
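
The threshold logic in checkDistanceAndSound() can also be pulled out into small functions that run without a board, which makes the ranges easy to check. The sketch below is only one possible variant, not our actual implementation: the names `echoToCentimeters`, `melodyForDistance`, and `nextMelody` are hypothetical. It also changes one behavior on purpose. In the function above, the flag is cleared again on the very next call after a melody plays, so a melody can repeat while the object stays in place; here the flag is cleared only once the object leaves the 0 to 20 cm range.

```cpp
// Hypothetical helpers (not part of the original sketch). Plain C++, no Arduino calls.

// Convert an echo time in microseconds to whole centimeters.
// Sound travels about 0.0343 cm per microsecond and the echo covers the round trip.
int echoToCentimeters(unsigned long echoMicros) {
  return static_cast<int>((echoMicros * 0.0343) / 2);
}

// Map a distance to a melody number using the thresholds from the post:
// 1 for (0, 5] cm, 2 for (5, 10] cm, 3 for (10, 20] cm, 0 for anything else.
int melodyForDistance(int cm) {
  if (cm > 0 && cm <= 5) return 1;
  if (cm > 5 && cm <= 10) return 2;
  if (cm > 10 && cm <= 20) return 3;
  return 0;
}

// One-shot latch: returns the melody to start now, or 0 for "play nothing".
// The flag is cleared only when the reading is out of range, so an object
// that stays put triggers its melody once. Moving between ranges without
// leaving them does not retrigger in this simple version.
int nextMelody(int cm, bool &soundProduced) {
  int melody = melodyForDistance(cm);
  if (melody == 0) {
    soundProduced = false;  // out of range: re-arm
    return 0;
  }
  if (soundProduced) return 0;  // still in range and already played
  soundProduced = true;
  return melody;
}
```

On the board, the result of `nextMelody(echoToCentimeters(pulseIn(ECHO_PIN, HIGH)), soundProduced)` could be used to choose between playMelody1(), playMelody2(), and playMelody3(), assuming `soundProduced` is the same global flag used above.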

    void playMelody1() {
      int melody[] = {262, 294, 330, 349, 392, 440, 494, 523}; // First melody
      int noteDurations[] = {250, 250, 250, 250, 250, 250, 250, 250};
      for (int i = 0; i < 8; i++) {
        tone(SPEAKER_PIN, melody[i], noteDurations[i]);
        delay(noteDurations[i]);
      }
    }

    void playMelody2() {
      int melody[] = {392, 440, 494, 523, 587, 659, 698, 784}; // Second melody
      int noteDurations[] = {200, 200, 200, 200, 200, 200, 200, 200};
      for (int i = 0; i < 8; i++) {
        tone(SPEAKER_PIN, melody[i], noteDurations[i]);
        delay(noteDurations[i]);
      }
    }

    void playMelody3() {
      int melody[] = {330, 330, 392, 392, 440, 440, 330, 330}; // Third melody
      int noteDurations[] = {500, 500, 500, 500, 500, 500, 1000, 500};
      for (int i = 0; i < 8; i++) {
        tone(SPEAKER_PIN, melody[i], noteDurations[i]);
        delay(noteDurations[i]);
      }
    }
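
The three melody functions share the same loop and differ only in their data, so they could be folded into one table-driven player. The following is a rough sketch of that idea under some assumptions, not what we actually ran: the `Note` struct, `MELODY_3`, `playMelody`, and `melodyLengthMs` are hypothetical names, and the tone and wait steps are passed in as functions so that the loop can be exercised without a board.

```cpp
#include <stddef.h>

// Hypothetical refactor (not part of the original sketch): one table-driven player.
struct Note {
  int frequencyHz;
  int durationMs;
};

// Same notes and durations as playMelody3() in the post.
const Note MELODY_3[] = {
  {330, 500}, {330, 500}, {392, 500}, {392, 500},
  {440, 500}, {440, 500}, {330, 1000}, {330, 500},
};
const size_t MELODY_3_LENGTH = sizeof(MELODY_3) / sizeof(MELODY_3[0]);

// Start each note, then wait for it to finish before the next one.
// startTone(frequencyHz, durationMs) and wait(durationMs) are supplied by
// the caller; on the board they would wrap tone() and delay().
template <typename ToneFn, typename WaitFn>
void playMelody(const Note *notes, size_t count, ToneFn startTone, WaitFn wait) {
  for (size_t i = 0; i < count; i++) {
    startTone(notes[i].frequencyHz, notes[i].durationMs);
    wait(notes[i].durationMs);
  }
}

// Total playing time of a melody in milliseconds.
long melodyLengthMs(const Note *notes, size_t count) {
  long total = 0;
  for (size_t i = 0; i < count; i++) {
    total += notes[i].durationMs;
  }
  return total;
}
```

On the board, a call might look like `playMelody(MELODY_3, MELODY_3_LENGTH, [](int f, int d) { tone(SPEAKER_PIN, f, d); }, [](int d) { delay(d); });`, assuming `SPEAKER_PIN` is a global constant or macro. The other two melodies would just be additional tables.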

Reflection:

Reflecting on our project, I think adding LEDs synchronized to the sounds would make the ultrasonic sensor’s behavior easier to see. Each LED would correspond to a particular sound, so it would be visible which distance range the sensor had detected. A larger-scale version could also make the instrument more interactive and engaging.