After reading Norman, I kept thinking about how often I’ve felt genuinely embarrassed, not because I did something wrong, but because something was so badly designed that it made me look like I didn’t know what I was doing. I’ve blamed myself so many times for design failures, but Norman makes it clear that the fault lies with the object, not with me. One thing that still annoys me is the sink setup at Dubai Airport. The soap, water, and dryer are all built into one sleek bar, with no sign telling you which part does what. You just keep waving your hands around and hope something responds. Sometimes the dryer blasts air when you’re trying to get soap, and sometimes nothing works at all. To make things worse, some mirrors have Dyson hand dryers built in, while others have tissues hidden somewhere off to the side, and there’s no way to know without ducking and peeking like a crazy person. Norman’s point about discoverability and signifiers felt especially real here. One simple label could fix all of it.

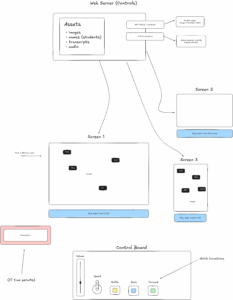

In my interactive media work, I’m starting to think more about how people approach what I build. Norman’s ideas about system image and mental models stuck with me. If someone doesn’t know what they’re supposed to do when they see my sketch, I’ve already failed as a designer. So I try to make interactive elements obvious and responsive. If something is clickable, it should look clickable. If something changes, the feedback should be clear. The goal is to make users feel confident and in control, not confused or hesitant. Good design doesn’t need to explain itself. It should just make sense.