Concept

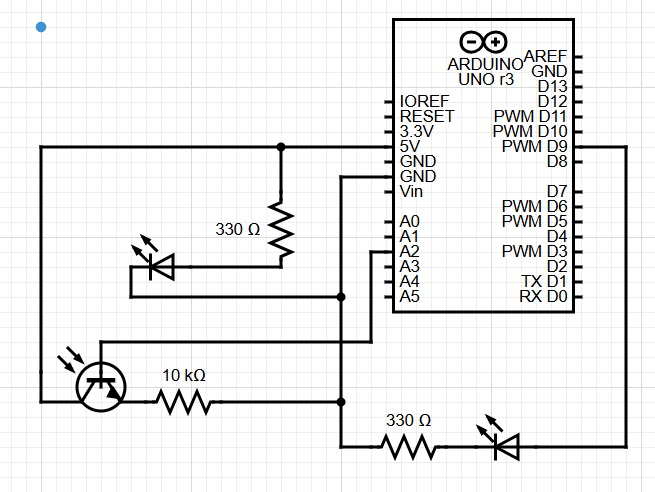

For this project, I built a simple lighting system using an LDR (light-dependent resistor, i.e. a light sensor), a push-button, and two LEDs. One LED changes brightness depending on how bright the room is, and the other lights up to show when I’ve manually taken control.

I wanted to be able to override the automatic light control with the press of a button: if I want the light to stay at a fixed brightness no matter how bright it is outside, I just hit the button. Press it again, and the automatic behavior comes back.

I used Tinkercad for the circuit simulation.

Video

How It Works

- The LDR is connected to pin A0 and tells the Arduino how bright the environment is.
- The Arduino maps this reading (0 to 1023 from analogRead()) to a PWM value between 0 and 255, which sets the LED brightness.
- In automatic mode, the LED on pin 9 gets brighter when it’s dark and dims when it’s bright.
- I also wired a button to pin 2. When I press it, the system switches to manual mode.
- In manual mode, the LED stays at medium brightness (a PWM value of 128), no matter the light level.
- An indicator LED on pin 13 lights up to let me know I’m in manual mode.
- Pressing the button again switches back to automatic mode.
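To see what the mapping does to actual numbers, here is a small host-side C++ model you can compile on a PC. `mapRange()` reproduces the integer formula Arduino documents for `map()`, and `ledBrightness()` picks between the fixed manual value (128) and the mapped value, the same choice `loop()` makes. Both function names are my own (not part of the Arduino API), and this is a sketch of the logic rather than code that runs on the board.

```cpp
// Host-side model of the brightness logic (not Arduino code).
// mapRange() and ledBrightness() are hypothetical names used for illustration.

// Same integer formula that Arduino documents for map():
// the division truncates toward zero, so fractional results are dropped.
long mapRange(long x, long inMin, long inMax, long outMin, long outMax) {
  return (x - inMin) * (outMax - outMin) / (inMax - inMin) + outMin;
}

// Mode choice: fixed 128 in manual mode, otherwise the inverted LDR mapping
// (low reading = dark = bright LED).
int ledBrightness(bool manualOverride, int ldrValue) {
  if (manualOverride) {
    return 128;  // medium brightness, independent of the light level
  }
  return static_cast<int>(mapRange(ldrValue, 0, 1023, 255, 0));
}
```

With this formula, a dark reading of 0 gives full brightness (255), a bright reading of 1023 turns the LED off (0), and a mid-range reading of 512 lands at 128, about the same level as manual mode.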

Code

```cpp
// Pin Definitions
const int ldrPin = A0;          // LDR sensor connected to A0
const int buttonPin = 2;        // Push-button connected to digital pin D2
const int pwmLedPin = 9;        // PWM LED for the ambient light effect
const int overrideLedPin = 13;  // Digital LED for manual override indicator

// Variables
bool manualOverride = false;            // Tracks if the override mode is active
int buttonState = LOW;                  // Debounced (stable) button state
int lastButtonState = LOW;              // Raw reading from the previous loop (external pull-down, so default is LOW)
unsigned long lastDebounceTime = 0;     // Last time the raw reading changed
const unsigned long debounceDelay = 50; // Debounce time in milliseconds

void setup() {
  pinMode(ldrPin, INPUT);
  pinMode(buttonPin, INPUT);
  pinMode(pwmLedPin, OUTPUT);
  pinMode(overrideLedPin, OUTPUT);

  // Start with manual override off, indicator LED off
  digitalWrite(overrideLedPin, LOW);

  Serial.begin(9600);
}

void loop() {
  // Read the LDR sensor (0-1023)
  int ldrValue = analogRead(ldrPin);

  // Map the LDR value to PWM brightness (0-255).
  // Darker environment (low ldrValue) yields a higher brightness.
  int pwmValue = map(ldrValue, 0, 1023, 255, 0);

  // Handle the push-button for manual override, with debouncing
  int reading = digitalRead(buttonPin);

  if (reading != lastButtonState) {
    // The raw input changed (press, release, or contact bounce): restart the timer
    lastDebounceTime = millis();
  }

  if ((millis() - lastDebounceTime) > debounceDelay) {
    // The reading has been steady longer than debounceDelay, so accept it
    if (reading != buttonState) {
      buttonState = reading;

      // Unpressed = LOW, pressed = HIGH: toggle on the press only, not the release
      if (buttonState == HIGH) {
        manualOverride = !manualOverride;

        // Update the indicator LED accordingly
        digitalWrite(overrideLedPin, manualOverride ? HIGH : LOW);
      }
    }
  }

  lastButtonState = reading;

  // LED behavior based on mode
  if (manualOverride) {
    // In manual override mode, set the LED to a fixed (medium) brightness.
    analogWrite(pwmLedPin, 128);
  } else {
    // Set brightness according to the ambient light measured by the LDR.
    analogWrite(pwmLedPin, pwmValue);
  }

  // Debug output
  Serial.print("LDR Value: "); Serial.print(ldrValue);
  Serial.print(" | PWM Brightness: "); Serial.print(pwmValue);
  Serial.print(" | Manual Override: "); Serial.println(manualOverride ? "ON" : "OFF");

  delay(10);
}
```
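The button handling is the trickiest part of `loop()`, so here is a host-side C++ model of a debounced toggle that can be tested on a PC with made-up timestamps. It follows the pattern of Arduino's built-in Debounce example: the raw reading from the previous pass and the debounced (stable) state are tracked separately, and the mode flips only when the stable state goes from LOW to HIGH. The `DebouncedToggle` struct and its `update()` method are hypothetical names, and the `now` parameter stands in for `millis()`.

```cpp
// Host-side model of a debounced toggle (not Arduino code).
// DebouncedToggle and update() are hypothetical names; `now` replaces millis().
struct DebouncedToggle {
  unsigned long debounceDelay = 50;  // ms, same value as the sketch
  bool lastReading = false;          // raw reading from the previous update
  bool stableState = false;          // debounced state (false = released)
  unsigned long lastChangeTime = 0;  // when the raw reading last changed
  bool manualOverride = false;       // flipped once per debounced press

  void update(bool reading, unsigned long now) {
    if (reading != lastReading) {
      lastChangeTime = now;  // press, release, or bounce: restart the timer
    }
    // Accept the reading only after it has been steady for debounceDelay ms
    if (now - lastChangeTime > debounceDelay && reading != stableState) {
      stableState = reading;
      if (stableState) {
        manualOverride = !manualOverride;  // act on the press edge only
      }
    }
    lastReading = reading;
  }
};
```

Feeding it a bouncy press (for example HIGH, LOW, HIGH within a few milliseconds) flips `manualOverride` only once, after the reading has stayed HIGH for more than 50 ms, and the bouncy release that follows does not flip it back.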

Challenges

- Balancing Automatic and Manual Modes: Getting the right balance between automatic brightness adjustments and a satisfying manual override was a fun challenge. I had to fine-tune the mapping of LDR readings to PWM values until the LED’s response felt right in different lighting conditions.

- Debugging with Serial Monitor: The Serial Monitor was incredibly useful. Every time something wasn’t working as expected, I added more Serial prints (the LDR reading, the computed PWM value, and whether manual override was on) to understand what was happening.
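One way to fine-tune the response is to narrow the input range of the mapping to the readings you actually see, instead of the full 0 to 1023. On the board that would be `constrain()` followed by `map()`; the host-side C++ sketch below re-implements both so it compiles on a PC. `LDR_DARK` and `LDR_BRIGHT` are hypothetical calibration values (for example, readings noted from the Serial Monitor in the darkest and brightest conditions), not numbers from my build, and `clampTo()`, `mapRange()`, and `calibratedPwm()` are illustrative names.

```cpp
// Host-side sketch of a calibrated mapping (not the project's code).
// Hypothetical calibration bounds: raw LDR readings in the darkest and
// brightest conditions of interest.
const long LDR_DARK = 200;
const long LDR_BRIGHT = 900;

// Same clamping behavior as Arduino's constrain().
long clampTo(long x, long lo, long hi) {
  return x < lo ? lo : (x > hi ? hi : x);
}

// Same integer formula as Arduino's map(); the division truncates toward zero.
long mapRange(long x, long inMin, long inMax, long outMin, long outMax) {
  return (x - inMin) * (outMax - outMin) / (inMax - inMin) + outMin;
}

int calibratedPwm(int ldrValue) {
  // Readings outside the calibrated band are pinned to its edges
  long clamped = clampTo(ldrValue, LDR_DARK, LDR_BRIGHT);
  // Inverted mapping: darkest band edge -> 255, brightest -> 0
  return static_cast<int>(mapRange(clamped, LDR_DARK, LDR_BRIGHT, 255, 0));
}
```

With these example bounds, anything at or below 200 gives full brightness, anything at or above 900 turns the LED off, and the midpoint 550 maps to 128, so the whole PWM range is spent on light levels that actually occur.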