Before diving into the first task, we began by loading the sample Arduino and p5.js code from the previous class. We then read through each line to see how Arduino connects and communicates with p5. This served as a helpful foundation to jumpstart our workflow.

Task 1:

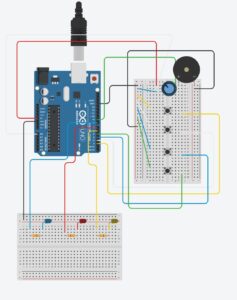

After reviewing the code and identifying the necessary components, we built the circuit with a sliding potentiometer. Using analogRead() on pin A0, we captured the potentiometer’s value and sent it to p5. The readings ranged from about 0 to 900, so we divided them by 2.25 (900 / 2.25 = 400, the canvas width) to map them onto the x-axis of the canvas, which gave smooth and accurate movement. The global variable ‘pos’ stores the mapped value and is used as the x-position of the ellipse.
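Dividing by 2.25 is a linear rescale: 900 / 2.25 = 400, so the largest reading lands at the right edge of the 400-pixel-wide canvas. The sketch below, written in plain JavaScript so it runs outside p5, shows the same idea as a general range-mapping helper, similar in spirit to p5's `map()`. The clamping step and the names `rescale`, `SENSOR_MAX`, and `CANVAS_WIDTH` are our own assumptions, not part of the class code; clamping keeps the ellipse on the canvas if the slider ever reports more than 900 (analogRead() can return up to 1023 on a 10-bit board like the Uno).

```javascript
// Hypothetical helper: linearly rescale `value` from [inMin, inMax] to [outMin, outMax],
// then clamp so out-of-range readings stay inside the output range.
function rescale(value, inMin, inMax, outMin, outMax) {
  const t = (value - inMin) / (inMax - inMin); // position within the input range (0..1 when in range)
  const out = outMin + t * (outMax - outMin);
  const lo = Math.min(outMin, outMax);
  const hi = Math.max(outMin, outMax);
  return Math.min(Math.max(out, lo), hi);
}

const SENSOR_MAX = 900;   // assumption: the highest reading we observed from the slider
const CANVAS_WIDTH = 400; // matches createCanvas(400, 400)

// Simulate one incoming serial line; trim() removes the "\r\n" that println() appends.
const reading = Number("450\r\n".trim());
console.log(rescale(reading, 0, SENSOR_MAX, 0, CANVAS_WIDTH), reading / 2.25); // 200 200
```

Inside the p5 sketch itself, `map(value, 0, 900, 0, width, true)` should behave the same way, since the optional last argument of p5's `map()` constrains the result to the target range.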

Here is the p5.js code:

let pos = 0;

function setup() {

createCanvas(400, 400);

}

function draw() {

background(220);

ellipse(pos,200,100,100);

}

function keyPressed() {

if (key == " ") {

setUpSerial(); // setting up connection between arduino and p5.js

}

}

function readSerial(data) {

if (data != null) {

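let fromArduino = int(trim(data)); // convert the incoming text line to a number

pos = fromArduino / 2.25; // maps 0-900 onto the 0-400 x range of the 400 by 400 canvas

let sendToArduino = "0\n"; // nothing to control on the Arduino here; replying lets its handshake loop in setup() exit

writeSerial(sendToArduino);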

}

}

And the Arduino code:

int sensor = A0;

void setup() {

Serial.begin(9600);

pinMode(LED_BUILTIN, OUTPUT);

digitalWrite(LED_BUILTIN, HIGH); // blink once so we can check the board is running

delay(200);

digitalWrite(LED_BUILTIN, LOW);

// starting the handshake

while (Serial.available() <= 0) {

digitalWrite(LED_BUILTIN, HIGH); // on/blink while waiting for serial data

Serial.println("0"); // send a starting message

delay(300); // wait 1/3 second

digitalWrite(LED_BUILTIN, LOW);

delay(50);

}

}

void loop() {

digitalWrite(LED_BUILTIN, LOW);

int reading = analogRead(sensor); // read the slider on pin A0

Serial.println(reading); // sending sensor information to p5.js

}

Here’s the video of it in action:

https://drive.google.com/file/d/1kT32H353kkMX_5HeKBphHf4Cxy-xhMF_/view?usp=sharing

Task 2:

We decided to create an input box: when the user entered a number between 0 and 255, the blue LED on the breadboard lit up at the corresponding brightness. It was a relatively simple implementation that required very few code changes.
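In the p5.js code for this task, `input.value()` is sent as-is, so an empty box or a number outside 0 to 255 goes straight to the Arduino. A small validation step could sit between the input box and writeSerial(). The following is a sketch under our own assumptions: the helper name `brightnessMessage` is hypothetical, and treating non-numeric text as 0 (LED off) is just one possible choice.

```javascript
// Hypothetical helper (not in our sketch): turn the text typed into the input box
// into the newline-terminated message the Arduino expects. The Arduino side reads it
// with Serial.parseInt() and then checks that the next character is '\n'.
function brightnessMessage(text) {
  const n = Number.parseInt(text.trim(), 10);
  // Assumption: anything that is not a number counts as 0 (LED off), so a reply
  // is still sent every time readSerial() runs.
  if (Number.isNaN(n)) {
    return "0\n";
  }
  // By default, analogWrite() on boards like the Uno takes a duty cycle from 0 to 255.
  const clamped = Math.min(Math.max(n, 0), 255);
  return clamped + "\n";
}

console.log(JSON.stringify(brightnessMessage("128"))); // "128\n"
console.log(JSON.stringify(brightnessMessage("999"))); // "255\n"
console.log(JSON.stringify(brightnessMessage("abc"))); // "0\n"
```

Inside readSerial(), `writeSerial(brightnessMessage(input.value()))` would then replace sending `input.value() + "\n"` directly.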

Here’s the p5.js code:

let ledval = 0;

let input;

function setup() {

createCanvas(400, 400);

input = createInput('');

input.position(120, 100);

}

function draw() {

background(220);

}

function keyPressed() {

if (key == " ") {

setUpSerial(); // setting up connection

}

}

function readSerial(data) {

if (data != null) {

let fromArduino = trim(data);

let sendToArduino = input.value() + "\n";

writeSerial(sendToArduino);

}

}

And the Arduino code:

int led = 3;

void setup() {

Serial.begin(9600);

pinMode(LED_BUILTIN, OUTPUT);

pinMode(led, OUTPUT);

// Blink them so we can check the wiring

digitalWrite(led, HIGH);

delay(200);

digitalWrite(led, LOW);

// start the handshake

while (Serial.available() <= 0) {

digitalWrite(LED_BUILTIN, HIGH); // on/blink while waiting for serial data

Serial.println("0,0"); // send a starting message

delay(300); // wait 1/3 second

digitalWrite(LED_BUILTIN, LOW);

delay(50);

}

}

void loop() {

// wait for data from p5 before doing something

while (Serial.available()) {

digitalWrite(LED_BUILTIN, HIGH); // led on while receiving data

int ledVal = Serial.parseInt();

if (Serial.read() == '\n') {

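analogWrite(led, ledVal); // set the LED brightness (0-255)

Serial.println(ledVal); // reply so p5's readSerial() runs again and sends the next value

delay(5);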

}

}

digitalWrite(LED_BUILTIN, LOW);

}

And finally, here’s the video of it in action:

https://drive.google.com/file/d/1eMi1d_3H6abYxtYwyEpybCnZB7-fTVXF/view?usp=sharing

Task 3:

For the last task, we first opened and examined the provided gravity wind code. We identified two places we could change to complete the task: the “wind.x” variable and the “(position.y > height-mass/2)” IF statement. Mapping the analog value read from pin A0 onto wind.x lets the potentiometer push the ball along the x-axis. And since that IF statement detects when the ball touches the ground, we could simply add a line inside it that sets a boolean flag to true, send the flag to the Arduino, and use digitalWrite to light the LED (replacing the analogWrite driven by the input box in Task 2).
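Stripped of the drawing code, the two changes amount to: read a number, turn it into a wind force, and reply with the floor flag. The sketch below models one round of that exchange in plain JavaScript. `handleSerialLine`, `mapRange`, and the `state` object are hypothetical stand-ins for p5's `map()` and the sketch's global variables, not code from our project.

```javascript
// Hypothetical, p5-free model of one round of the Task 3 exchange:
// one line arrives from the Arduino (the potentiometer reading) and
// one line goes back (whether the ball hit the floor).
const SENSOR_MAX = 912; // the upper bound we used in map()

function mapRange(v, inMin, inMax, outMin, outMax) {
  return outMin + ((v - inMin) / (inMax - inMin)) * (outMax - outMin);
}

function handleSerialLine(line, state) {
  // parseInt stops at the first non-digit, so the handshake's "0,0" becomes 0;
  // "|| 0" guards against lines with no digits at all (NaN).
  const reading = parseInt(line.trim(), 10) || 0;
  state.windX = mapRange(reading, 0, SENSOR_MAX, -2, 2); // -2 (left) .. 2 (right)
  const reply = Number(state.hitFloor) + "\n"; // "1\n" if the ball touched the ground since the last reply
  state.hitFloor = false; // one-shot flag: cleared as soon as a reply goes out
  return reply;
}

const state = { windX: 0, hitFloor: false };
state.hitFloor = true; // what draw() does when position.y > height - mass/2
console.log(JSON.stringify(handleSerialLine("912\r\n", state)), state.windX); // "1\n" 2
console.log(JSON.stringify(handleSerialLine("456\r\n", state)), state.windX); // "0\n" 0
```

Because the flag is cleared every time a reply goes out, a single bounce lights the LED only until the next reply, unless draw() sets the flag again in the meantime.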

Here’s how we did it in p5.js:

let velocity;

let gravity;

let position;

let acceleration;

let wind;

let drag = 0.99;

let mass = 50;

let floor = false; // true when the ball touches the ground (note: this name shadows p5's built-in floor() function)

function setup() {

createCanvas(640, 360);

noFill();

position = createVector(width/2, 0);

velocity = createVector(0,0);

acceleration = createVector(0,0);

gravity = createVector(0, 0.5*mass);

wind = createVector(0,0);

}

function draw() {

background(255);

applyForce(wind);

applyForce(gravity);

velocity.add(acceleration);

velocity.mult(drag);

position.add(velocity);

acceleration.mult(0);

ellipse(position.x,position.y,mass,mass);

if (position.y > height-mass/2) {

velocity.y *= -0.9; // A little dampening when hitting the bottom

position.y = height-mass/2;

floor = true; // light up the LED!

}

}

function applyForce(force){

// Newton's 2nd law: F = M * A

// or A = F / M

let f = p5.Vector.div(force, mass);

acceleration.add(f);

}

function keyPressed(){

if (key == " ") {

setUpSerial(); // setting up serial connection

}

if (keyCode==LEFT_ARROW){

wind.x=-1;

}

if (keyCode==RIGHT_ARROW){

wind.x=1;

}

if (key=='s'){ // changed from space to 's' since SPACEBAR is used to initiate serial connection pairing to arduino

mass=random(15,80);

position.y=-mass;

velocity.mult(0);

}

}

function readSerial(data) {

if (data != null) {

let fromArduino = trim(data);

wind.x = map(int(fromArduino), 0, 912, -2, 2); // convert to a number first (so the handshake's "0,0" becomes 0, not NaN), then map the sensor's analog value to the ball's wind x value

let sendToArduino = Number(floor) + "\n";

writeSerial(sendToArduino);

floor = false; // turning off blue LED

}

}

*We used the Number() function to convert the boolean flag to an integer (true becomes 1, false becomes 0). Initially, the flag was not being sent as a numeric value, so digitalWrite on the Arduino never turned the LED on.
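The difference is easy to see in plain JavaScript. Our best guess at the cause: without Number(), the message is the text "true\n" or "false\n". Serial.parseInt() skips characters that are not digits and, per the Arduino reference, returns 0 when no digits arrive before its timeout, so the LED never received a 1.

```javascript
// Concatenating a boolean with a string produces the words "true"/"false",
// which contain no digits for the Arduino's Serial.parseInt() to read.
const hitFloor = true;
console.log(JSON.stringify(hitFloor + "\n")); // "true\n"

// Number() maps true -> 1 and false -> 0 before concatenation.
console.log(JSON.stringify(Number(hitFloor) + "\n")); // "1\n"
console.log(JSON.stringify(Number(false) + "\n")); // "0\n"
```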

And the Arduino code:

int sensor = A0;

int led = 3;

void setup() {

Serial.begin(9600);

pinMode(LED_BUILTIN, OUTPUT);

pinMode(led, OUTPUT);

// Blink them so we can check the wiring

digitalWrite(led, HIGH);

delay(200);

digitalWrite(led, LOW);

// start the handshake

while (Serial.available() <= 0) {

digitalWrite(LED_BUILTIN, HIGH); // on/blink while waiting for serial data

Serial.println("0,0"); // send a starting message

delay(300); // wait 1/3 second

digitalWrite(LED_BUILTIN, LOW);

delay(50);

}

}

void loop() {

// wait for data from p5 before doing something

while (Serial.available()) {

digitalWrite(LED_BUILTIN, HIGH); // led on while receiving data

int ledVal = Serial.parseInt();

if (Serial.read() == '\n') {

digitalWrite(led, ledVal);

delay(5);

}

}

digitalWrite(LED_BUILTIN, LOW);

int reading = analogRead(sensor); // read the potentiometer on pin A0

Serial.println(reading); // send it to p5.js, which maps it to wind.x

}

Finally, here are the videos of the final product (two of them, demonstrating both the analog input and the digital output):

1. https://drive.google.com/file/d/1TcwYwz7HcyUobzH0MwLQ3P1pbf2rw8BR/view?usp=sharing

2. https://drive.google.com/file/d/1Ydz9OjHuqt8VPypLTQhBYDtB-ShDBGmk/view?usp=sharing

VOILÀ!