The Concept:

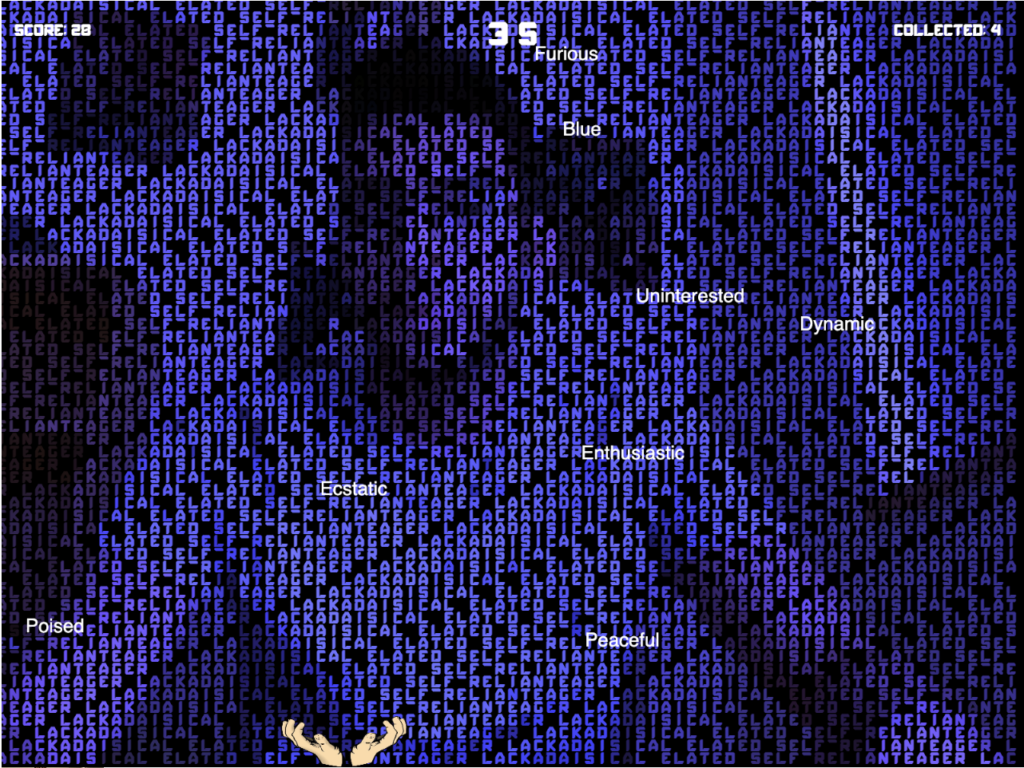

In this engaging “Chromamood” game, players take control of a catching hand, moving it through the game screen width. The objective is to collect words falling from the top of the screen. These words encapsulate various human moods, such as “happy,” “calm,” and “excited.” But the interesting part of the game lies in its categorization system. These words are neatly organized into distinct categories, with each category intimately linked to a unique display color and an associated score. As players adeptly gather these words, they trigger a series of cascading effects. The real magic happens as the words are seamlessly woven into the live video feed, enhancing the text display in the most visually enthralling way possible. The text dynamically updates, with its color shifting harmoniously, reflecting the precise word that the player has successfully captured.

Why “ChromaMood”?

The name “ChromaMood” is a fusion of two essential elements in the game. “Chroma” relates to color and represents the dynamic background shifts triggered by the words collected, where each mood category is associated with a distinct color. “Mood” encapsulates the central theme of the game, which revolves around collecting words that express various human emotions and moods

How the project works:

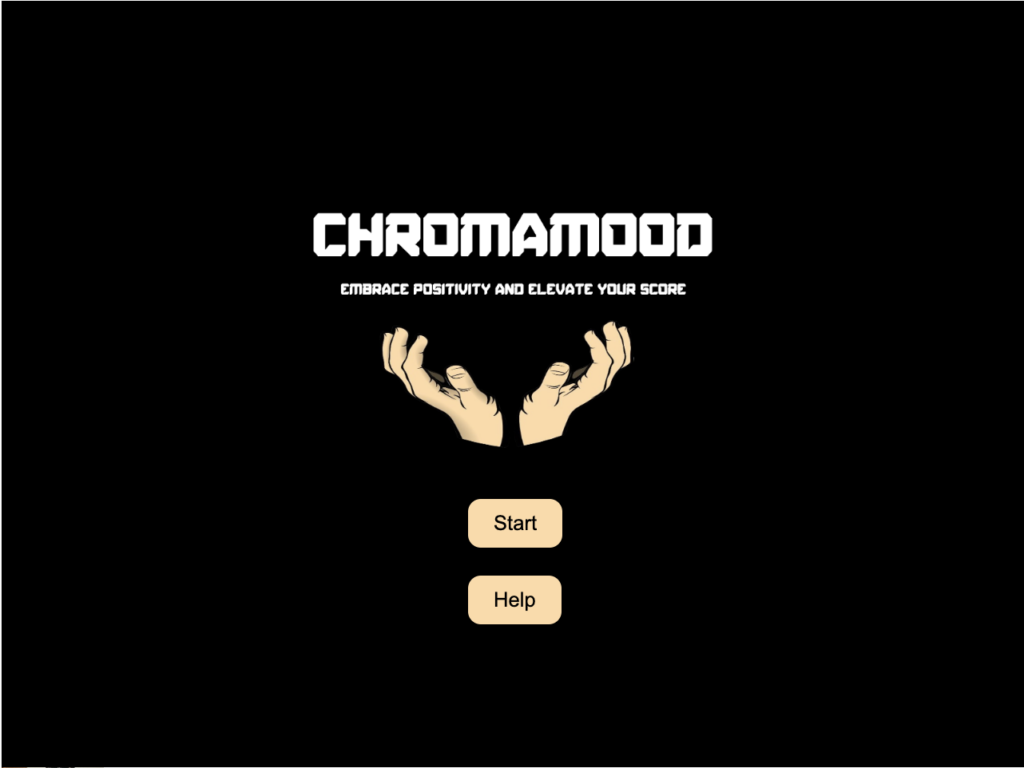

The game starts on a welcoming screen with the title “ChromaMood” and two options: “start” and “help.” Clicking “start” leads you to the main game screen. Here, you’ll see a hand-shaped object set against a black background, with words falling from the top. The game’s twist is in the words you catch. Each word represents a different mood, like “happy” or “angry,” and it impacts the background and your score. For example, catching words associated with anger turns the background red and gives you a score of 2. The words on the background change too. The goal is to get the highest score by collecting words that reflect positive moods within a limited time.

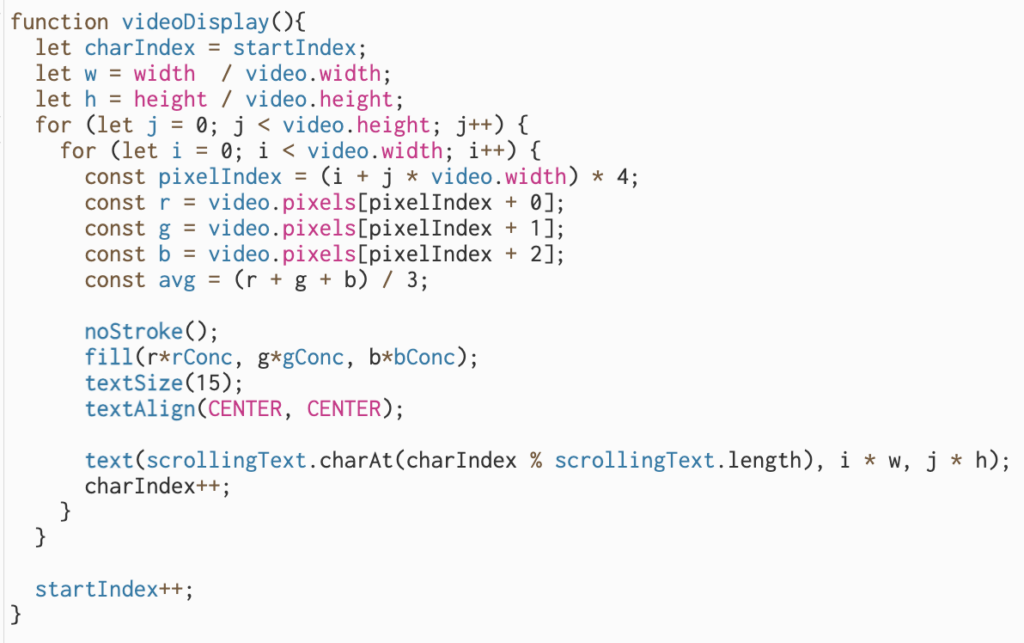

Code Highlight I am proud of:

The part of the game I am proud of is developing the video display background as I had no idea of how to do it. It required somehow intricate coding to seamlessly integrate dynamic text updates with the live video feed, ensuring a cohesive and engaging user experience. The idea is that I am dealing with the sketch as pixels. So, I update the pixels with every character of the words the player catches. Honestly, it was challenging for me to do it but I watched some tutorials and managed to figure it out.

Sketch:

The video and sound effects are not working in the embedded iframe below. Open it on P5JS editor for better experience.

Soma areas of improvement:

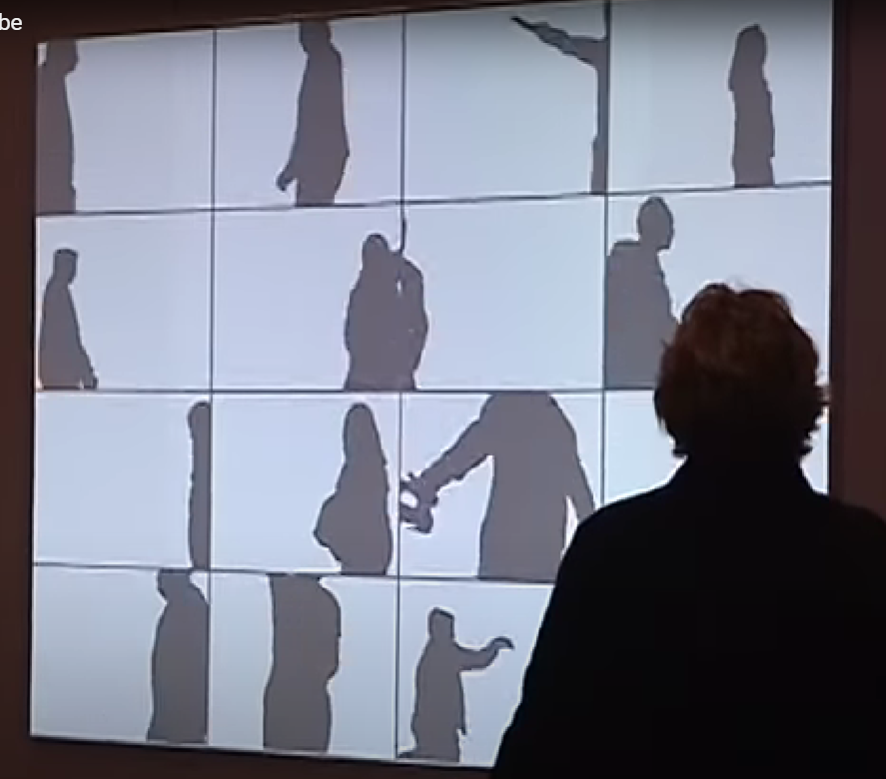

A potential enhancement to consider is shifting the way we control the hand object. Instead of relying on traditional keyboard controls, we could explore the possibility of tracking the player’s movements in the live video feed. By doing so, we could introduce a new level of interactivity, allowing players to physically interact with the game. This change not only simplifies the game’s control mechanics but also aligns perfectly with its fundamental concept. It would create a more immersive experience, where players can use their own motions to catch the falling words, making the game even more engaging and unique.