I started this project to emulate the card-scanning excitement of Yu-Gi-Oh duel disks, in which tapping cards summons monsters and spells. Users present one or more RFID tags, each representing a cowboy, astronaut or alien, to an MFRC522 reader connected to an Arduino Uno. The first tap opens a five-second selection window, after which the system launches one of three interactive mini-games in p5.js: Stampede, Shooter or Cookie Clicker. All visual feedback appears on a browser canvas, and audio loops accompany each game.

In Stampede you take the helm of a lone rider hurtling through a hazardous space canyon, dodging bouncing rocks and prickly cacti that can slow or shove you backwards, all while a herd of cosmic cows closes in on your tail. Shooter throws two players into a tense standoff: each pilot manoeuvres left and right, firing lasers at their opponent and scrambling to raise shields against incoming beams until one side breaks. Cookie Clicker is pure, frenzied fun: each participant pounds the mouse on a giant on-screen cookie for ten frantic seconds, racing to rack up the most clicks before time runs out.

Components

The solution comprises four principal components:

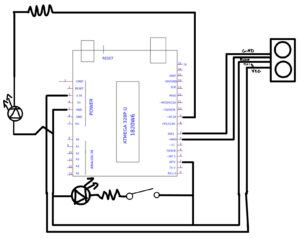

- RFID Input Module: An MFRC522 reader attached to an Arduino Uno captures four-byte UIDs from standard MIFARE tags.

- Serial Bridge: The Arduino transmits single-character selection codes (‘6’, ‘7’ or ‘8’) at 9600 baud over USB and awaits simple score-report messages in return.

- p5.js Front End: A browser sketch employs the WebSerial API to receive selection codes, manage global state and asset loading, display a five-second combo bar beneath each character portrait, and execute the three mini-game modules.

- Mechanical Enclosure: Laser-cut plywood panels, secured with metal L-brackets, form a cuboid housing; a precision slot allows the 16×2 LCD module to sit flush with the front panel.

Hardware Integration

The MFRC522 reader’s SDA pin connects to Arduino digital pin D10 and its RST pin to D9, while the SPI lines (MOSI, MISO, SCK) share the hardware bus. In firmware, the reader is instantiated via “MFRC522 reader(SS_PIN, RST_PIN);” and a matchUID() routine compares incoming tags against the three predefined UID arrays.

Integrating a standard 16×2 parallel-interface LCD alongside the RFID module proved significantly more troublesome. As soon as “lcd.begin(16, 2)” was invoked in setup(), RFID reads ceased altogether. Forum guidance indicated that pin conflicts between the LCD’s control lines and the RC522’s SPI signals were the most likely culprit. A systematic pin audit revealed that the LCD’s Enable and Data-4 lines overlapped with the RFID’s SS and MISO pins. I resolved this by remapping the LCD to use digital pins D2–D5 for its data bus and D6–D7 for RS/Enable, updating both the wiring and the constructor call in the Arduino sketch.

P5.js Application and Mini-Games

The browser sketch orchestrates menu navigation, character selection and execution of three distinct game modules within a single programme.

A single “currentState” variable (0–3) governs menu, Stampede, Shooter and Cookie Clicker modes. A five-second “combo” timer begins upon the first tag read, with incremental progress bars drawn beneath each portrait to visualise the window. Once the timer elapses, the sketch evaluates the number of unique tags captured and transitions to the corresponding game state.
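The combo window described above could be sketched roughly as follows. This is a minimal illustration rather than the project's actual code: every name except the 0–3 state numbering is hypothetical, and the mapping from tag count to game (one tag for Stampede, two for Shooter, three for Cookie Clicker) is an assumption.

```javascript
// Hypothetical names; only the 0-3 state numbering and the five-second
// window come from the write-up.
const COMBO_WINDOW_MS = 5000;
const STATE = { MENU: 0, STAMPEDE: 1, SHOOTER: 2, COOKIE_CLICKER: 3 };

function createCombo() {
  return { startedAt: null, tags: new Set() };
}

// Record a tag code; the first read starts the timer.
// Using a Set means repeated reads of the same tag count once.
function registerTag(combo, code, nowMs) {
  if (combo.startedAt === null) combo.startedAt = nowMs;
  combo.tags.add(code);
}

// Fraction of the window elapsed, clamped to [0, 1], for the progress bars.
function comboProgress(combo, nowMs) {
  if (combo.startedAt === null) return 0;
  return Math.min((nowMs - combo.startedAt) / COMBO_WINDOW_MS, 1);
}

// ASSUMPTION: 1 unique tag -> Stampede, 2 -> Shooter, 3 -> Cookie Clicker.
// Stays in the menu until the window has fully elapsed.
function resolveState(combo, nowMs) {
  if (comboProgress(combo, nowMs) < 1) return STATE.MENU;
  const byCount = [STATE.MENU, STATE.STAMPEDE, STATE.SHOOTER, STATE.COOKIE_CLICKER];
  const next = byCount[combo.tags.size];
  return next === undefined ? STATE.MENU : next;
}
```

In a p5.js sketch, `nowMs` would typically come from `millis()`, and `comboProgress()` would scale the width of each bar.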

Merging three standalone games into one sketch turned out to be quite the headache. Each mini-game had its own globals (things like score, stage and bespoke input handlers), which clashed as soon as I tried to switch states. To sort that out, I prefixed every variable with its game name (stampedeScore, sh_p1Score, cc_Players), wrapped them in module-specific functions and kept the global namespace clean.

The draw loop needed a rethink, too. Calling every game’s draw routine in sequence resulted in stray graphics popping up when they shouldn’t. I restructured draw() into a clear state machine, so that only the active module’s draw function runs each frame. That meant stripping out stray background() calls and rogue translate()s from the individual games so they couldn’t bleed into one another.
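One way to express that per-frame dispatch is a lookup table keyed by state, sketched below. The `makeDrawDispatcher` helper and the shape of the module table are hypothetical; in the real sketch each entry would be a game's own p5.js draw routine.

```javascript
// Hypothetical helper: builds a function that runs exactly one module's
// draw routine per frame, chosen by the current state number.
function makeDrawDispatcher(modules) {
  return function drawFrame(currentState) {
    const active = modules[currentState];
    if (!active) throw new Error(`Unknown state: ${currentState}`);
    active.draw(); // no other module draws this frame
    return active.name;
  };
}

// In the p5.js sketch this would be called from draw(), for example:
//   const drawFrame = makeDrawDispatcher({
//     0: { name: "menu", draw: drawMenu },        // drawMenu etc. are
//     1: { name: "stampede", draw: drawStampede } // assumed names
//   });
//   function draw() { drawFrame(currentState); }
```

Throwing on an unknown state is a design choice for this sketch: it surfaces a bad transition immediately instead of silently drawing nothing.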

Finally, unifying input was tricky. I built a single handleInput function that maps RFID codes (‘6’, ‘7’, ‘8’) and key presses to abstract commands (move, shoot, click), then sends them to whichever module is active. A bit of debouncing logic keeps duplicate actions at bay, which is especially critical during that five-second combo window, so you always get predictable, responsive controls.
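A minimal sketch of such an input dispatcher with debouncing is shown below. The 300 ms debounce interval, the `createInputHandler` factory, the `onCommand` method and the particular bindings in the usage note are all assumptions made for illustration; only the handleInput name and the idea of abstract commands come from the project.

```javascript
const DEBOUNCE_MS = 300; // ASSUMED value, not taken from the project

// bindings: a Map from raw input (RFID code or key name) to an abstract command.
function createInputHandler(bindings, debounceMs = DEBOUNCE_MS) {
  const lastSeen = new Map(); // raw input -> time it was last accepted

  return function handleInput(rawInput, nowMs, activeModule) {
    const command = bindings.get(rawInput);
    if (command === undefined) return null; // unmapped input is ignored

    // Drop repeats of the same raw input that arrive too quickly.
    const previous = lastSeen.get(rawInput);
    if (previous !== undefined && nowMs - previous < debounceMs) return null;

    lastSeen.set(rawInput, nowMs);
    activeModule.onCommand(command); // hypothetical module interface
    return command;
  };
}
```

As a usage example, `createInputHandler(new Map([["6", "click"], ["ArrowLeft", "move"]]))` would return a handler that forwards "click" when code ‘6’ arrives and ignores a second ‘6’ received less than 300 ms later.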

The enclosure is constructed from laser-cut plywood panels, chosen for both sustainability and structural rigidity, and finished internally with a white-gloss plastic backing to evoke a sleek, modern aesthetic. Metal L-brackets fasten each panel at right angles, avoiding bespoke fasteners and allowing for straightforward assembly or disassembly. A carefully dimensioned aperture in the front panel accommodates the 16×2 LCD module so that its face sits perfectly flush with the surrounding wood, maintaining clean lines.

Switching between the menu and the individual mini-games initially caused the sketch to freeze on several occasions. Timers from the previous module would keep running, arrays retained stale data and stray transformations lingered on the draw matrix. To address this, I introduced dedicated cleanup routines (resetStampede(), shCleanup() and ccCleanup()) that execute just before currentState changes. Each routine clears its game’s specific variables, halts any looping audio and calls resetMatrix() (alongside any required style resets) so that the next module starts with a pristine canvas.
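The ordering matters here: the outgoing module's cleanup has to run before the state variable changes. A rough sketch of that transition logic follows, where `createStateSwitcher` and the cleanup table are hypothetical and the real cleanup functions would be the project's own resetStampede(), shCleanup() and ccCleanup().

```javascript
// Hypothetical helper: cleanups maps a state number to the cleanup routine
// of the game that owns that state (state 0, the menu, may have none).
function createStateSwitcher(cleanups, initialState = 0) {
  let currentState = initialState;

  return {
    get state() {
      return currentState;
    },
    switchTo(nextState) {
      if (nextState === currentState) return false; // nothing to do
      const cleanup = cleanups[currentState];
      if (cleanup) cleanup(); // runs while the old state is still current
      currentState = nextState;
      return true;
    },
  };
}

// Assumed wiring in the sketch:
//   const switcher = createStateSwitcher({
//     1: resetStampede, 2: shCleanup, 3: ccCleanup,
//   });
//   switcher.switchTo(0); // back to the menu after a game ends
```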

Audio behaviour also demanded careful attention. In early versions, rapidly switching from one game state to another led to multiple tracks playing at once or to music cutting out abruptly, leaving awkward silences. I resolved these issues by centralising all sound control within a single audio manager. Instead of scattering stop() and loop() calls throughout each game’s code, the manager intercepts state changes and victory conditions, fading out the current track and then initiating the next one in a controlled sequence. The result is seamless musical transitions that match the user’s actions without clipping or overlap.
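A centralised manager along these lines might look like the sketch below. It is an illustration under stated assumptions, not the project's code: `createAudioManager`, the half-second fade and the injectable `schedule` parameter are all hypothetical, and track objects are assumed to expose `setVolume(volume, rampSeconds)`, `stop()` and `loop()` in the way p5.sound's SoundFile does.

```javascript
// Hypothetical audio manager: at most one track loops at a time.
// schedule defaults to setTimeout but can be swapped out for testing.
function createAudioManager(schedule = setTimeout, fadeSeconds = 0.5) {
  let current = null;

  return {
    play(next) {
      if (next === current) return; // already the active track
      const previous = current;
      current = next;

      const startNext = () => {
        if (previous) previous.stop();
        // If a later play() call replaced this request, do not start it.
        if (current !== next) return;
        next.setVolume(1);
        next.loop();
      };

      if (previous) {
        previous.setVolume(0, fadeSeconds); // fade the old track out first
        schedule(startNext, fadeSeconds * 1000);
      } else {
        startNext(); // nothing playing, start immediately
      }
    },
  };
}
```

Game code would then call something like `audio.play(stampedeTrack)` on a state change instead of calling stop() and loop() directly, so overlapping requests are resolved in one place.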

The enclosure underwent its own process of refinement. My first plywood panels, cut on a temperamental laser cutter, frequently misaligned: the slot for the LCD was either too tight to accept the module or so loose that it rattled. Over three iterative cuts, I tweaked the slot width, adjusted the alignment tabs and introduced a white-gloss plastic backing. This backing not only conceals the raw wood edges but also delivers a polished, Apple-inspired look. The panels now fit together snugly around the LCD and each other, creating a tool-free assembly that upholds the project’s premium aesthetic.

Future Plans

Looking ahead, the system lends itself readily to new mini-games. A puzzle challenge or a rhythm-based experience, for instance, could build on the existing state framework; each new module would simply plug into the central logic, reusing the asset-loading and input-dispatch infrastructure already in place.

Beyond additional games, implementing networked multiplayer via WebSockets or a library such as socket.io would open the possibility of remote matches and real-time score sharing, transforming the project from a local-only tabletop experience into an online arena. Finally, adapting the interface for touch input would enable smooth operation on tablets and smartphones, extending the user base well beyond desktop browsers.

Conclusion

Working on this tabletop arcade prototype has been both challenging and immensely rewarding. I navigated everything from the quirks of RFID timing and serial communications to the intricacies of merging three distinct games into a single p5.js sketch, all while refining the plywood enclosure for a polished finish. Throughout the “Introduction to Interactive Media” course, I found each obstacle, whether in hardware, code or design, to be an opportunity to learn and to apply creative problem-solving. I thoroughly enjoyed the collaborative atmosphere and the chance to experiment across disciplines; I now leave the class not only with a functional prototype but with a genuine enthusiasm for future interactive projects.