Introduction and Concept

Welcome to Webster! This game was inspired by a very dear friend, a pet actually. You know what they say: “Do not kill that spider in the corner of your room, it probably thinks you are its roommate.” I saw a spider in the corner of a room in my house that we do not usually enter, and I called it Webster.

This project is a labor of love that brings together some really fun game design. The game uses solid physics to simulate gravity and rope mechanics, making our little spider swing through a cave that’s so high it even has clouds! I broke the project into clear, modular functions so every bit of the physics, from gravity pulling our spider down to the rope tension that keeps it swinging, is handled cleanly. This means the spider feels natural and responsive, just like it’s really hanging from a web in a bustling cave (maybe a cave of clouds doesn’t exist IRL, but it’s okay).

On the design side, Webster is all about variety and challenge. The game dynamically spawns clouds, flies, and even bees as you progress, keeping the environment fresh and unpredictable. Randomized placements of these elements mean every playthrough feels unique, and the parallax background adds a nice touch of depth. Inspired by classic spider lore and a bit of Spider-Man magic, the game makes sure you’re always on your toes: eating flies for points and avoiding bees like your life depends on it (well, Webster’s life does).

Enjoy swinging with Webster!

Sketch!

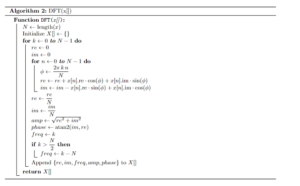

Code Highlights

// --- Physics-related vars & functions ---

// global vars for gravity, rope stiffness, rope rest length, rope anchor, and damping factor

let gravity, ropeK = 0.5, ropeRestLength, ropeAnchor, damping = 1;

function setup() {

createCanvas(640, 480);

gravity = createVector(0, 0.08); // sets constant downward acceleration

}

class Spider {

constructor(x, y) {

this.pos = createVector(x, y); // starting pos

this.vel = createVector(0, 0); // starting vel

this.radius = 15;

this.attached = false; // not attached initially

}

update() {

this.vel.add(gravity); // apply gravity each frame

if (this.attached && ropeAnchor) {

let ropeVec = p5.Vector.sub(ropeAnchor, this.pos); // vector from spider to rope anchor

let distance = ropeVec.mag(); // current rope length

if (distance > ropeRestLength) { // if rope stretched beyond rest length

let force = ropeVec.normalize().mult((distance - ropeRestLength) * ropeK); // calculate tension force

this.vel.add(force); // apply rope tension to velocity

}

}

this.vel.mult(damping); // simulate friction/air resistance

this.pos.add(this.vel); // update position based on velocity

}

}

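This snippet centralizes all physics computations. Gravity is set as a constant downward acceleration in setup() and then applied every frame in the Spider class’s update() method, which makes the spider accelerate downwards. When the spider is attached to a rope and the rope is stretched beyond its rest length, a corrective force proportional to the extra stretch pulls the spider back toward the anchor, which simulates tension. Finally, the velocity is multiplied by damping to mimic friction or air resistance. Note that with damping = 1, as declared here, the multiplication leaves the velocity unchanged, so a value slightly below 1 is needed for the slowdown to take effect.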
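To see the update step in isolation, here is a minimal sketch of the same gravity, tension, and damping sequence written with plain {x, y} objects instead of p5.Vector, so it can run outside p5.js. The function name stepSpider, the options object, and the default damping of 0.99 are assumptions for illustration and are not part of the game’s code.

```javascript
// One physics step for a point mass hanging on a spring-like rope.
// Plain {x, y} objects stand in for p5.Vector (assumption, so this runs without p5.js).
function stepSpider(spider, anchor, opts = {}) {
  // Defaults mirror the snippet above, except damping (0.99 is a hypothetical value).
  const { gravityY = 0.08, ropeK = 0.5, restLength = 100, damping = 0.99 } = opts;

  let vx = spider.vel.x;
  let vy = spider.vel.y + gravityY; // gravity is applied first, as in update()

  if (spider.attached && anchor) {
    const dx = anchor.x - spider.pos.x; // vector from spider to anchor
    const dy = anchor.y - spider.pos.y;
    const distance = Math.hypot(dx, dy); // current rope length
    if (distance > restLength) {
      // Tension grows linearly with how far the rope is overstretched.
      const pull = (distance - restLength) * ropeK;
      vx += (dx / distance) * pull;
      vy += (dy / distance) * pull;
    }
  }

  vx *= damping; // values below 1 bleed off speed each frame
  vy *= damping;

  return {
    pos: { x: spider.pos.x + vx, y: spider.pos.y + vy },
    vel: { x: vx, y: vy },
    attached: spider.attached,
  };
}
```

Returning a new state instead of mutating the spider makes a single frame easy to inspect: feed in a position, an anchor, and a damping value, and compare the resulting velocity with what you expect.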

// --- Spawning Elements Functions ---

// spawnObstacles: checks spider's x pos and adds cloud obs if near last obs; random spacing & y pos

function spawnObstacles() {

if (spider.pos.x + width - 50 > lastObstacleX) { // if spider near last obs, spawn new one

let spacing = random(200, 500); // random gap for next obs

let cloudY = random(height - 50 / 2, height + 1 / 2); // random vertical pos for cloud; without parentheses this evaluates to random(height - 25, height + 0.5)

obstacles.push({ // add new cloud obs obj

x: lastObstacleX + 500, // x pos offset from last obs

y: cloudY, // y pos of cloud

w: random(80, 150), // random width

h: 20, // fixed height

type: "cloud", // obs type

baseY: cloudY, // store base y for wobble effect

wobbleOffset: random(100000) // random wobble offset for animation

});

lastObstacleX += spacing; // update last obs x pos

}

}

// spawnWorldElements: calls spawnObstacles then spawns collectibles (flies/webPower) and enemies (bees)

// based on frame count and random chance, spawning them ahead of spider for dynamic environment growth

function spawnWorldElements() {

spawnObstacles(); // spawn cloud obs if needed

if (frameCount % 60 === 0 && random() < 0.6) { // every 60 frames, chance to spawn collectible

collectibles.push({

x: spider.pos.x + random(width, width + 600), // spawn ahead of spider

y: random(50, height + 500), // random vertical pos

type: random() < 0.7 ? "fly" : "webPower" // 70% fly, else webPower

});

}

if (frameCount % 100 === 0 && random() < 0.7) { // every 100 frames, chance to spawn enemy

enemies.push({

x: spider.pos.x + random(width, width + 600), // spawn ahead of spider

y: random(100, height + 500), // random vertical pos

speed: random(2, 4) // random enemy speed

});

}

}

This snippet groups all spawning logic for environment elements. The spawnObstacles() function checks if the spider is near the last obstacle’s x coordinate and, if so, adds a new cloud obstacle with a randomized vertical position and width (the height is fixed), then advances the last obstacle position by a random spacing. Then spawnWorldElements() calls this function and also adds collectibles and enemies (bees) ahead of the spider based on frame counts and random chances, to ensure a dynamic and ever-changing environment.
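The frame-count-plus-chance check used for collectibles and bees can be sketched on its own, which also makes the resulting spawn rates easy to work out: a 0.6 chance every 60 frames averages one collectible per 100 frames, and a 0.7 chance every 100 frames averages roughly one bee per 143 frames. The helper names below (shouldSpawn, averageFramesPerSpawn, countSpawns) are hypothetical and not part of the game; Math.random stands in for p5’s random() so the sketch runs outside p5.js.

```javascript
// Returns true on frames where a spawn should happen.
// rng is injectable (defaults to Math.random) so the check can be tested deterministically.
function shouldSpawn(frameCount, interval, chance, rng = Math.random) {
  return frameCount % interval === 0 && rng() < chance;
}

// Average number of frames between spawns for a given interval and chance.
function averageFramesPerSpawn(interval, chance) {
  return interval / chance;
}

// Counts how many spawns happen over a span of frames with a given random source.
function countSpawns(totalFrames, interval, chance, rng = Math.random) {
  let count = 0;
  for (let frame = 1; frame <= totalFrames; frame++) {
    if (shouldSpawn(frame, interval, chance, rng)) count++;
  }
  return count;
}
```

Tuning difficulty then comes down to two numbers per element type: lower the interval or raise the chance to make flies or bees show up more often.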

Problems I faced (there were many)

There were quite a few quirky issues along the way. One problem was with collision detection: sometimes the spider would jitter when bouncing off clouds or not land smoothly, which made the swing feel less natural. And then there was that pesky web projectile bug where the projectile would linger or vanish unexpectedly if the input timing wasn’t just right, which threw off the feel of shooting a web.
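For reference, a common way to test a round spider against a rectangular cloud is the nearest-point check sketched below. This is a generic technique, not necessarily what Webster uses, and it assumes that a cloud’s x and y mark its top-left corner, which the post does not state. The function name circleHitsRect is hypothetical.

```javascript
// Circle vs axis-aligned rectangle overlap test.
// Assumes rect.x / rect.y are the rectangle's top-left corner (assumption, not from the game).
function circleHitsRect(cx, cy, r, rect) {
  // Clamp the circle's center to the rectangle to find the nearest point on it.
  const nearestX = Math.max(rect.x, Math.min(cx, rect.x + rect.w));
  const nearestY = Math.max(rect.y, Math.min(cy, rect.y + rect.h));

  // The shapes overlap when that nearest point lies within the circle's radius.
  const dx = cx - nearestX;
  const dy = cy - nearestY;
  return dx * dx + dy * dy <= r * r;
}
```

With the spider’s radius of 15 and a cloud at { x: 100, y: 200, w: 100, h: 20 }, a spider centered at (150, 190) counts as touching the cloud, while one at (150, 150) does not.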

Another area for improvement is enemy behavior. Bees, for example, sometimes weren’t as aggressive as I’d like, so their collision detection could be sharpened to ramp up the challenge. I also ran into occasional delays in sound effects triggering properly, especially when multiple actions happened at once, which reminded me that asset management in p5.js can be a bit finicky.

Another hiccup was with the custom font. Initially, every character was coming out as a single letter because of font issues. When I switched the font file from .ttf to .otf, it worked out for some reason.

I also had a lot of problems with the cloud spawning logic. Sometimes clouds would spawn right under the spider, which prevented it from swinging because it could not gain any horizontal velocity. This was a PAIN to solve: I tried every complicated approach and none of them worked, but the solution turned out to be simple. I only had to add a constant (I chose 500) to the spawn x coordinate of each cloud. YES! It was that simple, but that part alone took me around 3 hours.
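The constant-offset fix can be written as a tiny pure function, sketched here outside p5.js. The first argument pair reflects the fix described above (last obstacle x plus 500). The extra clamp against the spider’s own position, along with the names cloudSpawnX and minLead and the value 200, is a hypothetical safeguard added for illustration and is not part of the game.

```javascript
// Picks the x coordinate for the next cloud.
// offset = 500 is the constant from the fix described above.
// minLead is a hypothetical extra guard: never spawn closer than this ahead of the spider.
function cloudSpawnX(lastObstacleX, spiderX, offset = 500, minLead = 200) {
  return Math.max(lastObstacleX + offset, spiderX + minLead);
}
```

For example, at the start of a run with the last obstacle at x = 0 and the spider at x = 100, the next cloud lands at x = 500, well clear of the spider.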

All in all, while Webster is a fun ride, these little details offer plenty of room to refine the game even further!