Inspiration:

For this week’s project, the main inspiration for our instrument was Stromae’s song “Alors on Danse”. We were mainly inspired by the way the song’s main melody is split into three notes of varying pitches, with one sound constantly playing in the background. For that reason, we varied the pitches of the three sounds our project produces and added a fourth note that plays continuously once the button is pressed.

Concept:

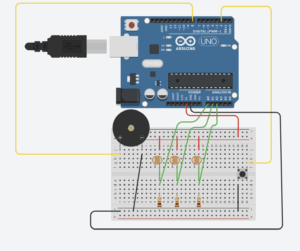

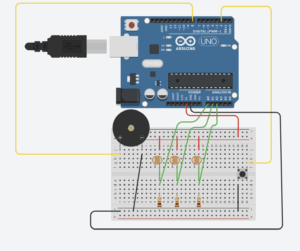

For this week’s project, we used three light sensors to play notes on the piezo speaker, with one additional note played continuously when a button is clicked. A note is played when the user’s finger covers a sensor: the sketch compares each sensor’s reading to a threshold we defined, and a reading below the threshold counts as a tap. Each of the three sensors plays a different pitch. We also added a button that starts the continuous note on the piezo speaker and stops it when it is pressed again.
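The tap condition described above can be sketched on its own as a small helper. This is only an illustration: `TapDetector` and its member names are hypothetical and do not appear in our sketch, which keeps the same state in the `prevPhoto` array instead.

```cpp
// Hypothetical helper (not part of the original sketch): reports a tap only on
// the reading where a sensor goes from uncovered to covered.
struct TapDetector {
  bool wasCovered = false;  // state remembered from the previous reading

  bool update(int reading, int threshold) {
    bool covered = reading < threshold;    // a covered sensor gives a lower reading
    bool tapped = covered && !wasCovered;  // true only on the change to "covered"
    wasCovered = covered;
    return tapped;
  }
};
```

Holding a finger on the sensor therefore produces one tap, and a new tap is only possible after the sensor has been uncovered again.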

Code:

int photoPins[3] = {A0, A1, A2}; // array holding the analog pins of the three light sensors

int buttonPin = 2; // digital pin 2 for the button

int piezzoPin = 8; // digital pin 8 for the piezo speaker

int threshold = 700; // light/no-light threshold that worked with our light sensors in our environment/lighting

bool prevPhoto[3] = {false, false, false}; // whether each light sensor was covered on the previous loop; initially false

bool prevButton = false; // whether the button was pressed on the previous loop; initially false

bool buttonState = false; // whether the continuous note is toggled on; initially false

void setup() {

pinMode(buttonPin, INPUT_PULLUP); // button pin as an input with the internal pull-up, so it reads LOW while pressed

pinMode(piezzoPin, OUTPUT); // buzzer pin as an output so the Arduino sends the sound signal

Serial.begin(9600); // serial monitor for debugging

}

void loop() {

for (int i = 0; i < 3; i++) { // loop over the 3 sensors to read their analog values

int value = analogRead(photoPins[i]);

bool tapped = value < threshold; // a reading below the threshold means the sensor is covered

if (tapped && !prevPhoto[i]) { // play only when the sensor has just been covered (it was uncovered on the previous loop)

if (i == 0) tone(piezzoPin, 440, 200); // sensor on A0: 440 Hz (A4) for 200 ms

if (i == 1) tone(piezzoPin, 523, 200); // sensor on A1: 523 Hz (C5) for 200 ms

if (i == 2) tone(piezzoPin, 659, 200); // sensor on A2: 659 Hz (E5) for 200 ms

}

prevPhoto[i] = tapped; // remember the state so that holding a finger on the sensor gives a single note rather than a repeated one

Serial.print(value); // print the reading for debugging

Serial.print(",");

}

bool pressed = digitalRead(buttonPin) == LOW; // with INPUT_PULLUP the pin reads LOW while the button is pressed

if (pressed && !prevButton) { // the button has just gone from not pressed (false) to pressed (true)

buttonState = !buttonState; // toggle the continuous note

if (buttonState) tone(piezzoPin, 784); // if toggled on, play a continuous G5 tone

else noTone(piezzoPin); // otherwise stop the buzzer

}

prevButton = pressed;

Serial.println(pressed ? "1" : "0"); // for monitoring purposes

delay(50); // short delay

}
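One thing to note about the code above: as far as we understand `tone()`, a timed call such as `tone(piezzoPin, 440, 200)` on the same pin replaces the continuous G5 tone, and the speaker then goes silent after 200 ms even though `buttonState` is still true. A possible way around this, sketched under that assumption, is to decide on every loop which frequency should be sounding. `frequencyToPlay`, `NOTE_MS` and `DRONE_HZ` are hypothetical names that are not in our sketch.

```cpp
// Hypothetical helper (not part of the original sketch): returns the frequency
// in Hz that the speaker should be playing at time nowMs, or 0 for silence.
const unsigned long NOTE_MS = 200;  // length of a tapped note, as in the sketch
const unsigned int DRONE_HZ = 784;  // the continuous G5 note

unsigned int frequencyToPlay(bool droneOn, unsigned int noteHz,
                             unsigned long noteStartMs, unsigned long nowMs) {
  // A tapped note takes priority for NOTE_MS after it starts.
  bool noteActive = noteHz != 0 && (nowMs - noteStartMs) < NOTE_MS;
  if (noteActive) return noteHz;
  // Otherwise fall back to the drone if it is toggled on.
  return droneOn ? DRONE_HZ : 0;
}
```

In `loop()`, the result could be passed to `tone(piezzoPin, f)` when it is non-zero and to `noTone(piezzoPin)` when it is 0, with `millis()` supplying the time values. We have not tested this on the hardware.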

Disclaimer: Some AI/ChatGPT was used to help with debugging and allowing multiple elements to work cohesively.

More Specifically:

1- For debugging, printing whether the button is pressed to the serial monitor (this line: Serial.println(pressed ? "1" : "0"); //for monitoring purposes)

2- Recommended frequency values in hertz to mimic Alors on Danse, i.e. the SECOND parameter of each tone() call (if (i == 0) tone(piezzoPin, 440, 200); if (i == 1) tone(piezzoPin, 523, 200); if (i == 2) tone(piezzoPin, 659, 200);)
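For reference, the frequencies used in the code (440, 523, 659 and 784 Hz) match the notes A4, C5, E5 and G5 in standard equal temperament with A4 tuned to 440 Hz. A minimal sketch of that formula is below; `noteFrequency` is a hypothetical helper, not something from our sketch, and the MIDI note numbers are used only as a convenient way to count semitones.

```cpp
#include <math.h>

// Hypothetical helper: frequency in Hz of a MIDI note number in equal
// temperament, with A4 (MIDI note 69) tuned to 440 Hz.
double noteFrequency(int midiNote) {
  // Each semitone multiplies the frequency by 2^(1/12).
  return 440.0 * pow(2.0, (midiNote - 69) / 12.0);
}
```

Rounded to whole hertz, notes 69 (A4), 72 (C5), 76 (E5) and 79 (G5) give the four values passed to `tone()`.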

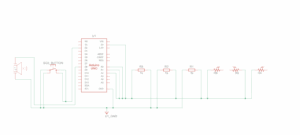

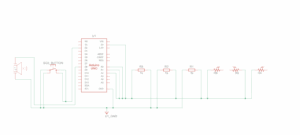

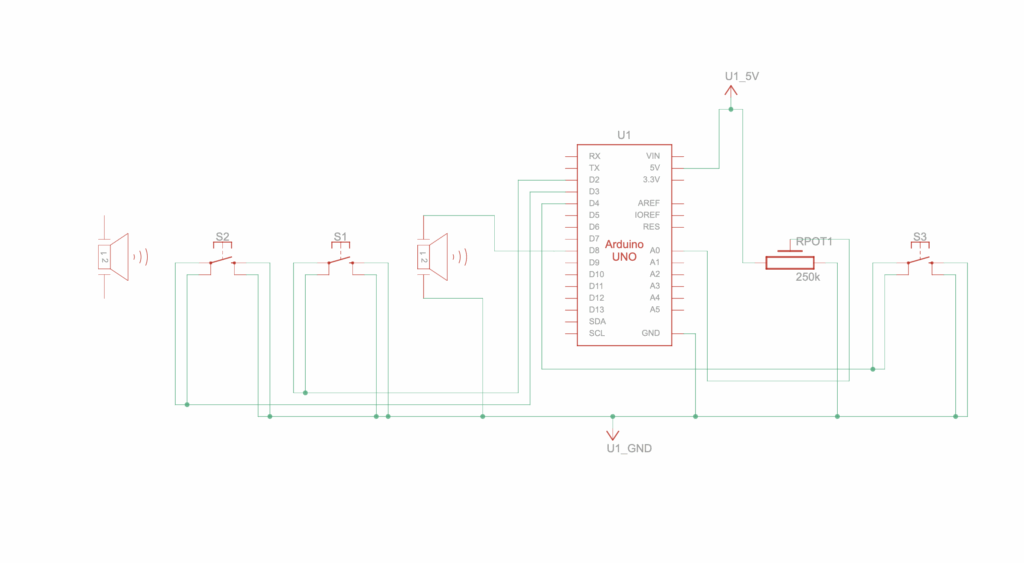

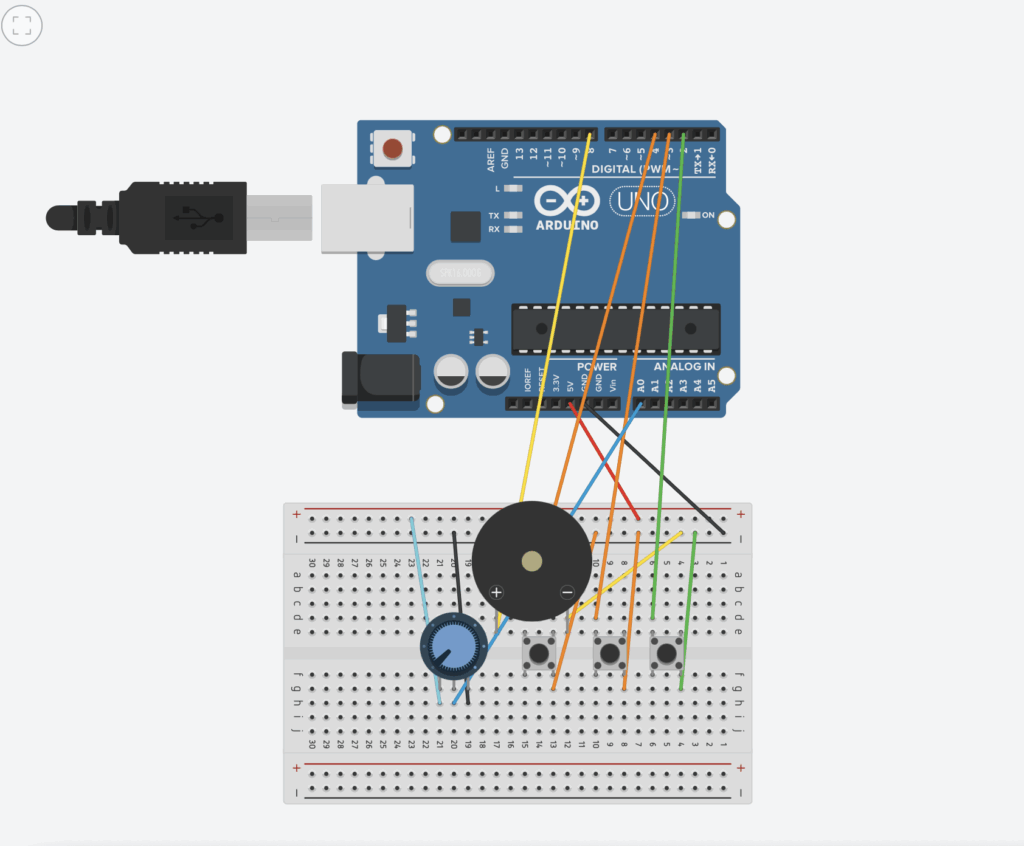

Schematic:

Demo:

IMG_8360

Future Improvements:

As for future improvements, one main thing we wanted to capture in this project was being able to overlap the sounds, but since we were working with a single piezo speaker, which can only play one tone at a time, we were not able to do that. To address this, we aim to learn how to play the sounds from our laptops instead of the physical speaker connected to the Arduino. Another improvement could be exploring how to incorporate different instrument sounds to create an orchestra-like instrument.
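As a possible starting point for the laptop idea, the sketch already prints one line per loop over serial in the form `value,value,value,pressed` (for example `812,455,790,1`). A program on the laptop could read those lines and decide which sounds to play. Below is a minimal sketch of just the parsing step, assuming that line format; `Frame` and `parseFrame` are hypothetical names, and opening the serial port and playing audio are not shown.

```cpp
#include <sstream>
#include <string>

// Hypothetical container for one line of serial output from the Arduino.
struct Frame {
  int light[3];  // the three light sensor readings
  bool pressed;  // true when the last field is 1
};

// Parses a line such as "812,455,790,1". Returns false if the line is incomplete.
bool parseFrame(const std::string& line, Frame& out) {
  std::istringstream in(line);
  char comma = 0;
  int button = 0;
  for (int i = 0; i < 3; i++) {
    // Each reading must be followed by a comma.
    if (!(in >> out.light[i] >> comma) || comma != ',') return false;
  }
  if (!(in >> button)) return false;
  out.pressed = (button == 1);
  return true;
}
```

With the readings available on the laptop, several sounds could in principle be mixed there, which the single piezo speaker cannot do.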

Figure 2 (Circuit design with code and simulation on “Magnificent Jaiks” by Abdelrahman)
