Concept:

For this week’s assignment to create an unusual switch, I built a jaw-activated LED circuit that lights up a red LED when the user opens or closes their mouth. The switch consists of two contact plates that complete the circuit when pressed together.

To construct this, I repurposed old highlighter tubes and wood pieces to create a frame that holds the contact plates. I attached this frame to a headband, which positions the mechanism alongside the user’s jaw when worn. When the headband is in place and the wearer moves their jaw (opening or closing their mouth), the jaw movement pushes the contact plates together. This completes the circuit and activates the red LED.

The design essentially turns jaw movement into a hands-free switch – the mechanical motion of opening and closing your mouth becomes the trigger for the electronic circuit. The highlighter tubes and wood pieces work as the structural components that translate the jaw’s movement into the pressing motion needed to activate the switch.

Implementation:

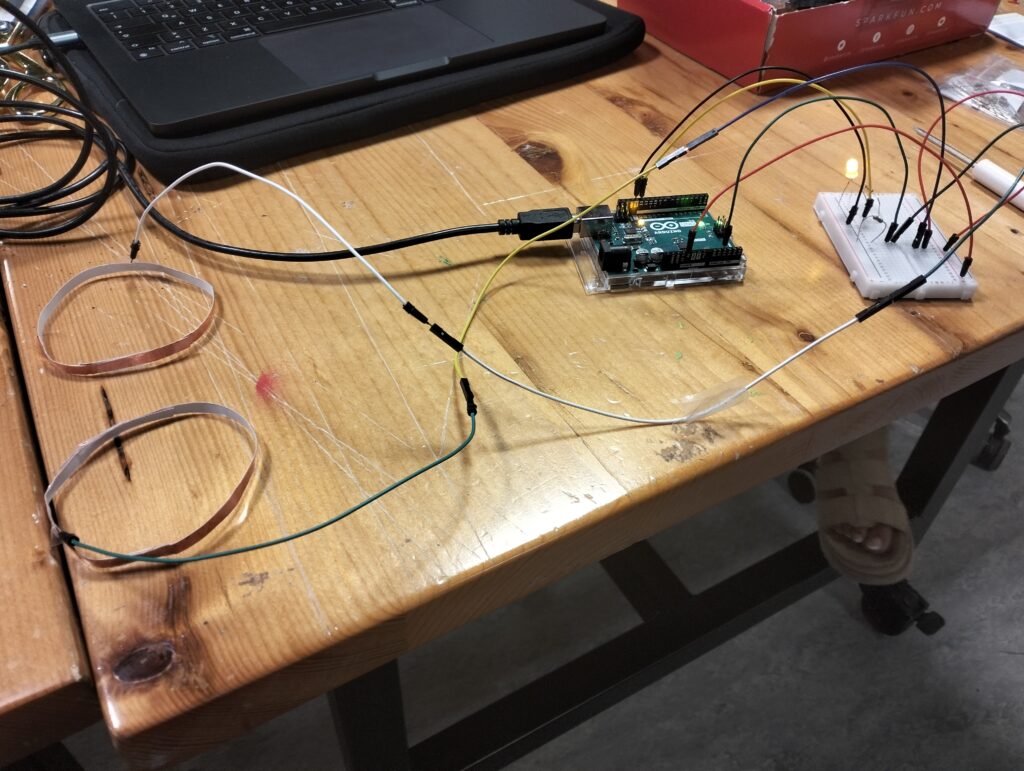

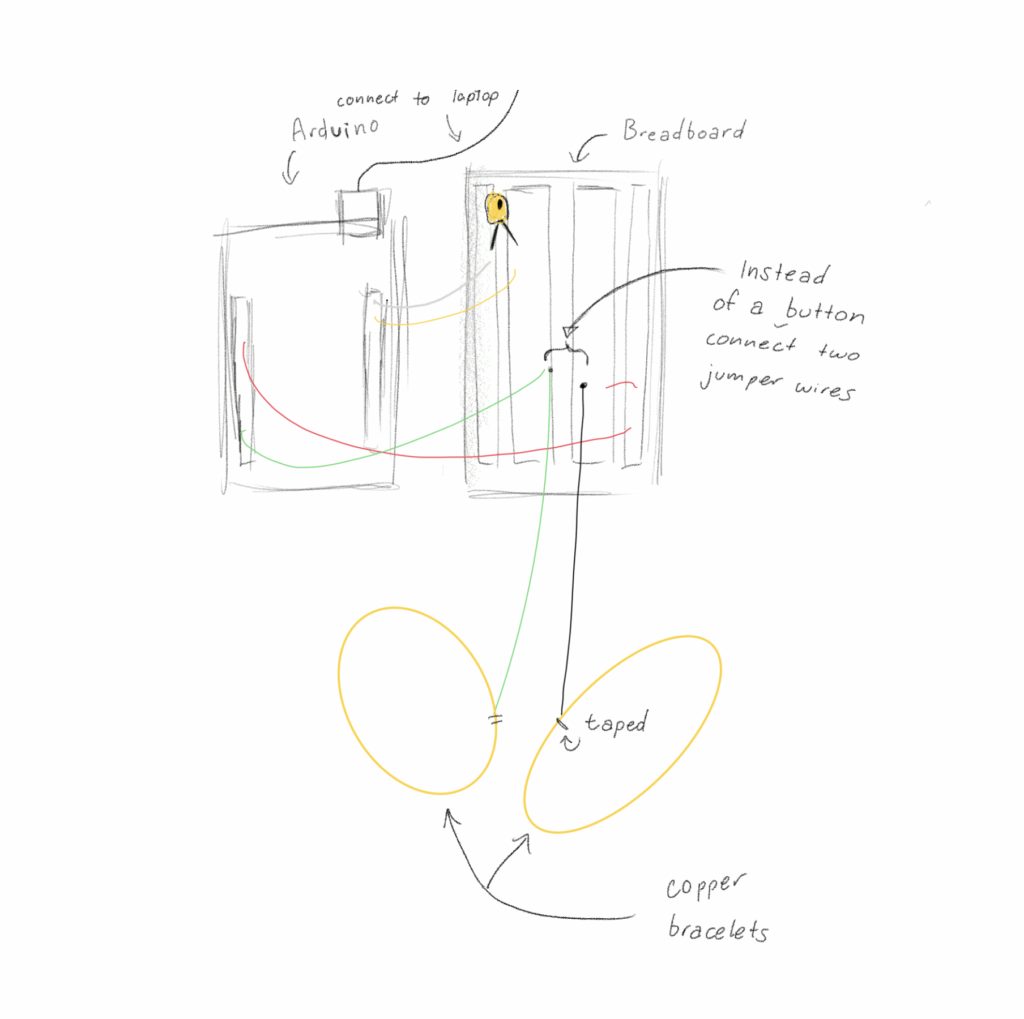

My Arduino circuit uses two pushbuttons to control an LED. The buttons are connected to digital pins 2 and 3, one per pin, each with a 10 kΩ pull-down resistor that holds the input LOW while the button is not pressed. Pressing a button pulls its pin HIGH, and the Arduino then turns ON the LED on pin 13, which is wired through a 330 Ω current-limiting resistor. The LED’s short leg is connected to ground, and the red and blue rails on the breadboard distribute 5 V and GND from the Arduino. The LED lights up when either button is pressed, showing how digital inputs and outputs can be combined to build simple interactive circuits.
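As a rough check on the resistor values above, here is a back-of-the-envelope estimate of the currents involved. It assumes the output pin sits at about 5 V and that the red LED drops roughly 2 V; the forward voltage is an assumed typical value, not something I measured.

```latex
\begin{align*}
I_{\text{LED}} &= \frac{V_{\text{pin}} - V_f}{R}
  \approx \frac{5\,\text{V} - 2\,\text{V}}{330\,\Omega}
  \approx 9.1\,\text{mA} \\
I_{\text{pull-down}} &= \frac{5\,\text{V}}{10\,\text{k}\Omega} = 0.5\,\text{mA}
\end{align*}
```

So the LED should get about 9 mA, and each pressed button draws only about half a milliamp through its pull-down resistor.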

Code:

const int button1Pin = 2;  // first pushbutton, with 10 kΩ pull-down
const int button2Pin = 3;  // second pushbutton, with 10 kΩ pull-down
const int ledPin = 13;     // LED through a 330 Ω resistor

void setup() {
  // External pull-down resistors are used, so plain INPUT mode is enough.
  pinMode(button1Pin, INPUT);
  pinMode(button2Pin, INPUT);
  pinMode(ledPin, OUTPUT);
}

void loop() {
  int button1State = digitalRead(button1Pin);
  int button2State = digitalRead(button2Pin);

  // With pull-down wiring, a pressed button reads HIGH.
  if (button1State == HIGH || button2State == HIGH) {
    digitalWrite(ledPin, HIGH);
  } else {
    digitalWrite(ledPin, LOW);
  }
}

This Arduino code controls an LED using two pushbuttons. It first defines constants for each pin: button 1 on pin 2, button 2 on pin 3, and the LED on pin 13. In setup(), pinMode() sets the two button pins as inputs so they can read signals, and the LED pin as an output so it can be turned ON or OFF. In loop(), the program continuously reads each button’s state with digitalRead(), storing the results in button1State and button2State. The if statement checks whether either button is pressed. Because the circuit uses pull-down resistors, a pressed button reads HIGH and a released one reads LOW; with pull-up wiring the logic would be inverted. If one or both buttons are pressed, the LED turns ON with digitalWrite(ledPin, HIGH); if neither is pressed, it turns OFF with digitalWrite(ledPin, LOW). This creates a simple interactive system where pressing either button lights the LED.
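Whether a pressed button reads HIGH or LOW depends on the wiring, so here is a small sketch of the same "either button" check with the active level passed in explicitly. The names kHigh, kLow, isPressed and anyPressed are hypothetical helpers I made up for illustration; they are not part of the Arduino API, and the two constants just mirror the usual values of HIGH and LOW.

```cpp
// Hypothetical helpers (not Arduino API): decide whether the LED should be on,
// given two raw pin readings and the level that means "pressed".
constexpr int kHigh = 1;  // assumed to match Arduino's HIGH
constexpr int kLow = 0;   // assumed to match Arduino's LOW

// A button counts as pressed when its reading equals the active level:
//   pull-down wiring (as in this circuit) -> activeLevel = kHigh
//   pull-up wiring (e.g. INPUT_PULLUP)    -> activeLevel = kLow
constexpr bool isPressed(int reading, int activeLevel) {
  return reading == activeLevel;
}

// True when at least one of the two buttons is pressed.
constexpr bool anyPressed(int reading1, int reading2, int activeLevel) {
  return isPressed(reading1, activeLevel) || isPressed(reading2, activeLevel);
}

// Possible use inside loop() on the board (shown as a comment because it
// needs the Arduino core):
//   bool on = anyPressed(digitalRead(button1Pin), digitalRead(button2Pin), HIGH);
//   digitalWrite(ledPin, on ? HIGH : LOW);
```

Keeping the active level in one place means that switching from external pull-down resistors to the internal pull-ups would only require changing that one argument, not the comparison logic.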

Demo:

IMG_8130

Reading Reflection:

Both readings tell a story about why good design literally saves lives. Margaret Hamilton was programming the Apollo missions in the 1960s, juggling motherhood with rocket science – bringing her 4-year-old daughter Lauren to MIT on weekends while she coded. One day, Lauren was playing with the simulator and accidentally crashed it by hitting P01, a program that wasn’t supposed to run during flight. Hamilton immediately thought “we need to protect against this,” but NASA shut her down: “We had been told many times that astronauts would not make any mistakes… They were trained to be perfect”. Because stressed-out humans hurtling through space in a tin can are definitely going to be perfect. Sure enough, during Apollo 8, astronaut Jim Lovell accidentally selected P01 and wiped out all their navigation data. Hamilton’s backup procedures saved the day, but the whole mess could’ve been avoided if NASA had listened to her in the first place about building in safeguards.

This is exactly what Don Norman talks about in his design article – you can’t just assume people won’t make mistakes, especially under pressure. He points out that “products designed for use under stress follow good human-centered design, for stress makes people less able to cope with difficulties and less flexible in their approach to problem solving.” Hamilton understood this intuitively. She knew that even brilliant, highly trained astronauts are still human. Norman also argues that “attractive things work better” because when we’re in a good mental state, we’re actually better at problem-solving – “positive affect makes people more tolerant of minor difficulties and more flexible and creative in finding solutions.” The flip side is that badly designed systems become even worse under stress. The Apollo story shows how one woman’s insistence on human-centered design – despite everyone telling her the users would be “perfect” – literally got humans to the moon and back safely.

Circuit Illustration
