After three days of painstaking brainstorming for my midterm, I came up with two directions: one was a game-like networking tool to help people start conversations, and the other was a version of Flappy Bird controlled by the pitch of your voice.

I was undoubtedly fascinated by both, but as I thought more about the project, it became clear that I wanted to experiment with generative AI. I therefore combined the personal, identity-driven aspect of the networking tool with generative AI as the novel technical element.

The Concept

“Synthcestry” is a short, narrative experience that explores the idea of heritage. The user starts by inputting a few key details about themselves: a region of origin, their gender, and their age. Then, they take a photo of themselves with their webcam.

From there, through a series of text prompts, the user is guided through a visual transformation. Their own face slowly and smoothly transitions into a composite, AI-generated face that represents the “archetype” of their chosen heritage.

Designing the Interaction and Code

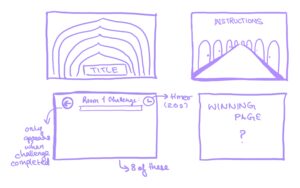

The user’s journey is the core of the interaction design. Since I had already come across game state design in class, I broke the game down into distinct states, which became the foundation of my code structure:

- Start: A simple, clean title screen to set the mood.

- Input: The user provides their details. I decided against complex UI elements and opted for simple, custom-drawn text boxes and buttons for a more cohesive aesthetic. The user can type their region and gender, and select an age from a few options.

- Capture: The webcam feed is activated, allowing the user to frame their face and capture a still image with a click.

- Journey: This is the main event. The user presses the spacebar to advance through 5 steps. The first step shows their own photo, and each subsequent press transitions the image further towards the final archetype, accompanied by a line of narrative text.

- End: The final archetype image is displayed, offering a moment of finality before the user can choose to start again.

My code is built around a gameState variable, which controls which drawing function is called in the main draw() loop. This keeps everything clean and organized. I have separate functions like drawInputScreen() and drawJourneyScreen(), and event handlers like mousePressed() and keyPressed() that behave differently depending on the current gameState. This state-machine approach is crucial for managing the flow of the experience.
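To make the structure concrete, here is a minimal sketch of that state machine in plain JavaScript. It is an illustration, not my actual sketch file: the state names, `journeyStep`, `goTo()`, `handleKey()`, and the draw functions other than drawInputScreen() and drawJourneyScreen() are hypothetical, and the draw functions just return a label instead of rendering anything. I also assume that pressing space on the last journey step moves on to the end screen.

```javascript
// Hypothetical state names, one per screen described above.
let gameState = "start";
let journeyStep = 0;          // 0 = the user's own photo
const JOURNEY_STEPS = 5;      // own photo + 4 transition frames

// Placeholder screens: the real ones would draw to the canvas.
function drawStartScreen()   { return "start screen"; }
function drawInputScreen()   { return "input screen"; }
function drawCaptureScreen() { return "capture screen"; }
function drawJourneyScreen() { return "journey step " + (journeyStep + 1); }
function drawEndScreen()     { return "end screen"; }

// In p5.js this is the function called every frame.
function draw() {
  switch (gameState) {
    case "start":   return drawStartScreen();
    case "input":   return drawInputScreen();
    case "capture": return drawCaptureScreen();
    case "journey": return drawJourneyScreen();
    case "end":     return drawEndScreen();
  }
}

// Switch screens; entering the journey always restarts it at step 0.
function goTo(nextState) {
  gameState = nextState;
  if (nextState === "journey") journeyStep = 0;
}

// Same key, different behavior depending on the current state.
function handleKey(k) {
  if (gameState !== "journey" || k !== " ") return; // space only matters here
  if (journeyStep < JOURNEY_STEPS - 1) {
    journeyStep++;            // move one frame closer to the archetype
  } else {
    goTo("end");              // assumption: the press after the last step ends it
  }
}
```

In the p5.js sketch, keyPressed() would simply call `handleKey(key)`, since p5 exposes the last typed character in its global `key` variable.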

The Most Frightening Part

The biggest uncertainty in this project was the visual transition itself. How could I create a smooth, believable transformation from any user’s face to a generic archetype?

To minimize the risk, I engineered a detailed prompt that instructs the AI to create a 4-frame “sprite sheet.” This sheet shows a single face transitioning from a neutral, mixed-ethnicity starting point to a final, distinct archetype representing a specific region, gender, and age.

To test this approach, I wrote the startGeneration() and cropFrames() functions in my sketch. startGeneration() builds the asset key and uses loadImage() to fetch the matching sprite sheet. Its callback then triggers cropFrames(), which uses p5.Image.get() to slice the sprite sheet into an array of individual frame images. The program isn’t fully functional yet, but you can see the functions in the codebase.
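The slicing step can be sketched roughly as follows. This is a simplified version under one big assumption: the sprite sheet is a single horizontal strip of four equally wide frames. `FRAME_COUNT` and `frameRects()` are hypothetical names for this example; the only p5.js call it relies on is the `get(x, y, w, h)` method of `p5.Image`, which returns the requested region as a new image.

```javascript
// Assumption: 4 frames laid out left to right in one row.
const FRAME_COUNT = 4;

// Compute the crop rectangle for each frame of the strip.
function frameRects(sheetWidth, sheetHeight, count = FRAME_COUNT) {
  const frameWidth = Math.floor(sheetWidth / count);
  const rects = [];
  for (let i = 0; i < count; i++) {
    rects.push({ x: i * frameWidth, y: 0, w: frameWidth, h: sheetHeight });
  }
  return rects;
}

// `sheet` is expected to be a p5.Image, or anything else that has
// width, height, and a get(x, y, w, h) method.
function cropFrames(sheet, count = FRAME_COUNT) {
  return frameRects(sheet.width, sheet.height, count)
    .map((r) => sheet.get(r.x, r.y, r.w, r.h));
}
```

Keeping the rectangle math in its own function means the layout can be checked without loading an image at all. In the sketch itself, cropFrames() would be called from the success callback passed to loadImage().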

As for the image assets, I had two choices: generate them live through an AI API call, or rely on a pre-built asset library. The latter would be easier and less error-prone, but given the abundance of nationalities on campus, a pre-built library could not realistically cover every user, so I would have no choice but to use a live API call. I will figure this out next week.