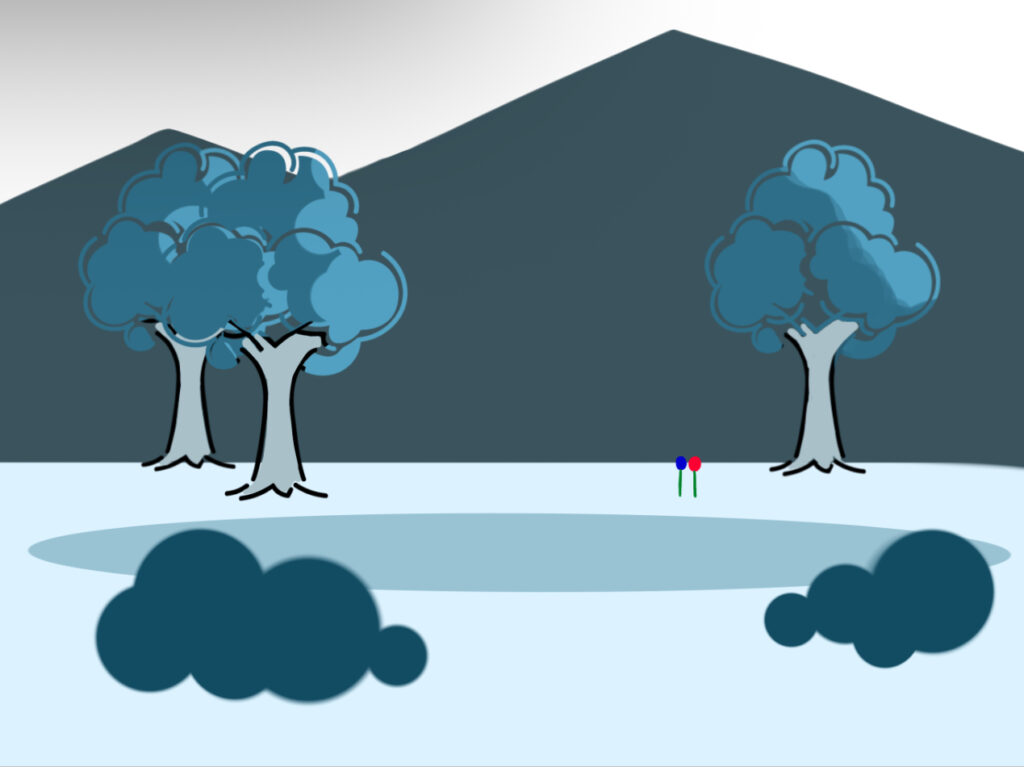

Concept + Design

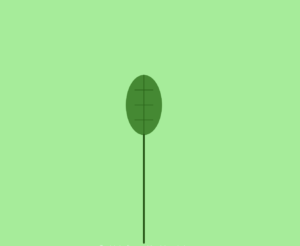

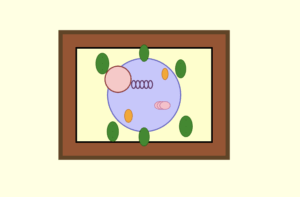

For this project, I wanted to create something mystical yet down to earth. That is why I combined “ordinary” objects, such as trees and flowers, with magical elements, such as the fantasy-like structures in the background and the blue hues.

Although I am still deciding how viewers will interact with this project, I have narrowed my ideas down to two:

- The roses will change colors and music whenever the viewer clicks on them. For example, one rose will change color each time the viewer clicks on it, another will switch to a different musical track every time it is clicked, and the third might display an inspirational message with each click. This would create an entertaining and colorful interaction that matches the tone of the sketch.

- The second option, while less entertaining, focuses on the visual aesthetics. The interaction would rely on a function that works like a magnifying glass, so viewers can take a closer look at all the objects displayed, and depending on where on the canvas they move the mouse, they will hear a different musical track.
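To compare the two ideas, here is a minimal plain-JavaScript sketch of the logic behind each one, written so it can run outside p5.js. The names createRose and trackForMouse, and all the colors, file names, and messages, are hypothetical placeholders rather than code from this project. The first helper cycles a rose through its own list on every click; the second assumes the canvas is split into equal vertical bands and returns the track for whichever band the mouse is over.

```javascript
// Hypothetical helpers for the two interaction ideas; the colors, track
// names, and messages below are placeholders, not the project's assets.

// Idea 1: each click advances a rose to the next item in its own list,
// wrapping back to the first item after the last one.
function createRose(items) {
  let index = 0;
  return {
    current() {
      return items[index];
    },
    click() {
      index = (index + 1) % items.length;
      return items[index];
    },
  };
}

// Idea 2 (assumption: equal vertical bands): pick the track for the band
// that the horizontal position px falls into.
function trackForMouse(px, canvasWidth, tracks) {
  // Clamp so positions at or past the canvas edges still map to a band.
  const x = Math.min(Math.max(px, 0), canvasWidth - 1);
  const band = Math.floor((x / canvasWidth) * tracks.length);
  return tracks[band];
}

// Example usage with placeholder data: one helper per rose.
const colorRose = createRose(["crimson", "gold", "violet"]);
const musicRose = createRose(["track-a.mp3", "track-b.mp3"]);
const messageRose = createRose(["Keep going.", "You are doing great."]);

colorRose.click(); // returns "gold"
```

In a p5.js sketch, rose.click() could be called from mousePressed() after checking that the click lands on that rose (for example with dist(mouseX, mouseY, roseX, roseY) < radius, where roseX, roseY, and radius are hypothetical), and trackForMouse(mouseX, width, tracks) could be checked in draw() to decide which track should play.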

Most Frightening/Complex Part

Although I haven’t written any code that detects, prevents, or avoids specific problems or glitches, one thing I did to keep the image on my canvas from causing issues was to load it in the preload() function. p5.js waits until everything loaded in preload() has finished before it runs setup() and draw(), so the image is ready before the sketch tries to display it.

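```javascript
// Edited image (PNG)
let palace;

// preload() runs before setup(); p5.js waits for loadImage() to finish,
// so palace is ready by the time draw() runs.
// The filename must match the uploaded file exactly, including ".PNG".
function preload() {
  palace = loadImage('palace.PNG');
}
```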

Another part I struggled with, but eventually got working, was the custom shape for the tree, which I originally made in a separate sketch. When I realized I would need to move this shape in my final sketch, I wrapped it in a function, drawCustomShape(x, y), that calls translate(x, y) before drawing, so the same tree can be placed anywhere on the canvas. The push() and pop() calls around it keep the translation from affecting anything drawn afterward.

```javascript
function draw() {
  background(51);
  // Draw the same tree twice, at two different positions
  drawCustomShape(450, 230);
  drawCustomShape(-50, 230);
}

// Custom shape (tree), drawn with its local origin moved to (x, y)
function drawCustomShape(x, y) {
  push();           // save the current style and transform
  translate(x, y);  // every coordinate below is now relative to (x, y)
  noStroke();

  // Trunk
  fill("rgb(235,233,233)");
  beginShape();
  vertex(140, 95);
  vertex(140, 250);
  vertex(100, 280);
  vertex(225, 280);
  vertex(190, 250);
  vertex(190, 95);
  endShape(CLOSE);

  // Foliage: overlapping blue ellipses
  fill("rgb(32,32,228)");
  ellipse(120, 90, 170, 120);
  ellipse(180, 98, 130, 110);
  ellipse(150, 45, 140, 160);
  ellipse(200, 55, 150, 120);

  pop();            // restore style and transform for the next shape
} // end of code for custom shape
```
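As a worked example of what translate() does here: inside drawCustomShape, every coordinate is offset by (x, y), so a point (px, py) ends up at (px + x, py + y) on the canvas. The plain-JavaScript helper below, shapeBounds (a hypothetical name), copies the tree's coordinates from the code above and computes where the whole tree lands for a given position. It assumes ellipse() is in its default CENTER mode, since the code shown never calls ellipseMode().

```javascript
// Shape data copied from drawCustomShape: trunk vertices, and foliage
// ellipses as [centerX, centerY, width, height].
const trunk = [
  [140, 95], [140, 250], [100, 280], [225, 280], [190, 250], [190, 95],
];
const foliage = [
  [120, 90, 170, 120],
  [180, 98, 130, 110],
  [150, 45, 140, 160],
  [200, 55, 150, 120],
];

// translate(x, y) shifts every later coordinate by (x, y), so a point
// (px, py) inside drawCustomShape lands at (px + x, py + y).
function shapeBounds(x, y) {
  let minX = Infinity, maxX = -Infinity;
  let minY = Infinity, maxY = -Infinity;
  for (const [px, py] of trunk) {
    minX = Math.min(minX, px + x);
    maxX = Math.max(maxX, px + x);
    minY = Math.min(minY, py + y);
    maxY = Math.max(maxY, py + y);
  }
  // In CENTER mode each ellipse extends half its width and height
  // from its center.
  for (const [cx, cy, w, h] of foliage) {
    minX = Math.min(minX, cx - w / 2 + x);
    maxX = Math.max(maxX, cx + w / 2 + x);
    minY = Math.min(minY, cy - h / 2 + y);
    maxY = Math.max(maxY, cy + h / 2 + y);
  }
  return { minX, maxX, minY, maxY };
}

console.log(shapeBounds(-50, 230));
// → { minX: -15, maxX: 225, minY: 195, maxY: 510 }
```

For the two calls in draw(), this shows that the tree at (-50, 230) starts at x = -15, so the edge of its foliage is cut off by the left side of the canvas, and the tree at (450, 230) is fully visible only on a canvas at least 725 pixels wide and 510 pixels tall.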


Reflection and ideas for future work or improvements

My next step is to find a way to display text that introduces the context and gives instructions for the interaction. I also plan to choose the interaction based on the feedback I receive and to write the necessary code in a separate sketch before adding it to the final one. In the meantime, I will look for the music I will use and edit it accordingly.

Furthermore, I need to plan how to give viewers the option to start a new session without restarting the sketch. Any kind of feedback is highly appreciated.
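One possible way to handle both an introduction screen and restarting is to keep the sketch's state in a single object that can be reset. The sketch below is an assumption rather than a plan from this project: createSession, the "intro" and "playing" screen names, and the roseState field are hypothetical. reset() restores every field to its starting value, so the viewer returns to the instructions without the page being reloaded.

```javascript
// Hypothetical session controller: "intro" shows the context and
// instructions, "playing" is the interactive scene.
function createSession(initialRoseState) {
  const session = {
    screen: "intro",
    clicks: 0,
    // Shallow copy so changes during a session don't alter the defaults
    roseState: { ...initialRoseState },
    start() {
      if (session.screen === "intro") session.screen = "playing";
    },
    registerClick() {
      // Clicks only count once the interactive scene has started
      if (session.screen === "playing") session.clicks += 1;
    },
    reset() {
      // Return to the intro screen with fresh state, no page reload
      session.screen = "intro";
      session.clicks = 0;
      session.roseState = { ...initialRoseState };
    },
  };
  return session;
}

const session = createSession({ colorIndex: 0, trackIndex: 0 });
```

In p5.js, draw() could check session.screen to decide whether to show the context and instructions with text() or to draw the scene, mousePressed() could call session.start(), and a key handled in keyPressed() could call session.reset().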