Concept

For my project, I developed a traffic light system that uses sound to give pedestrians directions. It addresses a common problem: in bright daylight, sunlight can make the signals hard to see. The system uses an LDR (Light Dependent Resistor) to measure ambient light, and when the reading is above a set threshold it activates a buzzer that beeps twice for “go” (green) and once for “stop” (red). At night, when the lights are easy to see, the buzzer stays off to avoid unnecessary noise.
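To make the threshold more concrete: the sketch in Code Highlights compares the raw analogRead() value against 600. The minimal host-side helper below converts such a reading into the voltage on the LDR pin, assuming a 10-bit ADC (readings 0 to 1023, as on an Arduino Uno) and a 5 V reference. Whether a higher reading means brighter light depends on how the LDR and its fixed resistor are arranged in the voltage divider, so that direction is an assumption about this circuit. The name `readingToVolts` is hypothetical.

```cpp
#include <cassert>

// Hypothetical helper: converts a raw 10-bit ADC reading (0-1023) into volts.
// Assumes a 5 V analog reference (the Uno default); pass vRef for other boards.
double readingToVolts(int reading, double vRef = 5.0) {
    return reading * vRef / 1023.0;
}
```

With these assumptions, `readingToVolts(600)` is about 2.93 V, so the buzzer is enabled once the divider output rises above roughly 2.9 V.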

Implementation

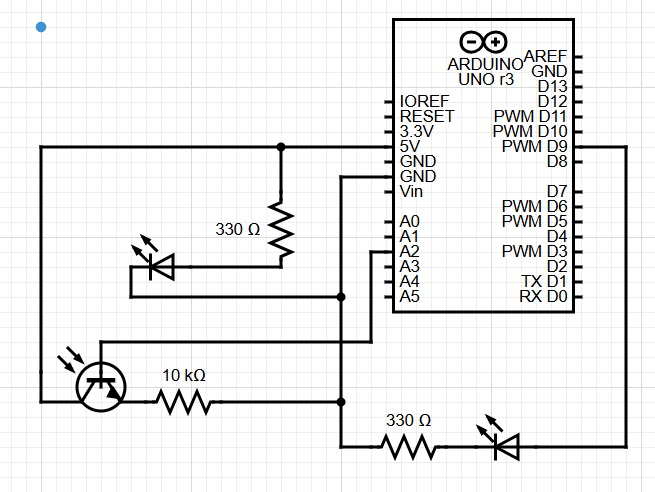

The project uses six LEDs, an LDR, a buzzer, resistors, wires, and a switch for stopping and resetting the system. The LEDs are divided into two groups: one for the pedestrian signal and one for the vehicle signal. The buzzer sounds only when specific LEDs are on and the LDR reading exceeds the threshold, and its pattern depends on the pedestrian signal's color: two beeps when it is green and one beep when it is red.
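The triggering rule described above can be written as a small pure function, which makes it easy to check without any hardware. This is a sketch, not the project's actual code: the `PedestrianLight` enum, the `beepCount` name, and treating yellow as silent are assumptions; the threshold of 600 comes from the sketch shown in Code Highlights.

```cpp
#include <cassert>

// Hypothetical representation of the pedestrian signal's current color.
enum class PedestrianLight { Red, Yellow, Green };

// Threshold taken from the sketch: readings above this count as "bright".
const int LDR_THRESHOLD = 600;

// Returns how many beeps to play: 2 for green, 1 for red, 0 otherwise.
// The buzzer stays silent when the reading is at or below the threshold
// (for example at night), mirroring the `ldr_value > 600` checks.
// Treating yellow as silent is an assumption.
int beepCount(PedestrianLight light, int ldrValue) {
    if (ldrValue <= LDR_THRESHOLD) {
        return 0;
    }
    switch (light) {
        case PedestrianLight::Green: return 2;
        case PedestrianLight::Red:   return 1;
        default:                     return 0;
    }
}
```

Keeping the decision separate from the tone() calls means the same rule can be tested on a computer and then reused unchanged in loop().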

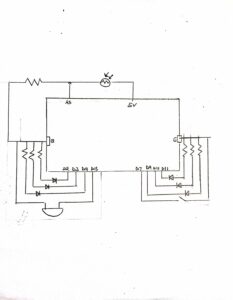

Images and sketch

Code Highlights

I’m particularly proud of how I managed the buzzer’s beeping pattern using functions, classes, and conditional statements. Below are some key snippets of the code.

```cpp
// buzzer, ldrpin, ldr_value, NOTE_C4 and NOTE_E4 are declared elsewhere in the sketch.

void beepOnce(int note, int duration) {
  tone(buzzer, note, duration); // Play the note on the buzzer
  delay(duration + 50);         // Wait for the note to finish
  noTone(buzzer);               // Stop the sound
}

void beepTwice(int note, int duration) {
  tone(buzzer, note, duration); // Play the note on the buzzer
  delay(duration + 50);         // Wait for the note to finish
  noTone(buzzer);               // Stop the sound
  delay(100);                   // Short delay before second beep
  tone(buzzer, note, duration); // Play the note again
  delay(duration + 50);         // Wait for the note to finish
  noTone(buzzer);               // Stop the sound
}

// Excerpts from loop():

// While Side 1 is green
ldr_value = analogRead(ldrpin); // Read the LDR sensor value
if (ldr_value > 600) {          // Only beep if LDR value is greater than 600
  beepTwice(NOTE_E4, 300);      // Beep twice when Side 1 is Green
}

// While Side 1 is red and Side 2 is yellow
ldr_value = analogRead(ldrpin); // Read the LDR sensor value
if (ldr_value > 600) {          // Only beep if LDR value is greater than 600
  beepOnce(NOTE_C4, 300);       // Beep once when Side 1 is Red and Side 2 is Yellow
}
```
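beepOnce and beepTwice repeat the same tone/delay/noTone sequence, so one way to streamline them is a single helper that takes the number of beeps. The sketch below is hypothetical (`beepTimes` is not part of the original code). So that it can run off-board, it replaces Arduino's tone(), noTone() and delay() with stand-ins that only record what would happen, and uses a placeholder buzzer pin; on a board you would drop the stand-ins and the placeholder and keep only `beepTimes`.

```cpp
#include <cassert>
#include <stdint.h>

// --- Host-side stand-ins for Arduino's tone(), noTone() and delay() ---
// They only record activity so the timing can be checked without a board.
int toneCalls = 0;
unsigned long elapsedMs = 0;

void tone(uint8_t, unsigned int, unsigned long) { ++toneCalls; }
void noTone(uint8_t) {}
void delay(unsigned long ms) { elapsedMs += ms; }

const uint8_t buzzer = 8;  // placeholder pin number (hypothetical)

// Hypothetical generalization of beepOnce/beepTwice: plays `count` beeps
// with the same timing as the original helpers (50 ms tail, 100 ms pause).
void beepTimes(int count, int note, int duration) {
    for (int i = 0; i < count; ++i) {
        if (i > 0) {
            delay(100);                 // Short pause between beeps
        }
        tone(buzzer, note, duration);   // Play the note
        delay(duration + 50);           // Wait for the note to finish
        noTone(buzzer);                 // Make sure the buzzer is silent
    }
}
```

With this helper, beepTimes(2, NOTE_E4, 300) takes the same 800 ms as beepTwice(NOTE_E4, 300), and beepTimes(1, NOTE_C4, 300) matches beepOnce.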

I also learned how to define musical notes in Arduino as frequency constants passed to tone(), which I expect to be useful in future projects.

```cpp
const int NOTE_C4 = 261; // Frequency of C4 in Hz (about 261.63 Hz)
const int NOTE_E4 = 330; // Frequency of E4 in Hz (about 329.63 Hz)
```
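These constants are whole-number approximations of equal-temperament pitches. As a sketch of where such values come from, assuming standard A4 = 440 Hz tuning and MIDI note numbering (C4 = 60, E4 = 64), each frequency is f = 440 × 2^((n - 69) / 12). The helper `noteFrequency` is hypothetical; Arduino's own toneMelody example instead ships a ready-made pitches.h table of note constants.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical helper: equal-temperament frequency (Hz) of a MIDI note number,
// assuming A4 (MIDI note 69) is tuned to 440 Hz.
double noteFrequency(int midiNote) {
    return 440.0 * std::pow(2.0, (midiNote - 69) / 12.0);
}
```

For example, noteFrequency(60) is about 261.63 and noteFrequency(64) is about 329.63, so the values 261 and 330 used above are each within one hertz of the exact pitch.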

Challenges and Future Improvements

The hardest part was synchronizing the color patterns of the two sets of lights so that the pedestrian and vehicle signals changed in the right order, but with time and effort I got it working. Moving forward, I plan to learn more about what the buzzer can do and to streamline and optimize my code.
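One common way to make light sequencing and beeping easier to keep in sync is to avoid delay(), which pauses everything else in loop(), and instead decide from the elapsed time whether the buzzer should currently be sounding. The function below is a hypothetical sketch of that approach, not part of the project: on a board, `elapsedMs` would be millis() minus the time the current pattern started, and the result would switch tone() and noTone() on each pass through loop(). The default 150 ms gap matches the silence between the two beeps in beepTwice (50 ms after the note plus the 100 ms pause).

```cpp
#include <cassert>

// Hypothetical non-blocking beep pattern: returns true while the buzzer
// should sound, given the milliseconds elapsed since the pattern started.
// Each beep lasts durationMs and is followed by gapMs of silence.
bool buzzerOn(unsigned long elapsedMs, int beeps,
              unsigned long durationMs, unsigned long gapMs = 150) {
    const unsigned long period = durationMs + gapMs;
    if (beeps <= 0 || period == 0) {
        return false;                                 // Nothing to play
    }
    const unsigned long index = elapsedMs / period;   // Which beep slot we are in
    if (index >= static_cast<unsigned long>(beeps)) {
        return false;                                 // Pattern finished
    }
    return elapsedMs % period < durationMs;           // Inside the tone part of the slot
}
```

Because the function only reads the clock, loop() stays free to update the LEDs while a two-beep or one-beep pattern is in progress.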

Demonstration