1. Concept

Faced with a choice between working on data visualization and creating generative text output, I chose data visualization: I already had a good understanding of how to create generative text from class, and working on data visualization could help me gain some valuable new skills.

I was inspired by a data visualization of flights in the U.S. I was amazed at how much information a flight network could convey, such as the shape of U.S. geography and the locations of the large airports.

I was interested in applying data visualization mapping techniques to astronomical data. After exploring Kaggle datasets, I decided that the Gaia Astronomical Data was well suited to my project, since I could visualize it in 3D space using its x, y and z coordinates (in parsecs).

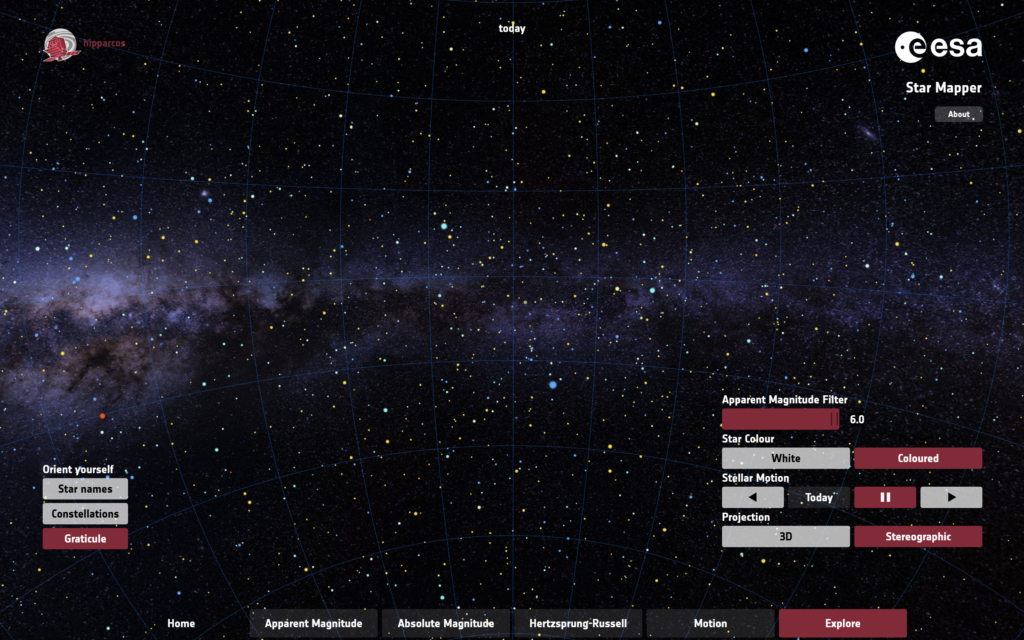

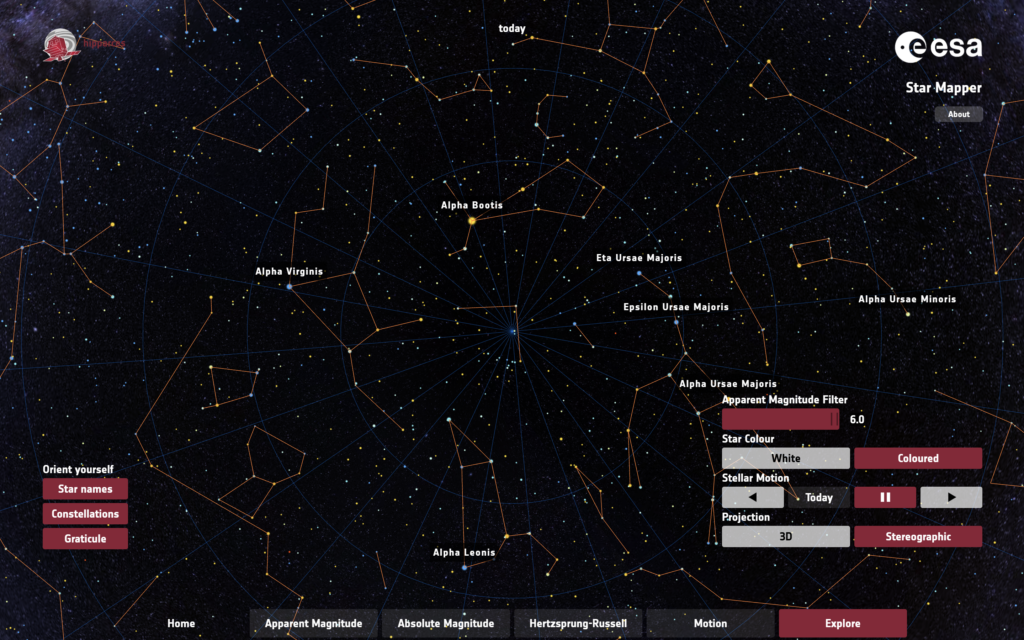

I also explored other space visualization projects. In particular, I was inspired by the European Space Agency’s Star Mapper, a visualisation based on data from the European Space Agency’s Hipparcos star mapper. Not only were the views under the ‘Explore’ tab beautiful and breathtaking (see images below), but I especially enjoyed the website’s interactivity: I could control the view of the stars by moving my mouse while it was pressed, and I intended to incorporate this into my p5.js animation.

The project involves manipulating the Gaia Astronomical Data to map stellar objects into 3D space, and enabling users to zoom in, zoom out and move through the space using mouse controls. This would be my very first time animating in 3D space, as well as controlling the camera and perspective through code! How exciting.

I started from the code template for the wind turbine data discussed in class and revised it extensively to obtain the final result.

2. Code Highlights

I encountered several major challenges, which I resolved with the help of AI.

First, one of my goals was to let users move through the space using mouse controls, which was new to me. Watching Coding Train’s WebGL and p5.js tutorial on Camera and Perspective helped me understand these unfamiliar concepts, including field of view (fov), perspective(), orbitControl() and camera([x], [y], [z], [centerX], [centerY], [centerZ], [upX], [upY], [upZ]). It was still very challenging, and with the help of AI, I learned to incorporate astronomical measurements:

- in mapping the mouse X-position to a 30°-90° field of view (the zoom range). A wider FOV (90°) shows more galactic context, while a narrower one (30°) focuses on specific star formations. The angles are given in radians (π/6 = 30°, π/2 = 90°), the default angle unit in p5.js.

- in perspective(), which sets the viewing frustum: a near clipping plane at 1 (so that nearby objects such as masers are not clipped) and a far clipping plane at 10000 (to accommodate distant OB associations). Because the star positions are remapped to canvas-sized coordinates in setup(), these distances are in p5.js world units rather than parsecs.
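The two bullets above can be checked with a little arithmetic. The sketch below is plain JavaScript rather than p5.js, so `mapRange` is a hypothetical stand-in for p5's `map(value, start1, stop1, start2, stop2)`, and the 800-pixel canvas width and the viewing distance of 500 are illustrative assumptions. It maps the mouse X-position across the canvas onto the 30°-90° range and shows how much of the scene is visible at a fixed distance: the visible half-height is distance × tan(fov / 2), so widening the field of view reveals more of the star field.

```javascript
// Hypothetical plain-JavaScript stand-in for p5.js's map(value, start1, stop1, start2, stop2):
// linearly re-maps value from the range [start1, stop1] to [start2, stop2].
function mapRange(value, start1, stop1, start2, stop2) {
  return start2 + ((value - start1) / (stop1 - start1)) * (stop2 - start2);
}

// Mouse X across the canvas (0..canvasWidth) -> vertical field of view from
// 30 degrees (PI / 6) to 90 degrees (PI / 2), in radians.
function fovFromMouseX(mouseX, canvasWidth) {
  return mapRange(mouseX, 0, canvasWidth, Math.PI / 6, Math.PI / 2);
}

// Half of the visible height (in world units) at a given distance in front of the camera.
function visibleHalfHeight(distance, fov) {
  return distance * Math.tan(fov / 2);
}

const canvasWidth = 800; // assumed canvas width, for illustration only
for (const mouseX of [0, 400, 800]) {
  const fov = fovFromMouseX(mouseX, canvasWidth);
  const degrees = (fov * 180) / Math.PI;
  console.log(
    `mouseX=${mouseX}: fov=${degrees.toFixed(1)} deg, ` +
      `visible half-height at distance 500: ${visibleHalfHeight(500, fov).toFixed(1)}`
  );
}
```

Note that the input range of the mapping is 0 to the canvas width; the mouse Y-position plays no part in it.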

At one point during development, the stellar objects were not visible in the initial view, although they did appear if I moved my mouse to around mouseX = 395 and mouseY = 340. This was a problem, because users might not realize at first glance that there are stars to explore. So I used target mouse coordinates to keep the stellar objects in the initial view: const targetMouseX = 397.5 and const targetMouseY = 340, together with camera(initialCamX, initialCamY, initialCamZ, initialLookAtX, initialLookAtY, 0, 0, 1, 0). Since camera() is called whenever the mouse is not pressed, the view resets to a position where the objects are visible if the user loses sight of them.
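A rough way to check whether a candidate initial camera actually shows any stars, sketched here in plain JavaScript, is to test each star against the camera's viewing direction. `roughlyInView`, the 60° field of view and the sample star coordinates are hypothetical, and the test approximates the rectangular view frustum with a cone of half-angle fov / 2, so it is a quick diagnostic rather than p5.js's own clipping.

```javascript
// Small vector helpers for [x, y, z] arrays.
function sub(a, b) {
  return [a[0] - b[0], a[1] - b[1], a[2] - b[2]];
}
function dot(a, b) {
  return a[0] * b[0] + a[1] * b[1] + a[2] * b[2];
}
function norm(a) {
  return Math.sqrt(dot(a, a));
}

// Hypothetical helper: true if `star` lies between the near and far planes of a camera at
// `eye` looking at `center`, and within a cone of half-angle fov / 2 around that direction
// (a rough approximation of the real, rectangular view frustum).
function roughlyInView(star, eye, center, fov, near, far) {
  const forward = sub(center, eye);
  const toStar = sub(star, eye);
  const depth = dot(toStar, forward) / norm(forward); // distance along the viewing direction
  if (depth < near || depth > far) return false; // behind the camera, or clipped
  return depth / norm(toStar) >= Math.cos(fov / 2); // cosine of the off-axis angle
}

// Made-up star positions and a candidate camera on the +z side, looking at the origin.
const sampleStars = [[0, 0, 0], [100, -50, 20], [0, 0, 900], [3000, 0, 0]];
const eye = [0, 0, 800];
const center = [0, 0, 0];
const visible = sampleStars.filter((s) => roughlyInView(s, eye, center, Math.PI / 3, 1, 10000));
console.log(`${visible.length} of ${sampleStars.length} stars are roughly in view`);
```

Running a check like this over the real star positions would flag an initial view that shows nothing before a user ever sees it.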

function draw() {
  background(10); // Darker background for better contrast

  // Field of View: follows the mouse only while the mouse is pressed.
  let fov;
  if (mouseIsPressed) {
    // Maps mouse X-position (0 to width) to a 30°-90° zoom range. Wider FOV (90°) shows more
    // galactic context, narrower (30°) focuses on specific star formations (π/6 = 30°, π/2 = 90°).
    fov = map(mouseX, 0, width, PI / 6, PI / 2);
  } else {
    fov = map(targetMouseX, 0, width, PI / 6, PI / 2); // Use targetMouseX
  }

  // Sets the viewing frustum: near clip at 1, far clip at 10000. These are world units, since the
  // star positions were remapped to canvas-sized coordinates in setup().
  perspective(fov, width / height, 1, 10000);

  // Camera Controls: orbitControl handles movement while the mouse is pressed.
  if (!mouseIsPressed) {
    camera(initialCamX, initialCamY, initialCamZ, initialLookAtX, initialLookAtY, 0, 0, 1, 0);
  } // orbitControl handles the rest

  print(mouseX, mouseY); // Debugging: log the mouse position to find a good initial view

  ...
}

Second, since users move through the space, all stellar objects must be drawn every frame, so that the view of every object updates as the user moves the mouse. The original template, which drew one data point per frame, therefore needed to be adjusted. The coordinates of every stellar object are processed once, in a function called from setup() instead of draw(). The results are held in an array of stellar objects, where each element is an object storing that star’s data (xpos, ypos, zpos, and a size based on its z-coordinate as a rough proxy for distance from the viewer).

function findMinMaxXYZAndProcessData() {
  let validRows = []; // parsed x, y, z of every row that converted cleanly

  // Pass 1: loop over each row in the file (row 0 is the header)
  for (let csvRowNumber = 1; csvRowNumber < strings.length; csvRowNumber++) {
    // get a single row and split that row into individual fields
    let singleRow = split(strings[csvRowNumber], ",");

    // Columns 5, 6 and 7 hold the x, y and z coordinates (in parsecs)
    let x = float(singleRow[5]);
    let y = float(singleRow[6]);
    let z = float(singleRow[7]);

    // The file may be missing a field, in which case
    // the conversion to a float will have failed
    if (isNaN(x) || isNaN(y) || isNaN(z)) {
      print("conversion to float failed; skipping row " + csvRowNumber);
      continue;
    }
    validRows.push({ x: x, y: y, z: z });

    // Initialize min/max on the first valid row, then widen the ranges.
    if (minX === undefined || x < minX) minX = x;
    if (maxX === undefined || x > maxX) maxX = x;
    if (minY === undefined || y < minY) minY = y;
    if (maxY === undefined || y > maxY) maxY = y;
    if (minZ === undefined || z < minZ) minZ = z;
    if (maxZ === undefined || z > maxZ) maxZ = z;
  }

  // --- Data Processing and Storage ---
  // Pass 2: map positions only once the full min/max ranges are known. Mapping inside
  // pass 1 would use incomplete ranges (and divide by zero on the first valid row).
  for (let row of validRows) {
    let xpos = map(row.x, minX, maxX, -width / 2, width / 2);
    let ypos = map(row.y, minY, maxY, -height / 2, height / 2);
    let zpos = map(row.z, minZ, maxZ, -width / 2, width / 2);
    let screenZ = map(row.z, minZ, maxZ, 8, 1); // Size based on z: larger z, smaller star

    // Create an object to store the data for this stellar object.
    stellarObjects.push({
      x: xpos,
      y: ypos,
      z: zpos,
      size: screenZ,
      // You could add color, type, or other attributes here from the CSV.
    });
  }

  print("finished");
  print("X (min, max) = (" + minX + "," + maxX + ")");
  print("Y (min, max) = (" + minY + "," + maxY + ")");
  print("Z (min, max) = (" + minZ + "," + maxZ + ")");
} // end of findMinMaxXYZAndProcessData
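The idea of processing every star once, with each coordinate rescaled from its data range to the canvas, can be tried outside p5.js with the plain-JavaScript sketch below. `remap` stands in for p5's `map()`, and `parsePoints`, `axisRange` and `toStellarObjects` are hypothetical names; the tiny CSV is made up, and only the column indices 5-7 and the output ranges mirror the function above. It finds each axis's complete range first and maps afterwards, and `remap` returns the middle of the output range when every value on an axis is identical, which avoids a divide-by-zero.

```javascript
// Stand-in for p5.js's map(); returns the middle of the output range if the input range is empty.
function remap(value, start1, stop1, start2, stop2) {
  if (stop1 === start1) return (start2 + stop2) / 2;
  return start2 + ((value - start1) / (stop1 - start1)) * (stop2 - start2);
}

// Parse CSV lines (line 0 is the header) into {x, y, z} points, skipping rows that fail to parse.
function parsePoints(lines, xCol, yCol, zCol) {
  const points = [];
  for (let i = 1; i < lines.length; i++) {
    const fields = lines[i].split(",");
    const x = parseFloat(fields[xCol]);
    const y = parseFloat(fields[yCol]);
    const z = parseFloat(fields[zCol]);
    if (Number.isNaN(x) || Number.isNaN(y) || Number.isNaN(z)) continue;
    points.push({ x, y, z });
  }
  return points;
}

// Min and max of one axis, found with a loop (safe for very large datasets).
function axisRange(points, key) {
  let min = Infinity;
  let max = -Infinity;
  for (const p of points) {
    if (p[key] < min) min = p[key];
    if (p[key] > max) max = p[key];
  }
  return { min, max };
}

// Pass 1 finds the full ranges; pass 2 maps every point using those final ranges.
function toStellarObjects(points, width, height) {
  const rx = axisRange(points, "x");
  const ry = axisRange(points, "y");
  const rz = axisRange(points, "z");
  return points.map((p) => ({
    x: remap(p.x, rx.min, rx.max, -width / 2, width / 2),
    y: remap(p.y, ry.min, ry.max, -height / 2, height / 2),
    z: remap(p.z, rz.min, rz.max, -width / 2, width / 2),
    size: remap(p.z, rz.min, rz.max, 8, 1), // larger z -> smaller point
  }));
}

// Made-up CSV: columns 5, 6 and 7 hold x, y and z; the "oops" row is skipped.
const csvLines = [
  "a,b,c,d,e,x,y,z",
  "0,0,0,0,0,-10,0,5",
  "0,0,0,0,0,10,20,15",
  "0,0,0,0,0,oops,1,1",
  "0,0,0,0,0,0,10,10",
];
const mappedStars = toStellarObjects(parsePoints(csvLines, 5, 6, 7), 800, 600);
console.log(mappedStars);
```

Keeping parsing and mapping in separate passes also means the per-frame drawing code only has to loop over ready-made objects.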

Third, the text in my WebGL sketch appeared mirrored, reading backwards. My guess was that this came from the viewpoint: I was looking at the stellar objects from behind rather than from the front, or my z-coordinates needed to run the other way. My own attempt to fix this didn’t work. With the help of AI, I learned to switch to an orthographic projection and reset to the default camera just for drawing the text, after which the text read left to right.

// --- 2D Overlay (Text) ---
// Disable depth test for 2D overlay
drawingContext.disable(drawingContext.DEPTH_TEST);

// Reset the camera for 2D drawing (orthographic projection)
ortho();
camera(); // Reset to default camera

// Draw the textScreen
image(textScreen, -width / 2 + 20, -height / 2 + 20);

// Re-enable depth test for 3D rendering in the next frame
drawingContext.enable(drawingContext.DEPTH_TEST);
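One way to test the guess above, that the camera was looking at the stars from behind, is to compute the camera's horizontal screen axis. This plain-JavaScript sketch assumes the standard look-at construction used by OpenGL's gluLookAt (screen-right = up × (eye - center), normalized); that p5.js's camera() follows the same convention is an assumption here, and `screenRight` is a hypothetical helper. With the same up vector (0, 1, 0), moving the eye from the +z side of the origin to the -z side flips the right axis from +x to -x, which would make text drawn in the x-y plane appear mirrored.

```javascript
// Cross product of two [x, y, z] vectors.
function cross(a, b) {
  return [
    a[1] * b[2] - a[2] * b[1],
    a[2] * b[0] - a[0] * b[2],
    a[0] * b[1] - a[1] * b[0],
  ];
}

// Scale a vector to unit length.
function normalize(v) {
  const len = Math.hypot(v[0], v[1], v[2]);
  return [v[0] / len, v[1] / len, v[2] / len];
}

// Hypothetical helper: the camera's screen-right axis for a look-at camera,
// assuming the gluLookAt-style convention right = up x (eye - center).
function screenRight(eye, center, up) {
  const back = normalize([eye[0] - center[0], eye[1] - center[1], eye[2] - center[2]]);
  return normalize(cross(up, back));
}

const upVector = [0, 1, 0];
const inFront = screenRight([0, 0, 800], [0, 0, 0], upVector); // eye on the +z side
const behindCam = screenRight([0, 0, -800], [0, 0, 0], upVector); // eye on the -z side
console.log("right axis, eye at +z:", inFront);
console.log("right axis, eye at -z:", behindCam);
```

If the initial camera's z-position turns out to be negative, moving it to the positive side (or flipping the z mapping) would be an alternative to redrawing the text with a separate camera.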

3. Embedded Sketch

4. Reflection and Areas for Future Improvement

This project greatly improved my data visualization skills in 3D space and gave me a valuable opportunity to use WebGL for 3D animation in a personal project for the first time. A future improvement could be animating the stellar objects in motion, as if they were in orbit.
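The orbit idea could start as a simple per-frame rotation. In this plain-JavaScript sketch, `orbitStep` is a hypothetical helper that rotates a star's x and y coordinates about the z-axis through the origin by a fixed angle each frame. It is a rigid rotation for illustration only, not a physical model of galactic motion, where stars at different distances orbit at different angular speeds.

```javascript
// Hypothetical per-frame update: rotate a star about the z-axis through the origin
// by `angle` radians, keeping its distance from that axis (and its z and size) unchanged.
function orbitStep(star, angle) {
  const c = Math.cos(angle);
  const s = Math.sin(angle);
  return {
    ...star,
    x: star.x * c - star.y * s,
    y: star.x * s + star.y * c,
  };
}

let orbitingStar = { x: 100, y: 0, z: 20, size: 4 };
for (let frame = 0; frame < 90; frame++) {
  orbitingStar = orbitStep(orbitingStar, Math.PI / 180); // 1 degree per frame
}
console.log(orbitingStar); // x is now approximately 0 and y approximately 100
```

In the sketch, a step like this could be applied to every element of stellarObjects at the start of draw(), before the stars are drawn.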