My goal is to use AI to control an Arduino robot. Using a machine learning library called handsfree.js, I was able to make the bot move with hand gestures.

The handsfree.js machine learning model detects two hands in a video feed and predicts the position and motion of each finger and thumb. Based on the position of the hands, I implemented four postures that represent forward, backward, left and right. Pinching the thumb and index finger of the right hand increases the speed of the wheels, and pinching the thumb and index finger of the left hand reduces it.
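The pinch gesture can be thought of as a distance check between two fingertips. The code below is a minimal sketch, not the author's code and not handsfree.js's own pinch detection. It assumes MediaPipe-style hand landmarks (21 points per hand, index 4 = thumb tip, index 8 = index fingertip, x and y normalized to [0, 1]), that each detected hand has already been labeled left or right, and hypothetical values for the pinch threshold, the speed step and a 0 to 255 speed range. `isPinching` and `SpeedController` are illustrative names.

```typescript
// Minimal sketch of pinch-based speed control.
// Assumption: MediaPipe-style landmarks with x and y normalized to [0, 1].

interface Landmark {
  x: number;
  y: number;
}

// MediaPipe Hands landmark indices for the thumb tip and index fingertip.
const THUMB_TIP = 4;
const INDEX_TIP = 8;

// Hypothetical tuning values, chosen only for illustration.
const PINCH_THRESHOLD = 0.05;
const SPEED_STEP = 25;
const MIN_SPEED = 0;
const MAX_SPEED = 255; // e.g. the range of an Arduino analogWrite() PWM value

// True when the thumb tip and index fingertip are closer than the threshold.
function isPinching(landmarks: Landmark[]): boolean {
  const thumb = landmarks[THUMB_TIP];
  const index = landmarks[INDEX_TIP];
  if (!thumb || !index) return false;
  return Math.hypot(thumb.x - index.x, thumb.y - index.y) < PINCH_THRESHOLD;
}

// Remembers the previous pinch state of each hand so that one pinch
// changes the speed once, instead of once per video frame.
class SpeedController {
  speed: number;
  private wasPinching = { left: false, right: false };

  constructor(initialSpeed = 128) {
    this.speed = initialSpeed;
  }

  // Call once per frame with each hand's landmarks (null if not detected).
  update(left: Landmark[] | null, right: Landmark[] | null): number {
    const leftNow = left ? isPinching(left) : false;
    const rightNow = right ? isPinching(right) : false;
    // Only the frame where a pinch starts (rising edge) changes the speed.
    if (rightNow && !this.wasPinching.right) {
      this.speed = Math.min(MAX_SPEED, this.speed + SPEED_STEP);
    }
    if (leftNow && !this.wasPinching.left) {
      this.speed = Math.max(MIN_SPEED, this.speed - SPEED_STEP);
    }
    this.wasPinching = { left: leftNow, right: rightNow };
    return this.speed;
  }
}
```

Reacting only to the start of a pinch keeps a held pinch from draining or maxing out the speed within a fraction of a second, since the hand-tracking model reports a new frame many times per second.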

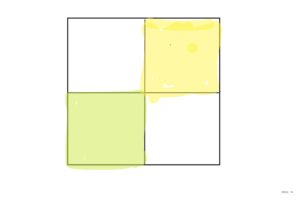

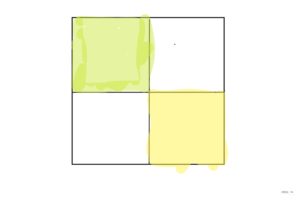

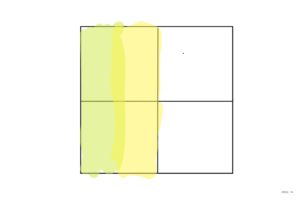

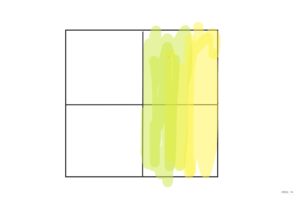

The movements are mapped onto an imaginary four-quadrant grid laid over the video frame, like a Cartesian plane. To move the robot forward, your right hand has to be in the first quadrant and your left hand in the third quadrant. For backward motion, your left hand should be in the second quadrant and your right hand in the fourth quadrant. For left movement, both hands should be in the left half of the plane, and for right movement, both hands should be in the right half.
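The quadrant rules above can be sketched as a small classifier. This is an illustrative TypeScript sketch, not the author's implementation. It assumes each hand is summarized by a single point (for example the wrist landmark) in normalized image coordinates, where x grows to the right and y grows downward, both in [0, 1], and that the video is not mirrored (many webcam previews are, which swaps which side a hand appears on). The names `quadrant` and `classify` and the `"stop"` fallback are hypothetical.

```typescript
// Sketch: map two hand positions to a drive command using the quadrant rules.
// Assumption: points are in normalized image coordinates (x right, y down, [0, 1]).

type Quadrant = 1 | 2 | 3 | 4;
type Command = "forward" | "backward" | "left" | "right" | "stop";

interface Point {
  x: number;
  y: number;
}

// Shift the origin to the center of the frame and flip y so it points up,
// then return the Cartesian quadrant. Points on an axis go to the quadrant
// selected by the >= comparisons below.
function quadrant(p: Point): Quadrant {
  const cx = p.x - 0.5;
  const cy = 0.5 - p.y;
  if (cx >= 0 && cy >= 0) return 1;
  if (cx < 0 && cy >= 0) return 2;
  if (cx < 0) return 3;
  return 4;
}

function inLeftHalf(p: Point): boolean {
  return p.x < 0.5;
}

function classify(leftHand: Point, rightHand: Point): Command {
  const lq = quadrant(leftHand);
  const rq = quadrant(rightHand);
  // Forward: right hand in Q1, left hand in Q3.
  if (rq === 1 && lq === 3) return "forward";
  // Backward: left hand in Q2, right hand in Q4.
  if (lq === 2 && rq === 4) return "backward";
  // Left / right: both hands in the same half of the plane.
  if (inLeftHalf(leftHand) && inLeftHalf(rightHand)) return "left";
  if (!inLeftHalf(leftHand) && !inLeftHalf(rightHand)) return "right";
  // Any other posture (e.g. crossed hands) is treated as "stop" in this sketch.
  return "stop";
}
```

Because forward and backward require the hands to be in opposite halves, they can never overlap with the left and right rules, so the order of the checks only matters for the fallback. Defaulting to `"stop"` rather than repeating the last command is one cautious choice when the posture is ambiguous.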

In the illustrations below, the right hand is colored yellow and the left hand green:

Forward:

Backward:

Left:

Right:

User testing video demo:

Screen demo and hand gestures: