Concept

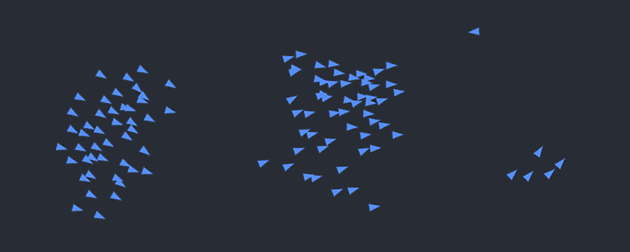

For my final project, I developed an interactive flocking simulation that users can control through hand gestures captured via their webcam. The project uses computer vision and machine learning to detect and interpret hand positions, allowing users to manipulate a swarm of entities (called “boids”) by making specific hand gestures.

The core concept was to create an intuitive and embodied interaction between the user and a digital ecosystem. I was inspired by the natural behaviors of flocks of birds, schools of fish, and swarms of insects, and wanted to create a system where users could influence these behaviors through natural movements.

User Testing Insights

- Most users initially waved at the screen, trying to understand how their movements affected the simulation

- Users quickly discovered that specific hand gestures (pinching fingers) changed the shape of the swarming elements

- Some confusion occurred about the mapping between specific gestures and shape outcomes

- Users enjoyed creating new boids by dragging the mouse, which added another layer of interactivity

- Initially, people weren’t sure if the system was tracking their whole body or just their hands

- Some users attempted complex gestures that weren’t part of the system

- The difference between the thumb-to-ring-finger and thumb-to-pinky gestures wasn’t immediately obvious

- The fluid motion of the boids created an engaging visual experience

- The responsiveness of the gesture detection felt immediate and satisfying

- The changing shapes provided clear feedback that user input was working

- The ability to add boids with mouse drag was intuitive

Interaction (p5 side for now):

The p5.js sketch handles the core simulation and multiple input streams:

- Flocking Algorithm:

  - Three steering behaviors: separation (avoidance), alignment (velocity matching), cohesion (position averaging)

  - Adjustable weights for each behavior to change flock characteristics

  - Four visual representations: triangles (default), circles, squares, and stars
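The three weighted steering behaviors can be sketched in plain JavaScript, without p5.js, to show how the weights combine. This is a minimal illustration and not the project's actual code: the names `DEFAULTS`, `flockForce`, and `step`, the boid fields (`x`, `y`, `vx`, `vy`), and all numeric values (weights, radius, speed and force limits) are assumptions chosen for the example.

```javascript
// Illustrative defaults; every value here is an assumption, not taken from the project.
const DEFAULTS = { separation: 1.5, alignment: 1.0, cohesion: 1.0, radius: 50, maxSpeed: 4, maxForce: 0.1 };

// Scale the vector (x, y) down so its magnitude does not exceed max.
function limit(x, y, max) {
  const mag = Math.hypot(x, y);
  return mag > max && mag > 0 ? { x: (x / mag) * max, y: (y / mag) * max } : { x, y };
}

// Reynolds-style steering: steer = desired velocity - current velocity, capped at maxForce.
function steer(boid, dx, dy, cfg) {
  const mag = Math.hypot(dx, dy);
  if (mag === 0) return { x: 0, y: 0 };
  const desiredX = (dx / mag) * cfg.maxSpeed;
  const desiredY = (dy / mag) * cfg.maxSpeed;
  return limit(desiredX - boid.vx, desiredY - boid.vy, cfg.maxForce);
}

// Combine separation, alignment, and cohesion over neighbors within cfg.radius.
function flockForce(boid, boids, cfg = DEFAULTS) {
  let sepX = 0, sepY = 0, velX = 0, velY = 0, posX = 0, posY = 0, count = 0;
  for (const other of boids) {
    if (other === boid) continue;
    const dx = boid.x - other.x;
    const dy = boid.y - other.y;
    const d = Math.hypot(dx, dy);
    if (d === 0 || d > cfg.radius) continue;
    sepX += dx / (d * d); // push away from the neighbor, more strongly when closer
    sepY += dy / (d * d);
    velX += other.vx;     // accumulate neighbor velocities for alignment
    velY += other.vy;
    posX += other.x;      // accumulate neighbor positions for cohesion
    posY += other.y;
    count++;
  }
  if (count === 0) return { x: 0, y: 0 };
  const sep = steer(boid, sepX, sepY, cfg);
  const ali = steer(boid, velX / count, velY / count, cfg);
  const coh = steer(boid, posX / count - boid.x, posY / count - boid.y, cfg);
  return {
    x: sep.x * cfg.separation + ali.x * cfg.alignment + coh.x * cfg.cohesion,
    y: sep.y * cfg.separation + ali.y * cfg.alignment + coh.y * cfg.cohesion,
  };
}

// Advance every boid by one frame; forces are computed first so the update order does not matter.
function step(boids, cfg = DEFAULTS) {
  const forces = boids.map((b) => flockForce(b, boids, cfg));
  boids.forEach((b, i) => {
    const v = limit(b.vx + forces[i].x, b.vy + forces[i].y, cfg.maxSpeed);
    b.vx = v.x;
    b.vy = v.y;
    b.x += b.vx;
    b.y += b.vy;
  });
}
```

Raising one weight relative to the others changes the flock's character: a larger `separation` spreads boids out, while a larger `cohesion` pulls them into tighter clusters.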

- Hand Gesture Recognition:

  - Uses ml5.js with the HandPose model for real-time hand tracking

  - Left hand controls shape selection:

    - Index finger + thumb pinch: Triangle shape

    - Middle finger + thumb pinch: Circle shape

    - Ring finger + thumb pinch: Square shape

    - Pinky finger + thumb pinch: Star shape

  - Right hand controls flocking parameters:

    - Middle finger + thumb pinch: Increases separation force

    - Ring finger + thumb pinch: Increases cohesion force

    - Pinky finger + thumb pinch: Increases alignment force
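One way to turn tracked keypoints into the gesture mapping above is to treat a pinch as the thumb tip coming within a small distance of a fingertip. The sketch below is an assumption-laden illustration, not the project's code: it assumes each detected hand looks like `{ handedness, keypoints: [{ name, x, y }] }` with MediaPipe-style names such as `thumb_tip`, and the 30-pixel threshold and the function names `findPinch` and `interpretHand` are hypothetical. If the webcam image is mirrored, the left/right labels may need to be swapped.

```javascript
// Fingertip keypoint names (assumed MediaPipe-style naming).
const FINGERTIPS = ["index_finger_tip", "middle_finger_tip", "ring_finger_tip", "pinky_finger_tip"];

// Left hand: pinch selects a shape (mapping taken from the list above).
const LEFT_SHAPES = {
  index_finger_tip: "triangle",
  middle_finger_tip: "circle",
  ring_finger_tip: "square",
  pinky_finger_tip: "star",
};

// Right hand: pinch increases a flocking force (no index-finger mapping is listed).
const RIGHT_PARAMS = {
  middle_finger_tip: "separation",
  ring_finger_tip: "cohesion",
  pinky_finger_tip: "alignment",
};

// Return the name of the fingertip closest to the thumb tip, if within threshold pixels.
function findPinch(hand, threshold = 30) {
  const byName = Object.fromEntries(hand.keypoints.map((k) => [k.name, k]));
  const thumb = byName["thumb_tip"];
  if (!thumb) return null;
  let best = null;
  let bestDist = threshold;
  for (const name of FINGERTIPS) {
    const tip = byName[name];
    if (!tip) continue;
    const d = Math.hypot(tip.x - thumb.x, tip.y - thumb.y);
    if (d < bestDist) {
      best = name;
      bestDist = d;
    }
  }
  return best;
}

// Translate one detected hand into an action, or null when no mapped gesture is present.
function interpretHand(hand) {
  const pinch = findPinch(hand);
  if (!pinch) return null;
  if (hand.handedness === "Left" && LEFT_SHAPES[pinch]) {
    return { type: "shape", value: LEFT_SHAPES[pinch] };
  }
  if (hand.handedness === "Right" && RIGHT_PARAMS[pinch]) {
    return { type: "weight", value: RIGHT_PARAMS[pinch] };
  }
  return null;
}
```

Picking the single closest fingertip, instead of the first one under the threshold, avoids ambiguous results when two neighboring fingers (for example ring and pinky) are both near the thumb.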

- Serial Communication with Arduino:

  - Receives and processes three types of data:

    - Analog potentiometer values to control speed

    - “ADD” command to add boids

    - “REMOVE” command to remove boids

  - Provides visual indicator of connection status
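Handling the three message types could look roughly like the following. This sketch assumes the Arduino sends one newline-terminated text message per event, that potentiometer readings arrive as bare integers in the 0 to 1023 range (a 10-bit `analogRead` value), and that speed is mapped to a range of 1 to 8. The wire format, the speed range, and the names `parseSerialLine`, `applyMessage`, and `mapRange` are all assumptions made for illustration.

```javascript
// Linearly map value from [inMin, inMax] to [outMin, outMax].
function mapRange(value, inMin, inMax, outMin, outMax) {
  return outMin + ((value - inMin) / (inMax - inMin)) * (outMax - outMin);
}

// Parse one line of serial text into a message object, or null if unrecognized.
function parseSerialLine(line) {
  const msg = line.trim(); // drop trailing "\r\n" from Serial.println
  if (msg === "ADD") return { type: "add" };
  if (msg === "REMOVE") return { type: "remove" };
  if (/^\d+$/.test(msg)) {
    const raw = Math.min(1023, Number(msg)); // clamp to the assumed 10-bit range
    return { type: "pot", raw, speed: mapRange(raw, 0, 1023, 1, 8) };
  }
  return null; // ignore partial or garbled lines
}

// Apply a parsed message to a simple simulation state and return the new state.
function applyMessage(state, msg) {
  if (!msg) return state;
  switch (msg.type) {
    case "add":
      return { ...state, boidCount: state.boidCount + 1 };
    case "remove":
      return { ...state, boidCount: Math.max(0, state.boidCount - 1) };
    case "pot":
      return { ...state, potValue: msg.raw, maxSpeed: msg.speed };
    default:
      return state;
  }
}
```

Keeping parsing separate from state updates makes the serial handling easy to check without hardware: lines can be fed in as plain strings.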

- User Interface:

  - Visual feedback showing connection status, boid count, and potentiometer value

  - Dynamic gradient background that subtly responds to potentiometer input

  - Click-to-connect functionality for Arduino communication

Technical Implementation

- p5.js for rendering and animation

- ml5.js for hand pose detection

- Flocking algorithm based on Craig Reynolds’ boids simulation

- Arduino integration (work in progress) for additional physical controls

Demo:

- Button Controls: Multiple buttons to add/remove boids for the simulation

- Potentiometer: A rotary control to adjust the speed of the boids in real-time, giving users tactile control over the simulation’s pace and energy