Link to video demo: https://drive.google.com/file/d/1KGj_M7xq6IdsS2Qwq-zbjjspCPPgcaj4/view?usp=sharing

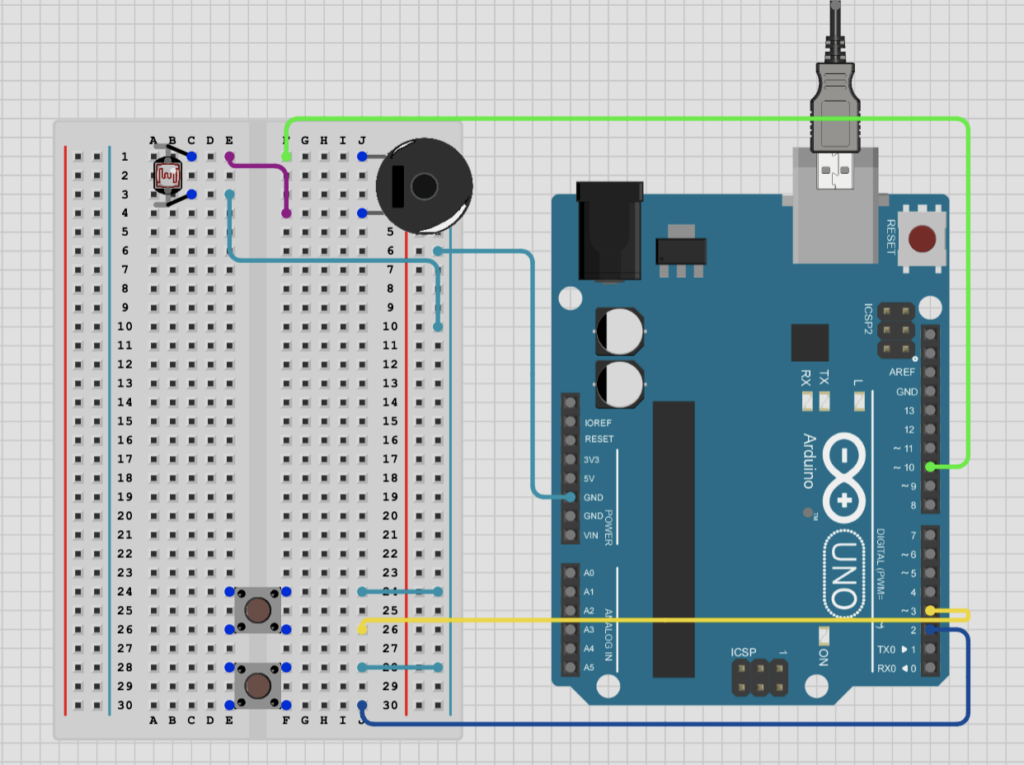

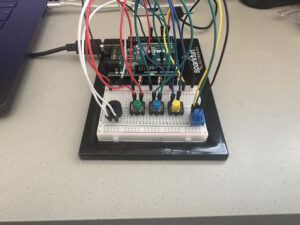

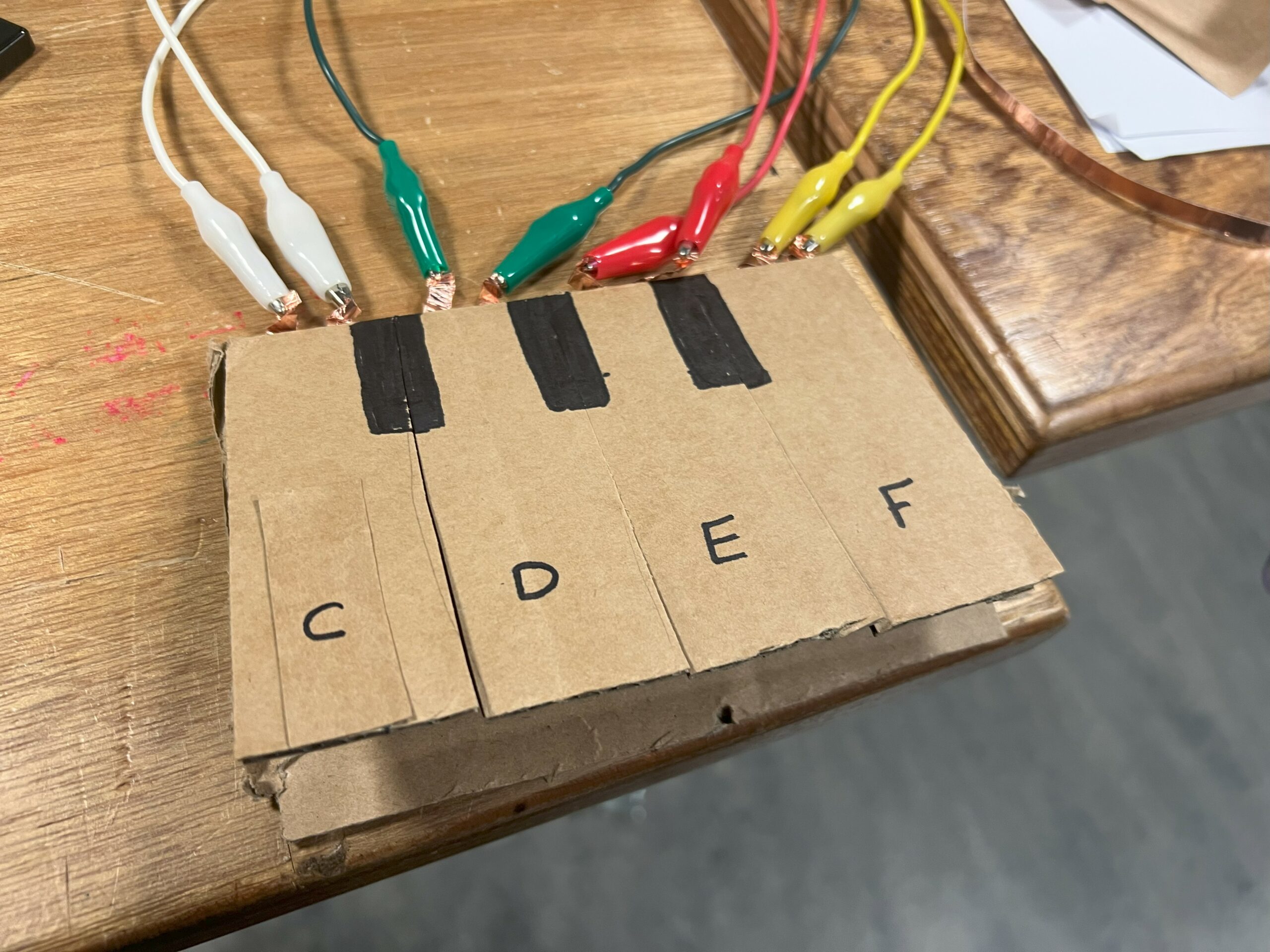

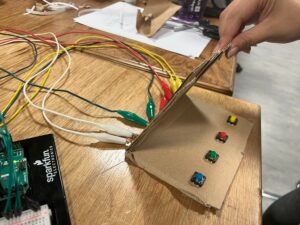

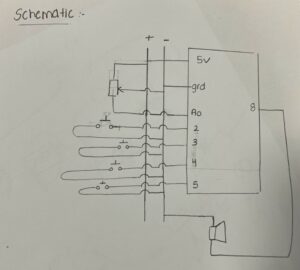

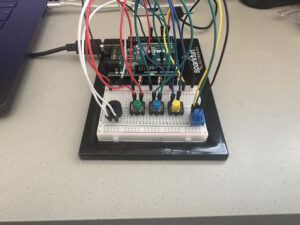

For this assignment, I decided to build a digital trumpet using an Arduino Uno, three push buttons, a potentiometer, and a speaker. My goal was to simulate the behavior of a real trumpet in a fun and creative way, even though I knew the sound would be more electronic than acoustic. It was a great opportunity for me to explore how hardware and code can come together to create music, and I ended up learning a lot about sound generation and analog input in the process.

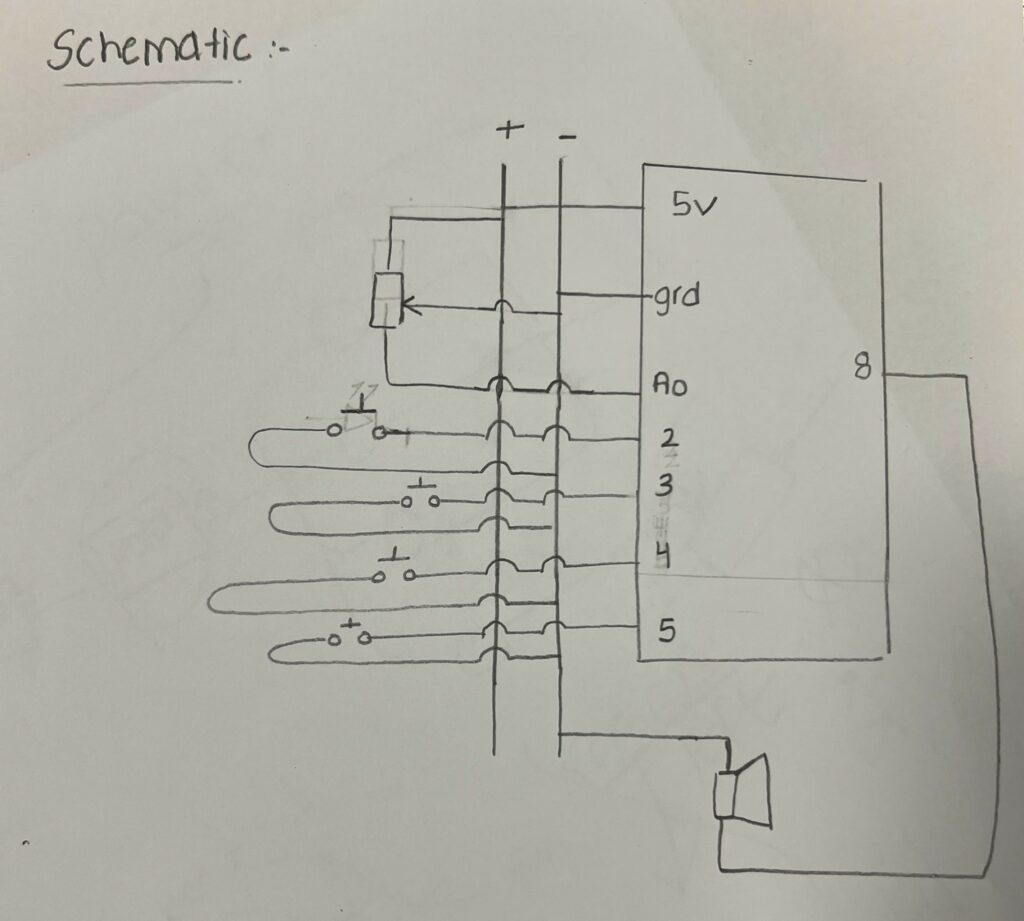

The concept was simple: each of the three buttons acts like a trumpet valve, and each one triggers a different note, specifically G4, A4, and B4. These are stored in the code as fixed frequencies (392 Hz, 440 Hz, and 494 Hz). While I hold one of the buttons down, the Arduino toggles the speaker pin on and off at that note's frequency, producing a square wave. The potentiometer is connected to analog pin A0 and controls the volume: its reading (0 to 1023) is divided by 4 to get a 0 to 255 level, which is written to the speaker pin during the "on" half of each wave. This was a really cool addition because it gave the instrument a bit of expressive control, just like how a real musician might vary their breath to change the loudness of a note.
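To check what the code actually does with these numbers, here is a small plain C++ example (not Arduino code, so it can be compiled and run on a computer) of the two conversions involved: turning a note frequency into the half-period the speaker pin is held on and off, and scaling the potentiometer reading into an `analogWrite()` level. The helper names `halfPeriodMicros` and `potToLevel` are hypothetical; the Arduino code does this arithmetic inline.

```cpp
#include <cassert>

// Plain C++ (not Arduino) so the arithmetic can be checked on a computer.
// Helper names are hypothetical; the Arduino code does this inline.

// Note frequencies from the project, in Hz (roughly G4, A4, B4)
const int kTrumpetNotes[] = {392, 440, 494};

// Half of one period of a square wave at freqHz, in whole microseconds.
// Integer division truncates, like `1000000 / currentFreq / 2` in the sketch.
long halfPeriodMicros(int freqHz) {
  assert(freqHz > 0);
  return 1000000L / freqHz / 2;
}

// Map a 10-bit analogRead() value (0..1023) onto the 0..255 range
// accepted by analogWrite(), as the sketch does with `/ 4`.
int potToLevel(int rawReading) {
  assert(rawReading >= 0 && rawReading <= 1023);
  return rawReading / 4;
}
```

For example, `halfPeriodMicros(440)` gives 1136, so A4 is played as roughly 1136 µs on and 1136 µs off. Truncating to whole microseconds shifts the pitch by well under 1 Hz at these frequencies.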

To make the sound a bit more interesting and less robotic, I added a little "vibrato" effect by shifting the pitch by a random amount of a few hertz on every cycle of the wave while the note is playing. This gives the tone a subtle wobble that sounds more natural, kind of like the way a real trumpet player might shape a note with their lips. Strictly speaking, this is random pitch jitter rather than true vibrato, which is a slow, regular rise and fall in pitch. It's still a square wave, and it's definitely digital-sounding, but it has more character than a flat, unchanging frequency.
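One way to get a more regular vibrato instead of random jitter is to let a slow sine wave (a low-frequency oscillator) push the pitch up and down. The plain C++ example below is a sketch of that idea, not part of the project: the 5 Hz rate and ±3 Hz depth are assumed values, and `vibratoFrequency` and `vibratoHalfPeriodMicros` are hypothetical names.

```cpp
#include <cassert>
#include <cmath>

// Assumed vibrato settings (hypothetical): 5 wobbles per second, +/-3 Hz deep.
const double kVibratoRateHz = 5.0;
const double kVibratoDepthHz = 3.0;
const double kPi = 3.14159265358979323846;

// Instantaneous frequency tSeconds after the note started:
// base pitch plus a sine-shaped offset (a low-frequency oscillator).
double vibratoFrequency(double baseHz, double tSeconds) {
  return baseHz + kVibratoDepthHz * std::sin(2.0 * kPi * kVibratoRateHz * tSeconds);
}

// Half-period in whole microseconds for the current frequency,
// matching how the sketch holds the speaker pin on, then off.
long vibratoHalfPeriodMicros(double baseHz, double tSeconds) {
  double f = vibratoFrequency(baseHz, tSeconds);
  assert(f > 0.0);
  return static_cast<long>(1000000.0 / f / 2.0);
}
```

On the Arduino, `tSeconds` could be computed from `micros()` relative to when the button was first pressed, with the half-period recomputed once per cycle, the same way the existing code calls `random()` once per cycle.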

If I were to continue developing this project, I have a few ideas for improvements. One would be to add more buttons or allow combinations of the three to create more notes, like a real trumpet with its multiple valve combinations. I'd also love to add some kind of envelope shaping, so the notes could fade in and out smoothly instead of starting and stopping abruptly. It might also be fun to hook the project up to MIDI so it could control a software synthesizer and produce higher-quality trumpet sounds. And for an extra visual touch, I could add LEDs that light up in sync with the music.
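For the valve-combination idea: on a real trumpet, the first valve lowers the pitch by a whole tone, the second by a semitone, and the third by a minor third (three semitones), and pressing several valves adds those amounts together (the combinations come out somewhat sharp in practice, which players correct with valve slides). The plain C++ example below sketches how the three buttons, read as a 3-bit mask, could map to seven distinct pitches, plus a simple linear fade-in for the envelope idea. The open-note frequency of 523.25 Hz (C5), the bit layout, and the names `semitonesDown`, `valveFrequency`, and `attackLevel` are all assumptions for illustration.

```cpp
#include <cassert>
#include <cmath>

// Semitones each valve lowers the pitch on a real trumpet:
// valve 1 = whole tone, valve 2 = semitone, valve 3 = minor third.
const int kValveSemitones[3] = {2, 1, 3};

// Hypothetical open (no valves pressed) pitch: C5.
const double kOpenNoteHz = 523.25;

// Hypothetical mask layout: bit 0 = valve 1, bit 1 = valve 2, bit 2 = valve 3.
int semitonesDown(unsigned pressedMask) {
  assert(pressedMask < 8);
  int total = 0;
  for (int v = 0; v < 3; ++v) {
    if (pressedMask & (1u << v)) {
      total += kValveSemitones[v];  // pressed valves add their drops together
    }
  }
  return total;
}

// Equal-tempered frequency after lowering the open note.
double valveFrequency(unsigned pressedMask) {
  return kOpenNoteHz * std::pow(2.0, -semitonesDown(pressedMask) / 12.0);
}

// Hypothetical linear fade-in: ramps the 0..255 level from 0 up to
// peakLevel over attackMs milliseconds, then holds it.
int attackLevel(int peakLevel, unsigned long msSinceStart, unsigned long attackMs) {
  assert(peakLevel >= 0 && peakLevel <= 255);
  if (attackMs == 0 || msSinceStart >= attackMs) {
    return peakLevel;  // attack finished: hold the full level
  }
  return static_cast<int>(static_cast<unsigned long>(peakLevel) * msSinceStart / attackMs);
}
```

With all three valves down, the assumed C5 open note drops six semitones to about 370 Hz (F#4). Valves 1+2 and valve 3 alone both give three semitones, so the eight combinations yield seven distinct pitches. On the board, `attackLevel()` would replace the fixed level passed to `analogWrite()`, with `msSinceStart` measured from when the button was first pressed; a fade-out could mirror it after release.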

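CODE:

```cpp
const int potPin = A0;               // Potentiometer for volume
// Speaker on a PWM-capable pin. On the Uno, only pins 3, 5, 6, 9, 10 and 11
// support PWM; on pin 8, analogWrite() just switches fully on (values >= 128)
// or off, so the potentiometer would act like a mute switch.
const int speakerPin = 9;
const int buttonPins[] = {2, 3, 4};  // 3 buttons = 3 different notes

// Trumpet-like frequencies in Hz (roughly G4, A4, B4)
const int trumpetNotes[] = {392, 440, 494};

void playTrumpetNote(int baseFreq, int volume);  // defined below

void setup() {
  for (int i = 0; i < 3; i++) {
    pinMode(buttonPins[i], INPUT);   // External pull-down resistors
  }
  pinMode(speakerPin, OUTPUT);
}

void loop() {
  // analogRead() returns 0..1023; analogWrite() expects 0..255
  int volume = analogRead(potPin) / 4;
  bool played = false;

  for (int i = 0; i < 3; i++) {
    if (digitalRead(buttonPins[i]) == HIGH) {
      playTrumpetNote(trumpetNotes[i], volume);
      played = true;
    }
  }

  // Pause only when idle: pausing after a burst would insert 10 ms of
  // silence between bursts and make a held note sound choppy.
  if (!played) {
    delay(10);
  }
}

// Plays a 10 ms burst of the note; loop() calls it again while the
// button is held, so the note sustains.
void playTrumpetNote(int baseFreq, int volume) {
  const unsigned long duration = 10000;  // burst length in microseconds
  unsigned long startTime = micros();

  while (micros() - startTime < duration) {
    // Random pitch wobble of -3..+3 Hz (random()'s upper bound is exclusive)
    int vibrato = random(-3, 4);
    int currentFreq = baseFreq + vibrato;
    unsigned long halfPeriod = 1000000UL / currentFreq / 2;  // microseconds

    // The PWM carrier on pin 9 (~490 Hz) is close to the note frequencies,
    // so the duty cycle only roughly controls loudness.
    analogWrite(speakerPin, volume);  // "on" half of the square wave
    delayMicroseconds(halfPeriod);
    analogWrite(speakerPin, 0);       // "off" half
    delayMicroseconds(halfPeriod);
  }
}
```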