This is my Melodic Button Machine. It uses three push buttons (digital sensors) and a potentiometer (analog sensor) to create a simple, playful musical instrument. Each button plays a different musical note, while the potentiometer lets the player bend the pitch of the note in real time, much like a musician bending a guitar string or sliding a trombone.

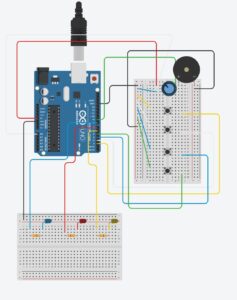

Machine Shown in Class

Assignment Brief

The assignment challenged us to create a musical instrument using Arduino technology. The requirements were clear: incorporate at least one digital sensor (such as a switch or button) and at least one analog sensor (like a potentiometer, photoresistor, or distance sensor). The instrument should respond to user input in a way that is both interactive and expressive.

Conceptualisation

The idea for this project emerged from my fascination with the simplicity of early electronic instruments. I remembered a childhood toy keyboard that could produce a handful of notes, and how magical it felt to create music with just a few buttons. I wanted to recreate that sense of wonder, but with a modern DIY twist. I also wanted to explore how analog and digital sensors could work together to give the user expressive control over the sound.

Process

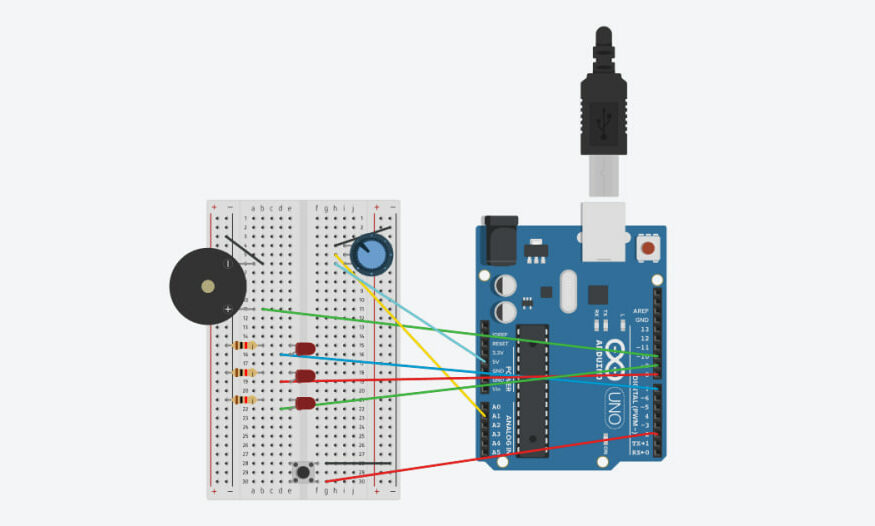

Component Selection: I started by gathering the essential components: an Arduino Uno, a breadboard, three push buttons, a potentiometer, a piezo buzzer, jumper wires, and a handful of resistors. The buttons would serve as the digital inputs for note selection, while the potentiometer would act as the analog input to modulate pitch.

Circuit Assembly: The buttons were wired to digital pins 2, 3, and 4 on the Arduino, with internal pull-up resistors enabled in the code. The potentiometer’s middle pin was connected to analog pin A0, with its outer pins going to 5V and GND. The piezo buzzer was connected to digital pin 8, ready to bring the project to life with sound.
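Because the internal pull-ups are enabled, the button logic is inverted: an idle pin reads HIGH and a pressed button reads LOW. The snippet below is a minimal sketch of that rule, assuming each button's other leg goes to GND. The names `kLow`, `kHigh`, and `isPressed` are illustrative and stand in for Arduino's `LOW`, `HIGH`, and a `digitalRead()` result so that the logic can be checked off the board.

```cpp
// Stand-ins for Arduino's LOW (0) and HIGH (1) so this compiles on a PC.
constexpr int kLow = 0;
constexpr int kHigh = 1;

// With INPUT_PULLUP the pin idles HIGH; pressing the button pulls it LOW.
// pinLevel is the value digitalRead() would return for the button pin.
constexpr bool isPressed(int pinLevel) {
  return pinLevel == kLow;
}
```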

Code Development: I wrote Arduino code that assigned each button a specific musical note: C, D, or E. The potentiometer’s value was mapped to a pitch modulation range, so turning it would raise or lower the note’s frequency. This allowed for playful experimentation and made the effect of the potentiometer obvious and satisfying. I tested the code, tweaking the modulation range to make sure the pitch bend was dramatic and easy to hear.
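The mapping step can be sketched on its own to see which frequencies the potentiometer produces. `mapRange` below repeats the integer formula documented for Arduino's `map()`, and `bentFrequency` is a hypothetical helper name; the ±100 Hz range and the 0 to 1023 input range are the ones used in this project.

```cpp
// Same arithmetic as the formula documented for Arduino's map():
// integer maths, so any fractional part is truncated.
constexpr long mapRange(long x, long inMin, long inMax, long outMin, long outMax) {
  return (x - inMin) * (outMax - outMin) / (inMax - inMin) + outMin;
}

// Hypothetical helper: base note plus a pot-controlled offset of -100..+100 Hz.
// potValue is an analogRead() result in the range 0..1023.
constexpr long bentFrequency(long baseHz, long potValue) {
  return baseHz + mapRange(potValue, 0, 1023, -100, 100);
}
```

For the C note (262 Hz), one end of the potentiometer's travel gives 162 Hz, the other end gives 362 Hz, and a mid-travel reading of 512 leaves the note at 262 Hz.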

Testing and Tuning: Once everything was wired up, I played simple tunes like “Mary Had a Little Lamb” and “Hot Cross Buns” by pressing the buttons in sequence. The potentiometer added a fun twist, letting me add vibrato or slides to each note.

Challenges

Pitch Range Calibration:

Finding the right modulation range for the potentiometer was tricky. If the range was too wide, the notes sounded unnatural; too narrow, and the effect was barely noticeable. After some trial and error, I settled on a ±100 Hz range for a musical yet expressive pitch bend.
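One reason a fixed range is hard to tune is that the same offset in Hz is a different musical distance for each note: 100 Hz is roughly 38% of C4 but only about 30% of E4. The sketch below checks that arithmetic and shows one possible alternative, bending by a percentage of the note instead. This is not the method used in this project; `offsetAsPercent`, `proportionalBend`, and the 30% figure are assumptions for illustration.

```cpp
// Same integer formula as the one documented for Arduino's map().
constexpr long mapRange(long x, long inMin, long inMax, long outMin, long outMax) {
  return (x - inMin) * (outMax - outMin) / (inMax - inMin) + outMin;
}

// Size of a fixed offset relative to the note, in whole percent (truncated).
constexpr long offsetAsPercent(long baseHz, long offsetHz) {
  return offsetHz * 100 / baseHz;
}

// Alternative, not the project's method: bend by up to maxPercent of the
// base note in either direction, so every note bends by the same ratio.
constexpr long proportionalBend(long baseHz, long potValue, long maxPercent) {
  return baseHz + baseHz * mapRange(potValue, 0, 1023, -maxPercent, maxPercent) / 100;
}
```

With a 30% limit, C4 tops out at 340 Hz and E4 at 429 Hz, so both notes rise by the same interval rather than by the same number of Hz.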

Wiring Confusion:

With multiple buttons and sensors, it was easy to mix up wires on the breadboard. I solved this by colour-coding my jumper wires and double-checking each connection before powering up.

Potential Improvements

More Notes:

Adding more buttons would allow for a wider range of songs and melodies. With just three notes, the instrument can play simple tunes, but five or more would open up new musical possibilities.
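One way to add notes without a growing chain of if/else branches is to keep the pins and frequencies in parallel tables. The sketch below assumes a hypothetical five-button layout: pins 5 and 6 and the notes F4 and G4 are not part of the current build, and the helper names are illustrative.

```cpp
// Hypothetical five-note layout; pins 5 and 6 and the notes F4 and G4
// are assumptions, not part of the current build.
constexpr int kNoteCount = 5;
constexpr int kButtonPins[kNoteCount] = {2, 3, 4, 5, 6};
constexpr int kNoteHz[kNoteCount] = {262, 294, 330, 349, 392};  // C4 D4 E4 F4 G4

// Pin for button `index`, or -1 when the index is out of range.
constexpr int pinForButton(int index) {
  return (index >= 0 && index < kNoteCount) ? kButtonPins[index] : -1;
}

// Frequency in Hz for button `index`, or 0 when the index is out of range.
constexpr int noteForButton(int index) {
  return (index >= 0 && index < kNoteCount) ? kNoteHz[index] : 0;
}
```

In a sketch, loop() would step through the indices, read each pin with digitalRead(pinForButton(i)), and pass noteForButton(i) plus the modulation to tone() for the first button that reads LOW. Adding a sixth note then only means extending the two tables.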

Polyphony:

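Currently, only one note can be played at a time: the code checks the buttons in a fixed order, and Arduino's tone() function drives the buzzer at a single frequency. With some code modifications and additional hardware, I could allow for chords or overlapping notes.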

Alternative Sensors:

Swapping the potentiometer for a light sensor or distance sensor could make the instrument even more interactive.
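A light or distance sensor rarely sweeps the full 0 to 1023 range the way a potentiometer does, so the mapping would probably need calibrating. The sketch below clamps the reading to an observed range before mapping it to the same ±100 Hz offset. The limits 200 and 800 are placeholder values, not measurements; in a sketch, Arduino's `constrain()` and `map()` would play the roles of `clampTo` and `mapRange`.

```cpp
// Same integer formula as the one documented for Arduino's map().
constexpr long mapRange(long x, long inMin, long inMax, long outMin, long outMax) {
  return (x - inMin) * (outMax - outMin) / (inMax - inMin) + outMin;
}

// Limit x to the range lo..hi, like Arduino's constrain().
constexpr long clampTo(long x, long lo, long hi) {
  return x < lo ? lo : (x > hi ? hi : x);
}

// Placeholder calibration limits: replace with the lowest and highest
// readings actually observed from the sensor.
constexpr long kSensorMin = 200;
constexpr long kSensorMax = 800;

// Convert a raw sensor reading into a pitch offset of -100..+100 Hz.
constexpr long sensorToModulation(long reading) {
  return mapRange(clampTo(reading, kSensorMin, kSensorMax),
                  kSensorMin, kSensorMax, -100, 100);
}
```

Clamping first keeps readings outside the calibrated range from pushing the offset beyond ±100 Hz.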

Visual Feedback:

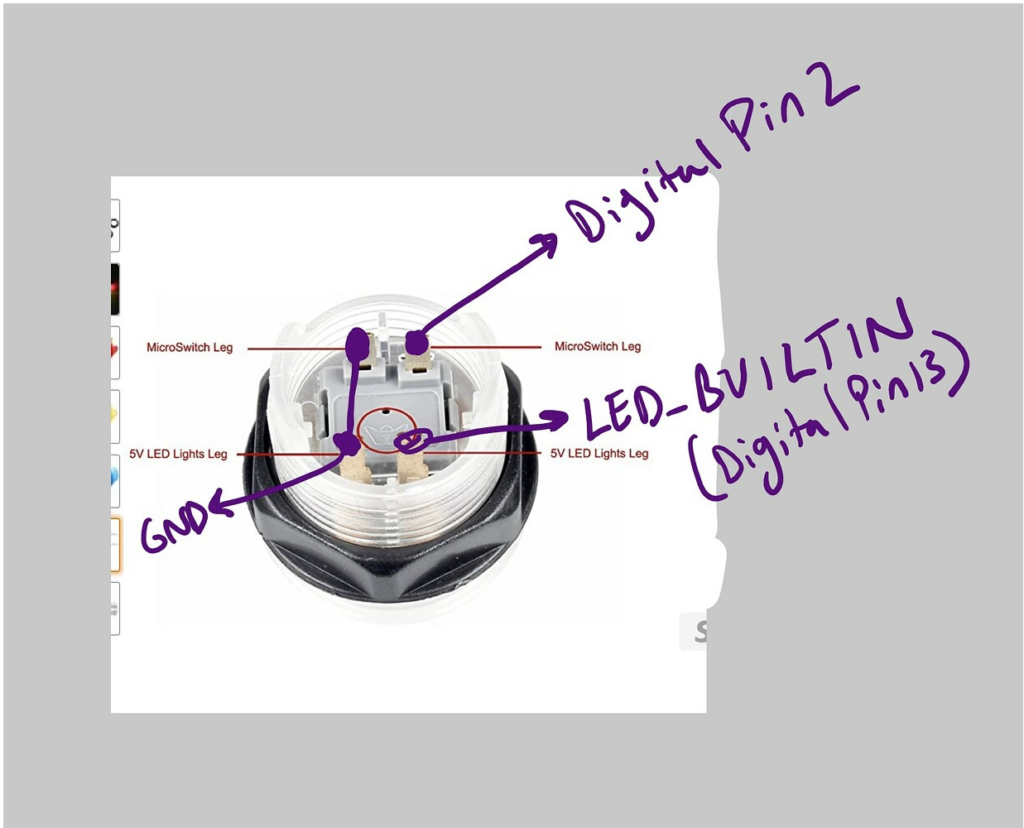

Adding LEDs that light up with each button press or change colour with the pitch would make the instrument more visually engaging.

Schematics

Source Code

    // Pin assignments
    const int button1Pin = 2;
    const int button2Pin = 3;
    const int button3Pin = 4;
    const int potPin = A0;
    const int buzzerPin = 8;

    // Base frequencies in Hz for the three notes (C4, E4, D4)
    const int noteC = 262; // C4
    const int noteE = 330; // E4
    const int noteD = 294; // D4

    void setup() {
      // Internal pull-ups: each pin reads HIGH until its button is pressed
      pinMode(button1Pin, INPUT_PULLUP);
      pinMode(button2Pin, INPUT_PULLUP);
      pinMode(button3Pin, INPUT_PULLUP);
      pinMode(buzzerPin, OUTPUT);
    }

    void loop() {
      int potValue = analogRead(potPin);

      // Map the potentiometer reading (0 to 1023) to a pitch offset of -100 to +100 Hz
      int modulation = map(potValue, 0, 1023, -100, 100);

      // Play the note of the first pressed button; a pressed button reads LOW
      if (digitalRead(button1Pin) == LOW) {
        tone(buzzerPin, noteC + modulation); // Button 1: C note modulated
      } else if (digitalRead(button2Pin) == LOW) {
        tone(buzzerPin, noteE + modulation); // Button 2: E note modulated
      } else if (digitalRead(button3Pin) == LOW) {
        tone(buzzerPin, noteD + modulation); // Button 3: D note modulated
      } else {
        noTone(buzzerPin); // No button pressed: silence the buzzer
      }
    }