Final Project Update

Hassan, Majid & Aaron.

CONCEPT

Our project is a remote-controlled car that the user drives with hand gestures, specifically by tracking the user’s hand position. We will achieve this by pairing the car with a p5.js tracking system that interprets the user’s hand gestures and translates them into commands that control the car’s movements.

IMPLEMENTATION

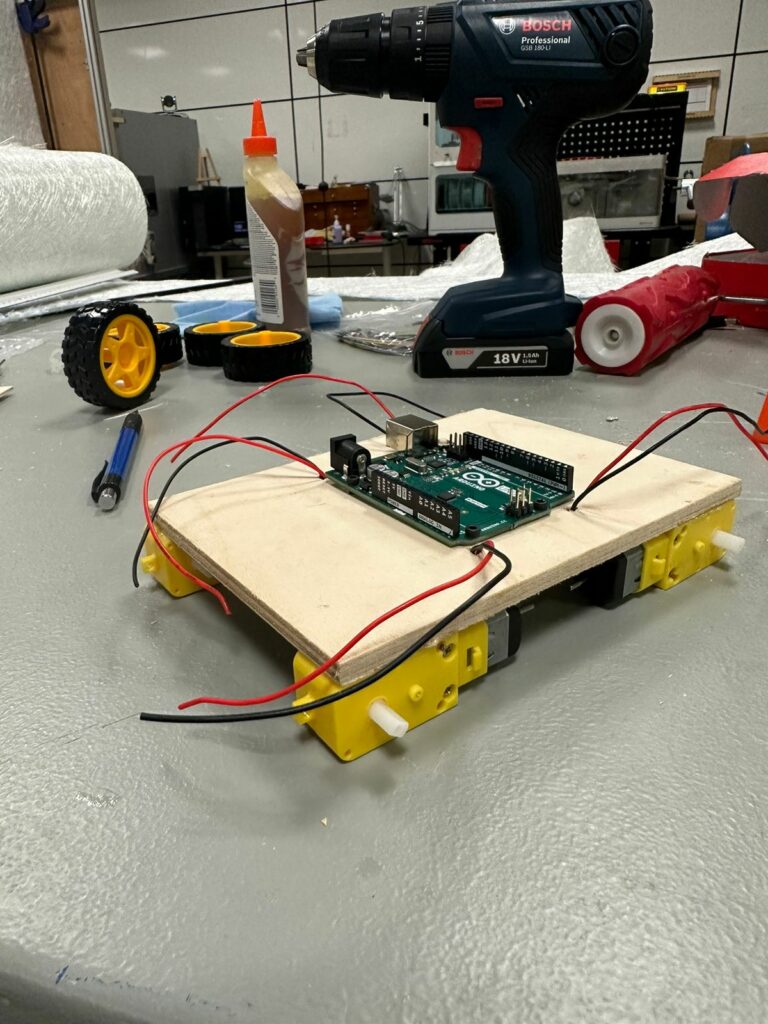

To implement this project, we will first build the remote-controlled car using an Arduino Uno board and other necessary components. We will then integrate the p5.js system, which will detect the user’s hand position and translate it into movement commands for the car. We have two options for detecting the user’s hand position: PoseNet or Teachable Machine. The camera tracking system will be programmed to interpret specific hand gestures, such as moving the hand forward or backward to move the car in those directions.
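As a rough illustration of the translation step, here is a minimal sketch of how a tracked hand position could become a movement command. Everything in it is an assumption for illustration, not our final code: the function name `handToCommand`, the one-letter commands, the dead-zone size, and the idea that the hand position has already been normalized to the 0 to 1 range across the camera frame. It also assumes that raising the hand means "forward".

```javascript
// Hypothetical mapping from a tracked hand position to a one-letter car command.
// Assumption: x and y are normalized to 0..1 across the camera frame,
// with (0.5, 0.5) at the center and y increasing downward (as on a p5.js canvas).
const DEAD_ZONE = 0.15; // assumed value: offsets smaller than this count as "stop"

function handToCommand(x, y) {
  const dx = x - 0.5; // horizontal offset from the frame center
  const dy = y - 0.5; // vertical offset from the frame center

  // Hand near the center: stop the car.
  if (Math.abs(dx) < DEAD_ZONE && Math.abs(dy) < DEAD_ZONE) return "S";

  // Otherwise the larger offset decides the direction.
  if (Math.abs(dy) >= Math.abs(dx)) {
    return dy < 0 ? "F" : "B"; // hand up = forward, hand down = backward
  }
  return dx < 0 ? "L" : "R"; // hand left = left, hand right = right
}
```

In a p5.js sketch, the inputs could come from dividing a tracked point's pixel coordinates by the canvas `width` and `height`. The returned letter would then be sent to the Arduino, which decides how to drive the motors.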

POTENTIAL CHALLENGES

One potential challenge we may face during the implementation is accurately interpreting the user’s hand gestures. The camera tracking system may require experimentation and programming adjustments to ensure that it interprets the user’s hand movements accurately. We will also need to test whether PoseNet or Teachable Machine performs better. Responsiveness will be a factor too, as we want the control to feel fluid for the user. Finally, making sure the car responds correctly to the interpreted movement commands may be a challenge because of the physical construction of the car and the variability of the motors.
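One approach we may try for jittery tracking is to smooth the hand position before interpreting it. The sketch below uses an exponential moving average. The helper name `makeSmoother` and the default `alpha` of 0.3 are assumptions for illustration only; the right value would have to come from testing, since a lower `alpha` gives steadier but slower control.

```javascript
// Hypothetical smoothing helper: exponential moving average of the hand position.
// alpha near 1 follows the raw reading closely (responsive but jittery);
// alpha near 0 changes slowly (steady but laggy). 0.3 is an assumed starting point.
function makeSmoother(alpha = 0.3) {
  let state = null; // last smoothed position, or null before the first reading

  return function smooth(x, y) {
    if (state === null) {
      // First reading: nothing to average with yet.
      state = { x, y };
    } else {
      // Blend the new reading with the previous smoothed value.
      state = {
        x: alpha * x + (1 - alpha) * state.x,
        y: alpha * y + (1 - alpha) * state.y,
      };
    }
    return { x: state.x, y: state.y };
  };
}
```

A smoother would be created once and then called with each new reading from the tracker, with the smoothed position used in place of the raw one.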

UPDATE

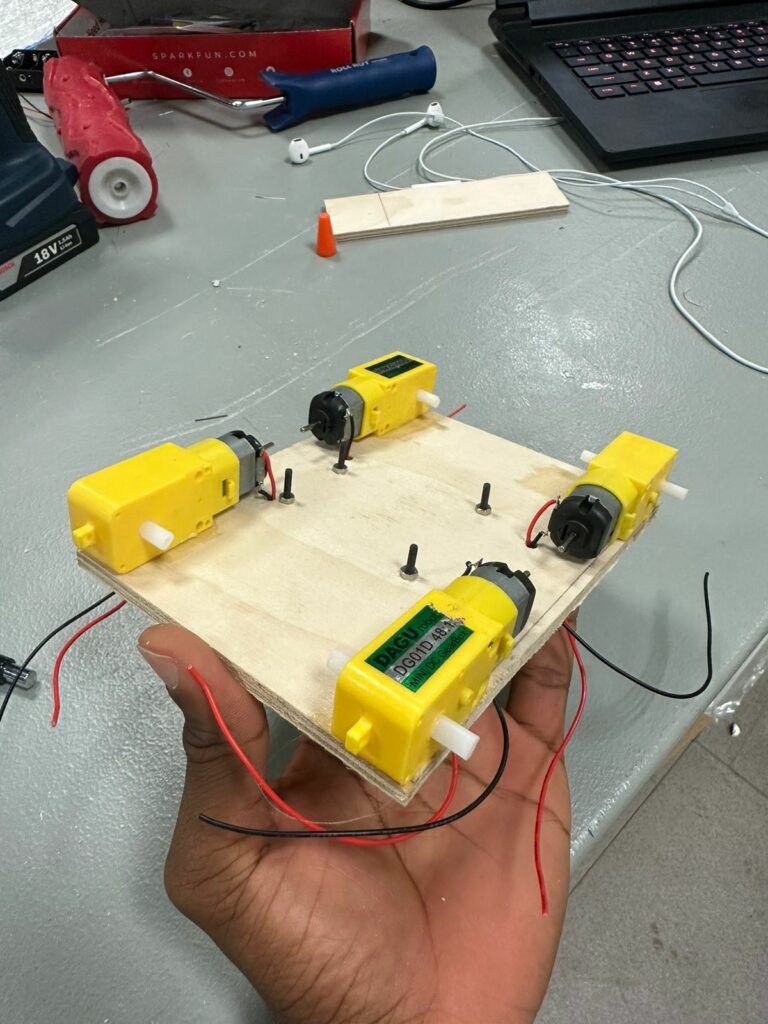

We came up with two concept designs for the car, and this is the one we decided to move ahead with. We believe we might need a motor driver, which we will connect to the Arduino Uno board so that it can control the motors.

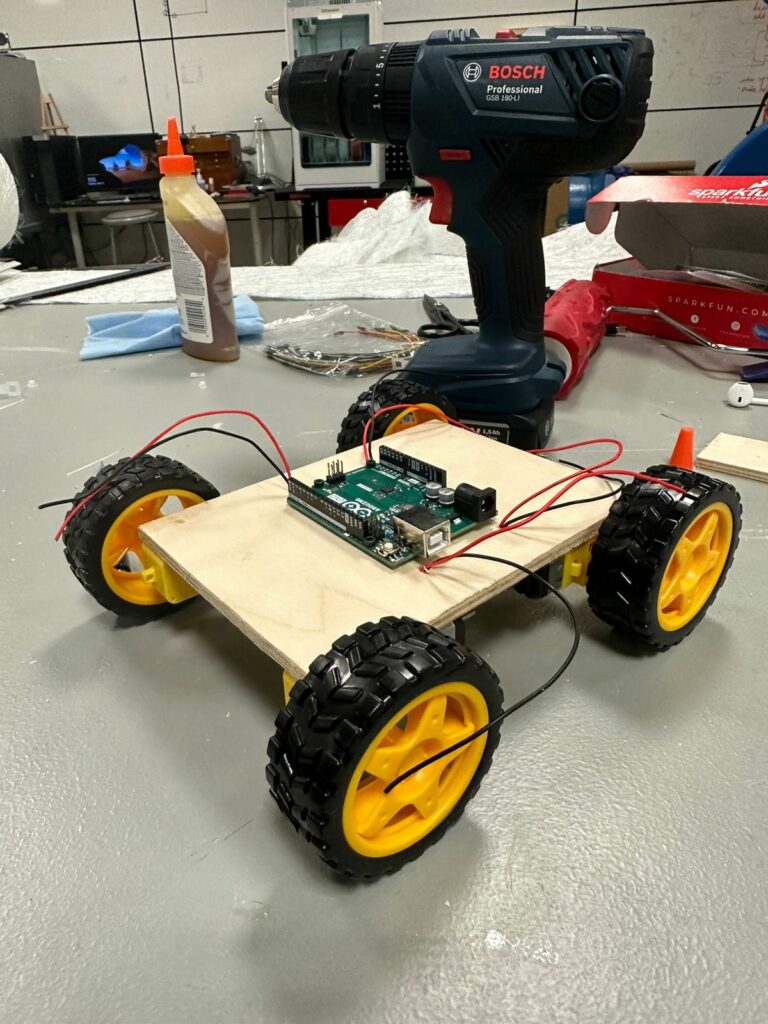

Once the code that controls the car works correctly, and if time allows, we will upgrade the car model to an acrylic body. We also plan to use a vacuum former to create a body for the car.