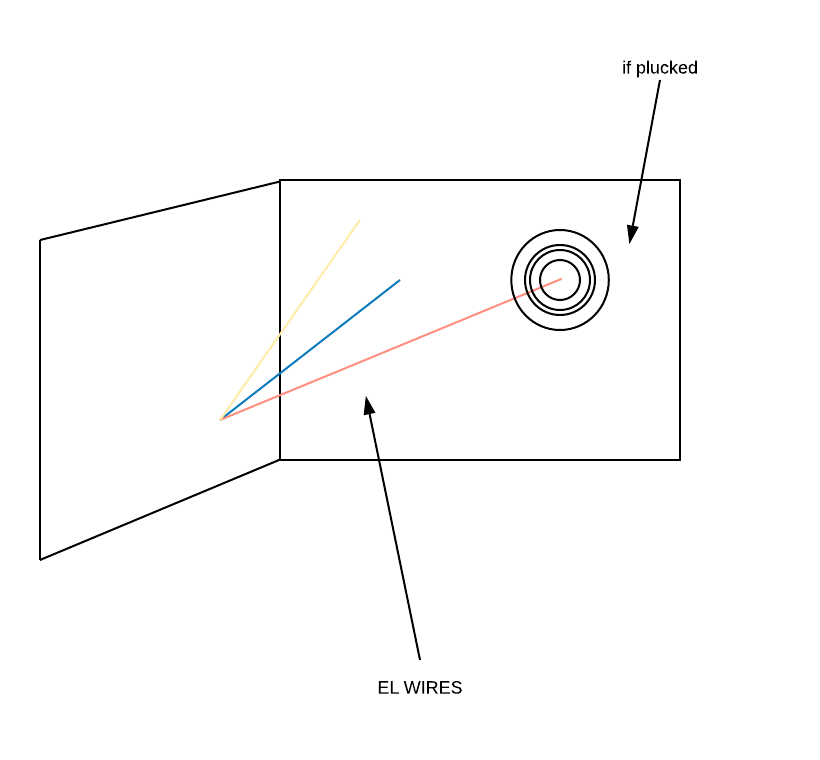

I made small changes to my final project idea, both conceptually and in terms of certain technicalities. In plain terms, I aim to design an interactive and performative art installation in which the audience's silhouettes and movements are translated into complex patterns and colors on different transparent surfaces. I will still be using a Kinect to detect the audience's movement, and I will program a Processing sketch that analyzes the data from the Kinect and translates it into patterns and colors. Conceptually, my project aims to explore issues of surveillance in our modern world, where people place a lot of trust in technology and much of our personal data is stored and recorded in the cloud. By choosing to interact with the installation, audience members give away information about their movements, which will be recorded by the Kinect. This isn't meant to imply that sharing our data is inherently good or bad, but rather to make the audience think about the larger implications of that prospect.

I believe some of the complicated or challenging aspects of the project will be:

- Separating the data from the Kinect into three sections, since I want the pattern to change from one section to the next as the user moves along the width of the screens.

- Programming the Kinect to detect more than one body at a time, rather than solid surfaces

- Assigning specific colors to each section and each pattern
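As a starting point for the first and third challenges, here is a minimal sketch in plain Java (which Processing builds on) of how a horizontal position could be mapped to one of three sections, each with its own color. Everything named here is an assumption for illustration: the `SectionMapper` class, the `sectionFor` and `colorFor` helpers, the 640-pixel frame width, and the three colors are placeholders, not the final design or any Kinect library's API.

```java
public class SectionMapper {
    // Hypothetical palette: one packed RGB color per section (left, middle, right).
    static final int[] SECTION_COLORS = {0xE63946, 0x2A9D8F, 0x457B9D};

    /**
     * Returns the 0-based index of the section that contains x,
     * where x is a horizontal position in the range [0, frameWidth).
     * Positions outside the frame are clamped to the nearest section.
     */
    static int sectionFor(float x, int frameWidth, int sectionCount) {
        if (frameWidth <= 0 || sectionCount <= 0) {
            throw new IllegalArgumentException("frameWidth and sectionCount must be positive");
        }
        int index = (int) Math.floor(x * sectionCount / frameWidth);
        return Math.max(0, Math.min(sectionCount - 1, index));
    }

    /** Looks up the placeholder color for the section that contains x. */
    static int colorFor(float x, int frameWidth) {
        return SECTION_COLORS[sectionFor(x, frameWidth, SECTION_COLORS.length)];
    }

    public static void main(String[] args) {
        int frameWidth = 640; // assumed width of the depth frame, in pixels
        float[] samples = {50f, 320f, 600f}; // made-up body positions
        for (float x : samples) {
            System.out.printf("x=%.0f -> section %d, color #%06X%n",
                    x, sectionFor(x, frameWidth, 3), colorFor(x, frameWidth));
        }
    }
}
```

In the actual Processing sketch, `x` would come from whatever position the Kinect data gives for a detected body, and the returned section index would select both the pattern and the color to draw.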