
Update: Fixed the link. As it turns out, it was broken this entire time!

(wait, so you’re telling me that no one got to play my game? 🙁)

# Introduction & Project Concept

Hey everyone, welcome back! 👋

In this blog, I’ll be talking a little bit about my midterm project. Unfortunately (or fortunately, depending on how much you want them), this blog post isn’t going to be as detailed as my usual ones. Instead, it’ll just be a very cursory overview of a few parts of the project.

So, what is my project? Well, it’s a game where you control a character using your own body (pose detection), and you have to try to fit through the target pose cutouts, similar to the ones you see in cartoons when a character goes through a wall.

Though, since the poses are detected using a webcam, it can be hard to get the distance and space required to detect the whole body (and also still clearly see the screen), so instead the cutouts are only for the upper body, which should make it accessible to and playable by more people.

# Implementation Details

## How it Works (in a Nutshell)

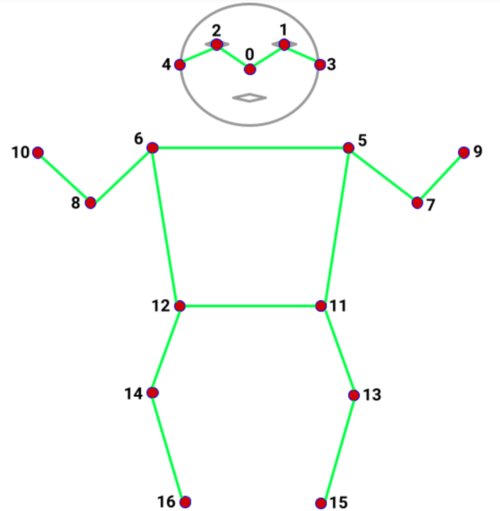

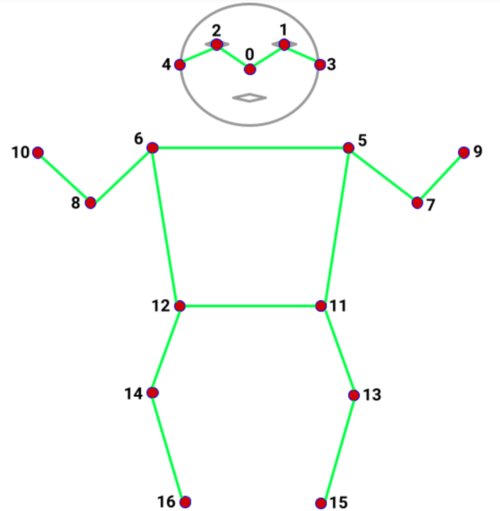

The core mechanic of the game is the player’s pose. Thankfully, this can be detected relatively easily with the help of ml5.js’s BodyPose, which, once integrated, gives you the location of each keypoint. Of the 2 models available (MoveNet and BlazePose), I chose MoveNet, and you can see the keypoints it detects below.

Obviously, the keypoints the camera can’t see won’t be very accurate at all, but the model will still report them. Thankfully, it also reports its confidence, and so you can easily filter out the keypoints which don’t have a certain threshold confidence.
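To make the filtering idea concrete, here’s a minimal sketch of it. The `filterConfidentKeypoints` helper, the sample data, and the 0.3 threshold are all made up for illustration, and it assumes each keypoint looks like `{ name, x, y, confidence }`, so adjust it to whatever your model version actually reports.

```javascript
// Hypothetical helper: keep only the keypoints the model is reasonably sure about.
// Assumes each keypoint is shaped like { name, x, y, confidence }.
function filterConfidentKeypoints(keypoints, minConfidence = 0.3) {
  return keypoints.filter((kp) => kp.confidence >= minConfidence);
}

// Made-up sample data: the ankle is off-camera, so its confidence is tiny
const sampleKeypoints = [
  { name: "nose", x: 320, y: 165, confidence: 0.92 },
  { name: "left_wrist", x: 200, y: 200, confidence: 0.71 },
  { name: "left_ankle", x: 300, y: 620, confidence: 0.04 },
];

const visibleKeypoints = filterConfidentKeypoints(sampleKeypoints, 0.3);
console.log(visibleKeypoints.map((kp) => kp.name)); // only nose and left_wrist remain
```

The threshold is a trade-off: too low and you draw jittery guesses, too high and real keypoints flicker in and out.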

In terms of why I chose MoveNet, it’s because it is the newer model, and was built for faster recognition, which is important in a game like mine (you obviously don’t want players to notice or feel a lag in their movements, which makes it harder to control and less enjoyable). To be honest, BlazePose would work fine here too, it would just be a bit slower and laggier. Also, another one of the parameters I specified was the type (SINGLEPOSE_THUNDER), which means that it should only track 1 person, and with slightly higher accuracy.

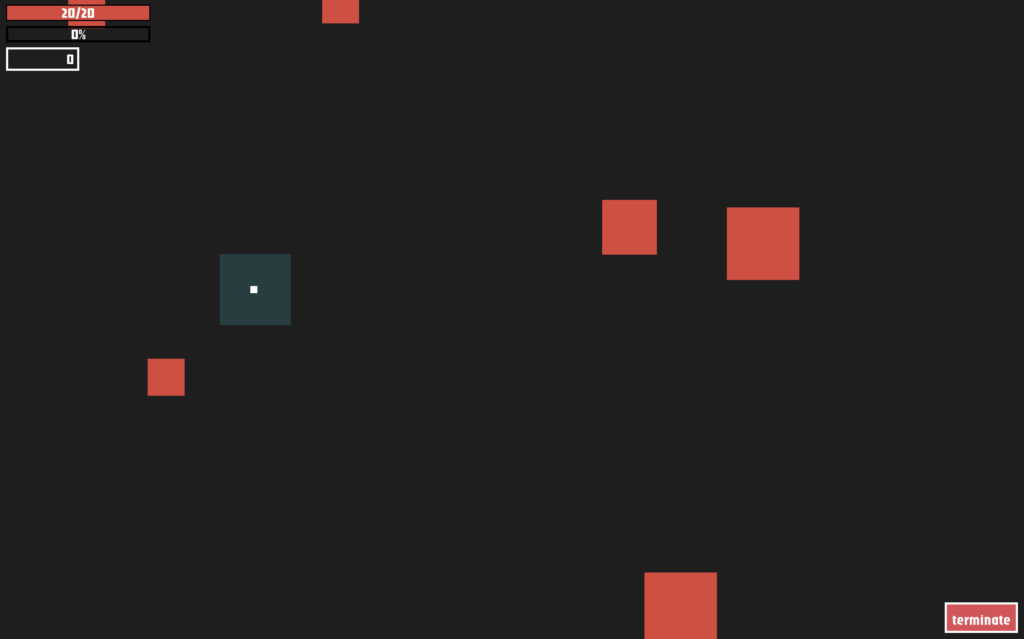

Anyways, so we got our pose, and I drew it on the screen. Then, the player sees a target pose cutout (which was generated by creating a random pose, and erasing those pixels from the surface), and tries to match it (hopefully, you don’t wanna crash into it!). Once the target is close enough, we check if the player’s pose matches the target cutout’s pose. Now, since it’s nearly (or literally) impossible to match it exactly to the subpixel (in this case, 13 decimal places smaller than a pixel!), especially for every point, we just check whether the poses are close enough, by adding a bit of margin to each point and checking if the keypoints are within that margin. If it matches, hurray! The player scores a point and the target disappears (only for another one to come out later… 😈, ahem 😇). Otherwise, the target still disappears, but instead of scoring a point, they lose a heart/life. If they’ve lost too many, the game ends.

That basically sums up the main game loop.
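The “close enough” check can be sketched roughly like this. The `posesMatch` function, the part list, and the 40px margin are illustrative assumptions (not the game’s exact code), and poses are assumed to be plain objects keyed by keypoint name.

```javascript
// Hypothetical check: every listed keypoint of the player must lie within
// `margin` pixels of the same keypoint in the target pose.
function posesMatch(playerPose, targetPose, margin, partNames) {
  return partNames.every((name) => {
    const p = playerPose[name];
    const t = targetPose[name];
    if (!p || !t) return false; // a missing keypoint counts as a miss
    return Math.hypot(p.x - t.x, p.y - t.y) <= margin;
  });
}

// Made-up poses for illustration
const targetPose = { nose: { x: 320, y: 165 }, left_wrist: { x: 200, y: 200 } };
const closePose = { nose: { x: 325, y: 170 }, left_wrist: { x: 190, y: 210 } };
const farPose = { nose: { x: 325, y: 170 }, left_wrist: { x: 100, y: 300 } };
const checkedParts = ["nose", "left_wrist"];

console.log(posesMatch(closePose, targetPose, 40, checkedParts)); // true
console.log(posesMatch(farPose, targetPose, 40, checkedParts)); // false
```

A bigger margin makes the game more forgiving, so it doubles as a difficulty knob.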

Now, this is all implemented in an OOP manner, so there are classes for each scene, and for any objects that would benefit from being a class. So, for scene management, I have an object/dictionary containing a reference to all the scene objects instantiated from their respective classes, and a field that holds a reference to the current scene (or alternatively a variable that keeps track of the current scene’s name).

Similarly, I also have an object/dictionary containing all the sounds and images to be used (which get loaded in preload, like the pose detection model). Other than that, the rest is mostly similar to other p5.js projects.
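As a rough sketch of that scene bookkeeping (the class and scene names here are hypothetical, and the real scenes would draw with p5.js rather than return strings):

```javascript
// Hypothetical scene classes, standing in for the real ones
class MenuScene {
  draw() { return "menu"; }
}
class GameScene {
  draw() { return "game"; }
}

// Holds all scenes in one dictionary and tracks the active one by name
class SceneManager {
  constructor(scenes, initialName) {
    this.scenes = scenes; // name -> scene instance
    this.currentName = initialName;
  }
  get current() {
    return this.scenes[this.currentName];
  }
  switchTo(name) {
    if (!(name in this.scenes)) throw new Error(`Unknown scene: ${name}`);
    this.currentName = name;
  }
}

const manager = new SceneManager(
  { menu: new MenuScene(), game: new GameScene() },
  "menu"
);
manager.switchTo("game");
console.log(manager.current.draw()); // "game"
```

With this shape, the main `draw()` only ever needs to call the current scene’s `draw()`, and switching scenes is a one-liner.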

## Some Stuff I Liked

This may seem small, but one of the parts I like is my random pose generation. Since it is, you know, a core part of the gameplay (the pose for the target cutouts), I had to get this done fairly well. While I initially thought of using random offsets from a set pose, or even a set of custom poses I did (which would save a lot of time), I knew this solution wouldn’t be the best, and would have several issues (for example, others will likely have a different height and body segment lengths, and be at different distances and angles to the camera). Instead, my current solution accounts for most of that, and is built from a system of constraints.

Basically, I first measure some key lengths from the player (such as the distance from the wrist to the elbow, elbow to the shoulder, shoulder to the hip, the original y-value of the nose, etc). Then I generate random coordinates for the nose, which are in the center 25% (horizontally) of the screen, and within 25px or so (vertically) of the player’s original nose height (btw, instead of generating several points for the head (eyes, ears, & nose), like the model outputs, I just treat the nose as the center of the head, which is much simpler and surprisingly has almost no drawbacks for my use case). After this, I get a random angle between -30 and 30 degrees for the shoulder midpoint, and then calculate the shoulders from there (taking into account the player’s shoulder to shoulder length, and nose to shoulder midpoint length). Similarly, for the elbows and wrists, I pick a random angle within a certain acceptable range, and use the player’s segment length to calculate the exact position.

Now, I also want to ensure that no part goes offscreen, or even within 10% of the edges, so I wrap the generation of each part in a do… while loop, which ensures that if a certain part does get generated too close to the edge, it tries again, calculating new positions from new random but constrained values. Additionally, I also don’t want any of the points to be too close to each other (which could especially be an issue with the wrists, both to each other and to the head), so that gets checked in the same loops.

But what if it is literally impossible to satisfy all these constraints for a certain part? Then the program will just keep trying and trying again, forever, which we obviously don’t want. So I keep track of the number of current attempts, and if it goes above a certain threshold (say 10), then I start from scratch, and return a completely brand new random pose, using recursion (hoping that the new pose won’t run into similar situations too many times, which thankfully is the case, as it’s quite rare for it to retry). I also keep track of the number of attempts for the completely new pose, and if it gets too high, then I just return the default pose (this is more so just to be extra safe, since thankfully, this is almost never going to happen, as there is an extreeeemeeellyyyy small chance that it bails out and fails trying to return a completely new pose, that many times).

So, that’s it! Despite being really quite simple, it’s pretty effective. You can see the code for it, and try it out in the sketch, below (it’s actually a bit unnerving and intriguing how we can associate actions and feelings, just by looking at random poses made with simple rules).

```javascript
// Initialised with some default values (roughly my measurements)
let playerBodyInfo = {
  "nose y-value": 165,
  "nose-shoulder midpoint length": 50,
  "shoulder midpoint-hip midpoint length": 150,
  "shoulder-shoulder length": 90,
  "shoulder-elbow length": 70,
  "elbow-wrist length": 60
}

// lots of other code ... (not relevant for this)

// method within Game class
generateRandomPose(attempts = 0) {
  // Constraints / Ideas / Assumptions:
  // - Nose should be in the middle 25% (horizontally) of the screen, and near the height of the player's nose originally (similar y-value)
  // - 0 deg <= midpoint-shoulder-elbow angle (inside one) <= 180 deg (basically, the elbow should be outside the body, extending upwards)
  // - 45 deg <= shoulder-elbow-wrist angle (inside one) <= 180 deg
  // - All parts should be within the center 80% (the nose and shoulders don't need to be tested, since they can't reach there anyways)
  // - Also, parts shouldn't be too close to each other (realistically the thing we need to check for is wrists to each other and the nose)
  // - First generate nose position (center of head), then shoulders, then so on.

  let outerMargin = 0.1; // 10%, so points should be in the middle 80% of the detection area (webcam feed)
  let minX = webcamVideo.width * outerMargin
  let maxX = webcamVideo.width * (1 - outerMargin)
  let minY = webcamVideo.height * outerMargin
  let maxY = webcamVideo.height * (1 - outerMargin)

  let partAttempts, leftShoulderToElbowAngle, rightShoulderToElbowAngle, leftElbowToWristAngle, rightElbowToWristAngle

  // Initialised with some default values (roughly my measurements)
  let pose = {
    nose: {x: 320, y: 165},
    left_shoulder: {x: 275, y: 215},
    right_shoulder: {x: 365, y: 215},
    left_hip: {x: 295, y: 365},
    right_hip: {x: 345, y: 365},
    left_elbow: {x: 220, y: 255},
    right_elbow: {x: 420, y: 255},
    left_wrist: {x: 200, y: 200},
    right_wrist: {x: 440, y: 200}
  }

  // If it takes too many attempts to generate a pose, just give up and output the default pose
  if (attempts > 100) {
    print('Pose generation took too many attempts, returning default pose.')
    return pose
  }

  // Nose
  pose.nose.x = random(0.375, 0.625) * webcamVideo.width // center 25%
  pose.nose.y = random(-25, 25) + playerBodyInfo["nose y-value"] // y-value +- 25px of player's nose height

  // Shoulders
  // The angle from the nose to the shoulder's midpoint with origin below (think of a unit circle, but rotated clockwise 90 deg)
  // (also equivalently, the angle from the left to right shoulder, on a normal unit circle). From -30 to 30 degrees
  let shoulderAngle = random(-PI/6, PI/6)
  let shoulderMidpoint = {
    x: pose.nose.x + sin(shoulderAngle) * playerBodyInfo["nose-shoulder midpoint length"],
    y: pose.nose.y + cos(shoulderAngle) * playerBodyInfo["nose-shoulder midpoint length"]
  }
  pose.left_shoulder.x = shoulderMidpoint.x - cos(shoulderAngle) * 0.5 * playerBodyInfo["shoulder-shoulder length"]
  pose.left_shoulder.y = shoulderMidpoint.y + sin(shoulderAngle) * 0.5 * playerBodyInfo["shoulder-shoulder length"]
  pose.right_shoulder.x = shoulderMidpoint.x + cos(shoulderAngle) * 0.5 * playerBodyInfo["shoulder-shoulder length"]
  pose.right_shoulder.y = shoulderMidpoint.y - sin(shoulderAngle) * 0.5 * playerBodyInfo["shoulder-shoulder length"]

  // Hips
  // The hip's midpoint is really just the shoulder's midpoint, but extended further, so we can calculate it in a similar fashion
  // [Nvm, disabled for now] Added 50 in the end, to ensure it's long enough (I'm not using the hips for accuracy or points, but rather just to draw the outline)
  let hipMidpoint = {
    x: pose.nose.x + sin(shoulderAngle) * (playerBodyInfo["nose-shoulder midpoint length"] + playerBodyInfo["shoulder midpoint-hip midpoint length"] + 50*0),
    y: pose.nose.y + cos(shoulderAngle) * (playerBodyInfo["nose-shoulder midpoint length"] + playerBodyInfo["shoulder midpoint-hip midpoint length"] + 50*0) // (as above ^)
  }
  pose.left_hip.x = hipMidpoint.x - cos(shoulderAngle) * 0.5 * playerBodyInfo["shoulder-shoulder length"]
  pose.left_hip.y = hipMidpoint.y + sin(shoulderAngle) * 0.5 * playerBodyInfo["shoulder-shoulder length"]
  pose.right_hip.x = hipMidpoint.x + cos(shoulderAngle) * 0.5 * playerBodyInfo["shoulder-shoulder length"]
  pose.right_hip.y = hipMidpoint.y - sin(shoulderAngle) * 0.5 * playerBodyInfo["shoulder-shoulder length"]

  // Elbows
  partAttempts = 0;
  do {
    if (++partAttempts > 10) return this.generateRandomPose(attempts + 1); // If it takes too many attempts to generate this part, just give up and start from scratch

    // From 90 to 270 (-90) degrees on a normal unit circle (basically 0 to 180 degrees, with the left half of a circle (imagine the unit circle rotated anticlockwise 90 deg))
    leftShoulderToElbowAngle = random(PI/2, 3 * PI/2) + shoulderAngle
    pose.left_elbow.x = pose.left_shoulder.x + cos(leftShoulderToElbowAngle) * playerBodyInfo["shoulder-elbow length"]
    pose.left_elbow.y = pose.left_shoulder.y - sin(leftShoulderToElbowAngle) * playerBodyInfo["shoulder-elbow length"]
  } while (
    minX > pose.left_elbow.x || pose.left_elbow.x > maxX || // Check if it's within the acceptable horizontal range
    minY > pose.left_elbow.y || pose.left_elbow.y > maxY // Check if it's within the acceptable vertical range
  );

  partAttempts = 0;
  do {
    if (++partAttempts > 10) return this.generateRandomPose(attempts + 1); // If it takes too many attempts to generate this part, just give up and start from scratch

    // From 270 (-90) to 90 degrees on a normal unit circle (basically 0 to 180 degrees, with the right half of a circle)
    rightShoulderToElbowAngle = random(-PI/2, PI/2) + shoulderAngle
    pose.right_elbow.x = pose.right_shoulder.x + cos(rightShoulderToElbowAngle) * playerBodyInfo["shoulder-elbow length"]
    pose.right_elbow.y = pose.right_shoulder.y - sin(rightShoulderToElbowAngle) * playerBodyInfo["shoulder-elbow length"]
  } while (
    minX > pose.right_elbow.x || pose.right_elbow.x > maxX || // Check if it's within the acceptable horizontal range
    minY > pose.right_elbow.y || pose.right_elbow.y > maxY // Check if it's within the acceptable vertical range
  );

  // Wrists
  partAttempts = 0;
  do {
    if (++partAttempts > 10) return this.generateRandomPose(attempts + 1); // If it takes too many attempts to generate this part, just give up and start from scratch

    // random(PI/4, PI) // From 45 to 180 degrees on a normal unit circle. Will be rotated to account for the elbow's existing rotation
    leftElbowToWristAngle = random(1.25*PI, 2*PI) + leftShoulderToElbowAngle
    pose.left_wrist.x = pose.left_elbow.x + cos(leftElbowToWristAngle) * playerBodyInfo["elbow-wrist length"]
    pose.left_wrist.y = pose.left_elbow.y - sin(leftElbowToWristAngle) * playerBodyInfo["elbow-wrist length"]
  } while (
    minX > pose.left_wrist.x || pose.left_wrist.x > maxX || // Check if it's within the acceptable horizontal range
    minY > pose.left_wrist.y || pose.left_wrist.y > maxY || // Check if it's within the acceptable vertical range
    dist(pose.nose.x, pose.nose.y, pose.left_wrist.x, pose.left_wrist.y) < 50 // Check if the wrist is too close to the nose
  );

  partAttempts = 0;
  do {
    if (++partAttempts > 10) return this.generateRandomPose(attempts + 1); // If it takes too many attempts to generate this part, just give up and start from scratch

    // Offset of 0 to 135 degrees from the upper arm's direction (so the inside elbow angle stays between 45 and 180 degrees)
    rightElbowToWristAngle = random(0, 3/4 * PI) + rightShoulderToElbowAngle
    pose.right_wrist.x = pose.right_elbow.x + cos(rightElbowToWristAngle) * playerBodyInfo["elbow-wrist length"]
    pose.right_wrist.y = pose.right_elbow.y - sin(rightElbowToWristAngle) * playerBodyInfo["elbow-wrist length"]
  } while (
    minX > pose.right_wrist.x || pose.right_wrist.x > maxX || // Check if it's within the acceptable horizontal range
    minY > pose.right_wrist.y || pose.right_wrist.y > maxY || // Check if it's within the acceptable vertical range
    dist(pose.nose.x, pose.nose.y, pose.right_wrist.x, pose.right_wrist.y) < 50 || // Check if the wrist is too close to the nose
    dist(pose.left_wrist.x, pose.left_wrist.y, pose.right_wrist.x, pose.right_wrist.y) < 50 // Check if the wrist is too close to the other wrist
  );

  return pose;
}
```

(Click inside the sketch, then press any key to generate a new random pose)
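The generator relies on `playerBodyInfo` holding the player’s own measurements, and the measuring step isn’t shown here. As a rough idea of how it could look, here’s a minimal sketch: `measureBodyInfo` and its helpers are hypothetical (not the game’s actual code), it assumes a detected pose keyed by keypoint name, and averaging the left and right arms is just one possible choice.

```javascript
// Hypothetical helpers for measuring segment lengths from a detected pose
const distanceBetween = (a, b) => Math.hypot(a.x - b.x, a.y - b.y);
const midpointOf = (a, b) => ({ x: (a.x + b.x) / 2, y: (a.y + b.y) / 2 });

// Builds an object with the same keys as playerBodyInfo.
// Assumes `pose` is keyed by keypoint name, e.g. pose.left_elbow = { x, y }.
function measureBodyInfo(pose) {
  const shoulderMid = midpointOf(pose.left_shoulder, pose.right_shoulder);
  const hipMid = midpointOf(pose.left_hip, pose.right_hip);
  return {
    "nose y-value": pose.nose.y,
    "nose-shoulder midpoint length": distanceBetween(pose.nose, shoulderMid),
    "shoulder midpoint-hip midpoint length": distanceBetween(shoulderMid, hipMid),
    "shoulder-shoulder length": distanceBetween(pose.left_shoulder, pose.right_shoulder),
    // Average both arms, so one badly detected arm matters less
    "shoulder-elbow length":
      (distanceBetween(pose.left_shoulder, pose.left_elbow) +
        distanceBetween(pose.right_shoulder, pose.right_elbow)) / 2,
    "elbow-wrist length":
      (distanceBetween(pose.left_elbow, pose.left_wrist) +
        distanceBetween(pose.right_elbow, pose.right_wrist)) / 2,
  };
}

// Using the default pose from the generator as sample input
const measuredInfo = measureBodyInfo({
  nose: { x: 320, y: 165 },
  left_shoulder: { x: 275, y: 215 },
  right_shoulder: { x: 365, y: 215 },
  left_hip: { x: 295, y: 365 },
  right_hip: { x: 345, y: 365 },
  left_elbow: { x: 220, y: 255 },
  right_elbow: { x: 420, y: 255 },
  left_wrist: { x: 200, y: 200 },
  right_wrist: { x: 440, y: 200 },
});
console.log(measuredInfo);
```

In practice you’d probably want to measure while the player holds a neutral pose, and only trust keypoints with decent confidence.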

Another part I liked was the illusion of perspective. So basically, I had to rush this project, so I thought, “Fine, I’ll do it in 2D, that’s much simpler”, and it is, but I still wanted that 3D perspective effect 😅. After playing around for a bit in a new temporary sketch, I found out that if I scale everything from the center, then the 2D sketch appears to have (a very basic, rudimentary, and probably physically inaccurate, but nonetheless, visible) perspective! While the best and fully implemented version is in the final game, you can see that temporary sketch below.

(Click inside the sketch, then press r to restart, or any other key to toggle between the first and second test (now basically just uncoloured vs coloured)… oh also, please, you know what I mean by “any other key”, so don’t press the power button or something 😂)
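The “scale everything from the center” trick boils down to very little math. Here’s a minimal sketch of it, where `scaleFromCenter` and `scaleForDepth` are hypothetical helpers, and the `focalLength / (focalLength + depth)` formula is the usual perspective-division idea that I’m assuming for illustration (the game’s actual scaling may differ).

```javascript
// Hypothetical helper: move a point towards/away from the center by `scale`
function scaleFromCenter(point, scale, center) {
  return {
    x: center.x + (point.x - center.x) * scale,
    y: center.y + (point.y - center.y) * scale,
  };
}

// Assumed mapping from "how far away" to "how small": things twice as far
// (relative to focalLength) appear half the size
function scaleForDepth(depth, focalLength) {
  return focalLength / (focalLength + depth);
}

const screenCenter = { x: 960, y: 540 }; // center of a 1920x1080 canvas
const wallCorner = { x: 1160, y: 640 };

const farScale = scaleForDepth(500, 500); // 0.5
console.log(scaleFromCenter(wallCorner, farScale, screenCenter)); // { x: 1060, y: 590 }
console.log(scaleFromCenter(wallCorner, scaleForDepth(0, 500), screenCenter)); // unchanged at depth 0
```

In p5.js, the same effect can be had for whole drawings by translating to the center, calling `scale()`, and translating back, instead of transforming each point by hand.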

## Some Issues I Encountered

Oh boy oh boy, did I face several issues (as expected). Now, I can’t go over every issue I faced, so I’ll just mention a couple.

The first one I’ll mention is actually the random pose generator mentioned above! Yep, while I did end up getting it working (and well enough that I liked it and included it in my good parts section), and while it is conceptually pretty simple, it still took a fair bit of time (wayy longer than I thought it would, or even still think it needs to), and was a bit tricky, particularly with working out the angles and correct sin and cos transformations (oh, I messed up the signs more than once 😅). In fact, I actually made the sketch above to quickly test out my pose generation! I had written it all “blind” (aka without testing in between), and since it was fairly straightforward, I was confident it would work, but something nagged me to just try it once before seeing it in the main game, and… yep, it didn’t work. *sighs*. I had to meticulously comment out and retry each portion and work back to a full solution, bit by bit. Fortunately it wasn’t too conceptually difficult (more time consuming than hard, but even the basic trig was a little tough at 3 AM), so I succeeded.

Another issue I faced was that the target cutouts didn’t have any actual cutouts(!), which is, you know, a major issue. Again, I broke out a new temporary sketch to test out my logic, simplifying stuff and working things out. It turned out to be a simple issue of using the screen’s width instead of the webcam’s width, and a few other things. In case you’re wondering, yep, the screen and webcam not only have different resolutions, but also different aspect ratios! It was a bit of a pain initially to get them working seamlessly together, but now they do. The reason behind this is that some webcams (particularly those on laptops) give a 4:3 image for some reason, and since I need to run a machine learning model (MoveNet, for the poses), the resolution is usually kept low (otherwise it would take a LOT longer to detect the pose, which would break the game). I wanted the game’s output, on the other hand, to be a crisp (scalable up or down but ideally) 1920×1080, 16:9 aspect ratio, hence the mismatch.

(It’s not really interactive (besides f for fullscreen), so don’t bother ;) )
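To illustrate the webcam vs screen mismatch, here’s one way the coordinate conversion could be sketched. This is an assumption-heavy example: `webcamToScreen` is a hypothetical helper, the 640×480 webcam size is just a typical 4:3 value, and it uses a “fit and center” mapping, which is only one of several reasonable choices (not necessarily what the game does).

```javascript
// Hypothetical helper: map a point from webcam space to screen space,
// scaling the webcam image uniformly so it fits, then centering it
function webcamToScreen(point, webcamSize, screenSize) {
  const scale = Math.min(
    screenSize.width / webcamSize.width,
    screenSize.height / webcamSize.height
  );
  // Leftover space is split evenly on both sides
  const offsetX = (screenSize.width - webcamSize.width * scale) / 2;
  const offsetY = (screenSize.height - webcamSize.height * scale) / 2;
  return {
    x: offsetX + point.x * scale,
    y: offsetY + point.y * scale,
  };
}

const webcamSize = { width: 640, height: 480 }; // assumed 4:3 feed
const screenSize = { width: 1920, height: 1080 }; // 16:9 output

// The center of the webcam feed lands on the center of the screen
console.log(webcamToScreen({ x: 320, y: 240 }, webcamSize, screenSize)); // { x: 960, y: 540 }
// The top-left corner is pushed in by the side bars
console.log(webcamToScreen({ x: 0, y: 0 }, webcamSize, screenSize)); // { x: 240, y: 0 }
```

The bug described above is what you get when a conversion like this is skipped somewhere: a position computed against the screen’s width gets treated as if it were in webcam space (or vice versa), and things end up in the wrong place.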

Well anyways, that’s it for now (I think I might’ve made it a bit longer and more detailed than originally thought 😅), so without further ado, I present, my ✨midterm project✨! (I should really have a name for it, but I can’t decide 🤦♂️)

# Final Result – Midterm Project

While I would normally embed the sketch here, I think you should really open it full screen in a new tab, so follow this link.

# Additional Thoughts & Room for Improvement

With any project, there’s always room for improvement. But for this one specifically, there’s huge room for improvement 😅 (mainly because I keep seeing new features to implement or ideas to improve it). As usual, there are a bunch of things I didn’t get time to implement or do, ranging from better graphics and polish, to levels and features. I particularly wanted to implement a character that actually goes ahead of you (that you are trying to catch) that “breaks” the walls (causes the cutouts), and also a level that takes place in a top secret laboratory setting, where the character is actually stealing some top sneaky info, and so you have to stop them. I kind of really want to continue this and flesh it out (but I also know it’ll not be of much use, and besides, p5 isn’t the best platform to implement this idea, so why waste time here… so anyways *cuts to me working on this months into the future* 😅).

Well, I’m really glad you went through the entire post (and definitely didn’t skip till the final result), but regardless, thanks a lot for reading it (does anyone even read these, or am I just talking to myself? ._. ). Unfortunately, that’s all I’ve got time for, so we’ll have to end it here. Until next time, and enjoy your break!

(psst, do you know what time his watch shows? Ok, fine, it isn’t wearing one, but if it did, it would show)

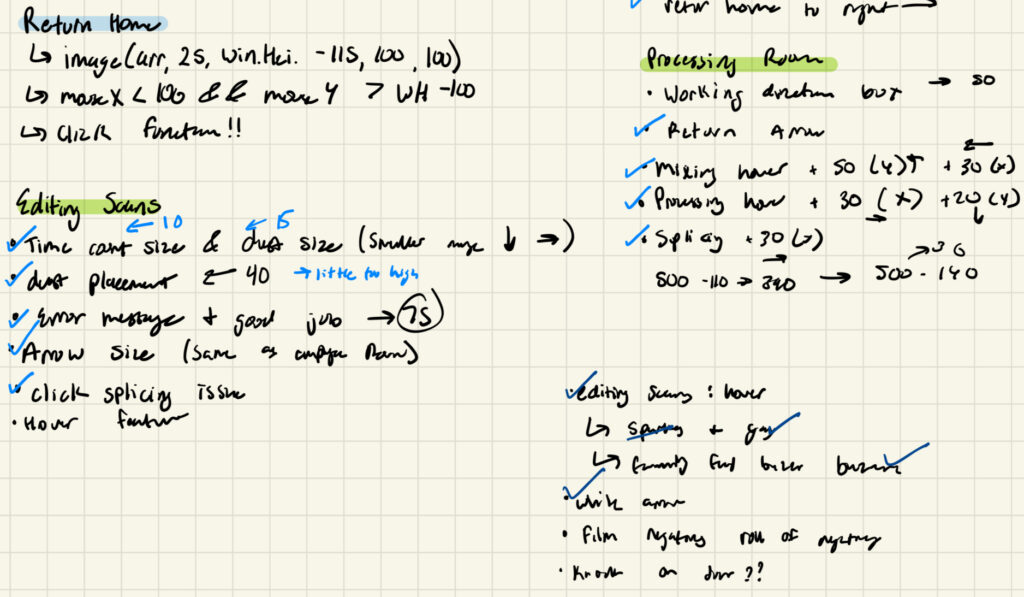

Please excuse my awful handwriting, it was nearing 3 AM when I wrote this…
