Concept:

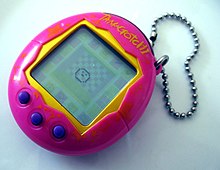

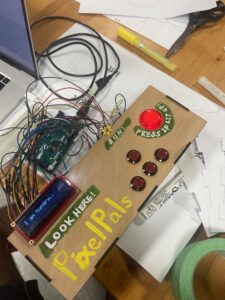

This project follows my initial idea of making a digital petting zoo called PixelPals. In this petting zoo, you can approach different animals and interact with each of them in ways that suit their needs and personalities. The theme and design are quite adorable, aiming to create an atmosphere of comfort and fun. The background music comes from the Nintendo game Animal Crossing, which has a feel very similar to this project. The project also includes a dedicated control box.

https://editor.p5js.org/Yupuuu/full/2L2O-pC8e

Challenges and Highlights:

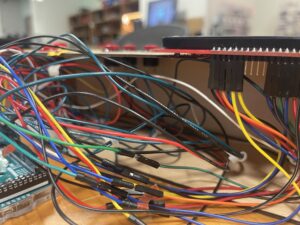

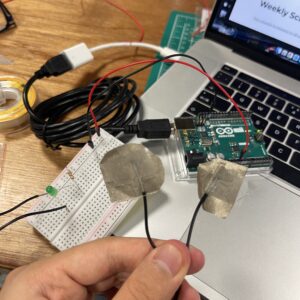

The most difficult challenge I had was the serial communication. It took me some time to understand how serial communication truly works so that I could send more complex messages. This was especially difficult because I had to deal with both ends of the communication, the Arduino and p5.js, at the same time. Designing the levels of the experience was also hard: I had to decide which levels each animal uses, how to differentiate the animals from one another, and so on. All of this took me a lot of time to figure out. On top of that, the circuit itself sometimes did not work, and I had to try fixes such as resetting the Arduino or rewiring components to get it working again.
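To illustrate what sending "more complex messages" over serial can involve, here is a minimal sketch of one common approach: each side sends one comma-separated line per update, and the receiver splits it into fields and rejects anything incomplete. The message format, the field order (four button states plus a light reading), and the function names `parseArduinoLine` and `buildReply` are assumptions for illustration, not the project's actual protocol. In a p5.js sketch, the parser would be called on each complete line read from the serial port.

```javascript
// Hypothetical line-based protocol (an assumption, not the project's code):
// Arduino -> p5.js: "b0,b1,b2,b3,light\n" (four buttons as 0/1, then 0-1023)
// p5.js -> Arduino: "l0,l1,l2,l3,lcd text\n" (button LEDs, then LCD message)

// Parse one line from the Arduino; return null if it is incomplete or garbled.
function parseArduinoLine(line) {
  const fields = line.trim().split(",");
  if (fields.length !== 5) {
    return null; // a partial line, e.g. read mid-transmission
  }
  if (fields.some((f) => !/^\d+$/.test(f))) {
    return null; // empty or non-numeric field
  }
  const values = fields.map(Number);
  return {
    buttons: values.slice(0, 4).map((v) => v === 1),
    light: values[4],
  };
}

// Build the reply for the Arduino: which button LEDs to light and what the
// LCD should show. Commas in the text are replaced with spaces so they
// cannot be mistaken for field separators on the Arduino side.
function buildReply(ledStates, lcdText) {
  const leds = ledStates.map((on) => (on ? "1" : "0")).join(",");
  return `${leds},${lcdText.replace(/,/g, " ")}\n`;
}
```

Rejecting incomplete lines matters because serial data can arrive mid-message, for example right after the Arduino resets, and a half-parsed message would otherwise be treated as real button presses.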

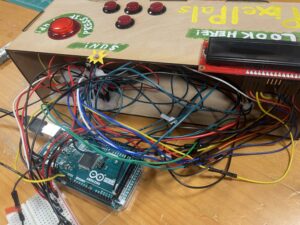

Another problem I ran into was wiring the circuit and making the control panel. The control panel turned out smaller than I expected when I laser-cut it, which made it hard to fit everything into the enclosed box. In fact, I still cannot fit all the wires inside it. One advantage of keeping things outside, though, is that I can easily spot the problem if anything goes wrong. Still, this is a lesson learnt: always make the box bigger than I think it needs to be.

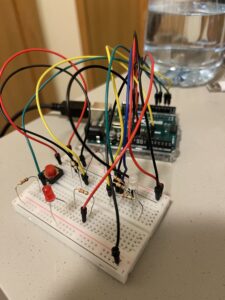

I am especially proud of using many different features in this single project, including a state machine, sprite sheets, and colliders. All these parts work smoothly together to make this project possible. I am also pleased with how I used four buttons to complete several different tasks; deciding how to allocate these buttons was an important part of implementing this project.
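The combination of a state machine, colliders, and four multi-purpose buttons described above can be sketched as follows, assuming rectangular (axis-aligned) colliders and three invented states: `explore`, `nearAnimal`, and `interact`. The state names, the `BUTTON_MAP` table, the `step` function, and the actions are hypothetical; the point is that the same four buttons mean different things depending on the current state, and a collider check decides when the player is close enough to an animal.

```javascript
// Axis-aligned bounding-box (AABB) overlap: one common way to implement
// rectangular colliders. Each box is { x, y, w, h } with (x, y) at top-left.
function overlaps(a, b) {
  return a.x < b.x + b.w && a.x + a.w > b.x && a.y < b.y + b.h && a.y + a.h > b.y;
}

// Hypothetical per-state roles for the four buttons (index 0-3).
// null means the button does nothing in that state.
const BUTTON_MAP = {
  explore: ["left", "right", "up", "down"],
  nearAnimal: ["left", "right", "greet", null],
  interact: ["feed", "pet", "play", "leave"],
};

const SPEED = 5; // hypothetical movement step in pixels

// Return the next game state after a button press, without mutating `game`.
function step(game, buttonIndex) {
  const action = BUTTON_MAP[game.state][buttonIndex];
  if (!action) {
    return game; // unused button in this state
  }
  const player = { ...game.player };
  let state = game.state;
  if (action === "left") player.x -= SPEED;
  else if (action === "right") player.x += SPEED;
  else if (action === "up") player.y -= SPEED;
  else if (action === "down") player.y += SPEED;
  else if (action === "greet") state = "interact";
  else if (action === "leave") state = "explore";
  // Outside an interaction, the colliders decide whether an animal is close.
  if (state !== "interact") {
    const touching = game.animals.some((animal) => overlaps(player, animal));
    state = touching ? "nearAnimal" : "explore";
  }
  return { ...game, player, state, lastAction: action };
}
```

Keeping the button roles in one table makes it easy to see, for each state, which buttons should be lit up and which do nothing.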

Arduino code: https://drive.google.com/drive/folders/1tKOEiyYHFteIk_WYt9-tBES8K4-c9bhL?usp=sharing

VIDEO: IMG_1755

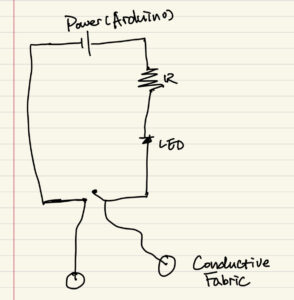

Control Panel: Arduino Mega, LCD Display, Buttons, Light Sensor

User Testing

The current control panel is a result of user testing. Originally, there were no instructions at all. This was especially confusing for the light sensor: people who did not know what it was had no idea what it could do or how it related to the owl. Therefore, I added a sun shape to show that the sensor represents the sun and that you need to cover this sun for the owl to sleep. Following this, I added instructions to the control panel. However, since this is meant to be a relaxing experience, I did not want the player to spend a lot of time reading them. In the end, I used some simple illustrations and words to guide the player.
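The sun-covering interaction can be sketched as a threshold on the light reading. This version uses two thresholds (hysteresis) so the owl does not flicker between asleep and awake when a hand only partly covers the sensor. The function name `updateOwlAsleep`, the threshold values, and the assumption that covering the sensor lowers the reading are all illustrative, since the actual behavior depends on how the sensor is wired and on the room's lighting.

```javascript
// Hypothetical thresholds for a 0-1023 analog light reading. This assumes
// covering the sensor lowers the reading; real values depend on the wiring
// and room lighting and would need calibrating.
const DARK_BELOW = 200;
const BRIGHT_ABOVE = 300;

// Hysteresis: the owl falls asleep only when the reading drops below
// DARK_BELOW and wakes only when it rises above BRIGHT_ABOVE. Readings in
// between keep the current state, so small fluctuations cause no flicker.
function updateOwlAsleep(isAsleep, lightReading) {
  if (!isAsleep && lightReading < DARK_BELOW) {
    return true; // "sun" covered: fall asleep
  }
  if (isAsleep && lightReading > BRIGHT_ABOVE) {
    return false; // "sun" uncovered: wake up
  }
  return isAsleep;
}
```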

(After the showcase) Interestingly, almost nobody followed the instructions until I told them to. They liked pushing the buttons even when they were not lit up, and they never read what was on the LCD screen. The only people who read them were kids; perhaps this game was meant for kids. This really showed me the gap between the designed experience and the actual experience people have. It reminds me of one of our readings, which argues that interactive media artists should let the audience explore on their own. Admittedly, my project is not a good example of this, since letting the audience explore it freely might break it… Overall, however, people understood what my project was about and laughed when they actually read the text on the LCD screen!

Reflection & Improvements

This project was very interesting. It prompted me to combine all the skills I learnt in this class with fabrication skills, and it is very satisfying to see the final product. Reflecting on this project, I realized the power of physical computing and how hardware and software can work together to create unique experiences. One of my initial ideas was to create an animal-like controller that could act like an animal. However, that would have required much more physical construction, which I did not have enough time for this time. In future improvements, this could be added, and more interactions could be added to more animals in the zoo. Some customization functions could also be added to make the experience more interesting and personal.