Source

The full source code and accompanying documentation are available on GitHub.

Concept

This project is an interactive media piece that combines audio, visuals, and interactive elements to share the personal stories of students who faced repercussions for their politics. Using both digital and physical interfaces, users can explore these narratives at their own pace and in their own manner.

My motivation for choosing this cause was to highlight how much the audience, as fellow international students, has in common with the students in these stories, and to elicit their empathy.

How It Works

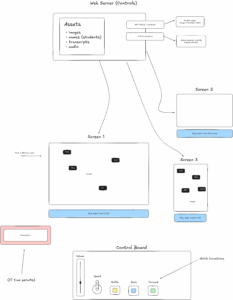

The implementation consists of three main components working together:

1. Client Interface: A browser-based application built with p5.js that handles the visualization, audio playback, and user interaction.

2. Server Backend: A FastAPI backend that serves content and manages WebSocket connections for real-time control.

3. Physical Controls: An Arduino-based controller that provides tangible ways to interact with the digital content.

When a user accesses the application, they’re presented with a multimedia canvas that displays images related to a student’s story while playing their audio narrative. As the audio plays, transcribed text appears dynamically on screen, visualizing the spoken words. Users can control the experience either through on-screen interactions or using the physical Arduino controller.
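The timed text reveal described above can be sketched as a lookup against word-level timestamps. This is only an illustration of the logic, written in Python for brevity rather than in the client's p5.js. It assumes the transcript stores a start time for each word, which the project may or may not do, and `TRANSCRIPT` and `visible_words` are hypothetical names with placeholder data.

```python
from bisect import bisect_right

# Placeholder transcript: (start time in seconds, word).
# Both the word-level timing and the values are assumptions for illustration.
TRANSCRIPT = [
    (0.0, "first"),
    (0.4, "second"),
    (0.9, "third"),
    (1.5, "fourth"),
    (1.7, "fifth"),
]

# Start times are kept in a separate sorted list so they can be binary-searched.
START_TIMES = [start for start, _ in TRANSCRIPT]


def visible_words(current_time: float) -> list[str]:
    """Return every word whose start time is at or before current_time."""
    count = bisect_right(START_TIMES, current_time)
    return [word for _, word in TRANSCRIPT[:count]]
```

Calling `visible_words` once per frame with the audio's current playback position would yield the words to draw. Because the lookup depends only on the playback position, pausing or changing the playback speed needs no extra bookkeeping.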

Interaction Design

The interaction design prioritizes intuitive exploration and meaningful engagement:

– Starting the Experience: Users simply click anywhere on the screen to begin

– Content Navigation: Pressing a physical button or clicking on the screen transitions to the next story

– Playback Controls:

– A physical switch pauses/resumes the audio

– Potentiometers adjust volume and playback speed

– On-screen interactions mirror these controls for users without the physical interface
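As a rough sketch of how potentiometer readings might become playback parameters, the snippet below linearly maps a raw reading onto a volume and a playback rate. It assumes a 10-bit analog reading (0 to 1023), as produced by many Arduino boards. The 0.5x to 2.0x speed range and the function names are illustrative choices, not values taken from the project.

```python
ADC_MAX = 1023  # assumption: 10-bit analog reading, 0-1023


def scale(reading: int, low: float, high: float) -> float:
    """Linearly map a raw reading in [0, ADC_MAX] onto [low, high]."""
    clamped = max(0, min(ADC_MAX, reading))  # guard against out-of-range input
    return low + (high - low) * clamped / ADC_MAX


def to_volume(reading: int) -> float:
    """Map a reading to a volume between 0.0 (silent) and 1.0 (full)."""
    return scale(reading, 0.0, 1.0)


def to_playback_rate(reading: int) -> float:
    """Map a reading to a playback rate; the 0.5x-2.0x range is illustrative."""
    return scale(reading, 0.5, 2.0)
```

An on-screen slider could feed the same two functions, so both input paths would share one mapping.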

The design deliberately minimizes explicit instructions, encouraging exploration and discovery. As found during user testing, providing just a small amount of context greatly improved the experience while still allowing for personal discovery. Users who spent more time interacting with the piece often discovered deeper layers of meaning as they engaged with multiple stories.

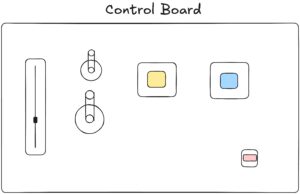

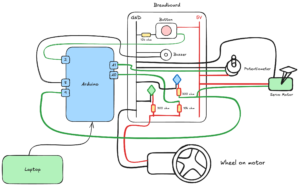

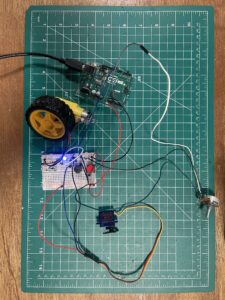

Arduino Implementation

The Arduino controller serves as a physical interface to the digital content, creating a more tangible connection to the stories. The circuit includes:

– A toggle switch for pausing/resuming content

– Two push buttons for transitioning between stories

– Three potentiometers for adjusting various playback parameters

The Arduino code continuously monitors these inputs. Whenever a change is detected, it sends the server a compact binary structure containing the state of all controls over a serial connection.
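To make the idea of a compact binary structure concrete, here is a minimal sketch of how the server side could decode one packet with Python's `struct` module. The byte layout is an assumption, not the project's actual format: one byte for the switch, one byte holding the two buttons as bit flags, and three unsigned 16-bit potentiometer readings, all little-endian. `ControlState` and `decode_packet` are hypothetical names.

```python
import struct
from dataclasses import dataclass

# Assumed layout: switch (uint8), button bit flags (uint8), three pots (uint16 each).
# "<" selects little-endian byte order with no padding between fields.
PACKET_FORMAT = "<BBHHH"
PACKET_SIZE = struct.calcsize(PACKET_FORMAT)  # 8 bytes under this layout


@dataclass(frozen=True)
class ControlState:
    paused: bool
    button_a: bool
    button_b: bool
    pots: tuple[int, int, int]


def decode_packet(payload: bytes) -> ControlState:
    """Decode one fixed-size packet into a ControlState."""
    if len(payload) != PACKET_SIZE:
        raise ValueError(f"expected {PACKET_SIZE} bytes, got {len(payload)}")
    switch, buttons, pot_1, pot_2, pot_3 = struct.unpack(PACKET_FORMAT, payload)
    return ControlState(
        paused=bool(switch),
        button_a=bool(buttons & 0b01),  # bit 0: first button (assumed)
        button_b=bool(buttons & 0b10),  # bit 1: second button (assumed)
        pots=(pot_1, pot_2, pot_3),
    )
```

A fixed-size packet keeps parsing simple, but a real serial stream would also need some way to find packet boundaries, such as a start byte or a checksum. That framing is omitted here.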

Future Improvements

Based on user testing and reflection, several areas could be enhanced in future iterations:

1. Introductory Context: Adding a brief introduction screen would help orient users.

2. More Intuitive Transitions: Some users were initially uncertain about how to navigate between stories.

3. Additional Narratives: Expanding the collection of stories would create a more comprehensive experience.