Project Documentation

Project Title:

Traffic Light Game

Project Idea:

The original idea was to use LED lights connected to an Arduino to simulate a traffic light. The lights would signal when a car (controlled by the user) should stop or move. However, I switched to p5.js for a digital simulation of the traffic light and car movement, with the Arduino controlling the car through a physical button.

Concept:

The project combines physical hardware (Arduino) and digital visualization (p5.js) to simulate real-world traffic rules:

- Traffic Lights: Drawn in p5.js and cycled on a timer: green for 15 seconds, yellow for 5 seconds, and red for 8 seconds.

- Car Movement: Controlled through an Arduino-connected button. Pressing the button sends “MOVE” signals to the p5.js interface, while releasing it sends “STOP.”

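- Strike System: A violation occurs when the car moves during a red light, fails to move during a green light, or stops during a yellow light. Violations are checked every 2 seconds, with a 1-second grace period after the light turns red or green, and three strikes end the game. A buzzer on the Arduino provides audible feedback for each violation.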
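The timed light cycle can be expressed as a small lookup table. The following is a minimal sketch, not the project's actual code: the durations are the ones used in the p5.js code later in this document, while `LIGHT_CYCLE` and `nextLightState` are hypothetical names introduced for illustration.

```javascript
// Durations in milliseconds, taken from updateTrafficLight() in the p5.js code.
const LIGHT_CYCLE = {
  green: { duration: 15000, next: "yellow" },
  yellow: { duration: 5000, next: "red" },
  red: { duration: 8000, next: "green" },
};

// Hypothetical helper: returns the light that should be showing once
// `elapsedMs` has passed in `state`. It advances at most one step per call.
function nextLightState(state, elapsedMs) {
  const phase = LIGHT_CYCLE[state];
  if (!phase) throw new Error(`Unknown light state: ${state}`);
  return elapsedMs > phase.duration ? phase.next : state;
}

console.log(nextLightState("green", 14000)); // green
console.log(nextLightState("green", 15001)); // yellow
console.log(nextLightState("red", 8001));    // green
```

Keeping the durations in one table makes it easier to tune the cycle without touching the transition logic.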

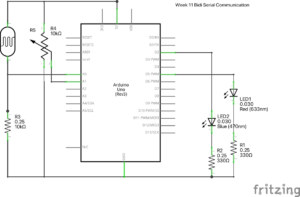

Step-by-Step Arduino Connection

Components Required:

- Arduino Uno

- Breadboard

- 1 Push Button (Switch)

- 1 10kΩ Resistor

- 1 Buzzer

- Jumper Wires (Male-to-Male)

Step-by-Step Connection:

- Connect the Switch:
  - Place the push button (switch) on the breadboard, bridging the middle gap.
  - Connect one leg of the switch to 5V on the Arduino.
  - Connect the other leg of the switch to Digital Pin 2 on the Arduino.

- Connect the Resistor:
  - Attach a 10kΩ resistor from the Pin 2 leg of the switch to the GND rail on the breadboard.
  - This acts as a pull-down resistor, so Pin 2 reads LOW when the button is not pressed and HIGH when it is pressed.
  - Note: the Arduino code in this document configures Pin 2 with INPUT_PULLUP and treats LOW as pressed. That mode expects the button wired between Pin 2 and GND with no external resistor, so the wiring and the pin mode should be made to match.

- Connect the Buzzer:
  - Place the buzzer on the breadboard.
  - Connect the positive leg of the buzzer to Digital Pin 8 on the Arduino.
  - Connect the negative leg of the buzzer to the GND rail on the breadboard.

- Power Connections:
  - Connect the 5V pin on the Arduino to the breadboard’s + rail.
  - Connect the GND pin on the Arduino to the breadboard’s – rail.

Arduino Connection

The diagram below shows the Arduino and Breadboard connections for the push button and buzzer:

https://drive.google.com/file/d/198DnUSxek9c-3ebID0bJrLljIN0VNa_O/view?usp=drive_link

Code Implementation

1. p5.js Code

The p5.js code handles the simulation of traffic lights, car movement, and strike detection. It also integrates with the Arduino using serial communication to receive “MOVE” and “STOP” signals and send feedback for violations (buzzer activation).

```javascript
/*
  Course: Introduction to interactive media
  Final Project
  Section: Mang-F2024
  Name: Bismark Buernortey Buer
  Title: Traffic Light Game
*/

// Declare global variables
let roadY = 0; // Vertical offset of the road markings
let gameState = "INSTRUCTIONS"; // Tracks the current state of the game
let carImage, backgroundImage, startSound, gameSound, gameOverSound; // Assets: images and sounds
let gameOverImage, restartImage, quitImage, gameOverBg, startButtonImage; // UI images
let countdownSound; // Countdown sound effect
let carX, carY; // Car position coordinates
let lightState = "green"; // Current state of the traffic light
let strikes = 0; // Counter for traffic violations
let lightTimer = 0; // Timer to track light changes
let isMoving = false; // Boolean flag for car movement (controlled by Arduino)
let violationTimer = 0; // Timer to check violations
let countdown = 3; // Countdown value before the game starts
let countdownStartTime = 0; // Timer start time for countdown
let countdownActive = false; // Flag for countdown state
let serial; // Serial communication object for Arduino
let gracePeriodActive = false; // Flag for grace period after light change
let graceStartTime = 0; // Timer start for grace period

// Preload images and sounds before setup
function preload() {
  carImage = loadImage("car.png");
  backgroundImage = loadImage("background.jpg");
  gameOverImage = loadImage("gameover.png");
  restartImage = loadImage("restart.png");
  quitImage = loadImage("quit.png");
  gameOverBg = loadImage("gameover_bg.jpg");
  startButtonImage = loadImage("start.png");
  startSound = loadSound("start_sound.mp3");
  gameSound = loadSound("gameplay_sound.mp3");
  gameOverSound = loadSound("gameover_sound.mp3");
  countdownSound = loadSound("countdown_go.mp3");
}

// Initial setup for the game
function setup() {
  // Note: fullscreen() without an argument only reports the current state.
  // Browsers allow entering fullscreen only from a user gesture,
  // e.g. fullscreen(true) inside a mouse handler.
  fullscreen();
  createCanvas(windowWidth, windowHeight); // Create a canvas with full window size
  carX = width / 2; // Set car's horizontal position
  carY = height - 200; // Set car's vertical position

  // Initialize serial communication with Arduino (p5.serialport library)
  serial = new p5.SerialPort();
  serial.open("/dev/tty.usbmodem1201"); // Serial port name; differs per machine
  serial.on("data", serialEvent); // Define event for incoming serial data

  startSound.loop(); // Play start sound on loop
}

// Resize canvas dynamically when the window size changes
function windowResized() {
  resizeCanvas(windowWidth, windowHeight);
}

// Main draw loop: controls the game state
function draw() {
  if (gameState === "INSTRUCTIONS") {
    showInstructions(); // Display instructions screen
  } else if (gameState === "COUNTDOWN") {
    showCountdown(); // Display countdown before game starts
  } else if (gameState === "PLAY") {
    playGame(); // Main game logic
  } else if (gameState === "END") {
    endGame(); // End game screen
  }
}

// Display instructions screen
function showInstructions() {
  background(backgroundImage); // Set background
  textAlign(CENTER);
  textSize(32);
  fill("black");
  text("🚦 Traffic Light Game 🚦", width / 2, height / 6); // Title
  textSize(24);
  text("Green: Move | Red: Stop | Yellow: Keep Moving", width / 2, height / 4);
  text("Press and hold the button to stop the car", width / 2, height / 3);
  text("React in time to avoid strikes!", width / 2, height / 2.75);
  image(startButtonImage, width / 2 - 100, height / 2, 200, 100); // Start button

  // Start the countdown when the start button is clicked
  if (mouseIsPressed && mouseX > width / 2 - 100 && mouseX < width / 2 + 100 && mouseY > height / 2 && mouseY < height / 2 + 100) {
    startSound.stop();
    countdownSound.play();
    countdownStartTime = millis(); // Start countdown timer
    countdownActive = true;
    gameState = "COUNTDOWN";
  }
}

// Show countdown before game starts
function showCountdown() {
  let currentTime = millis();
  let elapsed = Math.floor((currentTime - countdownStartTime) / 1000); // Whole seconds passed
  let flashColor = frameCount % 20 < 10 ? color(255, 0, 0) : color(255, 255, 0); // Flashing background effect
  background(flashColor);
  textAlign(CENTER);
  textSize(150);
  fill(255);

  if (elapsed <= 3) {
    countdown = 3 - elapsed;
    text(countdown, width / 2, height / 2); // Show countdown numbers (3, 2, 1, 0)
  } else {
    fill(0, 255, 0);
    text("GO!", width / 2, height / 2); // Show "GO!" when countdown ends
    if (countdownActive) {
      countdownActive = false; // Schedule the transition to PLAY only once
      setTimeout(() => {
        gameSound.loop(); // Start gameplay sound
        gameState = "PLAY";
        lightTimer = millis(); // Start light timer
        violationTimer = millis(); // Start violation timer
        startGracePeriod();
      }, 1000);
    }
  }
}

// Main gameplay logic
function playGame() {
  background("SkyBlue");
  let currentTime = millis();
  updateTrafficLight(currentTime); // Update traffic light state
  updateRoad(); // Draw road
  drawCar(carX, carY); // Draw car
  drawTrafficLight(); // Draw traffic light

  if (isMoving && !gameSound.isPlaying()) gameSound.loop(); // Loop game sound when moving
  else if (!isMoving && gameSound.isPlaying()) gameSound.stop(); // Stop sound if not moving

  // Check for violations every 2 seconds
  if (currentTime - violationTimer >= 2000) {
    checkViolations();
    violationTimer = currentTime;
  }

  fill("black");
  textSize(24);
  text(`Strikes: ${strikes}`, 50, 50); // Display strikes

  // End game after 3 strikes
  if (strikes >= 3) {
    gameSound.stop();
    gameOverSound.play();
    gameState = "END";
  }
}

// Display game over screen
function endGame() {
  background(gameOverBg);
  image(gameOverImage, width / 2 - 150, height / 4, 300, 150);
  image(restartImage, width / 2 - 220, height / 2, 200, 100); // Restart button
  image(quitImage, width / 2 + 20, height / 2, 200, 100); // Quit button
  textAlign(CENTER);
  textSize(24);
  fill("black");
  text("Choose an option:", width / 2, height / 2 - 50);

  // Restart or quit game based on mouse position
  if (mouseIsPressed) {
    if (mouseX > width / 2 - 220 && mouseX < width / 2 - 20 && mouseY > height / 2 && mouseY < height / 2 + 100) {
      restartGame();
    }
    if (mouseX > width / 2 + 20 && mouseX < width / 2 + 220 && mouseY > height / 2 && mouseY < height / 2 + 100) {
      returnToStartPage();
    }
  }
}

// Function to restart the game
function restartGame() {
  gameState = "COUNTDOWN"; // Set game state to countdown
  strikes = 0; // Reset strikes
  lightState = "green"; // Reset traffic light to green
  lightTimer = millis(); // Reset light timer
  violationTimer = millis(); // Reset violation timer
  isMoving = false; // Stop the car movement
  gameOverSound.stop(); // Stop the game over sound
  countdownSound.play(); // Play countdown sound
  countdownStartTime = millis(); // Start countdown timer
  countdownActive = true; // Activate countdown
}

// Function to return to the start page
function returnToStartPage() {
  gameState = "INSTRUCTIONS"; // Return to the instructions screen
  strikes = 0; // Reset strikes
  isMoving = false; // Stop car movement
  lightState = "green"; // Reset traffic light to green
  lightTimer = millis(); // Reset light timer
  violationTimer = millis(); // Reset violation timer
  gameOverSound.stop(); // Stop the game over sound
  startSound.loop(); // Replay the start sound
}

// Function to update the traffic light based on time
function updateTrafficLight(currentTime) {
  if (lightState === "green" && currentTime - lightTimer > 15000) {
    lightState = "yellow"; // Change to yellow after 15 seconds
    lightTimer = millis(); // Reset timer
  } else if (lightState === "yellow" && currentTime - lightTimer > 5000) {
    lightState = "red"; // Change to red after 5 seconds
    lightTimer = millis(); // Reset timer
    startGracePeriod(); // Start grace period for violations
  } else if (lightState === "red" && currentTime - lightTimer > 8000) {
    lightState = "green"; // Change back to green after 8 seconds
    lightTimer = millis(); // Reset timer
    startGracePeriod(); // Start grace period for green light
  }
}

// Function to check for traffic light violations
function checkViolations() {
  let currentTime = millis();
  if (gracePeriodActive && currentTime - graceStartTime < 1000) return; // Skip checks during the 1-second grace period

  // Add strikes for incorrect actions based on traffic light state
  if (lightState === "green" && !isMoving) addStrike("Didn't move during green!");
  else if (lightState === "red" && isMoving) addStrike("Moved during red!");
  else if (lightState === "yellow" && !isMoving) addStrike("Stopped during yellow!");
}

// Function to handle strikes and send feedback to Arduino
function addStrike(message) {
  strikes++; // Increment strikes count
  console.log(message); // Log the violation message
  serial.write("BUZZER\n"); // Send a buzzer signal to Arduino
}

// Function to draw the traffic light on the screen
function drawTrafficLight() {
  fill("black");
  rect(20, 20, 50, 150, 10); // Draw the traffic light box

  // Draw the red light
  fill(lightState === "red" ? "red" : "gray");
  ellipse(45, 50, 30, 30);

  // Draw the yellow light
  fill(lightState === "yellow" ? "yellow" : "gray");
  ellipse(45, 95, 30, 30);

  // Draw the green light
  fill(lightState === "green" ? "green" : "gray");
  ellipse(45, 140, 30, 30);
}

// Function to draw the car on the screen
function drawCar(x, y) {
  image(carImage, x - 50, y, 100, 150); // Horizontally centered on x, with its top edge at y
}

// Function to update the road movement
function updateRoad() {
  let centerY = height / 2; // Center of the screen vertically
  let centerX = width / 2; // Center of the screen horizontally
  let roadUpOffset = 50; // Half-width of the road at the top
  let roadDownOffset = 150; // Half-width of the road at the bottom
  let markingLength = 40; // Length of road markings

  roadY += isMoving ? 5 : 0; // Move road markings downward if car is moving
  if (roadY > markingLength * 2) roadY = 0; // Wrap after one dash-plus-gap period so the motion loops

  // Draw the grass background
  noStroke();
  fill("lime");
  rect(0, centerY, width, centerY);

  // Draw the road as a trapezoid
  fill("gray");
  quad(centerX - roadUpOffset, centerY, centerX + roadUpOffset, centerY,
       centerX + roadDownOffset, height, centerX - roadDownOffset, height);

  // Draw dashed road markings
  stroke(255);
  strokeWeight(5);
  for (let i = centerY; i < height; i += markingLength * 2) {
    let y = i + roadY; // Adjust position with road movement
    line(centerX, y, centerX, y + markingLength);
  }
}

// Event function for receiving data from Arduino
function serialEvent() {
  let data = serial.readStringUntil("\n"); // Read serial data line by line
  if (!data) return; // Nothing to do until a complete line has arrived
  let command = data.trim(); // Strip the trailing "\r" left by Serial.println
  if (command === "MOVE") isMoving = true; // Set car to moving if Arduino sends "MOVE"
  if (command === "STOP") isMoving = false; // Stop car if Arduino sends "STOP"
}

// Function to activate grace period after light changes
function startGracePeriod() {
  gracePeriodActive = true; // Activate grace period
  graceStartTime = millis(); // Set grace period start time
}
```
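For testing outside the browser, the strike rule can be pulled out into a pure function that takes the time as an argument instead of calling `millis()`. This is only a sketch under that assumption: `findViolation` and `GRACE_MS` are hypothetical names, and the messages and the 1-second grace window are copied from `checkViolations()` above.

```javascript
const GRACE_MS = 1000; // Grace window after a light change, as in checkViolations()

// Hypothetical pure version of the rule: returns a violation message,
// or null when the driver is behaving correctly or the grace period is active.
function findViolation(lightState, isMoving, now, graceStartTime) {
  if (now - graceStartTime < GRACE_MS) return null; // Still inside the grace period
  if (lightState === "green" && !isMoving) return "Didn't move during green!";
  if (lightState === "red" && isMoving) return "Moved during red!";
  if (lightState === "yellow" && !isMoving) return "Stopped during yellow!";
  return null;
}

console.log(findViolation("red", true, 12000, 10000));    // Moved during red!
console.log(findViolation("green", false, 10500, 10000)); // null
```

Separating the decision from the side effects (incrementing strikes, writing to the serial port) would let the rule be checked with plain assertions.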

2. Arduino Code

The Arduino code reads input from the physical button and sends “MOVE” or “STOP” signals to p5.js via serial communication. Additionally, it listens for the “BUZZER” command from p5.js and activates the buzzer for violations.
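Because the messages are newline-terminated text, the receiving side has to cope with a line arriving in several pieces and with the carriage return that `Serial.println` adds before the newline. The sketch below illustrates one way to do that in plain JavaScript; `createLineReader` and `applyCommand` are hypothetical helpers and are not part of the project or of any serial library.

```javascript
// Hypothetical line buffer: collects raw serial chunks and returns complete lines.
function createLineReader() {
  let buffer = "";
  return function feed(chunk) {
    buffer += chunk;
    const parts = buffer.split("\n");
    buffer = parts.pop(); // Keep the unfinished tail for the next chunk
    return parts.map((line) => line.trim()).filter((line) => line.length > 0);
  };
}

// Applies one command to the movement flag; unknown lines leave it unchanged.
function applyCommand(isMoving, command) {
  if (command === "MOVE") return true;
  if (command === "STOP") return false;
  return isMoving;
}

// Simulated input: messages split across chunk boundaries.
const feed = createLineReader();
let isMoving = false;
for (const chunk of ["MO", "VE\r\nST", "OP\r\nMOVE\r\n"]) {
  for (const command of feed(chunk)) {
    isMoving = applyCommand(isMoving, command);
    console.log(command, "->", isMoving);
  }
}
```

Ignoring unknown lines keeps the game state stable if stray bytes arrive while the port is opening.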

```cpp
const int buttonPin = 2; // Pin for the switch
const int buzzerPin = 8; // Pin for the buzzer

int buttonState = HIGH; // Debounced state of the switch (HIGH = released with INPUT_PULLUP)
int lastState = HIGH;   // Last raw reading of the switch
unsigned long lastDebounceTime = 0;     // For debounce timing
const unsigned long debounceDelay = 50; // Debounce delay in milliseconds

void setup() {
  pinMode(buttonPin, INPUT_PULLUP); // Use internal pull-up resistor for the switch
  pinMode(buzzerPin, OUTPUT);       // Set buzzer pin as OUTPUT
  Serial.begin(9600);               // Start serial communication at 9600 baud
}

void loop() {
  int currentButtonState = digitalRead(buttonPin);

  // Debounce the button input: restart the timer whenever the raw reading changes
  if (currentButtonState != lastState) {
    lastDebounceTime = millis();
  }

  // Accept the reading only after it has been stable for debounceDelay
  if ((millis() - lastDebounceTime) > debounceDelay) {
    if (currentButtonState != buttonState) {
      buttonState = currentButtonState;

      // Send "MOVE" or "STOP" signal based on button state
      if (buttonState == LOW) {
        Serial.println("MOVE"); // Button pressed
      } else {
        Serial.println("STOP"); // Button released
      }
    }
  }

  // Check for commands coming from p5.js
  if (Serial.available() > 0) {
    String command = Serial.readStringUntil('\n'); // Read command until newline
    command.trim(); // Remove unnecessary whitespace
    if (command == "BUZZER") {
      activateBuzzer(); // Activate buzzer when "BUZZER" command is received
    }
  }

  lastState = currentButtonState; // Update last state
}

// Function to activate the buzzer for 0.5 seconds
void activateBuzzer() {
  digitalWrite(buzzerPin, HIGH);
  delay(500); // Keep buzzer ON for 500 milliseconds (blocks the loop while it sounds)
  digitalWrite(buzzerPin, LOW);
}
```
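The debounce logic in `loop()` can be desk-checked without hardware by replaying a list of timed readings. The following is a hypothetical JavaScript port written for illustration only: the `debounce` function and the sample data are assumptions, while the 50 ms delay and the LOW-means-pressed convention come from the Arduino code above.

```javascript
const DEBOUNCE_MS = 50; // Same delay as debounceDelay in the Arduino code

// Hypothetical port of the debounce logic in loop().
// `samples` is a list of [timeMs, reading] pairs, where reading is "LOW" or "HIGH".
function debounce(samples) {
  let buttonState = "HIGH"; // Last accepted (stable) state
  let lastReading = "HIGH"; // Previous raw reading
  let lastChangeTime = 0;   // Time of the most recent raw change
  const messages = [];

  for (const [time, reading] of samples) {
    if (reading !== lastReading) lastChangeTime = time; // Restart the timer on any flicker
    if (time - lastChangeTime > DEBOUNCE_MS && reading !== buttonState) {
      buttonState = reading;
      messages.push(buttonState === "LOW" ? "MOVE" : "STOP");
    }
    lastReading = reading;
  }
  return messages;
}

// A press that bounces for a few milliseconds, followed by a clean release.
const samples = [
  [0, "HIGH"], [10, "LOW"], [12, "HIGH"], [15, "LOW"],
  [40, "LOW"], [70, "LOW"], [200, "HIGH"], [260, "HIGH"],
];
console.log(debounce(samples)); // [ 'MOVE', 'STOP' ]
```

The bounces at 10 to 15 ms produce no messages because each raw change restarts the timer, so only readings that stay stable for more than 50 ms are reported.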

User Testing

Objective:

To test the game’s functionality, usability, and interaction between the Arduino hardware and p5.js interface.

Process:

I asked my roommate to play the game:

- He interacted with the physical button to control the car and watched how the car responded to the traffic lights on the screen.

- He initially found the yellow light behavior confusing but quickly understood the rules after a second try.

Feedback and Observations:

- Challenge: The yellow light instructions were hard to understand.

- Solution: Clarified the on-screen instructions to state that yellow means keep moving.

- Positive Feedback: He found the buzzer feedback helpful for identifying violations.

Video:

Final Testing

Objective:

To ensure smooth operation of the system after incorporating feedback from user testing.

Testing Checklist:

- Traffic Light Changes: Tested timed transitions between green, yellow, and red lights.

- Car Movement: Verified that the car responded immediately to MOVE/STOP signals from the Arduino.

- Strike System: Checked that violations were detected correctly and that strikes were incremented.

- Buzzer Activation: Confirmed that the buzzer sounded for 0.5 seconds on each violation.

- Game Over Screen: Verified that the game ended and displayed the correct options after 3 strikes.

Results:

- All features worked as expected, without delays or glitches.

- User feedback was implemented successfully, resulting in an improved experience.

Videos:

Conclusion:

The project successfully integrates p5.js for visual simulation with Arduino hardware for user interaction. Combining digital visuals with physical inputs created an interactive and engaging experience that simulates real-world traffic rules.

This is the whole game: