Concept

For my midterm project, I wanted to make something unique: a piece that looks like art to a casual viewer but, on closer inspection, turns out to be a form of data. For this, I decided to make a circular spectrogram, i.e., a visualization of sound in circular patterns. That’s when I came across an artwork on Vimeo that visualized sound in a unique way.

Using FFT analysis and the concept of rotating layers, I decided to recreate this artwork in my own style and take it further, which led to SpectroLoom. Since most people sing along or hum to their favourite tunes, I also decided to include them in the loop.

At its core, SpectroLoom offers two distinct modes: “Eye of the Sound” and “Black Hole and the Star.” The former focuses solely on the auditory journey, presenting a circular spectrogram that spins and morphs in harmony with the music. The latter introduces a dual-layered experience, allowing users to sing along via microphone input, effectively merging their voice with the pre-loaded tracks, thus creating a sense of closeness with the song.

The Code/Science

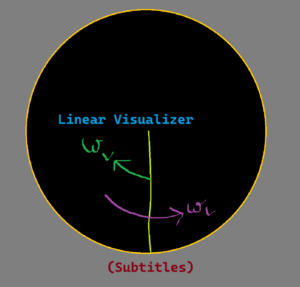

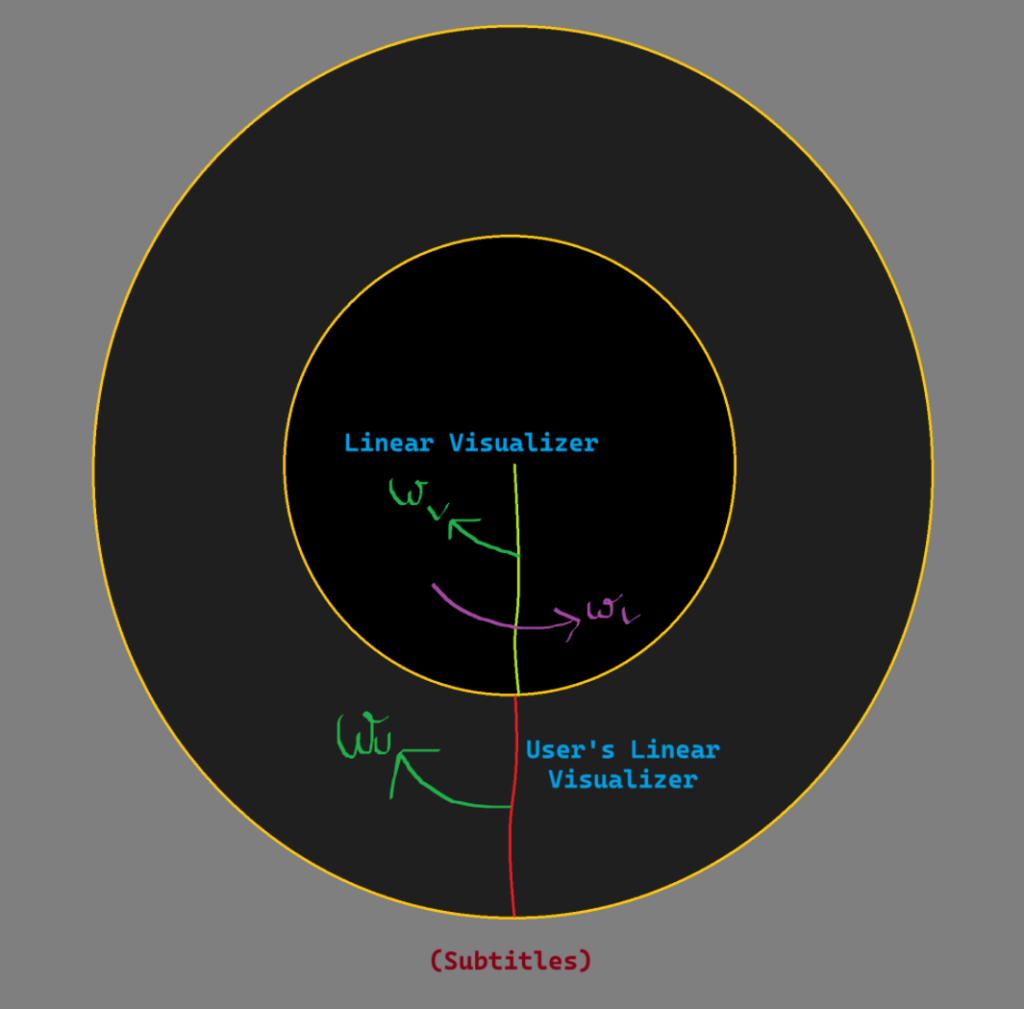

Apart from FFT analysis, the project relied, somewhat surprisingly, on the concept of relative angular velocity to make the sketch behave the way I wanted. FFT analysis gives me the amplitude of every frequency bin at any given moment, and I use these values to draw a linear visualizer on a layer. The background canvas rotates anti-clockwise at an angular velocity of one revolution per song duration, while the visualizing layer rotates in the opposite direction (clockwise) at the same speed. The two rotations cancel (the relative angular velocity is zero), so the linear visualizer appears stationary. The user layer, which holds the user’s waveform, works the same way but uses the mic input as the source for its FFT analysis (it is only present in the second mode).
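The cancellation described above can be checked with a little arithmetic. The following is a minimal sketch in plain JavaScript, not part of the actual sketch code: the function names are hypothetical, and it assumes (as in the drawing function shown later) that each new mark is drawn on the layer at `-currentAngle` while the layer itself is displayed rotated by `+currentAngle`, with all angles in degrees.

```javascript
// Angle (in degrees, normalised to [0, 360)) at which a mark appears on screen:
// the angle it was drawn at on the layer plus the layer's current display rotation.
function screenAngle(drawAngleOnLayer, layerRotation) {
  return (((drawAngleOnLayer + layerRotation) % 360) + 360) % 360;
}

// The newest mark is drawn at -currentAngle on a layer shown at +currentAngle,
// so the two rotations cancel and the mark always lands at the same place.
function newestMarkAngle(currentAngle) {
  return screenAngle(-currentAngle, currentAngle);
}

// A mark drawn earlier (when the angle was drawnAt) keeps its position on the
// layer, so it is carried around as the layer keeps rotating.
function olderMarkAngle(drawnAt, currentAngle) {
  return screenAngle(-drawnAt, currentAngle);
}
```

Under these assumptions, `newestMarkAngle` returns 0 for any angle, which is why the live visualizer looks stationary, while older marks drift away from it and build up the circular imprint.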

Once the song finishes, the user can left-click again to restart the same track. This works by resetting the layer’s rotation angle to zero after a complete revolution and clearing both the song visualization layer and the user input layer.

    // Visualizer screen drawing function for "Black Hole and the Star" mode
    function drawBlackHoleAndStar() {
      if (song.isPlaying()) {
        background(0);

        // Get the frequency spectrum for the song, reverse the bins and
        // drop the first 40 (the highest frequencies after reversing)
        let spectrumA = fft.analyze();
        let spectrumB = spectrumA.slice().reverse();
        spectrumB.splice(0, 40);

        // Gradually blend towards a random target colour
        blendAmount += colorBlendSpeed;
        if (blendAmount >= 1) {
          currentColor = targetColor;
          targetColor = color(random(255), random(255), random(255));
          blendAmount = 0;
        }
        let blendedColor = lerpColor(currentColor, targetColor, blendAmount);

        // Draw song visualizer
        push();
        translate(windowWidth / 2, windowHeight / 2);
        noFill();
        stroke(blendedColor);
        beginShape();
        for (let i = 0; i < spectrumB.length; i++) {
          let amp = spectrumB[i];
          let x = map(amp, 0, 256, -2, 2);
          let y = map(i, 0, spectrumB.length, 30, 215);
          vertex(x, y);
        }
        endShape();
        pop();

        // Imprint the song spectrum on its layer, rotated by -currentAngle
        layer.push();
        layer.translate(windowWidth / 2, windowHeight / 2);
        layer.rotate(radians(-currentAngle));
        layer.noFill();
        layer.colorMode(RGB);
        for (let i = 0; i < spectrumB.length; i++) {
          let amp = spectrumB[i];
          layer.strokeWeight(0.02 * amp);
          layer.stroke(amp, amp, 255 - amp, amp / 40);
          layer.line(0, i, 0, i); // Zero-length line: a dot at radius i
        }
        layer.pop();

        // Imprint the microphone spectrum on the user layer in the same way
        let userSpectrum = micFFT.analyze();
        userLayer.push();
        userLayer.translate(windowWidth / 2, windowHeight / 2);
        userLayer.rotate(radians(-currentAngle));
        userLayer.noFill();
        userLayer.colorMode(RGB);
        for (let i = 0; i < userSpectrum.length; i++) {
          let amp = userSpectrum[i];
          userLayer.strokeWeight(0.02 * amp);
          userLayer.stroke(255 - amp, 100, 138, amp / 40);
          userLayer.line(0, i + 250, 0, i + 250); // Place the user imprint after the song imprint
        }
        userLayer.pop();

        // Show both layers on the canvas, rotated by +currentAngle
        push();
        translate(windowWidth / 2, windowHeight / 2);
        rotate(radians(currentAngle));
        imageMode(CENTER);
        image(layer, 0, 0);
        image(userLayer, 0, 0);
        pop();

        // Advance the rotation; after a full revolution, reset and clear the layers
        currentAngle += angularVelocity * deltaTime / 1000;
        if (currentAngle >= 360) {
          currentAngle = 0;
          userLayer.clear();
          layer.clear();
        }

        let level = amplitude.getLevel();
        createSparkles(level);
        drawSparkles();
      }
    }

The user can also go back and start over. This is handled by the Back button, which returns the user to the instruction screen.

    function setup() {
      ...

      // Create back button
      backButton = createButton('Back');
      backButton.position(10, 10);
      backButton.mousePressed(goBackToInstruction);
      backButton.hide(); // Hide the button initially

      ...
    }

    // Function to handle returning to the instruction screen
    function goBackToInstruction() {
      // Stop the song and reset it to the beginning
      song.stop();

      // Clear all layers
      layer.clear();
      userLayer.clear();

      // Reset mode to instruction
      mode = "instruction";
      countdown = 4; // Reset countdown
      countdownStarted = false;

      // Show Go button again
      goButton.show();
      blackHoleButton.show();
      songSelect.show();
    }

The user also has the option to save the imprint of their song via the “Save Canvas” button.

    // Save canvas action
    function saveCanvasAction() {
      if (mode === "visualizer") {
        saveCanvas('rotating_visualizer', 'png');
      }
      if (mode === "blackhole") {
        saveCanvas('user_rotating_visualizer', 'png');
      }
    }

Sketch

Full Screen Link: https://editor.p5js.org/adit_chopra_18/full/v5S-7c7sj

Problems Faced

Synchronizing Audio with Visualization:

- Challenge: Ensuring that the visual elements accurately and responsively mirror the nuances of the audio was paramount. Variations in song durations and frequencies posed synchronization issues, especially when dynamically loading different tracks.

- Solution: Implementing a flexible angular velocity calculation based on the song’s duration helped maintain synchronization. However, achieving perfect alignment across all tracks remains an area for refinement, potentially through more sophisticated time-frequency analysis techniques.
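The duration-based angular velocity mentioned above could look roughly like the following. This is a minimal sketch rather than the project's actual code: the helper names are hypothetical, and it assumes the duration is available in seconds (for example from `song.duration()` in p5.sound) and that the per-frame update matches the `angularVelocity * deltaTime / 1000` step used in the drawing function.

```javascript
// Degrees per second needed to complete exactly one revolution over the song.
// Returns 0 if the duration is not yet known, so the layer simply does not rotate.
function angularVelocityFor(durationSeconds) {
  if (!(durationSeconds > 0)) {
    return 0;
  }
  return 360 / durationSeconds;
}

// Advance the angle by one frame; deltaTimeMs is the frame time in milliseconds.
function advanceAngle(currentAngle, angularVelocity, deltaTimeMs) {
  return currentAngle + (angularVelocity * deltaTimeMs) / 1000;
}
```

Because the step is scaled by the frame time rather than counted per frame, a slow frame advances the angle further, so the rotation should stay roughly in step with playback even when the frame rate fluctuates.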

Handling Multiple Layers and Performance:

- Challenge: Managing multiple graphics layers (layer, userLayer, tempLayer, etc.) while maintaining optimal performance was intricate. Rendering complex visualizations alongside real-time audio analysis strained computational resources, leading to potential lag or frame drops.

- Solution: Optimizing the rendering pipeline by minimizing unnecessary redraws and leveraging efficient data structures can enhance performance. Additionally, exploring GPU acceleration or WebGL-based rendering might offer smoother visualizations.
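One possible way to cut down on per-frame drawing work is to average neighbouring FFT bins before drawing, so that each frame issues fewer `line()` calls. The helper below is a hypothetical sketch in plain JavaScript and is not something the current sketch does. It assumes the spectrum is an array of amplitudes such as the one returned by `fft.analyze()`.

```javascript
// Average each run of groupSize neighbouring bins into a single value.
// The last group may be shorter if the length is not a multiple of groupSize.
function averageBins(spectrum, groupSize) {
  const out = [];
  for (let start = 0; start < spectrum.length; start += groupSize) {
    const end = Math.min(start + groupSize, spectrum.length);
    let sum = 0;
    for (let i = start; i < end; i++) {
      sum += spectrum[i];
    }
    out.push(sum / (end - start));
  }
  return out;
}
```

If this were used in the drawing loops, the radius of each dot would need to be multiplied by `groupSize` to keep the imprint the same size, and the stroke weight would probably need adjusting to avoid gaps between rings.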

Responsive Resizing with Layer Preservation:

- Challenge: Preserving the state and content of various layers during window resizing was complex. Ensuring that visual elements scaled proportionally without distortion required meticulous calculations and adjustments.

- Solution: The current approach of copying and scaling layers using temporary buffers serves as a workaround. However, implementing vector-based graphics or adaptive scaling algorithms could provide more seamless and distortion-free resizing.
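The scaling step of the copy-and-scale workaround can be sketched as follows. The helper name and return shape are hypothetical, and the sketch assumes the goal is to scale the old layer uniformly (so circles stay circular) and keep it centred in the new buffer.

```javascript
// Compute where and how large to draw an old layer (oldW x oldH) inside a new
// buffer (newW x newH) so that it is scaled uniformly and stays centred.
function fitLayer(oldW, oldH, newW, newH) {
  const scale = Math.min(newW / oldW, newH / oldH); // same factor on both axes
  const w = oldW * scale;
  const h = oldH * scale;
  return { scale, w, h, x: (newW - w) / 2, y: (newH - h) / 2 };
}
```

In a p5.js `windowResized()` handler, the result could be used by creating a new buffer with `createGraphics(windowWidth, windowHeight)`, drawing the old layer into it with `newLayer.image(oldLayer, x, y, w, h)`, and then swapping the new buffer in. Since the imprint is still a bitmap, enlarging the window will soften it, which is the distortion that vector-based rendering would avoid.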