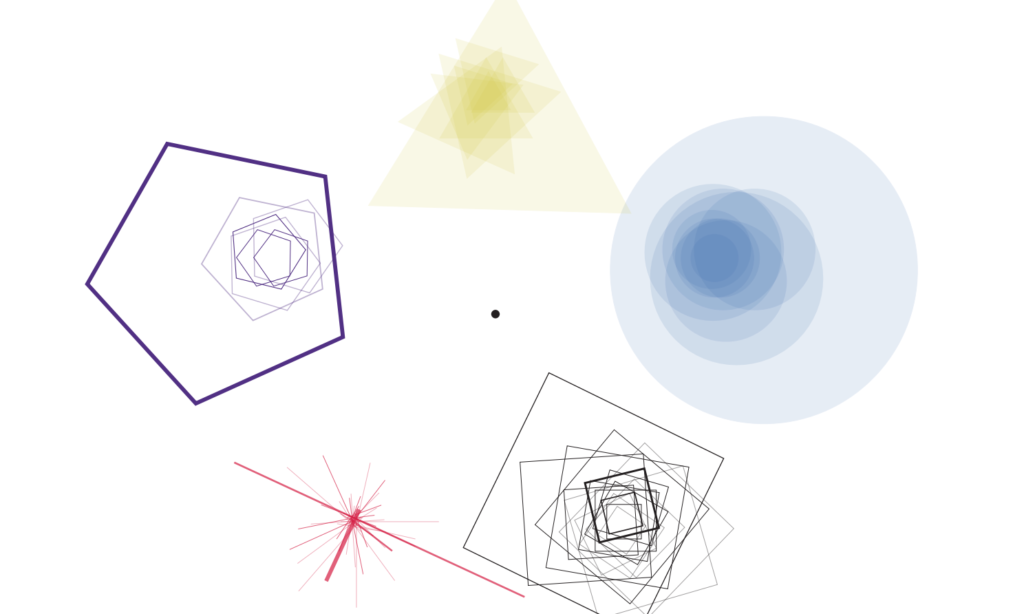

Nisala’s and my final project is slowly coming to life. We started by finalizing the inputs we want to collect: an emotion (chosen with one of 5 buttons), a stress level (measured with a microphone) and an energy level (set with a potentiometer). Then we roughly sketched how we want the data to be visualized: the screen is divided into 5 segments with 5 different shapes (one per emotion), and the microphone and potentiometer inputs adjust the size, speed, and radius of each individual shape.

When we started programming our first class, we struggled a bit with the logic of keeping each shape open to adjustments only until the next shape is drawn. We solved this by adding a “submit button” at the end. This also makes it easier for the user to play around with the different inputs, see how they are reflected on the screen, and submit the values only once all the inputs are finalized.
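The submit logic described above can be sketched roughly as follows. This is a minimal, language-agnostic illustration written in Python, not our actual project code: the `Shape` and `Canvas` classes, the emotion labels, and the choice of which field each sensor drives are all assumptions made for the example.

```python
from dataclasses import dataclass, field

# Hypothetical emotion labels; the project only fixes that there are 5.
EMOTIONS = ("joy", "sadness", "anger", "fear", "calm")


@dataclass
class Shape:
    emotion: str = EMOTIONS[0]
    size: float = 0.0   # assumed to follow the microphone reading
    speed: float = 0.0  # assumed to follow the potentiometer reading


@dataclass
class Canvas:
    draft: Shape = field(default_factory=Shape)    # the shape still being adjusted
    submitted: list = field(default_factory=list)  # shapes that are already frozen

    def adjust(self, **changes):
        """Apply input changes to the draft only; submitted shapes are never touched."""
        for name, value in changes.items():
            if not hasattr(self.draft, name):
                raise AttributeError(f"Shape has no field {name!r}")
            setattr(self.draft, name, value)

    def submit(self):
        """Freeze the draft and start a fresh one for the next response."""
        self.submitted.append(self.draft)
        self.draft = Shape()


canvas = Canvas()
canvas.adjust(emotion="anger", size=0.8)
canvas.adjust(size=0.5)   # the user changes their mind before submitting
canvas.submit()
canvas.adjust(size=0.1)   # only affects the new draft
```

Because `adjust` only ever writes to `draft`, anything changed after `submit` cannot leak into a shape that is already on the screen.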

A similar problem occurred when we were recording the volume of the microphone input. The interaction was not clear: the volume kept changing even after the scream, so unless you were screaming continuously while pressing the submit button, the size of the shape would not change. We therefore decided to add another button, which mimics the way we record voice messages on our phones. The user simply holds the button down to record the scream. Even after that, they can record again and adjust the other inputs before submitting the response.
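The hold-to-record behaviour can be sketched like this. Again, this is only an illustration in Python under stated assumptions: the `ScreamRecorder` class is hypothetical, volumes are assumed to be normalized numbers, and keeping the loudest reading (rather than, say, an average) is our guess at a sensible choice, not a settled decision.

```python
class ScreamRecorder:
    """Tracks the loudest volume seen while the record button is held down."""

    def __init__(self):
        self.recording = False
        self.peak = 0.0  # last recorded value; stays frozen after release

    def update(self, button_down, volume):
        """Call once per frame with the button state and the current mic volume."""
        if button_down and not self.recording:
            self.peak = 0.0  # a new press discards the previous recording
        self.recording = button_down
        if self.recording:
            self.peak = max(self.peak, volume)
        return self.peak


recorder = ScreamRecorder()
recorder.update(False, 0.9)            # button not held: the noise is ignored
for level in (0.3, 0.7, 0.4):
    recorder.update(True, level)       # holding the button: track the scream
held_value = recorder.update(False, 0.1)    # released: the value stays frozen
second_take = recorder.update(True, 0.2)    # pressing again starts over
```

The value returned after release is what would be used for the shape’s size, so the user no longer has to scream and press submit at the same time.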

The next challenge is definitely the physical part of the project, which shapes the user experience. So far we have focused heavily on the concept and the code, so we now need to finalize how to arrange all of the components to achieve a smooth and straightforward interaction. We also need to figure out a few details about the aesthetics of the shapes, as well as how to distinguish the shape that is currently being adjusted from the ones that are already drawn.