For the midterm, I plan to make a game called Pyro Dancer. It is a single-player endless runner in which players assume the role of a knight trying to save the princess. The game is inspired by notable earlier titles such as Castlevania and Metroid.

Concept

Pyro Dancer uses pixel art as its main artistic choice. I believe that this is the element that captures the experience and feeling of playing similar titles. Paired with 8-bit sound and theme by Haynes, Pyro Dancer tries to mimic the retro feel of older games.

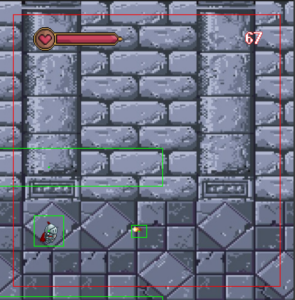

Knight Sprite

Sketches, Ideas, Drawings!

As the name suggests, Pyro Dancer will feature a knight running through a supervillain's castle (unknown for now) to save the princess. On the way, however, he is greeted by dozens of fireballs.

Gameplay

Players will have to dodge the fireballs by going up or down. They also have the choice to speed up their running by pressing the D key. As the score increases, so does the difficulty.
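A rough sketch of how the speed-up and the rising difficulty could be expressed in plain JavaScript. The function names (`fireballSpeed`, `runSpeed`) and every number here are placeholder assumptions for illustration, not values from the actual game.

```javascript
// Hypothetical tuning values, not taken from the actual game.
const BASE_FIREBALL_SPEED = 4;  // pixels per frame at score 0
const SPEED_STEP = 0.5;         // extra speed gained per 100 points
const MAX_FIREBALL_SPEED = 12;  // cap so the game stays playable

const NORMAL_RUN_SPEED = 3;     // knight speed without the D key
const BOOSTED_RUN_SPEED = 6;    // knight speed while D is held

// Fireballs get faster in steps as the score grows, up to a cap.
function fireballSpeed(score) {
  const steps = Math.floor(score / 100);
  return Math.min(BASE_FIREBALL_SPEED + steps * SPEED_STEP, MAX_FIREBALL_SPEED);
}

// The knight runs faster while the D key is held down.
function runSpeed(isDKeyDown) {
  return isDKeyDown ? BOOSTED_RUN_SPEED : NORMAL_RUN_SPEED;
}
```

In a p5.js sketch, `runSpeed` could be fed from the keyboard state each frame, and `fireballSpeed(score)` could set the velocity of each newly spawned fireball.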

Above a certain score, players will also face stationary monsters that kill them instantly on contact. This should balance the difficulty and vary the gameplay, as dodging only fireballs would be boring!
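The monster rule can be sketched as a score check plus a simple box overlap test. The threshold of 300 is a made-up placeholder (the exact score is not decided here), and the helper names are hypothetical. A library with a built-in physics engine would normally handle collisions itself, so the hand-written test below is only meant to show the idea.

```javascript
// Hypothetical threshold; the real value is not fixed yet.
const MONSTER_SCORE_THRESHOLD = 300;

// Monsters only start appearing once the score is high enough.
function monstersEnabled(score) {
  return score >= MONSTER_SCORE_THRESHOLD;
}

// Axis-aligned bounding box overlap test.
// Each box is { x, y, w, h } with (x, y) as its top-left corner.
function overlaps(a, b) {
  return (
    a.x < b.x + b.w &&
    a.x + a.w > b.x &&
    a.y < b.y + b.h &&
    a.y + a.h > b.y
  );
}

// Touching any monster kills the knight instantly.
function knightIsKilled(knight, monsters) {
  return monsters.some((monster) => overlaps(knight, monster));
}
```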

To win the game, players must reach a score of 1000 and proceed to the boss battle. (It is still in development, and I am unsure whether I will have the time to code it!)
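One possible way to handle the switch to the boss battle is a small state function. The score of 1000 comes from the plan above; the state names (`"running"`, `"bossBattle"`, `"gameOver"`) and the `nextState` helper are assumptions made up for this sketch.

```javascript
const WIN_SCORE = 1000; // score needed to reach the boss battle

// Decide which screen the game should be in on the next frame.
// The state names are hypothetical placeholders.
function nextState(state, score, knightAlive) {
  if (!knightAlive) {
    return "gameOver";
  }
  if (state === "running" && score >= WIN_SCORE) {
    return "bossBattle";
  }
  return state;
}
```

Calling `nextState` once per frame keeps the win condition in one place, so the boss battle can be added later without touching the running logic.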

Development Process

While trying to program the game, I ran into a dilemma.

I could program things from scratch, but that would take more time, and the chances of running into bugs would be higher.

Or, I could use some external libraries to help me develop the game, so that I could devote my time and energy to creating a better game instead.

I used an external JavaScript library called p5 Play to help me develop the game. The library introduces a sprite class that allows me to easily manage the characters, obstacles, and even the game environment with a built-in physics engine.

Asset-wise, I have compiled a lot of the resources in this document. Please refer to it for the assets that I use.

Programming-wise, I have laid down the basics and finished testing the library itself.

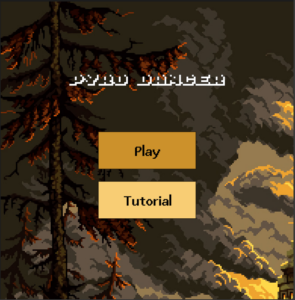

Main Menu Screen (Still in Development)

Hence, I only need to clean up the code, combine the separate sketches, and add some sound effects so that everything is neatly packaged as one game.

Concerns & Optimisms

Because of how busy I am and how tight the deadlines are, I am concerned that I might not be able to playtest the game extensively. The final product might have a lot of bugs or unexpected exploits (which could be a feature/mechanic too!) that go unnoticed. However, I am optimistic that I will be able to deliver an enjoyable game in the end.

For some reason, I could not import the p5 sketches into this WordPress post without it spiraling out of control. I am posting my reference links below instead!

References

p5.js Web Editor | Pyro Dancer – Main Menu Cleaned (p5js.org)

p5.js Web Editor | Pyro Dancer – Game Screen (p5js.org)

p5.js Web Editor | Pyro Dancer – Knight Animation copy (p5js.org)