Group Members: Maliha Nahiyan Srotosshini & Shamsa Alremeithi

Exercise 1

Task: make something that uses only one sensor on Arduino and makes an ellipse (or other shape) in p5 move on the horizontal axis, in the middle of the screen, and nothing on Arduino is controlled by p5.

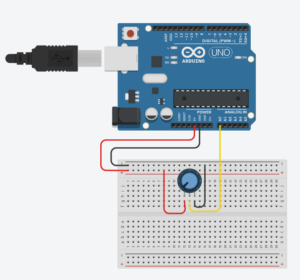

For this exercise, we connected a potentiometer to the Arduino: its middle pin goes to analog pin A1, and the other two pins go to 5V and GND. A simple Arduino sketch reads the analog value from the potentiometer, maps it to a range of 0 to 400 (the width of the p5.js canvas), and sends it to the computer over the serial port, one value per line. In p5.js, using the p5.webserial library, a circle moves left and right across the middle of the screen according to the potentiometer's position. We also included “Connect” and “Disconnect” buttons so the serial connection can be opened and closed from the browser.
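Arduino's `map()` uses integer (`long`) arithmetic, so fractional results are truncated rather than rounded. The JavaScript sketch below re-creates the formula used by the AVR Arduino core to show which x positions p5.js can actually receive. `arduinoMap` is a hypothetical name, and `Math.trunc` stands in for C integer division, which truncates toward zero.

```javascript
// Re-creation of the integer formula behind Arduino's map() (AVR core):
// (x - inMin) * (outMax - outMin) / (inMax - inMin) + outMin
// Math.trunc() mimics C integer division, which truncates toward zero.
function arduinoMap(x, inMin, inMax, outMin, outMax) {
  return Math.trunc(((x - inMin) * (outMax - outMin)) / (inMax - inMin)) + outMin;
}

// Potentiometer readings (0-1023) mapped to canvas x positions (0-400)
for (const reading of [0, 256, 512, 767, 1023]) {
  console.log(reading, "->", arduinoMap(reading, 0, 1023, 0, 400));
}
// 0 -> 0, 256 -> 100, 512 -> 200, 767 -> 299, 1023 -> 400
```

Only a full-scale reading of 1023 reaches 400. A reading of 1022 already maps to 399.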

Arduino Code:

```cpp
void setup() {
  Serial.begin(9600); // Initialize serial communication at 9600 baud
}

void loop() {
  // Read the analog value from pin A1 (range: 0 to 1023)
  int potentiometer = analogRead(A1);

  // Map the potentiometer value (0-1023) to a new range (0-400)
  int mappedPotValue = map(potentiometer, 0, 1023, 0, 400);

  // Send the mapped value to p5.js via serial, one value per line
  Serial.println(mappedPotValue);

  delay(100); // Wait 100 milliseconds before the next reading
}
```

p5.js Code:

```javascript
let port;
let connectBtn;
let disconnectBtn;
let baudrate = 9600;
let isConnected = false;

function setup() {
  createCanvas(400, 400);
  background(220);

  // Create a new Web Serial port instance using p5.webserial
  port = createSerial();

  // If a port was previously used, auto-connect to it
  let usedPorts = usedSerialPorts();
  if (usedPorts.length > 0) {
    port.open(usedPorts[0], baudrate);
    isConnected = true;
  }

  // Create the Connect button and open the port when clicked
  connectBtn = createButton("Connect to Serial");
  connectBtn.position(10, 10);
  connectBtn.mousePressed(() => {
    port.open(baudrate); // Opens a serial connection using the chosen baud rate
    isConnected = true;
  });

  // Create the Disconnect button to close the serial port
  disconnectBtn = createButton("Disconnect");
  disconnectBtn.position(150, 10);
  disconnectBtn.mousePressed(() => {
    port.close(); // Closes the serial connection
    isConnected = false;

    // Clear the screen and show a "Disconnected" message
    background(255);
    textAlign(CENTER, CENTER);
    textSize(18);
    fill(100);
    text("Disconnected.", width / 2, height / 2);
  });
}

function draw() {
  if (isConnected) {
    // Read one line, up to the newline character (empty if no full line has arrived yet)
    let str = port.readUntil("\n");

    if (str.length > 0) {
      background("white");

      // Convert the received string to an integer (the mapped potentiometer value)
      let x = int(str);

      // Make sure x stays within the canvas width (safety measure)
      x = constrain(x, 0, width);

      // Draw the ellipse at that x position, vertically centered
      ellipse(x, height / 2, 40, 40);
    }
  }
}
```
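Serial data can reach the browser in arbitrary chunks, so one read may hold half a number or several numbers at once. The Exercise 1 sketch copes with this by calling `port.readUntil("\n")`, which relies on `Serial.println()` ending every value with a line break. The JavaScript sketch below illustrates that framing idea on its own. `LineBuffer` is a hypothetical class, not part of p5.webserial, and it is not meant to describe how the library works internally.

```javascript
// Hypothetical helper illustrating newline framing; not part of p5.webserial.
class LineBuffer {
  constructor() {
    this.pending = ""; // characters received after the last complete line
  }

  // Append a raw chunk and return every complete line it finishes.
  // Arduino's Serial.println() ends lines with "\r\n", so a trailing "\r" is stripped.
  push(chunk) {
    this.pending += chunk;
    const parts = this.pending.split("\n");
    this.pending = parts.pop(); // the last piece is incomplete (or "")
    return parts.map((line) => line.replace(/\r$/, ""));
  }
}

const buf = new LineBuffer();
console.log(buf.push("12"));           // [] (no newline yet)
console.log(buf.push("3\r\n45\r\n6")); // [ '123', '45' ]
console.log(buf.push("7\r\n"));        // [ '67' ]
```

Keeping the unfinished tail in `pending` is what prevents a value such as 123 from being misread as 12 followed by 3.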

Exercise 2

Task: make something that controls the LED brightness from p5.

p5.js interface:

Arduino Code:

```cpp
int ledPin = 9; // PWM-capable pin to control LED brightness

void setup() {
  Serial.begin(9600);      // Start serial communication at 9600 baud
  pinMode(ledPin, OUTPUT); // Set the LED pin as an output
}

void loop() {
  if (Serial.available()) {             // Check if data is available to read from serial
    int brightness = Serial.parseInt(); // Read the integer value (brightness)

    // Discard trailing non-digit characters (e.g. a '\n' after the number).
    // Otherwise the next parseInt() finds no digits, times out and returns 0,
    // which would switch the LED off.
    while (Serial.available() && !isDigit(Serial.peek())) {
      Serial.read();
    }

    brightness = constrain(brightness, 0, 255); // Limit the value to the 0-255 range
    analogWrite(ledPin, brightness);            // Write the brightness value to the LED pin
  }
}
```

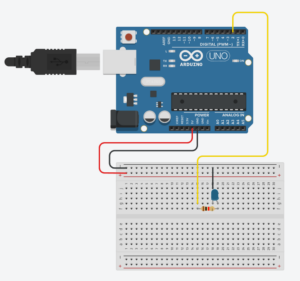

This project creates a real-time visual and physical interface for controlling an LED's brightness with a slider in a p5.js sketch. The brightness value is sent from the browser to the Arduino over serial communication. The Arduino clamps it to 0-255 and applies it with analogWrite() on PWM pin 9. As the user moves the slider, the LED's intensity changes accordingly, both in the physical circuit and on screen through a glowing animation and a gauge ring. The interface also includes a connect/disconnect button for opening and closing the serial connection.
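On the Arduino side, `Serial.parseInt()` skips leading characters that are not digits (or a minus sign) and collects digits until the next non-digit. The result is then clamped with `constrain()`. The JavaScript sketch below imitates that parse-then-clamp step on a complete string, so the effect of different slider messages can be checked without hardware. `parseBrightness` is a hypothetical name. The newline-terminated message format is an assumption, since the p5.js code for this exercise is not shown. The real `parseInt()` also waits up to its timeout (1 second by default) for data, which is not modeled here.

```javascript
// Imitates Serial.parseInt() on a complete string, followed by constrain(value, 0, 255).
// Hypothetical helper: timeouts and partially received data are not modeled.
function parseBrightness(message) {
  const match = message.match(/-?\d+/);              // first run of digits, optional leading minus
  const value = match ? parseInt(match[0], 10) : 0;  // parseInt() yields 0 when no digits arrive
  return Math.min(Math.max(value, 0), 255);          // same clamp as constrain(value, 0, 255)
}

console.log(parseBrightness("128\n")); // 128
console.log(parseBrightness("300\n")); // 255
console.log(parseBrightness("-20\n")); // 0
console.log(parseBrightness("\n"));    // 0
```

The last case shows why a lone newline left in the serial buffer should not be parsed on its own: it yields 0 and would switch the LED off.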

Exercise 3

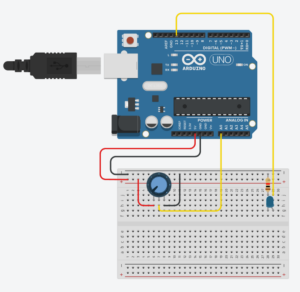

In this exercise, we took the gravity and wind example and connected it to the Arduino. We replaced the digital wind control with a potentiometer, so the wind force is set by hand. Additionally, an LED lights up each time the ball hits the ground and stays lit while the ball rests there.
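The ball's motion comes from a simple per-frame (Euler) update. In `applyForce()`, each force is divided by `mass` and added to acceleration; acceleration is then added to velocity, damped by `drag`, and velocity is added to position. The JavaScript sketch below repeats one such frame with plain numbers instead of `p5.Vector`, which makes the scale of the potentiometer's effect visible. Gravity contributes 0.5 px/frame² whatever the mass, while full wind adds only ±0.02 px/frame². `windFromPot` and `step` are hypothetical names, and the floor bounce is left out.

```javascript
// One frame of the Exercise 3 physics with plain numbers instead of p5.Vector.
// Constants mirror the p5.js code: mass 50, drag 0.99, gravity 0.5 * mass.
const MASS = 50;
const DRAG = 0.99;

// Same linear mapping as p5's map(pot, 0, 1023, -1, 1)
function windFromPot(pot) {
  return (pot / 1023) * 2 - 1;
}

// Advance the ball by one frame (no floor collision in this sketch)
function step(state, pot) {
  const ax = windFromPot(pot) / MASS; // applyForce(wind): F / m
  const ay = (0.5 * MASS) / MASS;     // applyForce(gravity): always 0.5
  const vx = (state.vx + ax) * DRAG;  // velocity.add(acceleration), then velocity.mult(drag)
  const vy = (state.vy + ay) * DRAG;
  return { x: state.x + vx, y: state.y + vy, vx, vy }; // position.add(velocity)
}

const after = step({ x: 320, y: 0, vx: 0, vy: 0 }, 1023);
console.log(after.vx.toFixed(4), after.vy.toFixed(3)); // 0.0198 0.495
```

Because 1023 is odd, no integer reading maps to exactly zero wind. A reading of 511 gives a slight leftward push and 512 a slight rightward one.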

Arduino Code:

```cpp
// Define the pin connected to the LED
const int ledPin = 5;

// Define the analog pin connected to the potentiometer
const int potPin = A0;

void setup() {
  // Start serial communication at 9600 baud
  Serial.begin(9600);

  // Set the LED pin as an output
  pinMode(ledPin, OUTPUT);
}

void loop() {
  // Read the analog value from the potentiometer (0-1023)
  int sensorValue = analogRead(potPin);

  // Send the sensor value to p5.js, one value per line
  Serial.println(sensorValue);

  // Check if a command has arrived from p5.js
  if (Serial.available() > 0) {
    // Read the first incoming character
    char msg = Serial.read();

    if (msg == '1') {
      // '1': the ball touched the ground, so turn the LED on
      digitalWrite(ledPin, HIGH);
    } else if (msg == '0') {
      // '0': the ball is in the air, so turn the LED off
      digitalWrite(ledPin, LOW);
    }

    // Clear any extra characters ('\n' or queued commands) in the serial buffer
    while (Serial.available() > 0) {
      Serial.read();
    }
  }

  // Small delay to avoid flooding the serial output
  delay(50);
}
```
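The Arduino acts only on the first character in its buffer and throws the rest away. As a result, several messages that arrive within one `delay(50)` window collapse into one. The JavaScript simulation below uses a hypothetical `handleCommands` function to stand in for one pass of `loop()`. It shows why it matters that the p5.js sketch keeps sending `"0\n"` on every frame while the ball is airborne. A single `"0"` arriving right after `"1"` would be discarded, but the next batch of `"0"`s turns the LED off.

```javascript
// Hypothetical simulation of one pass of the Exercise 3 Arduino loop():
// `buffered` holds every character received since the previous pass.
// Only the first character is acted on; the rest is discarded, like the
// while (Serial.available() > 0) Serial.read(); loop on the board.
function handleCommands(buffered, ledOn) {
  if (buffered.length === 0) return ledOn; // nothing received: LED keeps its state
  const msg = buffered[0];
  if (msg === "1") return true;  // digitalWrite(ledPin, HIGH)
  if (msg === "0") return false; // digitalWrite(ledPin, LOW)
  return ledOn;                  // any other character is ignored
}

let led = false;
// Bounce: the "hit" and the first airborne frames land in the same window
led = handleCommands("1\n0\n0\n", led);
console.log(led); // true (the queued "0"s were thrown away)
// Next window: the ball is still airborne, so more "0"s arrive
led = handleCommands("0\n0\n0\n", led);
console.log(led); // false
```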

p5.js Code:

```javascript
let port;
let baudrate = 9600;

let position, velocity, acceleration, gravity, wind;
let drag = 0.99;
let mass = 50;

let val = 0;
let str = "";
let hitGround = false;

function setup() {
  createCanvas(640, 360);
  noFill();

  position = createVector(width / 2, 0);
  velocity = createVector(0, 0);
  acceleration = createVector(0, 0);
  gravity = createVector(0, 0.5 * mass);
  wind = createVector(0, 0);

  port = createSerial();

  let connectButton = createButton("Connect");
  connectButton.position(10, 10);
  connectButton.mousePressed(() => {
    if (!port.opened()) port.open(baudrate);
  });

  let disconnectButton = createButton("Disconnect");
  disconnectButton.position(100, 10);
  disconnectButton.mousePressed(() => {
    if (port.opened()) port.close();
  });

  let dropButton = createButton("Drop Ball");
  dropButton.position(220, 10);
  dropButton.mousePressed(dropBall);
}

function draw() {
  background(255);

  // Integrate the forces (gravity + potentiometer-controlled wind)
  applyForce(gravity);
  applyForce(wind);
  velocity.add(acceleration);
  velocity.mult(drag);
  position.add(velocity);
  acceleration.mult(0);

  ellipse(position.x, position.y, mass, mass);

  if (position.y > height - mass / 2) {
    // Bounce off the floor, keeping 90% of the vertical speed
    velocity.y *= -0.9;
    position.y = height - mass / 2;

    if (!hitGround) {
      hitGround = true;
      if (port.opened()) {
        port.write("1\n"); // turn LED on
      }
    }
  } else {
    hitGround = false;
    if (port.opened()) {
      port.write("0\n"); // turn LED off (sent every frame while the ball is in the air)
    }
  }

  // Read the latest potentiometer value (one reading per line)
  str = port.readUntil("\n");
  val = int(str.trim());
  if (!isNaN(val)) {
    updateWind(val);
  }
}

function applyForce(force) {
  // Newton's second law: acceleration = force / mass
  let f = p5.Vector.div(force, mass);
  acceleration.add(f);
}

function updateWind(val) {
  // Map the potentiometer range (0-1023) to a horizontal wind force (-1 to 1)
  wind.x = map(val, 0, 1023, -1, 1);
}

function dropBall() {
  // Reset ball to the top
  position.y = 0;
  velocity.set(0, 0);
  acceleration.set(0, 0);
  hitGround = false;

  // Force LED off
  if (port.opened()) {
    port.write("0\n");
  }
}
```

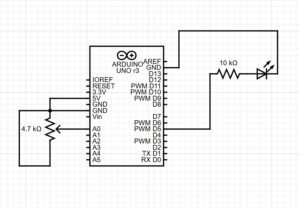

SCHEMATIC


P5.js Artwork


Video

Link: https://drive.google.com/file/d/1bVrW1jPjtYBfAijWJPNHeHTnERkzNfPb/view?usp=sharing