I think the reading really challenged my idea of computer-generated art and how much meaning it has. I didn’t associate much meaning with algorithmically generated art, as I thought it was just a bunch of code, but after watching this lecture I find it far more meaningful. It is essentially a study of how randomness gives meaning to things in nature: in nature we see things that follow certain rules but with slight variation, just as Casey Reas mentions in his lecture.

I am also intrigued by the idea that the universe was all random and chaotic before a God or almighty power brought order to it, as Casey mentions at the beginning of the lecture. I want to relate it to the concept of entropy, which entails that the universe is constantly moving towards a state of randomness; it is going from a state of orderliness toward chaos. If one part is cold and another hot, the laws of physics ensure that the temperature eventually evens out everywhere. My questions are:

Is the existence of life and the current state of the universe the most likely state that the universe can be in? If entropy is a real thing, is the current state of the universe the most likely scenario, the most homogeneous it can get? Or is it merely a stage in the process?

Category: Fall 2022 – Mang

Assignment 2 – AakifR

Concept:

I wanted to use the Urdu word عشق or Ishq and create a fun pattern with it while also involving some level of animation in it. I created a function that would make the word and then I called it in the draw function to repeatedly draw the word on the canvas and create a repetitive pattern. I changed how the word looks each time it is called by using random(). If you want the animation to restart, you can click your mouse.

Highlight:

Not technically too exciting, but I’m kind of proud of creating the word عشق through programming. It was a slightly tedious process and I’m also interested in learning how to streamline that process.
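One possible way to streamline this process is to store the square offsets as data and draw them in a loop. The sketch below is only an illustration: the offsets are copied from the first letter in drawishq(), while the names FIRST_LETTER, placeSquares, and drawLetter are hypothetical and not part of the original sketch.

```javascript
// Offsets [dx, dy] for the first letter, copied from the square() calls in drawishq().
// The second letter, third letter, and dots could be listed the same way.
const FIRST_LETTER = [
  [16, 20], [22, 20], [28, 20], [34, 25],
  [16, 26], [16, 32], [16, 38], [22, 38],
  [28, 38], [34, 38], [40, 38], [10, 38], [4, 38],
];

// Pure helper (hypothetical name): shift every offset by the glyph position.
function placeSquares(offsets, xpos, ypos) {
  return offsets.map(([dx, dy]) => [dx + xpos, dy + ypos]);
}

// p5.js drawing function: one loop replaces the repeated square() calls.
// It only runs inside a p5.js sketch, where square() is defined.
function drawLetter(offsets, xpos, ypos, size) {
  for (const [x, y] of placeSquares(offsets, xpos, ypos)) {
    square(x, y, size, 2); // same corner radius of 2 as the original calls
  }
}
```

With this layout, adjusting the shape of a letter means editing one array rather than a long list of calls.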

Sketch:

Reflection:

I really wanted each عشق to rotate around a point, but it turned out that it was harder than I thought and it’s something I still need to figure out.

let angle = 0;

function setup() {

createCanvas(1200, 1200);

background(250, 141, 141);

// let's draw ishq

}

// function executeishq(){

// for (let xchange = 0; xchange < width; xchange = xchange +30){

// for (let ychange = 0; ychange < height ; ychange = ychange +30){

// noStroke();

// fill(random(100,105),random(10,55),random(25,55));

// rotate(random(1,90));

// drawishq(xchange + random (1, 100),ychange + random(1,100), random(1,5));

// }

// }

// }

function drawishq(xpos,ypos, size){ //the xpos, ypos change the position of ishq on screen

//first letter

square(16 + xpos,20 + ypos,size,2);

square(22 + xpos,20 + ypos,size,2);

square(28 + xpos,20 + ypos,size,2);

square(34 + xpos,25 + ypos,size,2);

square(16 + xpos,26 + ypos,size,2);

square(16 + xpos,32 + ypos,size,2);

square(16 + xpos,38 + ypos,size,2);

square(22 + xpos,38 + ypos,size,2);

square(28 + xpos,38 + ypos,size,2);

square(34 + xpos,38 + ypos,size,2);

square(40 + xpos,38 + ypos,size,2);

square(10 + xpos,38 + ypos,size,2);

square(4 + xpos,38 + ypos,size,2);

//2nd letter

square(-2 + xpos,38 + ypos,size,2);

square(-2 + xpos,32 + ypos,size,2);

square(-8 + xpos,38 + ypos,size,2);

square(-14 + xpos,32 + ypos,size,2);

square(-14 + xpos,38 + ypos,size,2);

square(-20 + xpos,38 + ypos,size,2);

square(-26 + xpos,38 + ypos,size,2);

square(-26 + xpos,32 + ypos,size,2);

square(-32 + xpos,38 + ypos,size,2);

square(-38 + xpos,38 + ypos,size,2);

//dots

square(-14 + xpos,14 + ypos,size,2);

square(-20 + xpos,20 + ypos,size,2);

square(-8 + xpos,20 + ypos,size,2);

//third letter

square(-44 + xpos,38 + ypos,size,2);

square(-50 + xpos,38 + ypos,size,2);

square(-50 + xpos,32 + ypos,size,2);

square(-50 + xpos,26 + ypos,size,2);

square(-50 + xpos,20 + ypos,size,2);

square(-56 + xpos,26 + ypos,size,2);

square(-56 + xpos,20 + ypos,size,2);

square(-50 + xpos,44 + ypos,size,2);

square(-50 + xpos,50 + ypos,size,2);

square(-50 + xpos,56 + ypos,size,2);

square(-50 + xpos,62 + ypos,size,2);

square(-56 + xpos,62 + ypos,size,2);

square(-62 + xpos,62 + ypos,size,2);

square(-68 + xpos,62 + ypos,size,2);

square(-74 + xpos,62 + ypos,size,2);

square(-80 + xpos,62 + ypos,size,2);

square(-86 + xpos,62 + ypos,size,2);

square(-92 + xpos,62 + ypos,size,2);

square(-92 + xpos,56 + ypos,size,2);

square(-92 + xpos,50 + ypos,size,2);

square(-92 + xpos,44 + ypos,size,2);

// dots

square(-50 + xpos,8 + ypos,size,2);

square(-62 + xpos,8 + ypos,size,2);

}

function draw() {

rotate(angle);

drawishq(random(0,width),random(0,height),random(1,5));

angle += radians(2);

if(mouseIsPressed){

background(250, 141, 141);

}

print(mouseX + "," + mouseY);

}
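For the rotation mentioned in the reflection, one common approach in p5.js is to move the origin to the pivot point with translate() before calling rotate(), and to wrap the change in push() and pop() so it does not accumulate. The sketch below is a minimal illustration under that assumption; rotateAround and drawRotated are hypothetical names, and drawRotated relies on the drawishq() function defined above.

```javascript
// Pure helper (hypothetical): rotate point (x, y) around pivot (cx, cy) by `angle` radians.
// This is the math that translate() + rotate() perform for every shape drawn afterwards.
function rotateAround(x, y, cx, cy, angle) {
  const dx = x - cx;
  const dy = y - cy;
  return {
    x: cx + dx * Math.cos(angle) - dy * Math.sin(angle),
    y: cy + dx * Math.sin(angle) + dy * Math.cos(angle),
  };
}

// p5.js version of the same idea. It only runs inside a sketch where
// push(), translate(), rotate(), pop(), and drawishq() are available.
function drawRotated(cx, cy, angle, size) {
  push();            // save the current coordinate system
  translate(cx, cy); // move the origin to the pivot point
  rotate(angle);     // rotate around the new origin (radians by default)
  drawishq(0, 0, size);
  pop();             // restore, so the next word starts from a clean state
}
```

Because rotate() on its own always turns the canvas around the origin (the top-left corner by default), skipping the translate() step makes each word swing in a wide arc instead of spinning in place.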

Assignment 2 – Beauty in Randomness

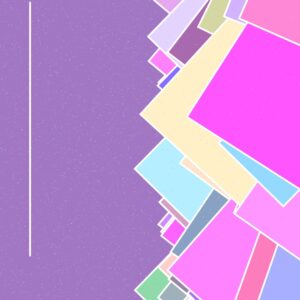

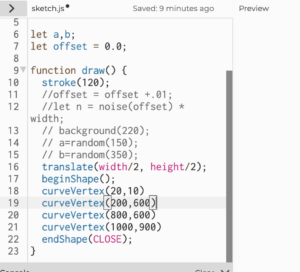

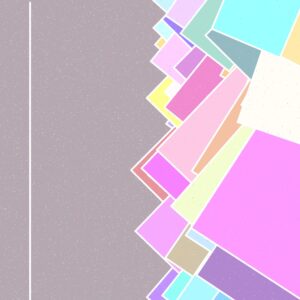

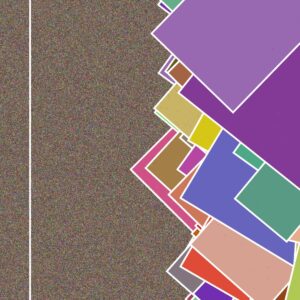

Creating interactive and artistic pieces of digital art seemed interesting to me. I cannot even imagine the endless possibilities when art and technology are mixed. In this assignment, I wanted to explore the p5.js tools and functions. I did not have a specific idea of what I wanted the outcome to be; instead, I sought to embrace the concept of randomness and let it guide my exploration. It was a challenge to start coding without a specific idea, but once I started, I began to visualize and unpack the concept of this assignment.

I found inspiration in the work of the artist Piet Mondrian, who is a pioneer in modern art. However, I wanted to create an interactive element to it. I wanted to recreate the feel I get when I look at his art, in the sense that it can be interpreted and connected within a more individualized sense.

Highlight:

Before settling on one final idea, I tried many others, one of which was to create random shapes using the beginShape and endShape functions. They were fun, but I did not know how I could embed for loops and conditionals into the code. As a result, I tried another approach: instead of focusing on the shapes, I focused on the loops and conditionals and then added the shapes that fit best.

I began with colors. I declared some variables and gave them random values. Then I decided to set a size for the rectangles. I also created my own function for the line on the left, for which I had to declare variables for its speed, direction, and position. Drawing the colorful rectangles was challenging because I had to experiment a lot and use the push and pop, rotate, keyTyped, and translate functions. Of course, I learned about those by watching a lot of videos and reading the reference page. I used nested loops to create the rectangles with random colors. I wanted the rectangles to appear when the mouse is pressed, so I also created a conditional.

for (x = width; x > -size * 4; x = x - size) {

for (y = height; y > -size * 4; y = y - size) {

// When I added size the code was crashing, so I subtracted it instead and it worked! Here y starts at the height of the canvas and decreases by size with each iteration. The same happens to x, which starts at the width.

fill(random(R, R + 190), random(G, G + 190), random(B, B + 190));

// this line sets a random color for each rectangle, with each channel up to 190 above its base value

push();

// push() and pop() keep the translate and rotate below from affecting the rest of the piece

if (mouseIsPressed) {

translate(x, y);

rotate(random(0, 45));

rect(0, 0, size * random(1, 4), size * random(1, 4));

}

// each rectangle is drawn at its grid position (x, y) with a random rotation and a random size

pop();

}

}

The stars were a last touch to the image, and I liked the texture they gave. I also used this part of the code in my previous assignment because it is so pretty. This time I made it move.

Reflection and ideas for future work or improvements:

Getting the idea was a little challenging. It took me some time to figure out the logic for the x and y values in the loops. As a beginner in coding, I think I was able to learn a lot of things with this assignment, especially with the previous failed attempts. I hope that for future assignments I will have improved my coding abilities to do something better than this.

Resources:

https://www.youtube.com/watch?v=ig0q6vfpD38

Week 2 | Creative Reading Reflection: Eyeo2012 – Casey Reas + Computer Art Loops Assignment

Creative Reading Reflection – (Eyeo2012 – Casey Reas):

Casey Reas’ video explores the evolution of serendipity and artistic expression from early 20th-century rebellious art movements to their current recognition in the art world. He delves into the complex relationship between serendipity and creative structure, emphasizing their impact on art.

Reas also discusses the significance of grid-like images in contrast to chaotic generative art, highlighting Rosalind Krauss’ critical perspective and emphasizing their importance both in aesthetics and metaphorically. He touches on how humans perceive order emerging from randomness and the concept of symmetry.

Additionally, Reas, a significant contributor to the development of Processing, explores the philosophy of art and technology. He argues that computers, although known to be organized, can surprisingly create random and chaotic art, linking these concepts to nature and science.

Personally, I share Reas’ perspective on machines being an ideal platform to explore the complex relationship between chance and order. His examples show how chance and order coexist within code and machines, even revealing patterns within chaos. In conclusion, Casey Reas’ video provides inspiring and thought-provoking insights into the interplay of chance, order, and technology in art.

Computer Art – Random Circle Poppers:

I was feeling confused and struggling to come up with a project idea. After some thought, I decided to create something simple yet visually captivating. The result was a colorful circle collage. It might seem unconventional, but it held a special significance for me. I used to have an inexplicable fear of multiple colored objects, and this project was my way of confronting that fear. Surprisingly, I found the combination of vibrant circles to be not only pleasing but also artistic. It reminded me that art can be found in the most unexpected places, even in something as seemingly mundane as a collection of colorful circles.

let circlesNumbers = 10; // Change this to the desired number of circles

function setup() {

createCanvas(400, 400);

background('white');

frameRate(5);

}

function draw() {

for (let i = 0; i < circlesNumbers; i++) {

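let r = random(0, 200);

let g = 0;

let b = random(255); // color channels only go up to 255

let x = random(width); // stay within the 400-pixel-wide canvas

let y = random(height);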

noStroke();

fill(r, g, b, 95);

circle(x, y, 35);

}

}

Reflection:

I tried to use the new “for” loop we learned in class, but I had trouble understanding how to use it in my project. I did my best to learn it and include it in my project somehow. I don’t consider myself an intermediate in coding yet, but I enjoy expressing my artistic ideas and learning more along the way.

WEEK 2 ASSIGNMENT

Concept:

I wasn’t sure what I wanted to do when I first started designing my animation. I simply went with the flow. When I was finished, I was very pleased with what I had produced. My animation shows a moving train from the side perspective. The lines are supposed to represent how fast the train is moving, and the large circles are the train’s windows! This train brought back memories of the trains I used to ride in Berlin. I’d be waiting at a stop when the train would fly by, taking my breath away with its speed. The colors and shapes become more saturated and beautifully mesh together as you move your mouse upward.

Highlight of the Code:

//LAYER 1

noStroke();

fill('rgb(93,35,253)')

circle(circleX,400,10);

fill('rgb(243,72,137)')

circle(circleX,300,10);

fill('rgb(7,204,29)')

circle(circleX,180,10);

circle(circleX,190,10);

circle(circleX,160,10);

circle(circleX,150,10);

circle(circleX,130,10);

circle(circleX,120,10);

circle(circleX,100,10);

circle(circleX,90,10);

fill('rgb(93,35,253)')

circle(circleX,390,10)

fill('rgb(243,72,137)')

circle(circleX,310,10);

fill('rgb(219,243,72)')

circle(circleX,380,10);

circle(circleX,350,10);

circle(circleX,170,10);

circle(circleX,320,10);

circle(circleX,260,10);

circle(circleX,290,10);

circle(circleX,230,10);

circle(circleX,200,10);

circle(circleX,140,10);

circle(circleX,110,10);

circle(circleX,80,10);

circle(circleX,50,10);

circle(circleX,20,10);

fill('rgb(93,35,253)')

circle(circleX,370,10)

circle(circleX,360,10)

circle(circleX,340,10)

circle(circleX,330,10)

circle(circleX,70,10);

circle(circleX,60,10);

circle(circleX,40,10);

circle(circleX,30,10);

circle(circleX,10,10);

circle(circleX,0,10);

fill('rgb(243,72,137)')

circle(circleX,280,10);

circle(circleX,270,10);

circle(circleX,250,10);

circle(circleX,240,10);

circle(circleX,220,10);

circle(circleX,210,10);

circle(circleX,150,80)

I used 10 layers of circles and colors and arranged them in different positions on the x-axis. Depending on the layer, I also changed the coordinates of certain circles and placed them in different color blocks for contrast.

Reflection and Improvements:

I really enjoyed creating this piece! I swear that I will begin to move on to different shapes in the future…lol. For this project, I felt that circles fit best to the image that I was trying to depict. I hope to become better at condensing my code using loops since I had a lot of repetition throughout my code.
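As one possible way to condense the repetition with loops, the y-positions of Layer 1 can be grouped by fill color and drawn in a nested loop. This is only a sketch: the colors and positions are copied from the highlighted code, while LAYER_1, layerCircles, and drawLayer are hypothetical names. The large window circle (circle(circleX,150,80)) is left out and would still be drawn separately.

```javascript
// Small circles of Layer 1, grouped by fill color (values copied from the code above).
const LAYER_1 = {
  "rgb(93,35,253)": [400, 390, 370, 360, 340, 330, 70, 60, 40, 30, 10, 0],
  "rgb(243,72,137)": [310, 300, 280, 270, 250, 240, 220, 210],
  "rgb(7,204,29)": [190, 180, 160, 150, 130, 120, 100, 90],
  "rgb(219,243,72)": [380, 350, 320, 290, 260, 230, 200, 170, 140, 110, 80, 50, 20],
};

// Pure helper (hypothetical): list every small circle of a layer at horizontal position x.
function layerCircles(layer, x) {
  const circles = [];
  for (const [color, ys] of Object.entries(layer)) {
    for (const y of ys) {
      circles.push({ x, y, color });
    }
  }
  return circles;
}

// p5.js drawing function; it only runs inside a sketch where
// noStroke(), fill(), and circle() are defined.
function drawLayer(layer, x) {
  noStroke();
  for (const c of layerCircles(layer, x)) {
    fill(c.color);
    circle(c.x, c.y, 10);
  }
}
```

Inside draw(), a call such as drawLayer(LAYER_1, circleX) would then stand in for the forty-one individual circle() calls, and each further layer would only need its own color table.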

TRAIN VIDEO ⬇️

TouchDesigner Workshop

Artist bio:

Harshini J. Karunaratne is a Sri Lankan-Peruvian digital artist working at the intersections of film, theatre and technology. Rooted in a practice of artistic research and performance, she develops projects that explore themes of eco-grief, gender, the body and afterlife. Harshini’s explorations are centered in the visual, combining a background in photography with VJing, utilizing Resolume and TouchDesigner primarily to create interactive and real-time performances.

Assignment 1 – AakifR

For the first assignment, I wanted to create a character that represents me in some way. I used a simple pencil sketch to figure out which shapes go where and then started coding. The final sketch turned out to be different than what I originally planned for as the fluffy hair was quite difficult to achieve.

Highlight:

I think something that stood out to me was figuring out the semicircles by browsing the reference library. I think it gave my portrait more dimensionality instead of being all circles.

Future:

I think I would like to add some animations – like blinking eyes or moving mouth. Or some interactive elements where the user could change the portrait’s mood by clicking, or change the hat.

Code:

function setup() {

createCanvas(600, 600);

background(254, 226, 221);

//body

fill(10);

rect(140, 460, 325, 250, 100);

fill(255, 219, 172);

ellipse(145, 293, 50, 50 ); //left ear

ellipse(454, 290, 50, 50 ); // right ear

arc(300, 240, 325, 450, 0, PI, OPEN); // face (lower half of an ellipse)

//ellipse(300, 300, 300, 400); // face

fill(255);

arc(230, 280, 60, 40, 0, PI, OPEN); // left eye

fill(0);

circle(220, 290, 15);

//circle(230, 273, 60); // left eye

fill(255);

arc(354, 280, 60, 40, 0, PI, OPEN); // right eye

fill(0);

circle(342, 290, 15);

//circle(354, 273, 60); // right eye

//circle(294, 410, 60);

fill(0, 0, 0);

arc(295, 380, 60, 45, 0, PI, OPEN); // mouth

// row of dark semicircles just below the hat brim

arc(162, 238, 60, 45, 0, PI, OPEN);

arc(192, 238, 60, 45, 0, PI, OPEN);

arc(220, 238, 60, 45, 0, PI, OPEN);

arc(260, 238, 60, 45, 0, PI, OPEN);

arc(290, 238, 60, 45, 0, PI, OPEN);

arc(320, 238, 60, 45, 0, PI, OPEN);

arc(350, 238, 60, 45, 0, PI, OPEN);

arc(390, 238, 60, 45, 0, PI, OPEN);

arc(420, 238, 60, 45, 0, PI, OPEN);

arc(436, 238, 60, 45, 0, PI, OPEN);

//hat

fill(111, 78, 55);

rect(110, 230, 375, 10);

arc(300, 230, 340, 300, PI, 0, OPEN); // crown of the hat (upper half of an ellipse)

//nose

line(293, 308, 282, 347);

line(282, 347, 301, 348);

}

function draw() {

print(mouseX + "," + mouseY);

}

Final Project

Hassan, Aaron, Majid.

CONCEPT

How would someone virtually learn how complicated it is to drive a car? Would teaching someone how to drive a car virtually save a lot of money and decrease potential accidents associated with driving? These questions inspired our project, which is to create a remote-controlled car that can be controlled using hand gestures that imitate steering-wheel movements, specifically by tracking the user’s hand position, together with a foot pedal. The foot pedal is used to control acceleration, braking, and reversing. We achieve all this by connecting a p5.js tracking system to the car, which interprets the user’s hand gestures and translates them into commands that control the car’s movements. The hand gestures and pedal control are synced via two serial ports that communicate with the microcontroller of the car.

Experience

The entire concept is not based only on a driving experience. We introduce a racing experience by creating a race circuit. The idea is for a user to complete a lap in the fastest time possible. Before you begin the experience, you can view the leaderboard. After your time has been recorded, a pop-up appears for you to input your name to be added to the leaderboard. For this, we created a new user interface on a separate laptop. This laptop powers an Arduino circuit connection which features an ultrasonic sensor. The ultrasonic sensor checks when the car has crossed the start line and begins a timer, and detects when the user ends the circuit. After this, it records the time it took a user to complete the track and sends this data to the leaderboard.

This piece of code is how we’re able to load and show the leaderboard.

function loadScores() {

let storedScores = getItem("leaderboard");

if (storedScores) {

highscores = storedScores;

console.log("Highscores loaded:", highscores);

} else {

console.log("No highscores found.");

}

}

function saveScores() {

// make changes to the highscores array here...

storeItem("leaderboard", highscores);

console.log("Highscores saved:", highscores);

}
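The comment in saveScores() leaves the actual update of the highscores array open. A minimal sketch of one way to do it, assuming each entry is an object with a name and a lap time in seconds and that a shorter time ranks higher; addScore, the entry shape, and the limit of ten entries are all assumptions, not the project's actual code.

```javascript
// Hypothetical helper: return a new leaderboard with the entry added,
// sorted by fastest lap first and trimmed to maxEntries.
function addScore(scores, name, seconds, maxEntries = 10) {
  const updated = [...scores, { name, seconds }];
  updated.sort((a, b) => a.seconds - b.seconds); // ascending: fastest lap on top
  return updated.slice(0, maxEntries);
}
```

In the sketch this could be used as highscores = addScore(highscores, playerName, lapTime) just before calling saveScores(), where playerName and lapTime stand for whatever the pop-up and the timer provide.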

IMPLEMENTATION (The Car & Foot Pedal)

We first built the remote-controlled car using an Arduino Uno board, a servo motor, a Motor Shield 4 Channel L293D, an ultrasonic sensor, 4 DC motors, and other peripheral components. Using the Motor Shield 4 Channel L293D reduced the number of wired connections and left us space on the board on which we mounted all other components. Afterwards, we created a new Arduino circuit connection to use the foot pedal.

The foot pedal sends signals to the car by rotating a potentiometer whenever the pedal is engaged. The potentiometer value is converted into forward/backward movement before it reaches p5.js via serial communication.
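To illustrate the kind of conversion described here, the sketch below turns a potentiometer reading into the "Acceleration,Brake" pair of flags that the p5.js side reads. It is written in JavaScript only to stay consistent with the rest of the code in this post; in the project this logic would live in the Arduino sketch. The 0 to 1023 range matches Arduino's analogRead(), but the two thresholds and the name pedalFlags are assumptions made for the example.

```javascript
// Hypothetical thresholds on a 0..1023 potentiometer reading.
const ACCELERATE_ABOVE = 600; // pedal pushed past this point: accelerate
const BRAKE_BELOW = 400;      // pedal pulled back past this point: brake

// Convert one reading into the "Acceleration,Brake" line sent over serial.
function pedalFlags(reading) {
  if (reading > ACCELERATE_ABOVE) return "1,0";
  if (reading < BRAKE_BELOW) return "0,1";
  return "0,0"; // dead zone in the middle: neither flag is set
}
```

Leaving a dead zone between the two thresholds keeps small jitters of the potentiometer from flipping the car between the two states.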

P5/Arduino Communication

At first, a handshake is established to ensure communication exists before proceeding with the program:

//////////////VarCar/////

// Define Serial port

let serial;

let keyVal;

//////////////////////////

const HANDTRACKW = 432;

const HANDTRACKH = 34;

const VIDEOW = 320;

const VIDEOH = 240;

const XINC = 5;

const CLR = "rgba(200, 63, 84, 0.5)";

let smooth = false;

let recentXs = [];

let numXs = 0;

// Posenet variables

let video;

let poseNet;

// Variables to hold poses

let myPose = {};

let myRHand;

let movement;

////////////////////////

let Acceleration = 0;

let Brake = 0;

let data1 = 0;

let data2 = 0;

let s2_comp=false;

function setup() {

// Create a canvas

//createCanvas(400, 400);

// // Open Serial port

// serial = new p5.SerialPort();

// serial.open("COM3"); // Replace with the correct port for your Arduino board

// serial.on("open", serialReady);

///////////////////////////

// Create p5 canvas

The Hand Gestures

Two resources that helped detect the user’s hand position were PoseNet and Teachable Machine. We used these two resources to create a camera tracking system which was then programmed to interpret specific hand gestures, such as moving the hand right or left to move the car in those directions. This aspect of our code handles the hand tracking and gestures.

if (myPose) {

try {

// Get right hand from pose

myRHand = getHand(myPose, false);

myRHand = mapHand(myRHand);

const rangeLeft2 = [0, 0.2 * HANDTRACKW];

const rangeLeft1 = [0.2 * HANDTRACKW, 0.4 * HANDTRACKW];

const rangeCenter = [0.4 * HANDTRACKW, 0.6 * HANDTRACKW];

const rangeRight1 = [0.6 * HANDTRACKW, 0.8 * HANDTRACKW];

const rangeRight2 = [0.8 * HANDTRACKW, HANDTRACKW];

// Check which range the hand is in and print out the corresponding data

if (myRHand.x >= rangeLeft2[0] && myRHand.x < rangeLeft2[1]) {

print("LEFT2");

movement = -1;

} else if (myRHand.x >= rangeLeft1[0] && myRHand.x < rangeLeft1[1]) {

print("LEFT1");

movement = -0.5;

} else if (myRHand.x >= rangeCenter[0] && myRHand.x < rangeCenter[1]) {

print("CENTER");

movement = 0;

} else if (myRHand.x >= rangeRight1[0] && myRHand.x < rangeRight1[1]) {

print("RIGHT1");

movement = 0.5;

} else if (myRHand.x >= rangeRight2[0] && myRHand.x <= rangeRight2[1]) {

print("RIGHT2");

movement = 1;

}

// Draw hand

push();

const offsetX = (width - HANDTRACKW) / 2;

const offsetY = (height - HANDTRACKH) / 2;

translate(offsetX, offsetY);

noStroke();

fill(CLR);

ellipse(myRHand.x, HANDTRACKH / 2, 50);

pop();

} catch (err) {

print("Right Hand not Detected");

}

print(keyVal)
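The five ranges above split the tracking width into equal fifths, so the same lookup can also be computed directly from the x-position. This is a compact sketch of that idea rather than the code we ran; zoneForX, ZONES, and TRACK_WIDTH are hypothetical names, with TRACK_WIDTH set to the same value as HANDTRACKW.

```javascript
const TRACK_WIDTH = 432;              // same value as HANDTRACKW in the sketch
const ZONES = [-1, -0.5, 0, 0.5, 1];  // LEFT2, LEFT1, CENTER, RIGHT1, RIGHT2

// Return the movement value for a hand x-position, or null when the hand
// is outside the tracking strip or was not detected (x is undefined/NaN).
function zoneForX(x, trackWidth = TRACK_WIDTH) {
  if (!(x >= 0 && x <= trackWidth)) return null;
  const index = Math.min(
    Math.floor((x / trackWidth) * ZONES.length),
    ZONES.length - 1 // x === trackWidth still belongs to the last zone
  );
  return ZONES[index];
}
```

Returning null for a missing hand makes the "not detected" case explicit instead of relying on the try/catch block.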

The Final Result & Car Control

The final result was an integrated system consisting of the car, the pedal, and gesture control in p5.js. When the code is run in p5.js, the camera detects a user’s hand position and translates it into movement commands for the car.

The entire code for controlling the car.

//////////////VarCar/////

// Define Serial port

let serial;

let keyVal;

//////////////////////////

const HANDTRACKW = 432;

const HANDTRACKH = 34;

const VIDEOW = 320;

const VIDEOH = 240;

const XINC = 5;

const CLR = "rgba(200, 63, 84, 0.5)";

let smooth = false;

let recentXs = [];

let numXs = 0;

// Posenet variables

let video;

let poseNet;

// Variables to hold poses

let myPose = {};

let myRHand;

let movement;

////////////////////////

let Acceleration = 0;

let Brake = 0;

let data1 = 0;

let data2 = 0;

let s2_comp=false;

function setup() {

// Create a canvas

//createCanvas(400, 400);

// // Open Serial port

// serial = new p5.SerialPort();

// serial.open("COM3"); // Replace with the correct port for your Arduino board

// serial.on("open", serialReady);

///////////////////////////

// Create p5 canvas

createCanvas(600, 600);

rectMode(CENTER);

// Create webcam capture for posenet

video = createCapture(VIDEO);

video.size(VIDEOW, VIDEOH);

// Hide the webcam element, and just show the canvas

video.hide();

// Posenet option to make posenet mirror user

const options = {

flipHorizontal: true,

};

// Create poseNet to run on webcam and call 'modelReady' when model loaded

poseNet = ml5.poseNet(video, options, modelReady);

// Everytime we get a pose from posenet, call "getPose"

// and pass in the results

poseNet.on("pose", (results) => getPose(results));

}

function draw() {

// one value from Arduino controls the background's red color

//background(0, 255, 255);

/////////////////CAR///////

background(0);

strokeWeight(2);

stroke(100, 100, 0);

line(0.2 * HANDTRACKW, 0, 0.2 * HANDTRACKW, height);

line(0.4 * HANDTRACKW, 0, 0.4 * HANDTRACKW, height);

line(0.6 * HANDTRACKW, 0, 0.6 * HANDTRACKW, height);

line(0.8 * HANDTRACKW, 0, 0.8 * HANDTRACKW, height);

line(HANDTRACKW, 0, HANDTRACKW, height);

line(1.2 * HANDTRACKW, 0, 1.2 * HANDTRACKW, height);

if (myPose) {

try {

// Get right hand from pose

myRHand = getHand(myPose, false);

myRHand = mapHand(myRHand);

const rangeLeft2 = [0, 0.2 * HANDTRACKW];

const rangeLeft1 = [0.2 * HANDTRACKW, 0.4 * HANDTRACKW];

const rangeCenter = [0.4 * HANDTRACKW, 0.6 * HANDTRACKW];

const rangeRight1 = [0.6 * HANDTRACKW, 0.8 * HANDTRACKW];

const rangeRight2 = [0.8 * HANDTRACKW, HANDTRACKW];

// Check which range the hand is in and print out the corresponding data

if (myRHand.x >= rangeLeft2[0] && myRHand.x < rangeLeft2[1]) {

print("LEFT2");

movement = -1;

} else if (myRHand.x >= rangeLeft1[0] && myRHand.x < rangeLeft1[1]) {

print("LEFT1");

movement = -0.5;

} else if (myRHand.x >= rangeCenter[0] && myRHand.x < rangeCenter[1]) {

print("CENTER");

movement = 0;

} else if (myRHand.x >= rangeRight1[0] && myRHand.x < rangeRight1[1]) {

print("RIGHT1");

movement = 0.5;

} else if (myRHand.x >= rangeRight2[0] && myRHand.x <= rangeRight2[1]) {

print("RIGHT2");

movement = 1;

}

// Draw hand

push();

const offsetX = (width - HANDTRACKW) / 2;

const offsetY = (height - HANDTRACKH) / 2;

translate(offsetX, offsetY);

noStroke();

fill(CLR);

ellipse(myRHand.x, HANDTRACKH / 2, 50);

pop();

} catch (err) {

print("Right Hand not Detected");

}

print(keyVal)

//print(movement);

// print("here")

//print(writers);

}

//////////////////////////

if (!serialActive1 && !serialActive2) {

text("Press Space Bar to select Serial Port", 20, 30);

} else if (serialActive1 && serialActive2) {

text("Connected", 20, 30);

// Print the current values

text("Acceleration = " + str(Acceleration), 20, 50);

text("Brake = " + str(Brake), 20, 70);

mover();

}

}

function keyPressed() {

if (key == " ") {

// important to have in order to start the serial connection!!

setUpSerial1();

} else if (key == "x") {

// important to have in order to start the serial connection!!

setUpSerial2();

s2_comp=true

}

}

/////////////CAR/////////

function serialReady() {

// Send initial command to stop the car

serial.write("S",0);

print("serialrdy");

}

function mover() {

print("mover");

// Send commands to the car based on the pedal flags and the hand position

if (Acceleration==1) {

writeSerial('S',0);

//print(typeof msg1)

}else if (Brake==1) {

writeSerial('W',0);

}else if ( movement < 0) {

print("left")

writeSerial('A',0);

}else if ( movement > 0) {

print("right")

writeSerial('D',0);

}else if (movement== 0) {

print("stop");

writeSerial('B',0);

}

}

// When posenet model is ready, let us know!

function modelReady() {

console.log("Model Loaded");

}

// Function to get and send pose from posenet

function getPose(poses) {

// We're using single detection so we'll only have one pose

// which will be at [0] in the array

myPose = poses[0];

}

// Function to get hand out of the pose

function getHand(pose, mirror) {

// Return the wrist

return pose.pose.rightWrist;

}

// function mapHand(hand) {

// let tempHand = {};

// tempHand.x = map(hand.x, 0, VIDEOW, 0, HANDTRACKW);

// tempHand.y = map(hand.y, 0, VIDEOH, 0, HANDTRACKH);

// if (smooth) tempHand.x = averageX(tempHand.x);

// return tempHand;

// }

function mapHand(hand) {

let tempHand = {};

// Only update hand.x if the confidence score is greater than 0.2

if (hand.confidence > 0.2) {

tempHand.x = map(hand.x, 0, VIDEOW, 0, HANDTRACKW);

if (smooth) tempHand.x = averageX(tempHand.x);

}

tempHand.y = map(hand.y, 0, VIDEOH, 0, HANDTRACKH);

return tempHand;

}

function averageX(x) {

// the first time this runs we add the current x to the array n number of times

if (recentXs.length < 1) {

console.log("this should only run once");

for (let i = 0; i < numXs; i++) {

recentXs.push(x);

}

// if the number of frames to average is increased, add more to the array

} else if (recentXs.length < numXs) {

console.log("adding more xs");

const moreXs = numXs - recentXs.length;

for (let i = 0; i < moreXs; i++) {

recentXs.push(x);

}

// otherwise update only the most recent number

} else {

recentXs.shift(); // removes first item from array

recentXs.push(x); // adds new x to end of array

}

let sum = 0;

for (let i = 0; i < recentXs.length; i++) {

sum += recentXs[i];

}

// return the average x value

return sum / recentXs.length;

}

////////////////////////

// This function will be called by the web-serial library

// with each new *line* of data. The serial library reads

// the data until the newline and then gives it to us through

// this callback function

function readSerial(data) {

////////////////////////////////////

//READ FROM ARDUINO HERE

////////////////////////////////////

if (data != null) {

// print(data.value);

let fromArduino = data.value.split(",");

if (fromArduino.length == 2) {

//print(int(fromArduino[0]));

//print(int(fromArduino[1]));

Acceleration = int(fromArduino[0]);

Brake = int(fromArduino[1])

}

//////////////////////////////////

//SEND TO ARDUINO HERE (handshake)

//////////////////////////////////

if(s2_comp){

let sendToArduino = Acceleration + "," + Brake + "\n";

// mover()

//print("output:");

//print(sendToArduino);

//writeSerial(sendToArduino, 0);

}

}

}

The car moves forward/backward when a user engages the foot pedals, and steers left/right when the user moves his/her hand left/right. The components of the car system communicate via serial communication in p5.js. To enable this, we created 2 serial ports (one for the car and the other for the foot pedal).
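The pedal data arrives as a text line of two comma-separated numbers, which readSerial() splits into Acceleration and Brake. A slightly more defensive version of that parsing step could look like the sketch below; parsePedalLine is a hypothetical helper and not part of the code above.

```javascript
// Hypothetical helper: parse one "Acceleration,Brake" line from the pedal board.
// Returns null for anything that is not exactly two integers.
function parsePedalLine(line) {
  const parts = String(line).trim().split(",");
  if (parts.length !== 2) return null;
  const [acceleration, brake] = parts.map((p) => Number.parseInt(p, 10));
  if (Number.isNaN(acceleration) || Number.isNaN(brake)) return null;
  return { acceleration, brake };
}
```

Rejecting malformed lines this way avoids writing NaN into the Acceleration and Brake variables when a partial line comes through right after the port opens.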

CHALLENGES

One challenge we faced during the implementation was accurately interpreting the user’s hand gestures. The camera tracking system required a lot of experimentation and programming adjustments to ensure that it interprets the user’s hand movements while also being light and responsive. Originally the camera tracked both the X and Y axes, but this made p5 very slow and laggy because of the number of variables it had to keep track of. The solution was simply to remove one of the axes, which improved the responsiveness of the program drastically.

The initial plan was to operate the car wirelessly; however, this was not possible due to many factors, such as using the wrong type of Bluetooth board. With limited time, we resorted to working with two serial ports for communication between the car, the pedals, and the hand gesture control. This introduced a new problem: letting the car move freely while tethered. We solved it by using an Arduino USB extension cable. To allow users at the showcase to drive the car around without it tripping over the wire, we tied a fishing line across the pillars of the Art Center where we were set up, and hung the extended USB cable off the fishing line so that it acted like a simulated roof for our setup. This way, the car could roam freely around the circuit and never trip.

Another major roadblock was the serial ports in p5js. Since the project uses both a pedal and the car, there was the need to use 2 separate Arduino Uno boards to control both systems. This necessitated the use of 2 serial ports in p5js. The original starter code for connecting p5 to Arduino was only for 1 serial port. A lot of time was spent adjusting the existing code to function with 2 serial ports.
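One way to keep two ports from interfering with each other is to give each port its own small piece of state instead of sharing global variables. The sketch below shows the idea for the line buffering alone: makeLineReader is a hypothetical factory that collects incoming text chunks for one port and calls a callback for each complete line. It does not open any serial port itself and is not the starter code we adapted.

```javascript
// Hypothetical factory: each call returns an independent reader with its own buffer.
function makeLineReader(onLine) {
  let buffer = "";
  return function feed(chunk) {
    buffer += chunk;
    let newline = buffer.indexOf("\n");
    while (newline !== -1) {
      onLine(buffer.slice(0, newline).trim()); // hand over one complete line
      buffer = buffer.slice(newline + 1);      // keep the unfinished remainder
      newline = buffer.indexOf("\n");
    }
  };
}

// One reader per board, so a half-received pedal line never mixes with car data.
const pedalLines = [];
const carLines = [];
const feedPedal = makeLineReader((line) => pedalLines.push(line));
const feedCar = makeLineReader((line) => carLines.push(line));
```

Each chunk read from the pedal port would go to feedPedal and each chunk from the car port to feedCar, with the callbacks replaced by whatever should happen when a full line arrives.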

The lessons learned, especially with regard to robots, can be grouped into the following points:

Planning is key: The project can quickly become overwhelming without proper planning. It’s important to define the project goals, select appropriate devices and how to sync the code to those devices and create a detailed project plan.

Test as often as you can before the showcase date: Testing is crucial in robotics projects, especially when dealing with multiple hardware components and sensors. This one was no exception. It’s important to test each component and module separately before combining them into the final project.

Future steps needed to take our project to the next level.

- Expand functionality: While the current design allows for movement in various directions, there are other features that could be added to make the car more versatile. We plan on adding cameras and other sensors (LiDAR) to detect obstacles and create a map of the environment while providing visual feedback to the user.

- Optimize hardware and software: We also plan on optimizing the hardware and software components used. This would involve changing the motors to more efficient or powerful ones, using more accurate sensors (not the ultrasonic sensor), or exploring other microcontrollers that can better handle the project’s requirements. Additionally, optimizing the software can improve the car’s responsiveness and performance. For example, our code can detect obstacles but cannot detect the end of a path. Regardless, we believe we can engineer a reverse obstacle-sensing algorithm that could detect cliffs, potholes, and dangerous empty spaces on roads to ultimately reduce road accidents.

Demonstration

FINAL PROJECT PRODUCTION

USER TESTING

During the testing phase of the project, participants found it relatively easy to understand how to control it, primarily thanks to the instructions menu provided on the introduction screen and the clear grid divisions on the control page that explicitly indicated the directions. However, one aspect that caused confusion for many was determining which hand controlled the left movement and which controlled the right movement. This confusion arose because the camera captures a laterally inverted image, which is then processed by the computer. To address this, explaining the control scheme after the initial confusion helped participants grasp the concept of how the handpose.js library maps the tracked hand movements to the car’s controls.
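The left/right confusion described above comes down to one line of arithmetic. Below is a minimal sketch of mirroring a tracked x coordinate so that the user's left hand appears on the left of the screen. The helper name mirrorX and the 640-pixel frame width are assumptions made for illustration; they are not taken from the project code.

```javascript
// Hypothetical helper: mirror an x coordinate across a frame of the given width.
// A point near the left edge of the camera image ends up near the right edge, and vice versa.
function mirrorX(x, frameWidth) {
  return frameWidth - x;
}

// Assumed 640-pixel-wide webcam frame (not taken from the project).
const assumedFrameWidth = 640;
console.log(mirrorX(100, assumedFrameWidth)); // 540
console.log(mirrorX(320, assumedFrameWidth)); // 320 (the center stays in place)
```

If the video itself is also drawn flipped (in p5.js this is commonly done with translate(width, 0) followed by scale(-1, 1) before calling image()), the on-screen picture and the control regions should agree with what the user expects from a mirror.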

The most successful aspect of my project is undoubtedly the mapping of hand movements to the car’s controls. The serial connection between the Arduino and p5.js worked flawlessly, ensuring smooth communication. However, there is room for improvement in the implementation of the hand tracking model. It needs to be optimized so that the regions of the canvas corresponding to the various controls are accurately defined. I also realized that the lighting conditions greatly affect how easily the model is able to track the hands. To improve that, I plan to go back to using PoseNet, because I found it to be more accurate than the handpose model I am currently using.

FINAL PROJECT DOCUMENTATION- Mani Drive

PROJECT DESCRIPTION

My project, Mani-Drive, consists of a robot car controlled by an Arduino board and a p5.js program. The robot car’s movements are controlled through a combination of hand tracking and user input via the p5.js program. The program uses the ml5.js library for hand tracking through a webcam. The position of the tracked hand determines the movement of the robot car. Moving the hand to the left, right, or forward commands the robot to move in the corresponding direction, while moving the hand downward makes the robot car move backward. The p5.js program communicates with the Arduino board via serial communication to control the robot’s movements.

The circuit setup consists of the Arduino Uno board, basic wire connections, a DRV8833 DC motor driver, a resistor, an ultrasonic sensor, four wheels, a buzzer, an LED, and a breadboard. The Arduino Uno board is responsible for motor control and obstacle detection. It uses the ultrasonic sensor to measure the distance to obstacles. If an obstacle is detected within the safe distance, the Arduino stops the robot’s movement, plays a sound using the buzzer, and turns on the LED as a warning. The Arduino code continuously checks the distance and resumes normal movement if no obstacles are detected. The Arduino board also receives commands from the p5.js program to control the robot’s movements based on the hand tracking data.

INTERACTION DESIGN

The interaction design of the project provides a user-friendly and intuitive experience for controlling and interacting with the robot car. By running the p5.js program and pressing the “s” key, the user initiates the program and activates hand tracking. The webcam captures the user’s hand movements, which are visually represented by a circular shape on the screen. Moving the hand left, right, up, or down controls the corresponding movement of the robot car. The color of the circular shape changes to indicate the intended movement direction, enhancing user understanding. Visual feedback includes a live video feed from the webcam and a warning message if an obstacle is detected. The Arduino board measures obstacle distances using an ultrasonic sensor and provides visual feedback through an LED and auditory feedback through a buzzer to alert the user about detected obstacles. This interactive design empowers users to control the robot car through natural hand gestures while receiving real-time visual and auditory feedback, ensuring a seamless and engaging interaction experience.

CODE (ARDUINO)

// Pin definitions for motor control
const int ain1Pin = 3;
const int ain2Pin = 4;
const int pwmaPin = 5;
const int bin1Pin = 8;
const int bin2Pin = 7;
const int pwmbPin = 6;

const int triggerPin = 9;    // Pin connected to the trigger pin of the ultrasonic sensor
const int echoPin = 10;      // Pin connected to the echo pin of the ultrasonic sensor
const int safeDistance = 10; // Define a safe distance in centimeters
const int buzzerPin = 11;    // Pin connected to the buzzer
const int ledPin = 2;        // Pin connected to the LED

long duration;
int distance;
bool isBraking = false;

void setup() {
  // Configure motor control pins as outputs
  pinMode(ain1Pin, OUTPUT);
  pinMode(ain2Pin, OUTPUT);
  pinMode(pwmaPin, OUTPUT);
  pinMode(bin1Pin, OUTPUT);
  pinMode(bin2Pin, OUTPUT);
  pinMode(pwmbPin, OUTPUT);

  // Initialize the ultrasonic sensor pins
  pinMode(triggerPin, OUTPUT);
  pinMode(echoPin, INPUT);

  // Initialize the buzzer pin
  pinMode(buzzerPin, OUTPUT);

  // Initialize the LED pin
  pinMode(ledPin, OUTPUT);

  // Initialize serial communication
  Serial.begin(9600);
}

void loop() {
  // Measure the distance
  digitalWrite(triggerPin, LOW);
  delayMicroseconds(2);
  digitalWrite(triggerPin, HIGH);
  delayMicroseconds(10);
  digitalWrite(triggerPin, LOW);
  duration = pulseIn(echoPin, HIGH);

  // Calculate the distance in centimeters
  distance = duration * 0.034 / 2;

  // Handle object detection
  if (distance <= safeDistance) {
    if (!isBraking) {
      isBraking = true;
      stopRobot();
      playNote();
      digitalWrite(ledPin, HIGH); // Turn on the LED when braking
    }
  } else {
    isBraking = false;
    digitalWrite(ledPin, LOW); // Turn off the LED when not braking

    // Continue with normal movement
    if (Serial.available() > 0) {
      char command = Serial.read();

      // Handle movement commands
      switch (command) {
        case 'L':
          moveLeft();
          break;
        case 'R':
          moveRight();
          break;
        case 'U':
          moveForward();
          break;
        case 'D':
          moveBackward();
          break;
        case 'S':
          stopRobot();
          break;
      }
    }
  }
}

// Move the robot left
void moveLeft() {
  digitalWrite(ain1Pin, HIGH);
  digitalWrite(ain2Pin, LOW);
  analogWrite(pwmaPin, 0);
  digitalWrite(bin1Pin, HIGH);
  digitalWrite(bin2Pin, LOW);
  analogWrite(pwmbPin, 255);
}

// Move the robot right
void moveRight() {
  digitalWrite(ain1Pin, LOW);
  digitalWrite(ain2Pin, HIGH);
  analogWrite(pwmaPin, 255);
  digitalWrite(bin1Pin, LOW);
  digitalWrite(bin2Pin, HIGH);
  analogWrite(pwmbPin, 0);
}

// Move the robot forward
void moveForward() {
  digitalWrite(ain1Pin, LOW);
  digitalWrite(ain2Pin, HIGH);
  analogWrite(pwmaPin, 255);
  digitalWrite(bin1Pin, HIGH);
  digitalWrite(bin2Pin, LOW);
  analogWrite(pwmbPin, 255);
}

// Move the robot backward
void moveBackward() {
  digitalWrite(ain1Pin, HIGH);
  digitalWrite(ain2Pin, LOW);
  analogWrite(pwmaPin, 255);
  digitalWrite(bin1Pin, LOW);
  digitalWrite(bin2Pin, HIGH);
  analogWrite(pwmbPin, 255);

  // Check the distance after moving backward
  delay(10); // Adjust this delay based on your needs

  // Measure the distance again
  digitalWrite(triggerPin, LOW);
  delayMicroseconds(2);
  digitalWrite(triggerPin, HIGH);
  delayMicroseconds(10);
  digitalWrite(triggerPin, LOW);
  duration = pulseIn(echoPin, HIGH);

  // Calculate the distance in centimeters
  int newDistance = duration * 0.034 / 2;

  // If an obstacle is detected, stop the robot
  if (newDistance <= safeDistance) {
    stopRobot();
    playNote();
    digitalWrite(ledPin, HIGH); // Turn on the LED when braking
  } else {
    digitalWrite(ledPin, LOW); // Turn off the LED when not braking
  }
}

// Stop the robot
void stopRobot() {
  digitalWrite(ain1Pin, LOW);
  digitalWrite(ain2Pin, LOW);
  analogWrite(pwmaPin, 0);
  digitalWrite(bin1Pin, LOW);
  digitalWrite(bin2Pin, LOW);
  analogWrite(pwmbPin, 0);
}

// Play a note on the buzzer
void playNote() {
  // Define the frequency of the note to be played
  int noteFrequency = 1000; // Adjust this value to change the note frequency

  // Play the note on the buzzer
  tone(buzzerPin, noteFrequency);
  delay(500); // Adjust this value to change the note duration
  noTone(buzzerPin);
}

DESCRIPTION OF CODE

The code begins by defining the pin assignments for motor control, ultrasonic sensor, buzzer, and LED. These pins are configured as inputs or outputs in the setup() function, which initializes the necessary communication interfaces and hardware components.

The core functionality is implemented within the loop() function, which is executed repeatedly. Within this function, the distance to any obstacles is measured using the ultrasonic sensor. The duration of the ultrasonic pulse is captured and converted into distance in centimeters. This distance is then compared to a predefined safe distance.
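The distance conversion is plain arithmetic, so it can be checked away from the board. Here is a small sketch in JavaScript (used only to keep the examples in one language) that reproduces the formula from the Arduino code. The function names echoToCm and isTooClose are hypothetical, and Math.trunc stands in for the truncation that happens when the Arduino sketch stores the result in an int.

```javascript
// Speed of sound in centimeters per microsecond, the constant used in the Arduino sketch.
const CM_PER_MICROSECOND = 0.034;

// Convert a round-trip echo time (microseconds) into a one-way distance in whole centimeters.
// Dividing by 2 accounts for the pulse travelling to the obstacle and back.
function echoToCm(durationMicros) {
  return Math.trunc((durationMicros * CM_PER_MICROSECOND) / 2);
}

// Compare against the safe distance (10 cm in the sketch).
function isTooClose(durationMicros, safeDistanceCm = 10) {
  return echoToCm(durationMicros) <= safeDistanceCm;
}

console.log(echoToCm(1200));   // 20
console.log(isTooClose(1200)); // false
console.log(isTooClose(500));  // true (about 8 cm)
console.log(isTooClose(0));    // true
```

The last line is worth noting: Arduino's pulseIn() returns 0 when no echo arrives before its timeout, and with this formula a reading of 0 is treated the same as an obstacle at 0 cm. Whether that ever happened in practice with this car is not something the write-up states.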

If an object is detected within the safe distance, the robot enters a braking mode. The stopRobot() function is called to stop its movement by setting the appropriate motor control pins and turning off the motors. The playNote() function is called to emit an audible alert using the buzzer, and the LED is illuminated by setting the corresponding pin to high.

On the other hand, if no objects are detected within the safe distance, the robot continues with normal movement. It waits for commands received through serial communication. These commands correspond to different movement actions:

moveLeft(): This function is called when the command ‘L’ is received. It makes the robot turn left by setting the PWM value of motor channel A to 0 while driving motor channel B at full speed.

moveRight(): This function is called when the command ‘R’ is received. It makes the robot turn right by driving motor channel A at full speed while setting the PWM value of motor channel B to 0.

moveForward(): This function is called when the command ‘U’ is received. It sets the motor control pins to make the robot move forward by driving both motors at full speed in the forward direction.

moveBackward(): This function is called when the command ‘D’ is received. It sets the motor control pins to make the robot move backward by reversing the direction of both motors. After a small delay, it performs an additional obstacle check by measuring the distance with the ultrasonic sensor. If an obstacle is detected, the stopRobot() function is called, the playNote() function emits an audible alert, and the LED is turned on.

stopRobot(): This function is called to stop the robot’s movement. It sets all motor control pins to low and stops the motors by setting the PWM value to 0.

playNote(): This function is called to generate a tone on the buzzer. The frequency and duration of the note can be adjusted by changing the values within the function. It uses the tone() and noTone() functions to start and stop the sound, respectively.
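To make the braking logic easier to follow, here is a rough model of one pass through loop() written as a pure function. It is a sketch under assumptions rather than the code that runs on the car: the function step, the action labels, and the COMMAND_ACTIONS map are all hypothetical names, and the real sketch drives pins instead of returning a list.

```javascript
// Hypothetical mapping from serial command characters to action labels.
const COMMAND_ACTIONS = new Map([
  ['L', 'left'],
  ['R', 'right'],
  ['U', 'forward'],
  ['D', 'backward'],
  ['S', 'stop'],
]);

// Model of one loop() pass.
// state: { isBraking }, distanceCm: latest reading, command: pending serial character or null.
function step(state, distanceCm, command, safeDistanceCm = 10) {
  const actions = [];
  if (distanceCm <= safeDistanceCm) {
    // The alarm fires only on the transition into braking, not on every pass.
    if (!state.isBraking) {
      actions.push('stop', 'buzz', 'led-on');
    }
    // As in the Arduino sketch, no command is read while an obstacle is in range.
    return { state: { isBraking: true }, actions };
  }
  actions.push('led-off');
  if (COMMAND_ACTIONS.has(command)) {
    actions.push(COMMAND_ACTIONS.get(command));
  }
  return { state: { isBraking: false }, actions };
}

let result = step({ isBraking: false }, 5, 'U');
console.log(result.actions); // [ 'stop', 'buzz', 'led-on' ]
result = step(result.state, 5, 'U');
console.log(result.actions); // []
result = step(result.state, 30, 'U');
console.log(result.actions); // [ 'led-off', 'forward' ]
```

One thing the model makes visible is that commands, including ‘D’, are not acted on while the obstacle stays within the safe distance, because the Arduino code only reads the serial port in the else branch.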

The modular structure of the code, with separate functions for each movement action, allows for easier maintenance and future enhancements. The implementation enables the robot car to navigate its environment, detect obstacles, and take appropriate actions for collision avoidance. It showcases the integration of hardware components with the Arduino microcontroller and demonstrates the practical application of sensor-based control in robotics.

P5.js CODE (for hand detection and movement of robot)

function gotHands(results = []) {
  if (!programStarted && results.length > 0) {
    const hand = results[0].annotations.indexFinger[3];
    handX = hand[0];
    handY = hand[1];

    // Start the program when hand is detected and 's' is pressed
    if (handX && handY && keyIsPressed && (key === 's' || key === 'S')) {
      programStarted = true;
      startRobot();
    }
  } else if (results.length > 0) {
    const hand = results[0].annotations.indexFinger[3];
    handX = hand[0];
    handY = hand[1];
  } else {
    handX = null;
    handY = null;
  }
}

function moveLeft() {
  if (isConnected) {
    serial.write('L');
  }
}

function moveRight() {
  if (isConnected) {
    serial.write('R');
  }
}

function moveForward() {
  if (isConnected) {
    serial.write('U');
  }
}

function moveBackward() {
  if (isConnected) {
    serial.write('D');
  }
}

function stopRobot() {
  if (isConnected) {
    serial.write('S');
  }
}

function startRobot() {
  // Start the robot movement when the hand tracking model is ready
  console.log('Hand tracking model loaded');
}

// Function to detect obstacle
function detectObstacle() {
  obstacleDetected = true;
  carMovingBack = true;
}

// Function to stop obstacle detection
function stopObstacleDetection() {
  obstacleDetected = false;
  carMovingBack = false;
}

DESCRIPTION OF P5.js CODE

The code starts by declaring variables such as isConnected, handX, handY, video, handpose, obstacleDetected, carMovingBack, and programStarted. These variables are used to track the connection status, hand coordinates, video capture, hand tracking model, obstacle detection status, and program status.

In the preload() function, images for the instructions and introduction screen are loaded using the loadImage() function.

The keyPressed() function is triggered when a key is pressed. In this case, if the ‘s’ key is pressed, the programStarted variable is set to true, and the startRobot() function is called.

The setup() function initializes the canvas and sets up the serial communication with the Arduino board using the p5.serialport library. It also creates a video capture from the webcam and initializes the hand tracking model from the ml5.handpose library. The gotHands() function is assigned as the callback for hand tracking predictions.

The introScreen() function displays the introduction screen image using the image() function, and the instructions() function displays the instructions image.

The draw() function is the main loop of the program. If the programStarted variable is false, the intro screen is displayed, and the function returns to exit the draw loop. Otherwise, the webcam video is displayed on the canvas.

If the handX and handY variables have values, an ellipse is drawn at the position of the tracked hand. Based on the hand position, different movement commands are sent to the Arduino board using the moveLeft(), moveRight(), moveForward(), and moveBackward() functions. The color of the ellipse indicates the direction of movement.

The code checks if the hand position is out of the frame and stops the robot’s movement in that case. It also checks for obstacle detection and displays a warning message on the canvas if an obstacle is detected.
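Since the draw() function itself is not shown, here is a minimal sketch of how a hand position could be turned into one of the command characters. The layout is an assumption made for illustration: the canvas is split into thirds, the left and right thirds steer, the top and bottom of the middle column drive forward and backward, and the center stops. The function name commandFor is hypothetical, and the actual regions used in the project may be different.

```javascript
// Hypothetical mapping from a tracked hand position to a command character.
// Returns 'S' (stop) when no hand is tracked or the hand is outside the canvas.
function commandFor(handX, handY, canvasWidth, canvasHeight) {
  if (handX === null || handY === null) return 'S';
  if (handX < 0 || handX > canvasWidth || handY < 0 || handY > canvasHeight) return 'S';

  if (handX < canvasWidth / 3) return 'L';        // left third
  if (handX > (2 * canvasWidth) / 3) return 'R';  // right third
  if (handY < canvasHeight / 3) return 'U';       // top of the middle column
  if (handY > (2 * canvasHeight) / 3) return 'D'; // bottom of the middle column
  return 'S';                                     // center cell
}

// Assumed 640x480 canvas.
console.log(commandFor(50, 240, 640, 480));     // L
console.log(commandFor(320, 50, 640, 480));     // U
console.log(commandFor(320, 240, 640, 480));    // S
console.log(commandFor(null, null, 640, 480));  // S
```

Keeping the mapping in one function like this would make it easier to adjust the regions, which is one of the improvements mentioned for the hand tracking controls.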

The gotHands() function is the callback function for hand tracking predictions. It updates the handX and handY variables based on the detected hand position. If the programStarted variable is false and a hand is detected while the ‘s’ key is pressed, the programStarted variable is set to true, and the startRobot() function is called.

The moveLeft(), moveRight(), moveForward(), moveBackward(), and stopRobot() functions are responsible for sending corresponding commands to the Arduino board through serial communication.

The startRobot() function is called once the program has started, either from keyPressed() or from gotHands(). Currently, it only logs a message to the console.

The detectObstacle() function sets the obstacleDetected and carMovingBack variables to true, indicating an obstacle has been detected and the robot should move back.

The stopObstacleDetection() function resets the obstacleDetected and carMovingBack variables, indicating the obstacle has been cleared and the robot can resume normal movement.

PARTS I’M PROUD OF AND FUTURE IMPROVEMENTS

The part of the project that I’m most proud of is how I implemented the hand tracking library in the code and got it to work, even though it has some minor control bugs. Initially, I set the control system to the arrow keys on the keyboard. Once I got that to work, I integrated the ml5.js library into the code to track the user’s hands through the webcam, and then mapped the movement of the hands to the corresponding arrow keys to make the robot move. Future improvements include making the Arduino setup work wirelessly with the p5.js part to allow free movement of the car, and improving the implementation and integration of the hand tracking model to ensure an accurate response to the movements of the user’s hands. I also intend to add an LCD screen and more LED lights to make it look more like an actual car.