Project Concept:

I’ve always had a fear of snakes. Overcoming it seemed impossible until I started working on my game. Inspired by how snakes consume food whole, I had the idea of the game I am working on for my midterm called “Going Through It”. It is be an obstacle course game where the obstacle course is be designed in the shape of a snake. The player controls a small stick character trying to escape the snake as fast as possible. Adding my own unique twist to the game, the player cannot directly control the jumping ability of the stick figure, instead the stick is more akin to a ‘pogo stick’ where it bounces off with every obstacle it collides with and the player only controls the rotation of the stick figure using their keyboard.

User Interaction Design:

Player Input: The player uses the “A” and “D” keys to control the rotation of the stick figure. Player needs to angle the jump correctly to get through each part of the obstacle course.

Obstacles: The whole level design acts as the obstacle in this game, where the stick figure can bounce around by hitting walls or the insides of the ‘snake’ and can even get stuck in some traps laid out to make the game trickier to play.

Game Progression: As death is not an element of this game, the players race against themselves and other players to complete the level in the shortest possible time as they understand the mechanics of the game better.

Code Design: Functions, Classes, and Interactivity:

‘Going Through It’ is built using Object Oriented Programming (OOP) where every entity within the game belongs to a separate class that interact closely to make the game function.

The primary classes and their major functionalities are as follows:

Obstacle Class: The obstacle class defines the parameters for the rectangles make up the ‘snake’, it also contains functions such as display() which display the obstacles as one cohesive interactable object.

Stick Class: The Stick class defines the stick object itself and contains functions such as rotate() and update() which help establish rules for collision and input caused rotation. This serves as the biggest and most important class for the game code

Snake Level Class: The snake level class instantiates the obstacle class objects with coordinates to make one cohesive snake level and adds them to a list so collision checks can be performed.

Game Class: Game class deals with all functionality not handled by the other classes, this includes functions for pausing the game, showing the start screen, showing a lowest time, and starting the level itself. It also checks when a level has ended and displays a text with the time taken to clear the obstacle.

Identifying and Addressing Risks:

The most challenging aspect of the project is implementing accurate collision detection between the stick character and the obstacles. Since there are a multitude of angles the stick can collide with the obstacles with, figuring out accurate and responsive collision detection has involved a lot of trigonometry and I definitely believe there is more to come in order to account for all possible scenarios.

In order to mitigate the risk of the stick phasing through the level, or getting stuck at certain points I have tried to account for detection with each corner of the stick, each side vertical and horizontal and collision with vertical, horizontal and obstacle walls at diagonals. This is a tedious process and there is a lot of room for error which I am minimizing through meticulous play testing of the level.

Another risk I am trying to mitigate is the complexity of the level, as this is a single player game, a level that is too easy will make the game not enjoyable after a few playthroughs, on the contrary a level that is too complex and difficult to compete will cause players to quit and not wish to progress sooner. I hope to continue doing play testing, also involving friends who have never played the game before so that I can get input from them and adjust the level and individual paths within the level to make the game the perfect mix of complex but pushing people to beat the level in shorter and shorter times.

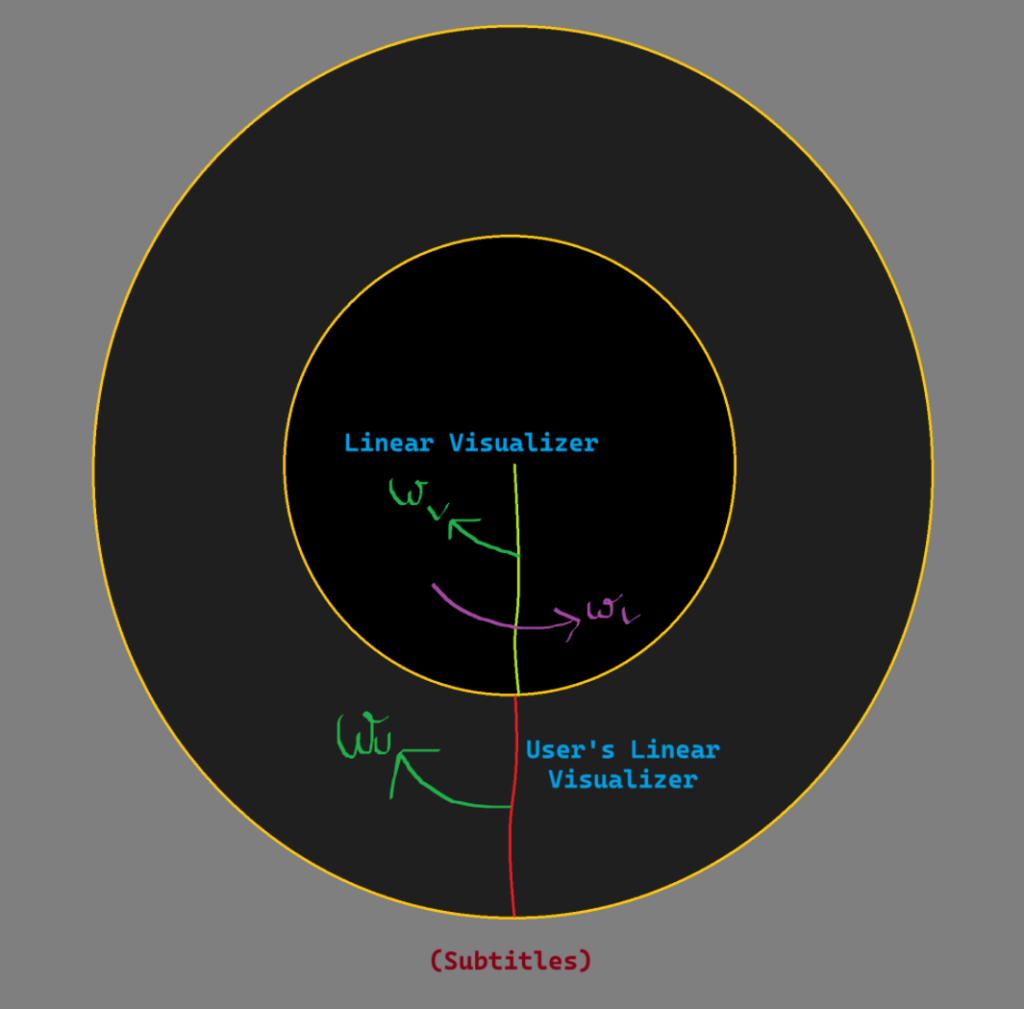

Snapshots from the game and further steps:

This is the design of the Snake Level, the time is shown in the top left corner and stops running once the level is completed

Next Steps:

Adding a Lowest Score: I want to keep a track of the lowest time achieved which is displayed on the game start screen and is meant to be an initial challenge for new players to beat.

Start Screen: I want to add a start screen which shows, the level itself, the tutorial for the game and lowest time someone has taken to complete the level.