Concept:

The idea for this project came from something I’ve always found fascinating: the transition from night to day, especially the way streetlights turn off as the sun begins to rise.

I’ve always been captivated by the natural beauty of a sunrise and how the sky gradually shifts colors. The goal was to create something interactive where turning off a “streetlight” (represented by a blue LED) triggers a sunrise sequence on an RGB LED. A photoresistor detects the drop in light when the streetlight turns off, and that drop starts the RGB light sequence.

Demonstration:

Code:

const int blue_led_pin = 9; // Blue light (streetlight)

const int switch_pin = 2; // Switch pin

const int photo_resistor_pin = A0; // Photoresistor pin

const int red_pin = 3; // Red pin of RGB

const int green_pin = 5; // Green pin of RGB

const int blue_pin = 6; // Blue pin of RGB

// Variables

bool streetlight_on = true; // Tracks whether the streetlight is on

bool sunrise_started = false; // Tracks whether the sunrise sequence has started

void setup() {

// Initialize pins

pinMode(blue_led_pin, OUTPUT);

pinMode(switch_pin, INPUT);

pinMode(red_pin, OUTPUT);

pinMode(green_pin, OUTPUT);

pinMode(blue_pin, OUTPUT);

// Streetlight starts in the on state

digitalWrite(blue_led_pin, HIGH);

}

void loop() {

// Check if the switch is pressed

if (digitalRead(switch_pin) == HIGH && streetlight_on) {

digitalWrite(blue_led_pin, LOW); // Turn off streetlight

streetlight_on = false; // change the bool to reflect the streetlight being off

}

// Read light level from photoresistor

int light_level = analogRead(photo_resistor_pin);

// If the light level drops below the threshold, start the sunrise sequence. This happens when the blue LED turns off or when the photoresistor is manually covered.

if ( !sunrise_started && light_level < 500) {

sunrise_effect();

sunrise_started = true; // bool confirms that sunrise has started

}

}

void sunrise_effect() {

// Gradually change colors like a sunrise

for (int i = 0; i <= 255; i++) {

analogWrite(red_pin, i); // Increase red brightness first

delay(30); // Adjust delay for smooth transition

if (i > 100) {

analogWrite(green_pin, i - 100); // Add green for yellow/orange colors

}

if (i > 200) {

analogWrite(blue_pin, i - 200); // Add blue last, shifting toward white for noon

}

delay(30);

}

}
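The color ramp in sunrise_effect() can also be written as a small function that returns the three PWM values for a given step, which makes the ramp easy to check without any hardware attached. This is only a minimal sketch and not part of the original program: `SunriseColor` and `sunrise_color_at` are hypothetical names, and the offsets of 100 and 200 are copied from the loop above.

```cpp
#include <stdint.h>

// Hypothetical helper type (not in the original sketch): one PWM value per channel.
struct SunriseColor {
  uint8_t red;
  uint8_t green;
  uint8_t blue;
};

// Returns the color for one step of the ramp (clamped to 0..255), using the
// same offsets as sunrise_effect(): green starts after step 100, blue after 200.
SunriseColor sunrise_color_at(int step) {
  if (step < 0) step = 0;
  if (step > 255) step = 255;

  SunriseColor c;
  c.red = (uint8_t)step;                             // Red rises from the first step
  c.green = (uint8_t)(step > 100 ? step - 100 : 0);  // Green joins for orange/yellow
  c.blue = (uint8_t)(step > 200 ? step - 200 : 0);   // Blue joins last
  return c;
}
```

Inside the loop, the three values could be passed to analogWrite() for red_pin, green_pin, and blue_pin. With these offsets the last step gives red 255, green 155, and blue 55, so the sequence nominally ends on a warm orange tone rather than pure white.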

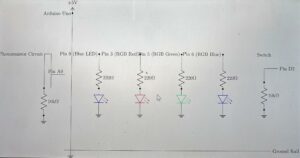

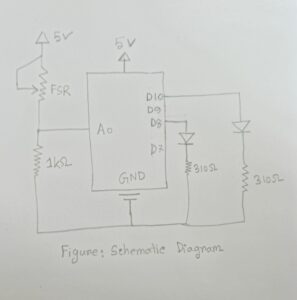

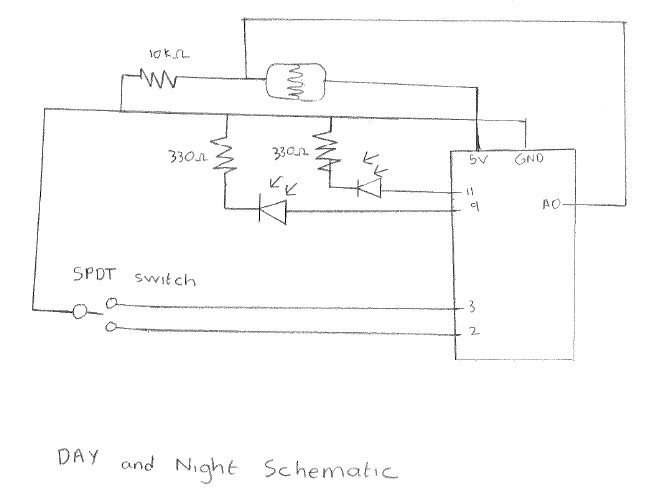

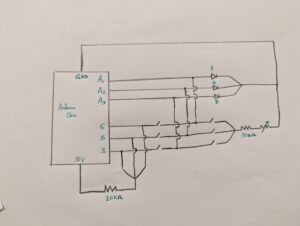

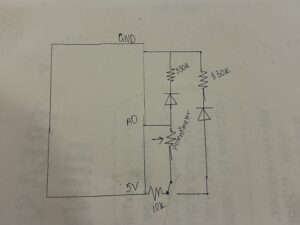

Schematics:

Workings:

The blue LED shines directly onto the photoresistor. When the switch turns the LED off, the photoresistor reading drops below the threshold, which triggers the sunrise sequence on the RGB LED. The trigger is the photoresistor reading, not the button press: covering the photoresistor with a finger while the blue LED is still on also lowers the reading and starts the sunrise sequence.
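The trigger logic described here can be isolated into a small helper that looks only at the light reading. This is a rough sketch under a few assumptions: `SunriseTrigger` and `LIGHT_THRESHOLD` are hypothetical names that do not appear in my code, and the value 500 is the threshold used in loop().

```cpp
// Threshold taken from the original sketch (analogRead() returns 0..1023).
const int LIGHT_THRESHOLD = 500;

// Hypothetical helper (not in the original sketch): holds the one-shot flag.
struct SunriseTrigger {
  bool started = false;

  // Returns true exactly once: the first time the reading falls below the
  // threshold. The switch state is never consulted, only the light level.
  bool update(int light_level) {
    if (!started && light_level < LIGHT_THRESHOLD) {
      started = true;
      return true;
    }
    return false;
  }
};
```

In loop(), the result of update(analogRead(photo_resistor_pin)) would decide whether to call sunrise_effect(). Because the helper never reads the switch, a finger over the photoresistor fires it just as turning off the blue LED does.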

Future Considerations:

I had tremendous fun working on this project, especially learning how the RGB LED works. In the future, I’d like to add more LEDs that trigger different sequences on the RGB LED, or perhaps add a sound element so that a loud sound triggers the sequence.