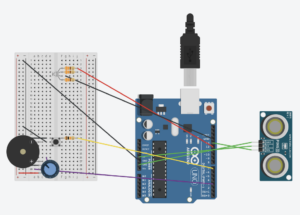

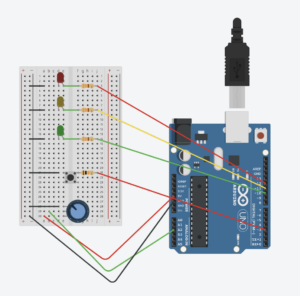

My final project is a motion-tracking CCTV camera that uses PoseNet. The basic idea is to use PoseNet to locate a person on screen and then send that position to a 3D-printed CCTV camera mounted on top of a servo motor. Mapping the position to the servo's angle creates the illusion that the CCTV camera is following the person. In addition to the camera tracking, I wanted to create a motion-detecting p5 interface. After watching Coding Train tutorials on this effect, I found a method that compares pixel array data between frames to isolate the moving person from the background, which I found really cool.
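To make the position-to-angle mapping concrete, here is a minimal sketch in plain JavaScript, without p5. The helper name `xToServoAngle` is hypothetical. The 60 to 120 degree range matches the `map()` call in the sketch further down. The clamping step is an added assumption: it keeps the angle in range if the nose keypoint is estimated outside the frame.

```javascript
// Hypothetical helper: convert a horizontal pixel position into a servo angle.
// minAngle/maxAngle default to the 60-120 degree sweep used in the p5 sketch.
function xToServoAngle(x, frameWidth, minAngle = 60, maxAngle = 120) {
  // Assumption: clamp first, so an out-of-frame keypoint cannot over-rotate the servo.
  const clamped = Math.min(Math.max(x, 0), frameWidth);
  // 0 at the left edge of the frame, 1 at the right edge.
  const t = clamped / frameWidth;
  // Truncate to a whole number of degrees, like p5's int().
  return Math.trunc(minAngle + t * (maxAngle - minAngle));
}

console.log(xToServoAngle(0, 640));   // left edge
console.log(xToServoAngle(320, 640)); // centre of the frame
console.log(xToServoAngle(900, 640)); // beyond the right edge, clamped
```

A narrow sweep like this keeps the camera pointing roughly at the area in front of the laptop; a wider range would exaggerate the movement.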

A large part of my process involved testing whether the servo-PoseNet idea would work at all, and my draft of the final project documents this discovery. For the final project itself, I had several challenges ahead of me: creating the p5 interface, figuring out the CCTV camera, and building a base for the camera and motor.

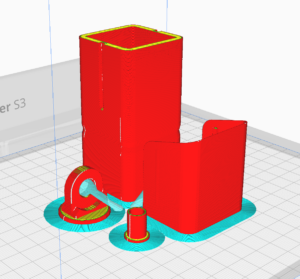

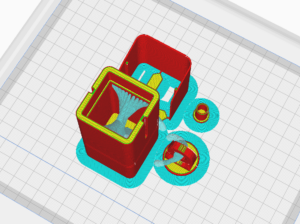

First, with the CCTV camera, I referred to the professor’s slides and came across a website with various 3D models that could be 3D printed. With the professor’s guidance on using the Ultimaker 3, I successfully 3D printed a CCTV camera that was the perfect size for the motor, in my opinion.

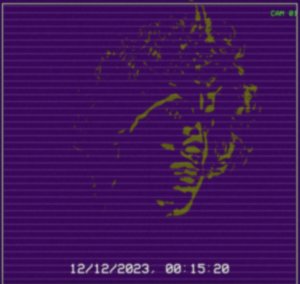

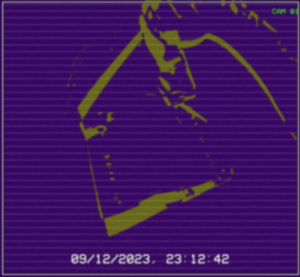

Next, I focused on the p5 interface. As mentioned earlier, I aimed to achieve a motion detection look. By applying multiple layers of effects such as grain, blur, and posterize, I was able to create an old-school CCTV footage vibe while also giving it a unique appearance that doesn’t resemble a typical CCTV camera. I wanted to capture the point of view of a camera trying to detect motion.
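The motion detection behind this look is frame differencing: each pixel of the current frame is compared with the same pixel of the previous frame, and pixels whose colour changed by more than a threshold count as motion. Below is a minimal sketch in plain JavaScript that works on raw RGBA arrays, the same layout as `video.pixels`. The function name `motionCentroid` and the tiny 2x2 test frames are assumptions for illustration, not part of the project code.

```javascript
// Hypothetical helper: find the average position of pixels that changed
// between two RGBA frames (4 bytes per pixel, row by row).
function motionCentroid(curr, prev, width, height, threshold = 40, minCount = 1) {
  let sumX = 0;
  let sumY = 0;
  let count = 0;
  // Compare squared distances so no square root is needed per pixel.
  const limit = threshold * threshold;
  for (let y = 0; y < height; y++) {
    for (let x = 0; x < width; x++) {
      const i = (x + y * width) * 4;
      const dr = curr[i] - prev[i];
      const dg = curr[i + 1] - prev[i + 1];
      const db = curr[i + 2] - prev[i + 2];
      if (dr * dr + dg * dg + db * db > limit) {
        sumX += x;
        sumY += y;
        count++;
      }
    }
  }
  // Too few changed pixels is treated as noise rather than motion.
  if (count < minCount) return null;
  return { x: sumX / count, y: sumY / count, count };
}

// Tiny 2x2 example: only the bottom-right pixel changes from black to white.
const prevFrame = new Uint8ClampedArray(16);
const currFrame = new Uint8ClampedArray(16);
currFrame.set([255, 255, 255, 255], 12); // pixel (1, 1) starts at byte 12
console.log(motionCentroid(currFrame, prevFrame, 2, 2));
```

In the actual sketch the changed and unchanged pixels are also recoloured, which is what produces the two-tone silhouette before the filters are applied.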

The final step for me was priming and spray painting the CCTV camera white, and finding the right base for it. Since I wanted to position it behind the laptop, I needed a base of suitable height. I found a cardboard box in my room and repurposed it as the shell for the CCTV camera base. I drilled a large piece of wood into it, which serves as a sturdy base for the motor. I then used wood glue to attach the motor to the wood slab, and glued the motor’s base plate to the CCTV camera.

The following is the code for my Arduino and p5 project:

// video, previous frame and threshold for motion detection

let video;

let prev;

let threshold = 25;

// Variables for motion functions and positions

let motionX = 0;

let motionY = 0;

let lerpX = 0;

let lerpY = 0;

// Font for overlay text and PoseNet related variables

let myFont;

let poseNet;

let pose;

let skeleton;

let loco = 0;

// Servo angle sent to the Arduino; starts centred until a pose is detected

let val = 90;

function preload() {

myFont = loadFont("VCR_OSD_MONO_1.001.ttf");

}

function setup() {

// low frame rate for a cool choppy motion detection effect

frameRate(5);

createCanvas(windowWidth, windowHeight);

pixelDensity(1);

video = createCapture(VIDEO);

video.size(windowWidth, windowHeight);

video.hide();

// Create an image to store the previous frame

prev = createImage(windowWidth, windowHeight);

// Initialize PoseNet and set up callback for pose detection

poseNet = ml5.poseNet(video, modelLoaded);

poseNet.on("pose", gotPoses);

}

// Callback for when poses are detected by PoseNet

function gotPoses(poses) {

//console.log(poses);

if (poses.length > 0) {

pose = poses[0].pose;

skeleton = poses[0].skeleton;

}

}

// Callback for when PoseNet model is loaded

function modelLoaded() {

console.log("poseNet ready");

}

function draw() {

// Check for serial port

if (!serialActive) {

text("Press Space Bar to select Serial Port", 20, 30);

} else {

text("Connected", 20, 30);

}

// Check for pose and get nose pose data

if (pose) {

fill(255, 0, 0);

ellipse(pose.nose.x, pose.nose.y, 20);

// location of pose nose

loco = int(pose.nose.x);

// value mapped for servo motor

val = int(map(loco, 0, windowWidth, 60, 120));

print(val);

}

background(0);

// load pixels for motion detection

video.loadPixels();

prev.loadPixels();

threshold = 40; // overrides the initial value declared at the top of the sketch

let count = 0;

let avgX = 0;

let avgY = 0;

// Flip the canvas for video display

push();

translate(width, 0);

scale(-1, 1);

image(video, 0, 0, video.width, video.height);

pop();

// Analyzing the pixels for motion detection

loadPixels();

for (let x = 0; x < video.width; x++) {

for (let y = 0; y < video.height; y++) {

// Current and previous pixel colors

let loc = (x + y * video.width) * 4;

let r1 = video.pixels[loc + 0];

let g1 = video.pixels[loc + 1];

let b1 = video.pixels[loc + 2];

let r2 = prev.pixels[loc + 0];

let g2 = prev.pixels[loc + 1];

let b2 = prev.pixels[loc + 2];

// Calculate color distance

let d = distSq(r1, g1, b1, r2, g2, b2);

if (d > threshold * threshold) {

avgX += x;

avgY += y;

count++;

// Flipped motion effect pixels

let flippedLoc = (video.width - x - 1 + y * video.width) * 4;

pixels[flippedLoc + 0] = 155;

pixels[flippedLoc + 1] = 155;

pixels[flippedLoc + 2] = 255;

} else {

let flippedLoc = (video.width - x - 1 + y * video.width) * 4;

pixels[flippedLoc + 0] = 190;

pixels[flippedLoc + 1] = 255;

pixels[flippedLoc + 2] = 155;

}

}

}

// Updating the pixels on the canvas

updatePixels();

// Calculate the average motion position if significant motion is detected

if (count > 200) {

motionX = avgX / count;

motionY = avgY / count;

}

// Mirror the motion tracking coordinates

// let flippedMotionX = width - motionX;

// lerpX = lerp(lerpX, flippedMotionX, 0.1);

// lerpY = lerp(lerpY, motionY, 0.1);

// fill(255, 0, 255);

// stroke(0);

// strokeWeight(2);

// ellipse(lerpX, lerpY, 36, 36);

// MOREE EFFECTZZZZ

filter(INVERT);

prev.copy(

video,

0,

0,

video.width,

video.height,

0,

0,

prev.width,

prev.height

);

filter(ERODE);

filter(POSTERIZE, random(10, 20));

drawGrid(); // Draw the grid on top of your content

drawSurveillanceOverlay(); //surveillance overlay cam

drawGrain(); // grain effect for old school cctv vibes

filter(BLUR, 1.5); // blur effect to achieve that vhs quality

}

function distSq(x1, y1, z1, x2, y2, z2) {

return sq(x2 - x1) + sq(y2 - y1) + sq(z2 - z1);

}

// toggle full screen

function mousePressed() {

if (mouseX > 0 && mouseX < width && mouseY > 0 && mouseY < height) {

let fs = fullscreen();

fullscreen(!fs);

}

}

function drawGrain() {

loadPixels();

for (let i = 0; i < pixels.length; i += 4) {

let grainAmount = random(-10, 10);

pixels[i] += grainAmount; // red

pixels[i + 1] += grainAmount; // green

pixels[i + 2] += grainAmount; // blue

// pixels[i + 3] is the alpha channel

}

updatePixels();

}

function drawSurveillanceOverlay() {

textFont(myFont); // Set the font

textSize(32); // Set the text size

// Draw border

noFill();

strokeWeight(5);

stroke(0, 0, 0, 255);

rect(9, 9, width - 16, height - 16);

stroke(250, 250, 250, 255);

strokeWeight(2.1);

rect(9, 9, width - 16, height - 16);

// Display timestamp

fill(250, 250, 250);

stroke(0, 120);

textSize(30);

textAlign(CENTER, TOP);

text(

new Date().toLocaleString(),

windowWidth / 2,

windowHeight - windowHeight / 11

);

// cam 01

textSize(17);

fill(50, 250, 55);

text("CAM 01", width - width / 19, windowHeight / 29);

}

function drawGrid() {

let gridSize = 15; // Size of each grid cell

// only the horizontal lines

stroke(205, 3); // Grid line color (white with some transparency)

strokeWeight(1); // Thickness of grid lines

for (let x = 0; x <= width; x += gridSize) {

for (let y = 14; y <= height + 16; y += gridSize) {

// line(x, 10, x, height);

line(11, y, width - 10, y);

}

}

}

// serial connection

function keyPressed() {

if (key == " ") {

// important to have in order to start the serial connection!!

setUpSerial();

}

}

function readSerial(data) {

////////////////////////////////////

//READ FROM ARDUINO HERE

////////////////////////////////////

if (data != null) {

// make sure there is actually a message

// split the message

let fromArduino = split(trim(data), ",");

// if the right length, then proceed

if (fromArduino.length == 2) {

// only store values here

// do everything with those values in the main draw loop

print("nice");

// We take the string we get from Arduino and explicitly

// convert it to a number by using int()

// e.g. "103" becomes 103

}

//////////////////////////////////

//SEND TO ARDUINO HERE (handshake)

//////////////////////////////////

let sendToArduino = val + "\n";

writeSerial(sendToArduino);

}

}

p5 👆

#include <Servo.h>

Servo myservo; // create servo object to control a servo

void setup() {

Serial.begin(9600);

pinMode(LED_BUILTIN, OUTPUT); // the built-in LED shows serial activity

myservo.attach(9);

// start the handshake

while (Serial.available() <= 0) {

digitalWrite(LED_BUILTIN, HIGH); // on/blink while waiting for serial data

Serial.println("0,0"); // send a starting message

delay(300); // wait 1/3 second

digitalWrite(LED_BUILTIN, LOW);

delay(50);

myservo.write(0); // hold the servo at 0 degrees until p5 connects

}

}

void loop() {

// wait for data from p5 before doing something

while (Serial.available()) {

Serial.println("0,0");

digitalWrite(LED_BUILTIN, HIGH); // led on while receiving data

int value = Serial.parseInt();

if (Serial.read() == '\n') {

myservo.write(value); // sets the servo position according to the scaled value

}

}

digitalWrite(LED_BUILTIN, LOW); // led off when no data is waiting

}

arduino 👆
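The two programs talk over a simple line-based serial protocol: p5 sends one integer angle followed by a newline, and the Arduino replies with a comma-separated pair (always `0,0` here) as a handshake. The sketch below shows both directions in plain JavaScript. The helper names `encodeAngle` and `parseArduinoLine` are hypothetical, and limiting the angle to 0 to 180 degrees is an added assumption based on the usual range of a hobby servo.

```javascript
// Hypothetical helper: build the message p5 sends to the Arduino.
// One whole-number angle per line, terminated by "\n".
function encodeAngle(angle) {
  // Assumption: keep the angle inside 0-180 degrees.
  const safe = Math.min(Math.max(Math.trunc(angle), 0), 180);
  return safe + "\n";
}

// Hypothetical helper: parse a line received from the Arduino.
// Returns two integers, or null if the line is not a valid "a,b" pair.
function parseArduinoLine(line) {
  if (line == null) return null;
  const parts = line.trim().split(",");
  if (parts.length !== 2) return null;
  const nums = parts.map((p) => parseInt(p, 10));
  return nums.some(Number.isNaN) ? null : nums;
}

console.log(JSON.stringify(encodeAngle(95.7)));
console.log(parseArduinoLine("0,0\r\n"));
console.log(parseArduinoLine("garbage"));
```

Ending every message with a newline matters because the Arduino only moves the servo when the character after the parsed integer is `'\n'`.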

Overall, I am happy with how the project turned out. It has been a very educational experience for me, as it allowed me to learn about PoseNet, 3D printing, and visual effects. These skills will be valuable for my future capstone project, which will focus on surveillance.

Documentation/User Testing from the IM Showcase: