Concept

As I shared in last week’s blog post, I wanted my project to be a gamified story. I also wanted to center it around a butterfly, an extension of a theme I have been committed to for the past few weeks. The narrative also conveys my own desire to reunite with my family, a goal that I hope to achieve in my lifetime. This can be seen in the final scene, which is unlocked if the player completes the levels successfully: the butterfly returns to her family of four, which is the number of members in my own family. The storyline and flow of the game go like this:

- A butterfly finds herself lost in the city on a rainy day. She flutters through the window of the main player’s room.

- The player is prompted to help the butterfly find her way back to her home and family, going through game levels in the city and forest and avoiding (jumping over) obstacles to preserve their health. Along the way, the player can replenish their health by collecting potions.

- If the player manages to successfully complete the mission, they unlock the final scene, in which the butterfly is finally reunited with her family.

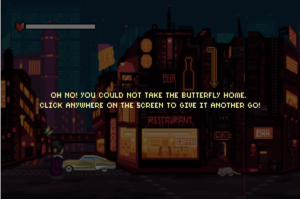

- If the player loses, they are prompted to restart the game.

In making this, I emphasized the animations to put more focus on the narrative component than on the game component. I spent a lot of time playing with different parameters and finding ways to control time and the movement of sprites in response to changes in their environment. The storyboarding I had done last week greatly helped me visualize how I wanted the animations to eventually look.

Implementation and Parts I am Most Proud of

In terms of execution, I implemented everything as classes based on the rough UML structure I sketched out in my last blog. The main Game class has a method for each level, and its attributes are objects instantiated from the Player, Butterfly, Enemy, Potion, and HealthBar classes. Certain classes also derive from parent classes using inheritance (for example, the Player class inherits from a parent Sprite class that holds the basic attributes and methods shared between all its child classes). Each level/scene is separated by overlays, where the user is prompted to click anywhere on the screen to continue to the next stage of the experience. In terms of assets, all sounds, images, and fonts were sourced from the following open-source community/free platforms:

- https://freesound.org/

- https://opengameart.org/

- https://www.free-stock-music.com/

- https://www.dafont.com/

For the mechanics of the game, the Player sprite is placed at the lower left corner of the screen. Its main movement, jumping, is triggered only when the player presses the Space bar. A jump sets the vertical velocity to a jump power attribute. As the player falls, the velocity is incremented each frame by an acceleration-due-to-gravity attribute. The player can also double jump once while in the air, which comes in handy if a flock of enemies is headed its way. For collision detection, a collision occurs when the distance between the center of the player and that of an enemy object is less than the sum of their respective radii (minus a certain amount to account for the free pixels in the sprite images). Below is the code for the collision detection mechanism, which is a Player class method:

detectCollision(obj, offset=30) {

if (!obj.collided) {

// get distance between the center of the character and that of the enemy object

let objHeight = obj.h;

let objWidth = obj.w;

let playerWidth = this.w;

let playerHeight = this.h;

// update height and width based on resized parameters if the player/object was resized

if (obj.resize) {

objHeight = obj.resize_y;

objWidth = obj.resize_x;

}

if (this.resize) {

playerWidth = this.resize_x;

playerHeight = this.resize_y;

}

let centerX = this.x + playerWidth / 2;

let centerY = this.y + playerHeight / 2;

let d = dist(

centerX,

centerY,

obj.x + objWidth / 2,

obj.y + objHeight / 2

);

// collision detected

// distance is less than the sum of objects' radii

// minus a distance to account for free pixels in the sprite images

if (d < playerWidth / 2 + objWidth / 2 - offset) {

if (!obj.potion) { // lose health if the object is an enemy

loseHealth.play();

this.currHealth -= obj.damage;

} else if (obj.potion && this.currHealth < game.healthBar.maxHealth) { // regain health if the object is a potion

gainHealth.play();

this.currHealth += 1;

}

obj.collided = true; // set object collided to true

return true; // return true if collision is detected

}

}

return false; // return false if no new collision is detected

}
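
The jump mechanics described before the collision code (a jump power, gravity applied every frame, and a single double jump) could be sketched roughly as follows. This is a minimal illustration in plain JavaScript and not the game's actual Player class: the `Jumper` class, its attribute names, and the default numbers are all assumptions made for the example.

```javascript
// Minimal jump model. Every name and number here is an illustrative assumption.
class Jumper {
  constructor(groundY, jumpPower = 12, gravity = 0.6) {
    this.groundY = groundY; // y coordinate of the ground (y grows downward)
    this.y = groundY;
    this.vy = 0; // vertical velocity in pixels per frame
    this.jumpPower = jumpPower;
    this.gravity = gravity;
    this.jumpsUsed = 0; // 0 on the ground, at most 2 while in the air
  }

  // Call on a Space bar press: allowed on the ground and once more mid-air.
  jump() {
    if (this.jumpsUsed < 2) {
      this.vy = -this.jumpPower; // negative velocity moves the sprite up
      this.jumpsUsed += 1;
      return true;
    }
    return false;
  }

  // Call once per frame: apply gravity, move, and land on the ground.
  update() {
    this.vy += this.gravity;
    this.y += this.vy;
    if (this.y >= this.groundY) {
      this.y = this.groundY;
      this.vy = 0;
      this.jumpsUsed = 0; // landing makes both jumps available again
    }
  }
}
```

In a p5.js sketch, `jump()` would typically be called from `keyPressed()` and `update()` from `draw()`. Resetting the jump counter only on landing is what limits the player to one extra jump per airtime.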

An implementation highlight worth noting is the use of a smoothing (easing) algorithm to simulate the butterfly’s movement in the first scene as she makes her way from the window to the desk. Each frame, the butterfly covers a small fraction of the remaining distance to its target, so it slows down as it approaches. This was implemented as part of the Butterfly class.

move(targetX, targetY) {

// move butterfly toward destination -- smoothing algorithm

if (this.moving) {

this.x += (targetX - this.x) * 0.009;

this.y += (targetY - this.y) * 0.007;

}

}
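
To get a feel for how the easing rate affects the motion, here is a small standalone sketch. The helper names `easeToward` and `framesToArrive` are hypothetical and not part of the project. It applies the same update rule as `move()` and counts how many frames it takes to get within a given tolerance of the target.

```javascript
// Move a fixed fraction (`rate`) of the remaining distance toward the target.
function easeToward(current, target, rate) {
  return current + (target - current) * rate;
}

// Count the frames needed until the value is within `tolerance` of the target.
// The rate must be in (0, 1]; otherwise the loop below might never finish.
function framesToArrive(start, target, rate, tolerance = 1) {
  if (!(rate > 0 && rate <= 1)) {
    throw new RangeError("rate must be in (0, 1]");
  }
  let value = start;
  let frames = 0;
  while (Math.abs(target - value) > tolerance) {
    value = easeToward(value, target, rate);
    frames += 1;
  }
  return frames;
}
```

Smaller rates give a slower, gentler approach. Because `move()` uses a slightly larger rate for x (0.009) than for y (0.007), the horizontal gap closes faster than the vertical one, so the butterfly follows a gentle curve rather than a straight line.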

There are quite a few things that I am proud of in the implementation of this project. The first is the emotional feel of the overall experience. I made sure that the combination of animations created, the sounds chosen, the font, and the color palettes – going from night to day and from city to nature – created a wholesome backdrop to the game engulfed within. I also am proud that I made sure to bring to life the storyboard from last week’s blog. Finally, more than anything, I loved working on the animated start and final scenes (code shown below) and how the flow from animation to instructions to game and then to animation again (with transition overlays in between) turned out to be quite seamless.

firstScene() {

// show background of the scene

image(room[this.firstSceneAnimationStep], 0, 0, width, height);

// change the background based on frameCount to show animated changes in the player's room

if (frameCount % 7 == 0) {

this.firstSceneAnimationStep = (this.firstSceneAnimationStep + 1) % 6;

}

// show player

this.player.show();

// show butterfly, passing in rotation parameters

this.butterfly.show(100, 170);

// player faces the butterfly once it is within 40 pixels of the point (100, 170)

if (dist(this.butterfly.x, this.butterfly.y, 100, 170) < 40) {

this.player.dir = 1;

// move player toward butterfly once it lands on the desk

if (

dist(this.player.x, this.player.y, this.butterfly.x, this.butterfly.y) >

50

)

this.player.x -= 0.6;

else { // once the player is close to the butterfly, display instructions overlay

this.gameMode = 1;

let text = `This poor butterfly seems to be far away from home!

You have to help her find her way back to her family!

The first step on your journey is to go through the city.

Beware the obstacles on your way.

Press the Space bar to jump.

Collect potions to replenish your health.

Click anywhere on the screen if you are

ready to embark on the journey!`;

twinkleSound.play(); // play twinkle sound

this.overlay(text);

}

}

}

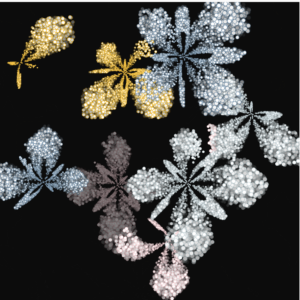

finalScene() {

// display the flower field background

image(flowerField, 0, 0, width, height);

this.player.dir = 3; // change direction so that the player's front side is facing the flower field

this.levelButterfly.dir = 3;

// resize the butterfly and player to show advancing movement

if (frameCount % 10 == 0) {

this.player.resize_x -= 4;

this.player.resize_y = this.player.resize_x / 0.75;

}

if (frameCount % 15 == 0) {

this.levelButterfly.resize_x -= 1.5;

this.levelButterfly.resize_y = this.levelButterfly.resize_x * 2;

}

this.resizeObject(this.player);

this.resizeObject(this.levelButterfly);

// show background butterflies

for (let i = 0; i < 4; i++) {

this.butterflyFamily[i].show();

}

// stop the animation once the player's y position is at most

// 225 pixels

if (this.player.y <= 225) {

this.player.moving = false;

this.levelButterfly.moving = false;

// change into overlay, prompting the player to restart the game

this.gameMode = 5;

let text = `Click anywhere to restart the game!`;

twinkleSound.play();

this.overlay(text);

noLoop();

}

// move player and butterfly diagonally across the screen to move

// upward through the field

this.player.moveDiagonally();

this.levelButterfly.moveDiagonally();

}

Challenges Encountered and Proposed Improvements

One of the challenges I encountered was in implementing the final scene animation, where the main player and the butterfly had to be iteratively resized to create the effect of moving into the distance. I found that calling the resize() method repeatedly blurred the images, so I had to find another way to resize them. After some searching, I found an approach that avoids resize() altogether: create a new image object with the target dimensions and copy the source image's pixels into it:

resizeObject(obj) {

// scale with copy -- https://stackoverflow.com/questions/72368646/images-blur-with-resize-with-p5-js

// create an image object with the resized parameters

let resizedImg = createImage(int(obj.resize_x), int(obj.resize_y));

// get the image to resize from the object's sprites array

let srcImg = obj.sprites[obj.dir][obj.step];

// copy the pixels from the source image to the resized image

resizedImg.copy(

srcImg,

0,

0,

srcImg.width,

srcImg.height,

0,

0,

obj.resize_x,

obj.resize_y

);

// rotate object if needed and display the resized image

if (obj.rotated) {

push();

translate(obj.x, obj.y);

rotate(radians(obj.rotationAngle));

image(resizedImg, 0, 0);

pop();

} else {

image(resizedImg, obj.x, obj.y);

}

}

Another challenge was the length of the Enemy arrays created in the constructor() of the Game class for each level, since it determined the duration of each level. The more enemies there are in a level, the longer it lasts, because a level ends when its Enemy array becomes empty. However, I found that the more enemies there were in a level, the slower and laggier the movements became, possibly due to the number of objects that had to be drawn on the screen. I attempted to fix this by ensuring that objects are drawn only when they are within the screen bounds, since their locations are initialized randomly off-screen. While this helped a little, the problem remained. A future improvement could be to look into this further and perhaps choose lighter/smaller sprites to display or use a different initialization mechanism.
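
As an illustration of the draw-only-when-visible idea, here is a minimal sketch in plain JavaScript. It assumes, hypothetically, that each enemy has `x` and `w` attributes and moves from right to left, and the helper names are made up for this example. Profiling would still be needed to confirm where the lag actually comes from.

```javascript
// Hypothetical helpers. The `x`, `w`, and `screenWidth` names are assumptions.

// True if any part of the object overlaps the horizontal extent of the screen.
function isOnScreen(obj, screenWidth) {
  return obj.x + obj.w > 0 && obj.x < screenWidth;
}

// Enemies worth drawing this frame.
function visibleEnemies(enemies, screenWidth) {
  return enemies.filter((e) => isOnScreen(e, screenWidth));
}

// Drop enemies that have fully passed the left edge (assuming leftward motion),
// so the array shrinks and the level can end once it is empty.
function pruneEnemies(enemies) {
  return enemies.filter((e) => e.x + e.w > 0);
}
```

In a draw loop, one would call `show()` only on the result of `visibleEnemies(...)` and periodically replace the level's array with `pruneEnemies(...)`, so both drawing and collision checks touch fewer objects as the level progresses.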

Additionally, here are a few other ideas to elevate the current version a little more:

- Add some more levels, perhaps in between the city and the forest (e.g. a suburban area or a highway).

- Add different types of potions with varying degrees of health replenishment (the stronger the potion, the rarer it is). This should be accompanied by an increase in the difficulty of the game, e.g. more enemy sprites, faster enemy sprite movements, or an increase in the damage attribute of certain sprites.

- Add some feedback, such as a jitter, when a collision occurs with an enemy object. An extension of this would be the possibility of annihilating an enemy if the Player sprite jumps on its head (the same way Goombas are annihilated in Super Mario).
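
The rarer-but-stronger potion idea from the list above could be prototyped with a weighted random pick. The sketch below is only one possible approach: the `POTION_TYPES` table, its values, and the `pickPotion` function are hypothetical and do not exist in the current game.

```javascript
// Hypothetical potion table: stronger potions get smaller weights,
// so they are picked less often.
const POTION_TYPES = [
  { name: "small", heal: 1, weight: 6 },
  { name: "medium", heal: 2, weight: 3 },
  { name: "large", heal: 3, weight: 1 },
];

// Pick one entry with probability proportional to its weight.
// `rand` must return a number in [0, 1); Math.random is the default.
function pickPotion(types = POTION_TYPES, rand = Math.random) {
  const total = types.reduce((sum, t) => sum + t.weight, 0);
  let roll = rand() * total;
  for (const t of types) {
    if (roll < t.weight) return t;
    roll -= t.weight;
  }
  return types[types.length - 1]; // guard against floating-point edge cases
}
```

The picked entry's `heal` value could then replace the fixed `+= 1` used when the player collides with a potion, with the result still capped at the health bar's maximum.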

Final Sketch

GUI

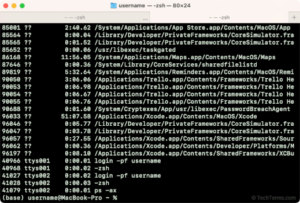
