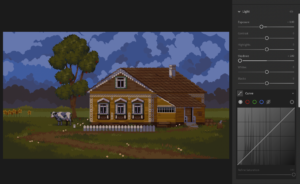

For my midterm project I created a simple interactive piece that focuses on the importance of mindfulness, slowing down and taking a break. The user controls a character that walks left and right with the arrow keys. By walking (or ‘wandering’), the user progresses through a series of scenic environments, ranging from grass fields to mountain ranges. The user is then prompted to ‘think’ at certain points (this feature does not work properly, as explained later) before finally returning home. I sequenced the images (sourced from craftpix) to convey the passage of time, with the exception of the last image, which I edited in Lightroom to create a ‘blue hour’ color palette. The link to the sketch is below. For the best experience, view it with your browser window in full screen:

https://editor.p5js.org/redhaalhammad/sketches/H_1B-Ts-1

In terms of technical application, I am happy that I was able to create intuitive transitions from background to background through my updateBackground functions. I found it challenging to wrap my head around how exactly to implement this functionality. Early on, the background would always change to the next image regardless of whether the user walked off-screen to the left or to the right. I resolved this by adding an else if statement for the left edge that subtracts 1 from the background index rather than adding 1. I feel that this helped create an immersive environment, as it more accurately reflects the progression of the character’s ‘wandering’ journey. The source code for the background transitions is below:

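```javascript
// currentBackground holds the index (1 to 14) of the background being shown.

function updateBackground() {
  // Move to the next background: 1 → 2 → ... → 14 → 1
  currentBackground = (currentBackground % 14) + 1;
}

function updateBackground2() {
  // Move to the previous background: 14 → 13 → ... → 1 → 14.
  // (currentBackground % 14) - 1 would reach 0 and then negative indices,
  // so step 13 places forward instead, which is one place back in a cycle of 14.
  currentBackground = ((currentBackground + 12) % 14) + 1;
}
```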
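The functions above only update the index; the decision about which one to call happens when the character walks off the screen. Below is a minimal sketch of how that check could look, written as plain JavaScript so it runs outside p5. It assumes the same 14 backgrounds numbered from 1 and that the character re-enters from the opposite edge; `handleEdges`, `stepBackground`, `characterX` and `canvasWidth` are hypothetical names, not taken from the original sketch.

```javascript
// Hypothetical sketch: names and the re-entry behaviour are assumptions,
// not taken from the original sketch.
const NUM_BACKGROUNDS = 14; // backgrounds are numbered 1..14

// Step a 1-based background index by +1 or -1, wrapping at both ends.
function stepBackground(current, direction) {
  const zeroBased = current - 1 + direction;
  // JavaScript's % can return negative values, so add NUM_BACKGROUNDS
  // and take the remainder again to keep the result in 0..13.
  return (((zeroBased % NUM_BACKGROUNDS) + NUM_BACKGROUNDS) % NUM_BACKGROUNDS) + 1;
}

// Decide the next background and where the character re-enters the screen.
function handleEdges(characterX, canvasWidth, current) {
  if (characterX > canvasWidth) {
    // Walked off the right edge: next scene, re-enter on the left.
    return { background: stepBackground(current, 1), x: 0 };
  } else if (characterX < 0) {
    // Walked off the left edge: previous scene, re-enter on the right.
    return { background: stepBackground(current, -1), x: canvasWidth };
  }
  // Still on screen: keep the current scene and position.
  return { background: current, x: characterX };
}

console.log(handleEdges(820, 800, 14)); // { background: 1, x: 0 }
console.log(handleEdges(-5, 800, 1));   // { background: 14, x: 800 }
```

Keeping the wrap-around in one helper means the forward and backward cases cannot drift apart, and returning the new position alongside the index keeps the character from immediately triggering the same edge again on the next frame.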

Building on this, I feel that another strength of this project is its continuity, both aesthetic and narrative. Although I initially wanted a basic silhouette sprite sheet to make the experience more universal and relatable, the pixelated style of the character matches the style of the background images. Additionally, the visual aesthetic of the background images is consistent despite their being sourced from different asset folders on craftpix. In terms of narrative, as mentioned previously, I was conscious of sequencing the images to reflect not only the passage of time but also a sense of space. Although I do not repeat images (except for the scene of the character’s home), I deliberately included scenes featuring mountains as the character nears home, echoing the mountain scene that appears at the beginning of the journey. The intention was to signal subtly that the journey is nearing its end: based on the sequencing, the user can infer that the character’s home is located near the mountains.

Unfortunately, this project has several issues that I repeatedly tried, but ultimately failed, to resolve. The first, which will be apparent unless users view the piece in the p5 editor with the window in full screen, is that I could not fix my character and text in position relative to the backgrounds. This is likely because I used ‘innerWidth’ and ‘innerHeight’ for the canvas dimensions, while the background images do not fill that entire space. I tried to set my character’s y-position relative to the height of the images (using summer1 as a reference), but that did not produce the adaptive positioning I wanted.
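One possible approach, sketched here under assumptions rather than as a fix confirmed against the original code: draw each background scaled to fit the canvas with its aspect ratio preserved, then place the character (and text) at a fraction of the *drawn* image's height instead of at a position derived from the full canvas. `fitImage`, `groundY` and `groundFraction` are hypothetical names, and the 0.75 below is an arbitrary example value, not a measurement of summer1.

```javascript
// Hypothetical sketch: fitImage, groundY and groundFraction are illustrative
// names; the idea is to position sprites relative to the drawn image rather
// than to the full innerWidth x innerHeight canvas.

// Scale an image to fit inside the canvas without distortion and centre it.
function fitImage(imgW, imgH, canvasW, canvasH) {
  // The largest uniform scale at which the whole image still fits.
  const scale = Math.min(canvasW / imgW, canvasH / imgH);
  const w = imgW * scale;
  const h = imgH * scale;
  // Any leftover space is split evenly, leaving bars on two sides.
  return { x: (canvasW - w) / 2, y: (canvasH - h) / 2, w, h, scale };
}

// Convert a position given as a fraction of the image height
// (0 = top edge, 1 = bottom edge) into a canvas y-coordinate.
function groundY(fitted, groundFraction) {
  return fitted.y + fitted.h * groundFraction;
}

const fitted = fitImage(400, 200, 800, 800);
console.log(fitted);                // { x: 0, y: 200, w: 800, h: 400, scale: 2 }
console.log(groundY(fitted, 0.75)); // 500
```

In a p5 sketch, assuming summer1 is a loaded p5.Image, this might be used as `const r = fitImage(summer1.width, summer1.height, width, height); image(summer1, r.x, r.y, r.w, r.h);`, with the character drawn at `groundY(r, 0.75)` and its size multiplied by `r.scale`. Recomputing `r` inside `windowResized()` after `resizeCanvas(windowWidth, windowHeight)` would keep the layout consistent when the window changes size.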

Another technical shortcoming was that I could not add a simple audio track to create a sense of atmosphere. Although this is a straightforward feature that I am familiar with, I could not get the track to play just once. When the audio did play, it was triggered every frame, which eventually crashed the sketch. I consulted the examples provided in the class notes, read the references on the p5 website and asked more experienced colleagues, but still could not figure out how to fix it.
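Audio being triggered every frame is consistent with `play()` being called from inside `draw()`, which p5 runs roughly 60 times per second by default. A minimal sketch of a guard that starts a track only once follows. It assumes a p5.sound SoundFile loaded with `loadSound()`, but only relies on the object having a `play()` method, so the logic can be checked without p5; `createAudioStarter` is a hypothetical helper, not part of p5.

```javascript
// Hypothetical sketch: createAudioStarter is an illustrative helper. In p5.sound
// the `sound` argument would be the SoundFile returned by loadSound(); here it
// only needs a play() method.
function createAudioStarter(sound) {
  let started = false; // remembers whether the track has already been started
  return function startOnce() {
    if (started) {
      return false; // later calls do nothing, so the track is never retriggered
    }
    started = true;
    sound.play();
    return true;
  };
}

// Simulate 60 frames calling the starter, as draw() would.
let plays = 0;
const fakeSound = { play() { plays += 1; } };
const startAudio = createAudioStarter(fakeSound);
for (let frame = 0; frame < 60; frame++) {
  startAudio();
}
console.log(plays); // 1
```

Rather than calling the starter from `draw()` at all, one option is to call it from `keyPressed()` on the first arrow press. Browsers generally block audio until the user has interacted with the page, so a key press is also a natural moment to start it; calling `loop()` once instead of `play()` would repeat the track continuously.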

Finally, the issue I am most deeply upset about is the lack of functionality in the ‘press ENTER to think’ prompt. At one point I did get the ‘think’ prompt to work. However, while it was working, my sprite sheet was not fully functional: the character would move across the screen without being animated. I suspect that my ‘keyPressed’ function and ‘keyCode’ checks were interfering with one another, but I could not figure out how to resolve it. I am especially upset that this element did not work, as I feel that it would have elevated my project on several levels. First, it would have added another layer of interactivity beyond the basic movement, making the piece more engaging. Second, it relates directly to the intention behind this work by prompting the user to physically stop progressing and focus on the digital scenery in front of them. Moreover, the text that appeared on-screen when this element was functional (still in the source code) added a much-needed personal touch and sense of character that I feel the work currently lacks.
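Without the full source it is hard to say exactly where the conflict was, but one common way to keep a toggle key and movement keys from interfering is to handle them on separate paths: ENTER is handled once per press in `keyPressed()`, the arrows are polled every frame with `keyIsDown()`, and the walk-cycle frame advances only while the character is actually moving. The sketch below assumes that structure; `state`, `onKeyPressed`, `updateCharacter` and the `KEY_*` constants are hypothetical names (the values match p5's built-in `ENTER`, `LEFT_ARROW` and `RIGHT_ARROW`).

```javascript
// Hypothetical sketch: state shape, function names and the key-code constants
// are illustrative. The values match p5's ENTER, LEFT_ARROW and RIGHT_ARROW,
// but are given different names here to avoid clashing with p5's globals.
const KEY_ENTER = 13;
const KEY_LEFT = 37;
const KEY_RIGHT = 39;

// Runs once per key press (in p5: from keyPressed(), passing keyCode).
function onKeyPressed(state, code) {
  if (code === KEY_ENTER) {
    state.thinking = !state.thinking; // toggle the 'think' pause
  }
  // Arrow keys are not handled here; movement is polled every frame instead.
}

// Runs every frame (in p5: from draw(), passing a keyIsDown wrapper).
function updateCharacter(state, isDown, speed, numFrames) {
  if (state.thinking) return; // stand still while thinking
  let dx = 0;
  if (isDown(KEY_RIGHT)) dx += speed;
  if (isDown(KEY_LEFT)) dx -= speed;
  if (dx === 0) return; // idle: keep the current sprite frame
  state.x += dx;
  state.facing = dx > 0 ? 1 : -1;
  // Advance the walk cycle only while moving, wrapping to the first frame.
  state.frame = (state.frame + 1) % numFrames;
}

// Example: walk right, pause with ENTER, resume.
const state = { x: 0, frame: 0, facing: 1, thinking: false };
const held = new Set([KEY_RIGHT]);
const isDown = (code) => held.has(code);
updateCharacter(state, isDown, 5, 4); // moves and animates
onKeyPressed(state, KEY_ENTER);       // start thinking
updateCharacter(state, isDown, 5, 4); // ignored while thinking
onKeyPressed(state, KEY_ENTER);       // stop thinking
updateCharacter(state, isDown, 5, 4); // moves and animates again
console.log(state.x, state.frame);    // 10 2
```

In a p5 sketch, `keyPressed()` would call `onKeyPressed(state, keyCode)`, and `draw()` would call `updateCharacter(state, (c) => keyIsDown(c), 4, 8)` before drawing frame `state.frame` of the sprite sheet; the speed of 4 and the 8-frame cycle are placeholders. Advancing the frame on every `draw()` call animates quickly at 60 fps, so in practice the frame might only be advanced every few calls.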