Guitar is overrated 🎸😒. Anyone who was impressed by an electric-guitar-controlled cyberpunk game should seriously raise their standards.

When I am not working on brain-controlled robots, I look after my child, The Tale of Lin & Lang: a fictional universe and alternative-history reimagining in which the Industrial Revolution began in East Asia (China/Korea/Japan). In that world, there are steampunk inventors who build computers, clockwork and machines … and there are also artisans who play the traditional bamboo flute (笛子 – dízi).

Well, that’s fiction… or is it 🤔? In real life, I am also a bamboo flute player, an inventor and a steampunk enthusiast… so I present Fwitch, a flute-controlled steampunk switch.

Below is the complete demonstration.

HOW IT WORKS

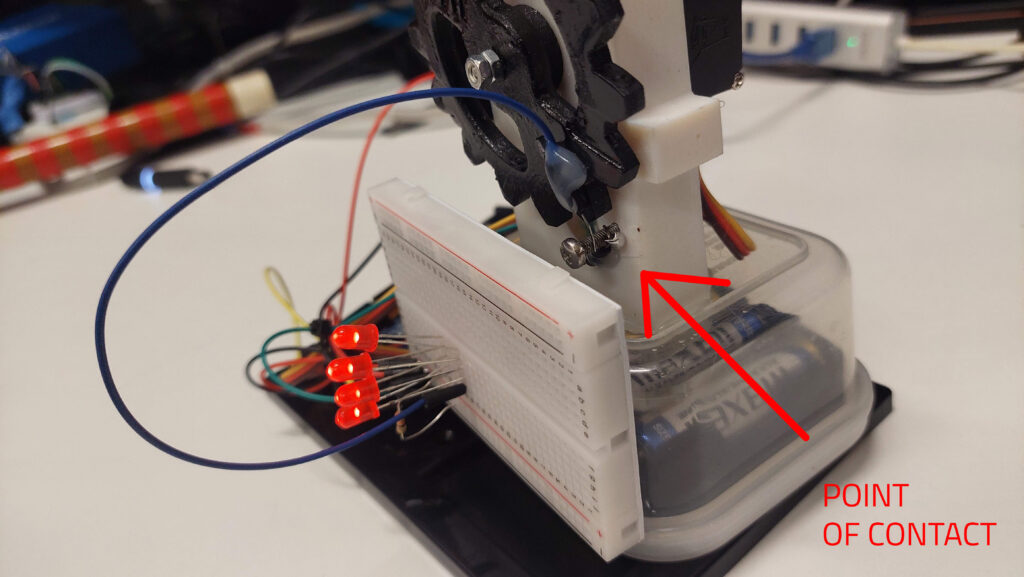

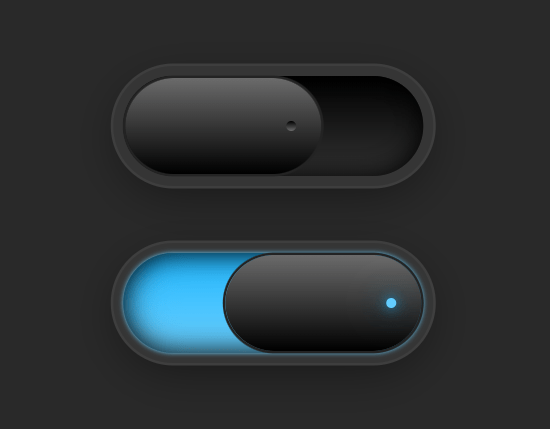

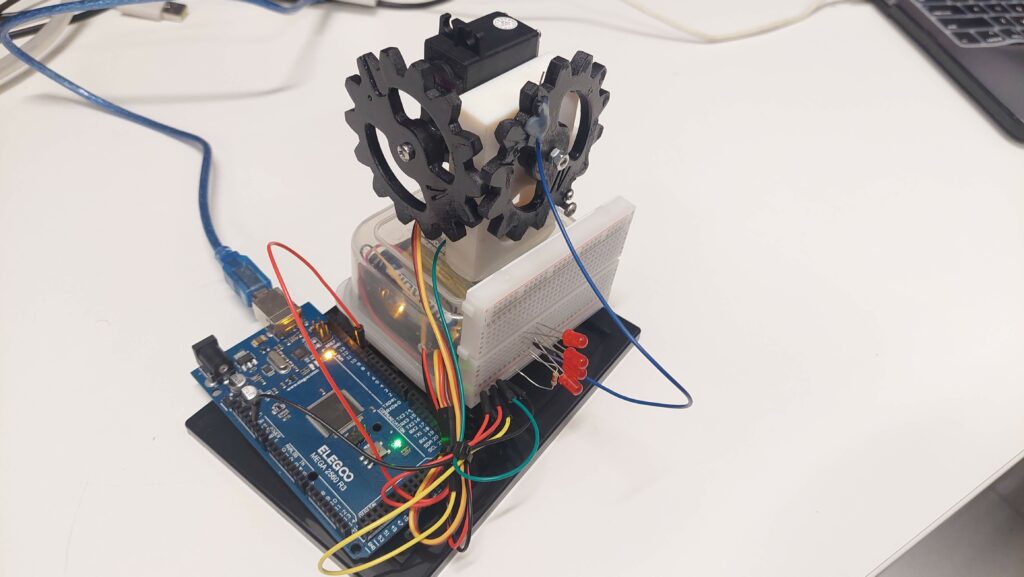

It’s a switch, so nothing complicated: one end of the wire needs to move and meet another wire. I am just driving that motion with two steampunk-style gears that I 3D printed and painted.

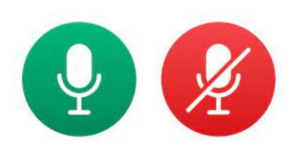

When I blow the flute, the laptop mic picks up the volume. Once it rises above a set threshold, the Python script sends a command over the serial connection to the Arduino, telling the servo motor to rotate to a particular angle (180° for on, 0° for off). Blowing again, after the sound has dropped back below the threshold, toggles the switch back. Simple.
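The toggle behaviour can be sketched as a tiny state machine, kept separate from the audio and serial parts so it can be tried without a flute or an Arduino. This is a minimal sketch that mirrors the `switch_on` / `switch_toggled` flags of the script in the CODE section; the class name `FluteToggle`, the helper `servo_angle` and the made-up list of loudness readings are hypothetical illustrations, not part of the original project.

```python
class FluteToggle:
    """Edge-triggered toggle: flips once per loud blow, re-arms when quiet.

    Hypothetical helper mirroring the switch_on / switch_toggled logic.
    """

    def __init__(self, threshold: int = 30) -> None:
        self.threshold = threshold
        self.switch_on = False  # current switch state
        self.armed = True       # True once the volume has dropped back below the threshold

    def update(self, level: int) -> bool:
        """Feed one loudness reading; return True if the switch flipped."""
        if level > self.threshold and self.armed:
            self.switch_on = not self.switch_on
            self.armed = False  # ignore the rest of this blow
            return True
        if level <= self.threshold:
            self.armed = True   # quiet again: the next blow may toggle
        return False


def servo_angle(switch_on: bool) -> int:
    # Angle the script sends: 180 degrees for "on", 0 for "off".
    return 180 if switch_on else 0


if __name__ == "__main__":
    toggle = FluteToggle(threshold=30)
    # Quiet, one long blow, quiet, a second blow, quiet (made-up levels).
    readings = [5, 40, 45, 38, 10, 3, 35, 50, 8]
    for level in readings:
        if toggle.update(level):
            print(f"level {level}: switch -> {toggle.switch_on}, "
                  f"servo {servo_angle(toggle.switch_on)} deg")
```

Running it prints one line per blow: the first turns the switch on (servo to 180°), the second turns it off (servo to 0°). The re-arm step is what stops a single sustained note from flipping the switch on every audio chunk (1024 samples, about 23 ms at 44.1 kHz).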

The servo I am using is quite large (because the gears are large), so it needs an external power supply. The supply is hidden in the container below to keep everything neat and tidy.

And yes, I am using a Chinese clone of the Arduino Mega board.

Below are close up shots.

CODE

The following Python code listens to the microphone on my computer and, whenever the volume crosses a set threshold, sends a switch on/off signal to the Arduino over serial. I could have attached a microphone to the Arduino and done everything there, but I could not find one, so I decided to use my laptop mic.

```python
import pyaudio
import numpy as np
import os
import time
import serial
import serial.tools.list_ports

switch_on = False
volume_threshold = 30  # Configurable threshold (measured in "stars" of the volume bar)
switch_toggled = False  # Flag to track if the switch was toggled by the current blow


def clear_screen():
    # Clear the console screen.
    os.system('cls' if os.name == 'nt' else 'clear')


def list_serial_ports():
    ports = serial.tools.list_ports.comports()
    return ports


def get_volume(data, frame_count, time_info, status):
    # PyAudio calls this for every CHUNK of samples recorded from the mic.
    global switch_on, switch_toggled
    audio_data = np.frombuffer(data, dtype=np.int16)
    if len(audio_data) > 0:
        # Widen to int32 first: np.abs() of the int16 value -32768 overflows.
        volume = np.mean(np.abs(audio_data.astype(np.int32)))
        num_stars = max(1, int(volume / 100))
        if num_stars > volume_threshold and not switch_toggled:
            # Loud enough and not yet handled: flip the switch once.
            switch_on = not switch_on
            ser.write(b'180\n' if switch_on else b'0\n')
            switch_toggled = True
        elif num_stars <= volume_threshold and switch_toggled:
            # Quiet again: re-arm so the next blow can toggle.
            switch_toggled = False
        clear_screen()
        print(f"Switch:{switch_on}\nVolume: {'*' * num_stars}")
    return None, pyaudio.paContinue


# List and select serial port
ports = list_serial_ports()
for i, port in enumerate(ports):
    print(f"{i}: {port}")
selected_port = int(input("Select port number: "))
ser = serial.Serial(ports[selected_port].device, 9600)
time.sleep(2)  # Wait for serial connection to initialize (opening the port usually resets the board)
ser.write(b'0\n')  # Initialize with switch off

# Audio setup
FORMAT = pyaudio.paInt16
CHANNELS = 1
RATE = 44100
CHUNK = 1024
audio = pyaudio.PyAudio()

# Start the stream to record audio
stream = audio.open(format=FORMAT, channels=CHANNELS,
                    rate=RATE, input=True,
                    frames_per_buffer=CHUNK,
                    stream_callback=get_volume)

# Start the stream
stream.start_stream()

# Keep the script running until you stop it (Ctrl+C)
try:
    while True:
        time.sleep(0.1)
except KeyboardInterrupt:
    # Stop and close the stream and serial
    stream.stop_stream()
    stream.close()
    ser.close()
    audio.terminate()
```
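The loudness check in `get_volume` is the mean absolute sample value of each 16-bit chunk, divided by 100 to give the number of stars. Working it backwards, `num_stars > 30` holds exactly when the mean absolute amplitude is at least 100 × (30 + 1) = 3100, roughly 9.5% of the 16-bit full scale of 32768. The sketch below reproduces that calculation in plain Python, using the standard `array` module instead of NumPy so it runs without extra packages; the function names and the synthetic test buffers are hypothetical stand-ins for real microphone data.

```python
from array import array

FULL_SCALE = 32768  # magnitude of the most negative 16-bit sample


def chunk_volume(data: bytes) -> float:
    """Mean absolute amplitude of a chunk of native-endian int16 samples."""
    samples = array("h")
    samples.frombytes(data)
    if not samples:
        return 0.0
    return sum(abs(s) for s in samples) / len(samples)


def num_stars(volume: float) -> int:
    # Same scaling as the script: one star per 100 units, at least one star.
    return max(1, int(volume / 100))


def min_volume_for(threshold: int) -> int:
    # For threshold >= 1: num_stars > threshold  <=>  int(volume / 100) >= threshold + 1
    return 100 * (threshold + 1)


if __name__ == "__main__":
    quiet = array("h", [300, -300] * 512).tobytes()   # 1024 samples, mean |x| = 300
    loud = array("h", [3100, -3100] * 512).tobytes()  # 1024 samples, mean |x| = 3100
    for name, chunk in [("quiet", quiet), ("loud", loud)]:
        v = chunk_volume(chunk)
        print(name, v, num_stars(v), num_stars(v) > 30)
    edge = min_volume_for(30)
    print(edge, round(edge / FULL_SCALE * 100, 1), "% of full scale")
```

This should print `quiet 300.0 3 False`, `loud 3100.0 31 True` and `3100 9.5 % of full scale`, which gives a feel for how hard you have to blow before the switch reacts; lowering `volume_threshold` moves that edge down in steps of 100.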

And this Arduino sketch listens for commands from the Python script on the serial port and rotates the servo accordingly.

```cpp
#include <Servo.h>

Servo myservo;
int val;

void setup() {
  myservo.attach(9);    // servo signal wire on pin 9
  Serial.begin(9600);   // must match the baud rate used in the Python script
  Serial.println("Servo Controller Ready");
}

void loop() {
  if (Serial.available() > 0) {
    String input = Serial.readStringUntil('\n'); // read the string until newline
    val = input.toInt();                         // convert the string to integer
    val = constrain(val, 0, 180);                // keep within the servo's range
    myservo.write(val);
    Serial.print("Position set to: ");
    Serial.println(val);
    delay(15);
  }
}
```
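The two programs talk through a very small text protocol: Python writes an angle in ASCII followed by a newline (`b'180\n'` or `b'0\n'`), and the Arduino sketch reads up to the newline, converts it with `toInt()` and clamps it with `constrain(val, 0, 180)`. The Python sketch below models both ends so the protocol can be checked without hardware. It is an approximation under stated assumptions: `encode_angle`, `parse_command` and `receive` are hypothetical helpers, and `parse_command` only mimics `String.toInt()` (skip leading whitespace, optional sign, leading digits, 0 when nothing parses) rather than reproducing the Arduino implementation.

```python
import re
from typing import List

SERVO_MIN, SERVO_MAX = 0, 180  # range enforced by constrain() in the sketch


def encode_angle(angle: int) -> bytes:
    """What the Python side writes: ASCII digits plus a newline terminator."""
    return f"{angle}\n".encode("ascii")


def parse_command(line: str) -> int:
    """Rough model of input.toInt() followed by constrain(val, 0, 180)."""
    match = re.match(r"\s*([+-]?\d+)", line)
    val = int(match.group(1)) if match else 0  # toInt() yields 0 if nothing parses
    return max(SERVO_MIN, min(SERVO_MAX, val))


def receive(stream: bytes) -> List[int]:
    """Split a byte stream into newline-terminated commands and parse each one.

    This model ignores an unterminated tail; the real readStringUntil()
    would return it once its timeout expires.
    """
    *complete, _rest = stream.split(b"\n")
    return [parse_command(chunk.decode("ascii")) for chunk in complete]


if __name__ == "__main__":
    wire = encode_angle(180) + encode_angle(0) + encode_angle(270) + b"oops\n"
    print(wire)
    print(receive(wire))
```

This should print `b'180\n0\n270\noops\n'` followed by `[180, 0, 180, 0]`. An out-of-range value such as 270 is clamped to 180, and a garbled line becomes 0, which would drive the servo to the "off" position. That is a property of `toInt()` plus `constrain()` worth keeping in mind if the serial line is ever noisy.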

Hope you enjoy it!

Remarks

Well, since the assignment rubric required the use of Arduino, I used an Arduino. Had it been the original assignment without Arduino, things could have gotten more interesting 🤔. Arduino is a tool; transistors are tools. Many people are inclined to believe that implementing programmable logic requires electronics.

🚫 NOOOO!!!!

My inner computer engineer says logic can be implemented anywhere with proper mechanism, and if you can implement logic, anything is a computer.

- 🧬 Human DNA is just a program: the cell reads its sequence of nucleobases and produces proteins based on it.

- 💧 We can use water and pipes to implement logic gates and design our hydro switch.

- 🍳 If I wanted to, even the omelette on my breakfast plate could be a switch.

We don’t even need three-pin transistors; we can design purely “mechanical” logic gates and build the switch from those. But oh well… putting my celestial mechanics back into my pocket.
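To make the remark concrete: the flute switch only needs one piece of logic, next state = current state XOR (a new blow has started). The sketch below builds that from nothing but a NAND function, using the standard four-NAND XOR construction. Whether `nand` is realised by transistors, water valves or levers makes no difference to the rest of the circuit; here it is just a Python function, and all names are illustrative rather than part of the project. The stored state itself would be a latch, which can also be made from cross-coupled NAND gates; the sketch simply keeps it in a variable.

```python
def nand(a: int, b: int) -> int:
    # The only primitive. Any substrate that can do this (valves, levers, ...) will do.
    return 0 if (a and b) else 1


def not_(a: int) -> int:
    return nand(a, a)


def and_(a: int, b: int) -> int:
    return not_(nand(a, b))


def xor(a: int, b: int) -> int:
    # Classic four-NAND XOR.
    n = nand(a, b)
    return nand(nand(a, n), nand(b, n))


def step(state: int, loud: int, was_loud: int) -> int:
    """One tick of the flute switch: toggle on the rising edge of loudness."""
    rising_edge = and_(loud, not_(was_loud))
    return xor(state, rising_edge)


if __name__ == "__main__":
    for a in (0, 1):
        for b in (0, 1):
            print("xor", a, b, "->", xor(a, b))
    state, was_loud = 0, 0
    for loud in [0, 1, 1, 0, 1, 0]:  # two separate blows
        state = step(state, loud, was_loud)
        was_loud = loud
        print("loud", loud, "state", state)
```

The switch state goes 0, 1, 1, 1, 0, 0: it flips once at the start of each blow and holds while the note is sustained, the same behaviour as the Python script, just expressed in gates.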