Concept

The Smart House System is an interactive physical computing project that simulates features of an intelligent home using an Arduino UNO and p5.js. The system includes:

- A smart parking assistant that detects cars entering and exiting, and updates available parking slots automatically.
- A light automation system that turns on indoor lights when it gets dark, based on ambient light readings.
- A real-time dashboard and voice announcer, implemented in p5.js, that visualizes the system state and speaks updates aloud using p5.speech.

This system provides a fun and engaging way to demonstrate real-world home automation, combining sensors, outputs, and visual/voice feedback for user interaction.

Interaction Demo

How the Implementation Works

The system uses two ultrasonic distance sensors to detect when a vehicle is near the entry or exit of the parking area. A servo motor simulates the gate. It opens when a car arrives and a slot is available, and it also opens when a car leaves.
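Each distance check comes down to timing an echo. The following is a minimal C++ sketch of that conversion. It assumes a typical trigger/echo ultrasonic module (the post does not name the model) and the same 0.034 cm/µs speed of sound used in the Arduino code. The names `echoToCm`, `isCarPresent`, and `kNoReading` are hypothetical. The key point is that a timed-out reading of 0 µs must not be treated as an object at 0 cm.

```cpp
// Assumed conversion factor: sound travels roughly 0.034 cm per microsecond
// at room temperature. The echo covers the distance twice (out and back).
constexpr float kCmPerMicrosecond = 0.034f;

// Hypothetical sentinel meaning "no echo received before the timeout".
constexpr int kNoReading = -1;

// Convert an echo pulse width in microseconds (as returned by pulseIn())
// to a distance in centimetres.
int echoToCm(unsigned long durationUs) {
  if (durationUs == 0) {
    return kNoReading;  // pulseIn() returns 0 on timeout: not "0 cm away"
  }
  return static_cast<int>(durationUs * kCmPerMicrosecond / 2.0f);
}

// A car counts as present only for a valid reading under the threshold
// (10 cm, the value used in the original loop()).
bool isCarPresent(int distanceCm, int thresholdCm = 10) {
  return distanceCm != kNoReading && distanceCm < thresholdCm;
}
```

On the board, `durationUs` would be the value returned by `pulseIn(echoPin, HIGH, timeout)`.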

A photoresistor (LDR) measures the ambient light level. When it gets dark, the Arduino turns on five LEDs that simulate indoor lighting.
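The Arduino code uses a single cutoff of 900, so the LEDs can flicker when the reading hovers near that value. A common remedy, not used in the original project, is hysteresis: switch on below one threshold and switch off only above a higher one. Below is a C++ sketch of that idea. `LightController` and both threshold values are illustrative assumptions, not calibrated numbers.

```cpp
// Light control with hysteresis: two thresholds instead of one.
// Readings follow the original comment's convention: 0 = dark, 1023 = bright.
struct LightController {
  int onBelow = 880;    // Assumed: turn lights on when the reading drops below this
  int offAbove = 920;   // Assumed: turn lights off only once it rises above this
  bool lightsOn = false;

  // Returns true when the state changed, i.e. when a message should be sent.
  bool update(int reading) {
    if (!lightsOn && reading < onBelow) {
      lightsOn = true;
      return true;
    }
    if (lightsOn && reading > offAbove) {
      lightsOn = false;
      return true;
    }
    return false;  // Inside the dead band or no change: keep the current state
  }
};
```

The gap between the two thresholds absorbs small fluctuations, so a hand hovering over the LDR does not toggle the lights on every loop.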

All event messages from the Arduino are sent to a p5.js sketch over Web Serial. The browser-based sketch then:

- Displays the parking status
- Shows the light status
- Uses p5.speech to speak real-time messages like “Parking is full!” or “Lights are now on!”

Interaction Design

The project is designed for simple, touchless interaction using real-world sensors:

- Bringing your hand or an object within about 10 cm of the entry sensor simulates a car arriving. If a slot is available, the gate opens, the slot count decreases, and a voice announces the update.
- Moving your hand in front of the exit sensor simulates a car leaving, which frees up a parking slot.
- Covering the LDR simulates nighttime: the lights turn on automatically and the system announces it.
- The p5.js dashboard shows the real-time status and acts as an interactive voice feedback system.
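As written, the Arduino loop reads the entry sensor again after each gate cycle of roughly 4 seconds. A hand held in place can therefore be counted as a second car. One way to avoid this, not part of the original code, is edge detection. An arrival is counted only when the distance first drops below a near threshold, and the detector re-arms only after the distance rises past a farther one. `ArrivalDetector` and the 10/15 cm thresholds in the usage below are hypothetical.

```cpp
// Edge detector: fires once when an object arrives, then waits until it leaves.
struct ArrivalDetector {
  int nearCm;             // Distance that counts as "arrived"
  int farCm;              // Distance the object must exceed before re-arming
  bool occupied = false;  // True while an object is in front of the sensor

  ArrivalDetector(int nearThreshold, int farThreshold)
      : nearCm(nearThreshold), farCm(farThreshold) {}

  // Feed one distance reading; returns true only on a new arrival.
  bool update(int distanceCm) {
    if (!occupied && distanceCm < nearCm) {
      occupied = true;
      return true;
    }
    if (occupied && distanceCm > farCm) {
      occupied = false;  // Object has left: ready for the next car
    }
    return false;
  }
};
```

For example, `ArrivalDetector entry(10, 15);` fed the readings 50, 8, 7, 9, 12, 20, 6 reports two arrivals, not five. The hand lingering at 7 to 12 cm is treated as the same car.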

Arduino Code

The Arduino UNO is responsible for:

- Reading two ultrasonic sensors for car entry/exit
- Reading the photoresistor (LDR) for the light level
- Controlling a servo motor for the gate
- Controlling 5 indoor LEDs
- Sending status messages to the p5.js sketch over serial

Code Overview:

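- Starts with 3 available parking slots
- Opens the gate and decreases the slot count when a car is detected within 10 cm of the entry sensor and a slot is free
- Opens the gate and increases the slot count when a car exits (up to the maximum of 3)
- Turns on the indoor lights when the light reading drops below a threshold (900 on the 0 to 1023 analog scale)
- Sends messages such as `car_entry`, `car_exit`, `parking_full`, `lights_on`, `lights_off`, and `parking_spots:X`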

#include <Servo.h>

// Ultrasonic sensor pins
#define trigEntry 2
#define echoEntry 3
#define trigExit 4
#define echoExit 5

// Servo motor pin
#define servoPin 6

// LED pins
int ledPins[] = {7, 8, 9, 10, 11};

// Light sensor pin
#define lightSensor A0

Servo gateServo;

int Slot = 3;          // Initial parking spots
bool lightsOn = false; // Last light state sent over serial

// Prototypes: the Arduino IDE generates these automatically for .ino files,
// but they are needed if the sketch is compiled as a plain .cpp file
int getDistance(int trigPin, int echoPin);
void openGate();
void closeGate();

void setup() {
  Serial.begin(9600);

  // Ultrasonic sensors
  pinMode(trigEntry, OUTPUT);
  pinMode(echoEntry, INPUT);
  pinMode(trigExit, OUTPUT);
  pinMode(echoExit, INPUT);

  // LED pins
  for (int i = 0; i < 5; i++) {
    pinMode(ledPins[i], OUTPUT);
  }

  // LDR analog input
  pinMode(lightSensor, INPUT);

  // Servo
  gateServo.attach(servoPin);
  gateServo.write(100); // Gate closed
}

void loop() {
  int entryDistance = getDistance(trigEntry, echoEntry);
  int exitDistance = getDistance(trigExit, echoExit);
  int lightValue = analogRead(lightSensor); // 0 (dark) to 1023 (bright)

  // Debug line; it shares the serial port with the event messages,
  // so the p5.js sketch has to skip lines it does not recognize
  Serial.print("Entry: "); Serial.print(entryDistance);
  Serial.print(" | Exit: "); Serial.print(exitDistance);
  Serial.print(" | Light: "); Serial.print(lightValue);
  Serial.print(" | Slots: "); Serial.println(Slot);

  // ===== Car Entry Logic =====
  if (entryDistance < 10 && Slot > 0) {
    openGate();
    Slot--;
    Serial.println("car_entry");
    Serial.print("parking_spots:");
    Serial.println(Slot);
    delay(2000);
    closeGate();
  }
  // ===== Parking Full Logic =====
  else if (entryDistance < 10 && Slot == 0) {
    Serial.println("parking_full");
    delay(1000); // Prevent spamming the message
  }

  // ===== Car Exit Logic =====
  if (exitDistance < 10 && Slot < 3) {
    openGate();
    Slot++;
    Serial.println("car_exit");
    Serial.print("parking_spots:");
    Serial.println(Slot);
    delay(2000);
    closeGate();
  }

  // ===== Light Control (5 LEDs) =====
  // Only switch the LEDs and send a message when the state changes,
  // so the dashboard is not flooded with lights_on/lights_off every loop
  bool isDark = lightValue < 900;
  if (isDark != lightsOn) {
    lightsOn = isDark;
    for (int i = 0; i < 5; i++) {
      digitalWrite(ledPins[i], lightsOn ? HIGH : LOW);
    }
    Serial.println(lightsOn ? "lights_on" : "lights_off");
  }

  delay(500);
}

// ===== Gate Functions =====
void openGate() {
  gateServo.write(0);
  delay(1000);
}

void closeGate() {
  gateServo.write(100);
  delay(1000);
}

// ===== Distance Sensor Function =====
int getDistance(int trigPin, int echoPin) {
  digitalWrite(trigPin, LOW);
  delayMicroseconds(2);
  digitalWrite(trigPin, HIGH);
  delayMicroseconds(10);
  digitalWrite(trigPin, LOW);

  // Wait at most 30 ms for the echo. pulseIn() returns 0 on timeout,
  // which would otherwise read as 0 cm and trigger a false car detection
  unsigned long duration = pulseIn(echoPin, HIGH, 30000UL);
  if (duration == 0) {
    return 999; // No echo: treat as "nothing nearby"
  }

  int distance = duration * 0.034 / 2; // ~0.034 cm/us, halved for the round trip
  return distance;
}
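The slot bookkeeping is pure arithmetic, so it can be pulled out of `loop()` and tested without hardware. The following C++ sketch mirrors the entry, full, and exit branches above and returns the serial lines each event would produce. `handleEntry`, `handleExit`, and `kMaxSlots` are hypothetical names, not part of the project.

```cpp
#include <string>
#include <vector>

constexpr int kMaxSlots = 3;  // The original starts with 3 free slots

// Updates the free-slot count for an entry event and returns the
// serial lines the Arduino would print.
std::vector<std::string> handleEntry(int& freeSlots) {
  if (freeSlots == 0) {
    return {"parking_full"};
  }
  --freeSlots;
  return {"car_entry", "parking_spots:" + std::to_string(freeSlots)};
}

// An exit only counts if at least one slot is occupied.
std::vector<std::string> handleExit(int& freeSlots) {
  if (freeSlots >= kMaxSlots) {
    return {};  // Nothing to release: ignore the reading
  }
  ++freeSlots;
  return {"car_exit", "parking_spots:" + std::to_string(freeSlots)};
}
```

In the sketch, each returned string would be passed to `Serial.println()`, and the gate and delay calls would stay in `loop()`.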

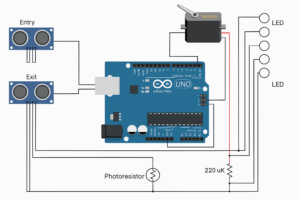

Circuit Schematic

p5.js Code and Dashboard

The p5.js sketch:

- Uses the `p5.webserial` library to connect to the Arduino
- Uses `p5.speech` for voice announcements
- Displays a dashboard showing the number of available parking slots
- Shows the indoor light status using a colored circle

The voice announcements are fun and slightly humorous, e.g.:

“A wild car appears!”

“Uh-oh! Parking is full.”

“It’s getting spooky in here… turning the lights on!”

The sketch routes all speech through a `say()` wrapper function, so voice output starts only after the user has clicked once. Chrome blocks audio playback until the page receives a user gesture.
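The gating behind `say()` can be modeled as a small wrapper that stays muted until the first click. This sketch is written in C++ to match the Arduino code, not in the JavaScript the dashboard actually uses. `SpeechGate` is a hypothetical name, and the `speak` callback stands in for the p5.speech call. Dropping announcements made before the click, rather than queuing them, is an assumed policy.

```cpp
#include <functional>
#include <string>
#include <utility>

// Models a say()-style wrapper: speech stays muted until the first user click.
class SpeechGate {
 public:
  explicit SpeechGate(std::function<void(const std::string&)> speak)
      : speak_(std::move(speak)) {}

  // Call from the click handler; afterwards speech is allowed.
  void onUserClick() { enabled_ = true; }

  // Returns true if the text was spoken, false if it was dropped.
  bool say(const std::string& text) {
    if (!enabled_) {
      return false;  // Assumed policy: drop, rather than queue, early messages
    }
    speak_(text);
    return true;
  }

 private:
  std::function<void(const std::string&)> speak_;
  bool enabled_ = false;
};
```

Dropping early messages avoids a backlog of stale announcements being read out all at once after the first click. A queue would suit a design that must never miss an event.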

Code Highlights:

- Automatically resumes Chrome’s audio context
- Waits for user interaction before enabling speech
- Processes serial messages one line at a time
- Provides a Connect/Disconnect button for user control
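Processing one line at a time requires a buffer, because serial data can arrive split across reads (for example `car_en` in one chunk and `try` in the next). Below is a minimal C++ sketch of that buffering, again standing in for the JavaScript side. `LineBuffer` is a hypothetical name.

```cpp
#include <cstddef>
#include <string>
#include <vector>

// Accumulates serial chunks and emits only complete, newline-terminated lines.
class LineBuffer {
 public:
  // Appends a chunk and returns every line it completed.
  std::vector<std::string> feed(const std::string& chunk) {
    buffer_ += chunk;
    std::vector<std::string> lines;
    std::size_t pos;
    while ((pos = buffer_.find('\n')) != std::string::npos) {
      std::string line = buffer_.substr(0, pos);
      if (!line.empty() && line.back() == '\r') {
        line.pop_back();  // Serial.println() ends lines with "\r\n"
      }
      lines.push_back(line);
      buffer_.erase(0, pos + 1);
    }
    return lines;
  }

 private:
  std::string buffer_;  // Holds a trailing partial line between calls
};
```

Feeding `"car_en"` returns nothing. Feeding `"try\r\nparking_spots:2\r\nlig"` afterwards returns `car_entry` and `parking_spots:2` and keeps `lig` for the next call.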

Arduino and p5.js Communication

Communication uses the Web Serial API through the p5.webserial library:

- The Arduino sends one message per line, such as `car_entry` or `lights_on`, each terminated by the `\r\n` that `Serial.println()` appends
- p5.js reads each line, processes it, updates the dashboard, and speaks it aloud
- A connect button in the sketch lets users select their Arduino port manually
- All communication is one-way: Arduino → p5.js
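The message set is small enough to parse with plain string comparisons. The C++ sketch below maps each line to a hypothetical `MsgType` and extracts the number from `parking_spots:X`. The dashboard itself does this in JavaScript. Any other line, such as the Arduino's debug output, comes back as `Unknown` so the caller can ignore it. The sketch assumes the trailing `\r` has already been stripped.

```cpp
#include <string>

enum class MsgType { CarEntry, CarExit, ParkingFull, LightsOn, LightsOff, ParkingSpots, Unknown };

struct Message {
  MsgType type;
  int value;  // Only meaningful for ParkingSpots
};

Message parseMessage(const std::string& line) {
  if (line == "car_entry") return {MsgType::CarEntry, 0};
  if (line == "car_exit") return {MsgType::CarExit, 0};
  if (line == "parking_full") return {MsgType::ParkingFull, 0};
  if (line == "lights_on") return {MsgType::LightsOn, 0};
  if (line == "lights_off") return {MsgType::LightsOff, 0};

  const std::string prefix = "parking_spots:";
  if (line.compare(0, prefix.size(), prefix) == 0) {
    const std::string digits = line.substr(prefix.size());
    // Accept only a short run of digits, so std::stoi cannot throw
    if (!digits.empty() && digits.size() <= 4 &&
        digits.find_first_not_of("0123456789") == std::string::npos) {
      return {MsgType::ParkingSpots, std::stoi(digits)};
    }
  }
  // Anything else (e.g. the "Entry: ... | Slots: ..." debug line) is ignored
  return {MsgType::Unknown, 0};
}
```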

What I’m Proud Of

- Fully working sensor-triggered voice feedback in p5.js, which makes the system feel alive
- Smooth parking logic with entry and exit detection
- Integration of multiple Arduino components (servo, LDR, LEDs, ultrasonic sensors)
- An intuitive UI that works both visually and through voice
- A reliable browser-based connection using the Web Serial API

Areas for Future Improvement

- Add a screen-based parking spot display (e.g., 7-segment or OLED)
- Use non-blocking Arduino code based on `millis()` instead of `delay()`
- Make a mobile-responsive version of the dashboard UI
- Add a security camera feed or face detection in p5.js
- Improve the servo animation to be smoother and time-synced
- Add a buzzer or alert when parking is full
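The `millis()` improvement amounts to replacing each `delay()` with a timestamp check. Below is a minimal C++ sketch of a non-blocking gate. It assumes the loop passes in the current time (`millis()` on the board). `GateTimer` is a hypothetical name. A hold time of 2000 ms would match the original `delay(2000)`.

```cpp
enum class GateState { Closed, Open };

// Non-blocking gate timing: the caller supplies the current time instead of
// the gate blocking the whole loop with delay().
class GateTimer {
 public:
  explicit GateTimer(unsigned long holdOpenMs) : holdOpenMs_(holdOpenMs) {}

  // Call when a car is detected; returns true if the gate should open now.
  bool requestOpen(unsigned long now) {
    if (state_ == GateState::Open) return false;  // Already open
    state_ = GateState::Open;
    openedAt_ = now;
    return true;
  }

  // Call on every loop pass; returns true at the moment the gate should close.
  bool update(unsigned long now) {
    // Unsigned subtraction stays correct when the timer wraps around.
    if (state_ == GateState::Open && now - openedAt_ >= holdOpenMs_) {
      state_ = GateState::Closed;
      return true;
    }
    return false;
  }

  GateState state() const { return state_; }

 private:
  unsigned long holdOpenMs_;
  unsigned long openedAt_ = 0;
  GateState state_ = GateState::Closed;
};
```

Inside `loop()`, a call like `if (gate.requestOpen(millis())) gateServo.write(0);` would run when a car is detected, and `if (gate.update(millis())) gateServo.write(100);` would run on every pass. The sensors and lights would then stay responsive while the gate is open.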