Concept

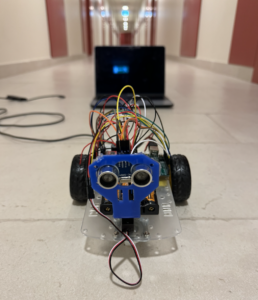

For my final project, I built a human-following robot that I think of as a robotic pet, inspired by none other than Wall-E, the lovable robot from the Pixar film. Like Wall-E, it is meant to tag along beside you, using its onboard sensors to detect your presence and movement and trailing after you the way a curious pet follows its owner.

But there’s a twist: it’s not just an automatic follower. Through a p5.js interface, you can take the reins too, steering it with your keyboard and mouse as if you were playing a video game. The idea struck me while watching Wall-E’s adventures, and I thought: why not turn that inspiration into something real? Something you can interact with, like a pet eager for your attention, whether it is roaming on its own or following your commands.

User Testing Videos

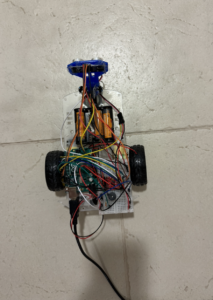

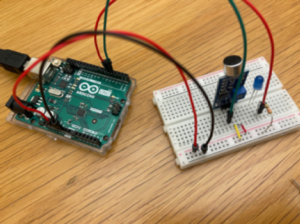

Key Components

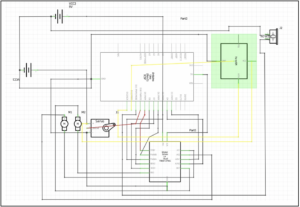

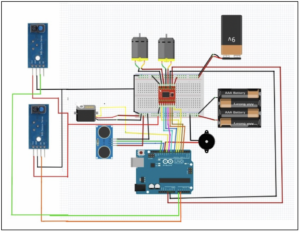

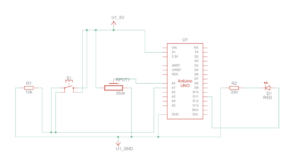

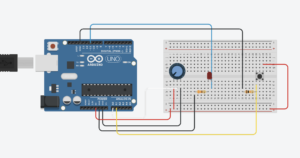

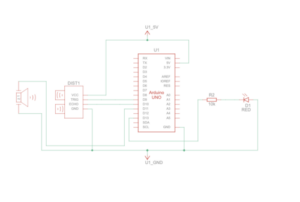

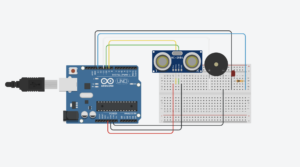

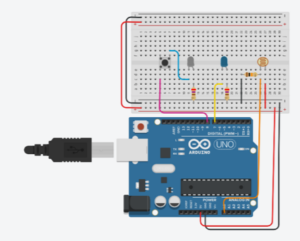

- Arduino Uno: The Arduino Uno acts as the brain of our robot. It’s a microcontroller responsible for processing data from sensors, making decisions, and controlling the motors and servo. The best part? It’s beginner-friendly, making it an ideal choice for those new to robotics.

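- Motor Driver: The powerhouse behind the robot’s movement. It is a TB6612FNG dual motor driver, controlled through SparkFun’s TB6612 library in the code. It sets the speed and direction of the two motors that drive the wheels, so the robot can follow its human companion smoothly.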

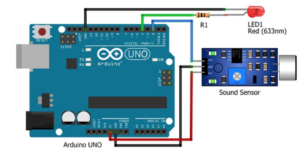

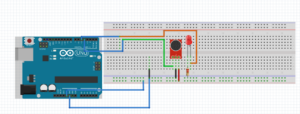

- Ultrasonic Sensor: The ultrasonic sensor serves as the robot’s eyes, allowing it to measure distances. This is crucial for avoiding collisions and maintaining a safe following distance.

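- IR Sensors: Our robot needs to be smart enough to navigate around obstacles and know which way to turn. Two IR sensors, one on the left and one on the right, emit infrared light and detect its reflection. They tell the robot whether something is on its left or its right, which is what allows it to turn, and they add to its obstacle detection.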

- Servo Motor: It rotates the ultrasonic sensor, giving the robot some flexibility. At start-up it sweeps from 0° to 180° and back.

- Motors and Wheels: For our robot to follow, it needs reliable motors and wheels. The motor driver ensures these components work seamlessly, making our robot mobile and ready for adventure.

- Piezo Speaker: Communication is key, even for robots. The piezo speaker plays a short melody at start-up, alerting users that the robot is ready to operate.
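To make the ultrasonic sensor’s job concrete, consider how it measures distance. A trigger/echo sensor reports distance as the width of an echo pulse, which is the round-trip travel time of the sound. The trig/echo pins in the code below suggest an HC-SR04-style part, but the exact model is an assumption. Halving that time and multiplying by the speed of sound gives the distance. The speed of sound is about 343 m/s at room temperature, or 0.0343 cm/µs. The helpers `echoToCm` and `cmToEcho` below are hypothetical names, written in plain C++ so the arithmetic can be checked off the board.

```cpp
// Speed of sound at roughly 20 °C, in centimetres per microsecond.
// Assumed constant: it rises by about 0.6 m/s per °C of air temperature.
constexpr double kSoundCmPerUs = 0.0343;

// Hypothetical helper: convert an echo pulse width (µs) into a distance (cm).
// The pulse covers the trip to the object and back, hence the division by 2.
double echoToCm(long echoMicros) {
    return echoMicros * kSoundCmPerUs / 2.0;
}

// Hypothetical inverse: the echo width (µs) expected for a given distance (cm).
double cmToEcho(double cm) {
    return cm * 2.0 / kSoundCmPerUs;
}
```

For example, `echoToCm(1000)` is about 17.15 cm. Assuming the thresholds in the code are centimetres, the 10 to 25 band in which the robot drives forward corresponds to echo pulses of roughly 583 to 1458 µs.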

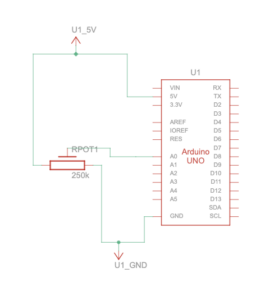

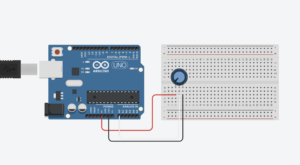

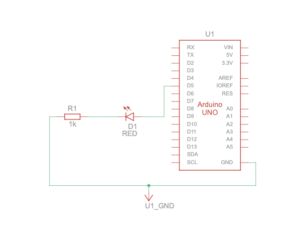

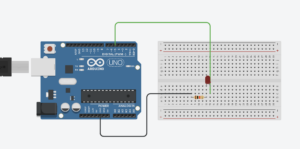

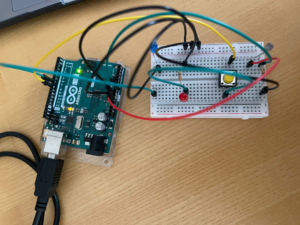

Schematic and Circuit Diagram

Implementation details

- Interaction Design: The interaction design of my project centers on a user-friendly, intuitive experience. The robot operates in two modes. In autonomous mode, it uses its sensors to follow the user around. In manual mode, the user controls its movement through a p5.js interface. Moving between the two is meant to be seamless. This covers the moments when you want a companion without any effort as well as the times when you prefer direct control.
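In the Arduino code further down, the two modes are not separate states. Every pass of `loop()` runs the sensor logic and then applies any byte received over serial. One way to make the hand-off explicit is to let a manual command take priority for a short hold window after it arrives. Once commands stop, the robot falls back to autonomous following. This is an assumption, not what the robot currently does. `Mode`, `selectMode`, and the 300 ms hold are hypothetical.

```cpp
#include <cstdint>

// Hypothetical operating modes for the robot.
enum class Mode { Autonomous, Manual };

// Manual control wins while the last serial command is younger than holdMs.
// After that, the robot falls back to sensor-based following.
// hasCommand is false until the first command has ever been received.
// Using 32-bit unsigned times (like millis()) keeps the subtraction correct
// across the counter's rollover.
Mode selectMode(uint32_t nowMs, uint32_t lastCommandMs, bool hasCommand,
                uint32_t holdMs = 300) {
    if (hasCommand && static_cast<uint32_t>(nowMs - lastCommandMs) < holdMs) {
        return Mode::Manual;
    }
    return Mode::Autonomous;
}
```

On the board, `nowMs` would come from `millis()`. The sketch would record `lastCommandMs` whenever `Serial.read()` returns a 0 or 1, and skip the sensor branches while `selectMode` returns `Mode::Manual`.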

- Arduino Description: The Arduino code for my project serves as the brain of my pet-like robot. It combines motor control with sensor input so the robot can either follow a person autonomously or be driven manually from p5.js. The sensors report things like how close an object or person is. Based on those readings, the code decides whether the robot should move forward, turn, or stop. It also plays a start-up melody and sweeps the servo, giving the robot a lively and interactive character.
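Both the start-up melody and the servo sweep in the `setup()` function below rely on blocking `delay()` calls. Their timing can therefore be worked out directly from the constants in the code. Each note waits (1000 / note type) × 1.3 ms, and the sweep writes 181 positions in each direction at 15 ms per step. The hypothetical helpers `melodyMs` and `servoSweepMs` below simply redo that arithmetic in plain C++.

```cpp
// Note types copied from the sketch: 4 = quarter note, 8 = eighth note.
const int kNoteDurations[] = {4, 8, 8, 4, 4, 4, 4, 4};

// Time setup() spends on the melody: each note lasts 1000 / type ms,
// and the loop waits 1.3x that (truncated to int) before the next note.
int melodyMs() {
    int total = 0;
    for (int type : kNoteDurations) {
        int noteDuration = 1000 / type;
        total += static_cast<int>(noteDuration * 1.30);
    }
    return total;
}

// Time spent sweeping the servo 0 -> 180 -> 0 in 1-degree steps.
// Each pass visits 181 positions because both endpoints are included.
int servoSweepMs(int stepDelayMs = 15) {
    const int positionsPerPass = 181;
    return 2 * positionsPerPass * stepDelayMs;
}
```

This gives 2274 ms for the melody and 5430 ms for the sweep. Roughly 7.7 s therefore pass before `Serial.begin()` runs and the main loop starts.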

Code Snippet:

```cpp
#include <SparkFun_TB6612.h>  // SparkFun TB6612FNG motor driver library
#include "pitches.h"          // NOTE_* frequency constants for the melody
#include <Servo.h>

// Motor driver pins
#define AIN1 3
#define BIN1 7
#define AIN2 4
#define BIN2 8
#define PWMA 5
#define PWMB 6
#define STBY 9

// Motor direction offsets (1 or -1) and default speed (0-255)
const int offsetA = 1;
const int offsetB = 1;
int speed = 100;

int brightness = 0;  // last command byte received over serial (0 = forward, 1 = backward)

// Initialize motor objects with the defined pins and offsets
Motor motor1 = Motor(AIN1, AIN2, PWMA, offsetA, STBY);
Motor motor2 = Motor(BIN1, BIN2, PWMB, offsetB, STBY);

// Ultrasonic sensor
int distance;
long timetaken;
double feet, inch;

// Ultrasonic sensor pins
#define echoPin 13
#define trigPin 12

// IR sensor pins
#define IRR A0  // right sensor
#define IRL A1  // left sensor

// Piezo speaker pin
int speaker = 11;

// Start-up melody and its note types (4 = quarter note, 8 = eighth note)
int melody[] = {
  NOTE_C4, NOTE_G3, NOTE_G3, NOTE_A3, NOTE_G3, 0, NOTE_B3, NOTE_C4
};
int noteDurations[] = {
  4, 8, 8, 4, 4, 4, 4, 4
};

// Servo motor
Servo myservo;
int pos = 0;  // current servo position in degrees

// ultra() is called in loop() but was not included in the original snippet.
// ASSUMPTION: this is a reconstruction using the usual trigger/echo timing.
// It returns the distance in centimetres.
int ultra() {
  digitalWrite(trigPin, LOW);
  delayMicroseconds(2);
  digitalWrite(trigPin, HIGH);  // a 10 µs pulse starts a measurement
  delayMicroseconds(10);
  digitalWrite(trigPin, LOW);
  timetaken = pulseIn(echoPin, HIGH);  // echo width in µs (round trip)
  distance = timetaken * 0.034 / 2;    // speed of sound ≈ 0.034 cm/µs
  return distance;
}

void setup() {
  // Ultrasonic sensor
  pinMode(trigPin, OUTPUT);
  pinMode(echoPin, INPUT);

  // IR sensors
  pinMode(IRL, INPUT);  // left IR sensor
  pinMode(IRR, INPUT);  // right IR sensor

  // Play the start-up melody
  for (int thisNote = 0; thisNote < 8; thisNote++) {
    // Note duration = one second divided by the note type,
    // e.g. quarter note = 1000 / 4, eighth note = 1000 / 8
    int noteDuration = 1000 / noteDurations[thisNote];
    tone(speaker, melody[thisNote], noteDuration);

    // Gap between notes: the note's duration plus 30%
    int pauseBetweenNotes = noteDuration * 1.30;
    delay(pauseBetweenNotes);
    noTone(speaker);  // stop the tone
  }

  // Sweep the servo from 0 to 180 degrees and back in 1-degree steps
  myservo.attach(10);
  for (pos = 0; pos <= 180; pos += 1) {
    myservo.write(pos);
    delay(15);  // give the servo time to reach the position
  }
  for (pos = 180; pos >= 0; pos -= 1) {
    myservo.write(pos);
    delay(15);
  }

  pinMode(A3, OUTPUT);

  // Serial link to p5.js
  Serial.begin(9600);
}

void loop() {
  int distance, readLeft, readRight;

  // Read the ultrasonic distance and send it to p5.js, one value per line
  distance = ultra();
  Serial.println(distance);

  // Read the IR sensors
  readRight = digitalRead(IRR);
  readLeft = digitalRead(IRL);

  // Autonomous movement based on the sensor readings
  if (readLeft == 1 && distance > 10 && distance < 25 && readRight == 1) {
    forward(motor1, motor2, speed);  // target in following range: move forward
  } else if (readLeft == 1 && readRight == 0) {
    // Only the right IR sensor reads 0. This is intended as a right turn;
    // the actual direction of left() depends on the motor wiring.
    left(motor1, motor2, speed);
  } else if (readLeft == 0 && readRight == 1) {
    // Only the left IR sensor reads 0. This is intended as a left turn.
    right(motor1, motor2, speed);
  } else if (readLeft == 1 && readRight == 1) {
    brake(motor1, motor2);  // both IR sensors read 1, target out of range: stop
  } else if (distance > 5 && distance < 10) {
    brake(motor1, motor2);  // close enough: stop
  } else if (distance < 5) {
    back(motor1, motor2, speed);  // too close: back away
  }

  // Manual control from p5.js: one raw byte per command
  if (Serial.available() > 0) {
    brightness = Serial.read();  // oldest unread byte (0-255)
    if (brightness == 0) {
      forward(motor1, motor2, 200);  // 0: drive forward
    } else if (brightness == 1) {
      back(motor1, motor2, 200);  // 1: drive backward
    }
  }
}
```
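The `if`/`else if` chain in `loop()` is easier to reason about as a pure function. The version below reproduces the same conditions in the same order so they can be checked without hardware. The `Action` enum and the `decide` name are hypothetical, and the labels follow the library calls, not the physical turn direction. Writing it out makes one consequence of the ordering visible. When both IR sensors read 1, the fourth branch brakes before the distance-based back-off is reached, so backing away only happens when both IR sensors read 0. When both read 0 and no distance branch matches, nothing is called and the motors keep their last command.

```cpp
// Hypothetical labels for the motor call each branch of loop() makes.
enum class Action { Forward, Left, Right, Brake, Back, NoChange };

// Mirrors the branch order of loop(): readLeft/readRight are the IR
// digitalRead() values, distance is the ultrasonic reading.
Action decide(int readLeft, int readRight, int distance) {
    if (readLeft == 1 && distance > 10 && distance < 25 && readRight == 1) {
        return Action::Forward;
    } else if (readLeft == 1 && readRight == 0) {
        return Action::Left;   // loop() calls left() here
    } else if (readLeft == 0 && readRight == 1) {
        return Action::Right;  // loop() calls right() here
    } else if (readLeft == 1 && readRight == 1) {
        return Action::Brake;
    } else if (distance > 5 && distance < 10) {
        return Action::Brake;
    } else if (distance < 5) {
        return Action::Back;
    }
    // No branch matched: the motors keep whatever they were last told.
    return Action::NoChange;
}
```

For example, `decide(1, 1, 3)` brakes, while `decide(0, 0, 3)` backs up. If the intent was for the robot to back away whenever something is closer than 5 units, moving the `distance < 5` check to the top of the chain would achieve that.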

- Description of P5: The p5.js sketch gives my robot a dual-feature interface. First, it visualizes the sensor data. It shows a value that decreases as you get closer to the robot and increases as you move away, mirroring what the robot perceives in real time. Second, it lets you control the robot’s movement: moving the mouse to one side of the screen drives the robot forward or backward. Together, these give a straightforward, interactive way to both watch and steer the robot.

Code Snippet:

```javascript
let serial;               // serial port object (p5.serialport library)
let latestData = "wait";  // latest line received from the Arduino
let val = 0;              // command byte sent to the Arduino (0 = forward, 1 = backward)
let colorValue = 0;       // toggled by mouse clicks; commands are sent while it is 0

function setup() {
  createCanvas(1000, 800);
  textSize(18);

  // Serial constructor
  serial = new p5.SerialPort();
  // Serial port to use: change this to match your Arduino
  serial.open("/dev/tty.usbmodem141101");
  // Call gotData() whenever serial data arrives
  serial.on("data", gotData);
}

// Callback for processing received serial data
function gotData() {
  let currentString = serial.readLine();  // read one line as a string
  currentString = trim(currentString);    // trim() returns a new string, so keep the result
  if (!currentString) return;             // ignore empty or incomplete lines
  console.log(currentString);             // log for debugging
  latestData = currentString;             // remember the latest reading
}

function draw() {
  background(211, 215, 255);
  fill(102, 11, 229);

  // Map the latest distance reading to the height of the robot's moving bar.
  // Number() converts the text; non-numeric text such as "wait" falls back to 0.
  let rotDeg = map(Number(latestData) || 0, 0, 1000, 0, 10000);

  // Show the start screen until the space bar has been pressed
  if (key != " ") {
    textSize(30);
    fill(0, 0, 0);
    rect(0, 0, 1000, 800);  // black rectangle covering the canvas
    fill(200, 200, 200);    // text color
    text("PRESS SPACE BAR TO START THE HFR", width / 4, height / 2);
  } else {
    // Main interaction screen
    textSize(18);

    // Forward area (right edge)
    fill(102, 11, 229);
    rect(890, 0, 110, 1000);
    fill(255, 245, 224);
    text("FORWARD", 900, 450);

    // Backward area (left edge)
    fill(102, 11, 229);
    rect(0, 0, 110, 1000);
    fill(255, 255, 255);
    text("BACKWARD", 0, 450);

    // Robot body and the bar that tracks the distance reading
    fill(35, 45, 63);
    rect(500, -100, 100, 600);     // body
    fill(180, 101, 229);
    rect(500, 500, 100, -rotDeg);  // moving part, drawn upward from y = 500

    // Base and wheels
    fill(200, 120, 157);
    rect(500, 500, 100, 80);       // base of the moving part
    fill(0, 0, 0);
    rect(460, 580, 40, -30);       // left wheel
    rect(600, 580, 40, -30);       // right wheel

    fill(255, 255, 255);
    text(latestData, 540, 560);    // latest reading

    // Control instructions
    fill("black");
    text("Control the Robot:\n\n", 470, 600);
    text(
      "Forward Movement:\n" +
        "- 'Forward' area on right\n" +
        "- Click to move forward\n" +
        "- Click again to stop\n\n",
      670,
      650
    );
    text(
      "Backward Movement:\n" +
        "- 'Backward' area on left\n" +
        "- Click to move backward\n" +
        "- Click again to stop\n\n",
      150,
      650
    );
    text("Move mouse to desired side and click to control movement!", 300, 770);
    textStyle(BOLD);

    // Unless paused by a click, send a command every frame based on mouse position
    if (!colorValue) {
      if (mouseX <= width / 2) {
        val = 1;  // left half: backward
        serial.write(val);
        console.log("Left");
      } else {
        val = 0;  // right half: forward
        serial.write(val);
        console.log("Right");
      }
    }
  }

  // Cursor marker
  fill(255, 255, 255);
  ellipse(mouseX, mouseY, 10, 10);
}

// Each click toggles between sending commands (0) and pausing them (255)
function mouseClicked() {
  if (colorValue === 0) {
    colorValue = 255;
  } else {
    colorValue = 0;
  }
}
```
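The bar that grows and shrinks in the sketch comes from p5.js’s `map()`, which rescales a value linearly from one range to another. With the arguments used in `draw()`, it reduces to multiplying the reading by 10:

$$
\operatorname{map}(v, a, b, c, d) = c + (v - a)\,\frac{d - c}{b - a},
\qquad
\operatorname{map}(v, 0, 1000, 0, 10000) = 10\,v .
$$

So a reading of 25 draws a bar 250 pixels tall above the base at y = 500. Any reading above 50 pushes the bar past the top of the canvas, because `map()` does not clamp its result unless asked to.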

- Communication between Arduino and p5.js:

In this project, the Arduino sends its ultrasonic distance reading to p5.js, which displays it as a visual representation of proximity: the closer you are to the sensor, the lower the number, and vice versa. In the other direction, p5.js sends control commands to the Arduino (a byte 0 for forward, 1 for backward), letting the user drive the robot forward and backward. Both directions share one serial connection at 9600 baud. The sketch talks to the port through the p5.serialport library, with an application called SerialControl connecting the serial port to p5.js.
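The two directions use different encodings. The Arduino prints each distance as text followed by a line ending (`Serial.println` appends `\r\n`). The p5.js sketch sends a single raw byte, 0 or 1, which `Serial.read()` compares against directly. Serial data can arrive in arbitrary chunks, so the receiving side has to buffer until it sees a line ending; in p5.js, `serial.readLine()` takes care of this. The C++ class below is a minimal sketch of that framing step. `LineFramer` and its `feed` method are hypothetical names, not part of p5.serialport.

```cpp
#include <string>
#include <vector>

// Hypothetical line framer: accumulates bytes until '\n' and returns the
// complete lines seen so far, with any trailing '\r' removed.
class LineFramer {
public:
    std::vector<std::string> feed(const std::string& chunk) {
        std::vector<std::string> lines;
        for (char c : chunk) {
            if (c == '\n') {
                if (!pending_.empty() && pending_.back() == '\r') {
                    pending_.pop_back();
                }
                lines.push_back(pending_);
                pending_.clear();
            } else {
                pending_ += c;
            }
        }
        return lines;
    }

private:
    std::string pending_;  // bytes received since the last line ending
};
```

Feeding it `"12\r\n3"` returns `{"12"}` and keeps `"3"` buffered. A following `"4\r\n"` then completes the reading `"34"`, instead of producing two bogus values.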

Something I am Proud of

I’m particularly proud of the hardware implementation aspect of my robot project. It was a journey that demanded considerable time, effort, and a variety of materials to reach the final outcome. The process of assembling and fine-tuning the hardware, from selecting the right sensors and motors to designing and building the physical structure, was both challenging and rewarding. Seeing the components come together into a functioning robot was a testament to the hard work and dedication put into this project. This aspect of the project stands out for me as a significant achievement.

Challenges Faced

One of the challenges I faced was with the P5.js control interface. When trying to remotely control the robot, it moved extremely slowly, and at times, it would completely stop responding, even though I was actively trying to move it. I spent a significant amount of time troubleshooting this issue, delving into various aspects of the code and communication protocols. Eventually, I came to realize that this might be a limitation within the system, possibly related to lag or processing delays, which seem to occur quite frequently.
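One plausible contributor to that lag is a growing backlog of commands. This is a hypothesis, not a confirmed diagnosis. The p5.js sketch calls `serial.write()` on every frame, and p5.js runs `draw()` about 60 times per second by default. `loop()`, by contrast, reads only one byte per pass. Each pass also waits on the ultrasonic echo, and if `ultra()` measures it with `pulseIn()`, as ultrasonic code usually does, that call can wait up to one second by default when no echo arrives. Bytes can then pile up in the Uno’s 64-byte receive buffer, so the robot acts on commands that are increasingly out of date. On top of that, the sensor branches can overwrite each manual command on the very next pass. A common mitigation is to read everything that is waiting and act only on the newest byte. The sketch below models that idea in plain C++, with a `std::deque` standing in for the serial buffer. `oldestCommand` and `latestCommand` are hypothetical helpers.

```cpp
#include <deque>

// Stand-in for the serial receive buffer: oldest byte at the front.
using RxBuffer = std::deque<int>;

// What loop() does now: take only the oldest waiting byte (-1 if none).
int oldestCommand(RxBuffer& rx) {
    if (rx.empty()) return -1;
    int b = rx.front();
    rx.pop_front();
    return b;
}

// Hypothetical alternative: drain everything and keep only the newest byte,
// so a backlog of old commands cannot delay the current one.
int latestCommand(RxBuffer& rx) {
    int latest = -1;
    while (!rx.empty()) {
        latest = rx.front();
        rx.pop_front();
    }
    return latest;
}
```

On the Arduino, the same pattern would be `while (Serial.available() > 0) { brightness = Serial.read(); }` before acting on `brightness`. On the p5.js side, writing only when the command changes would shrink the backlog further.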

Another challenge I encountered involved the power supply for the robot. Initially, I had a battery pack with four cells, totaling 6V, but my robot only required 4.5V. To adjust the voltage, I removed one cell and connected a wire to bridge the gap. However, this setup proved problematic; as the robot moved, the wire would shift its position, causing intermittent power loss and loss of control. The robot would continue moving uncontrollably until I reconnected the wire. To resolve this, I came up with a creative solution. I crafted a connector using aluminum foil, shaping it to fit securely on both ends of the battery compartment. This improvised connector ensured a stable connection, eliminating the issue of the wire shifting during movement. With this fix, the robot now operates smoothly without any control issues.

Future Improvements

In terms of future improvements for my project, one key area I’d like to focus on is enhancing the P5.js sketch to make it more interactive and engaging. I plan to introduce multiple pages within the sketch, each offering different functionalities or information, to create a more comprehensive and user-friendly interface. Additionally, I’m considering integrating sound into the P5.js environment. This could include audio feedback for certain actions or ambient sounds to make the interaction with the robot more immersive and enjoyable. These improvements aim to not only enrich the user experience but also add layers of complexity and sophistication to the project.

IM Show!

It was an honor to showcase my human-following robot at the IM show. Seeing the enthusiasm and curiosity of students and faculty members as they passed by to test my robot was a heartwarming experience. I was particularly thrilled to witness the interest of professors who have been integral to my learning journey. Among them was Evi Mansor, who taught me in the communications lab; her impressed reaction was a significant moment for me. Additionally, Professor Michael Shiloh, a well-known figure in the IM department, showed keen interest in my project. A special and heartfelt thanks goes to Professor Aya Riad, whose guidance and teaching were pivotal in developing the skills necessary to create such an innovative and successful outcome. The support and the lively interest of the audience made the event a memorable highlight of my academic journey.

Resources

- https://youtu.be/yAV5aZ0unag?si=ZzwIOrRLBYmrv34C

- https://youtu.be/F02hrB09yg0?si=d40SgnSfkBMgduA8

- https://youtu.be/suLQpNPLzDo?si=G2rJR6YrsynycGoK

- https://circuitdigest.com/microcontroller-projects/human-following-robot-using-arduino-and-ultrasonic-sensor

- https://projecthub.arduino.cc/mohammadsohail0008/human-following-bot-f139db