Initially, my plan was to create an engaging game by incorporating various elements, including sprites, background parallax scrolling, object-oriented programming (OOP), and TensorFlow.js integration for character control through either speech recognition or body motion detection.

However, I have changed my midterm project idea for several reasons. The game I had planned would likely have been a remake of an existing game, which did not feel original enough. My goal in taking this class is to challenge myself creatively, and Thursday’s class gave me insights that shaped the ideas I am going to implement in my project. The piece I was missing was deciding which machine learning model to use. After watching Professor Aya’s demonstration of the PoseNet model in class, my project’s direction became clearer: I have moved from creating a game to crafting a digital art piece.

As I write this report on October 7 at 4:29 PM, I have been experimenting with the handpose model from the ml5 library. Handpose is a machine-learning model that enables palm detection and hand-skeleton finger tracking in the browser. It can detect one hand at a time, providing 21 3D hand key points that describe important palm and finger locations.

I took a systematic approach, first checking the results when a hand is present in the frame and when it isn’t.
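
The presence check can be sketched as a small helper. This is an illustration rather than the project's actual code: it assumes the predictions arrive as an array that is empty when no hand is detected, and the `hasHand` name and the `minConfidence` cutoff of 0.8 are hypothetical.

```javascript
// Returns true when the predictions array holds a hand that the model
// is reasonably sure about. minConfidence is an assumed cutoff.
function hasHand(predictions, minConfidence = 0.8) {
  if (!Array.isArray(predictions) || predictions.length === 0) {
    return false; // nothing detected in this frame
  }
  // Only the first entry is inspected, since one hand is tracked at a time.
  return predictions[0].handInViewConfidence >= minConfidence;
}
```

Calling `hasHand(predictions)` at the top of the draw loop would let the sketch skip drawing when the frame is empty.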

My next step was to verify the accuracy of the points obtained from the model. I drew green ellipses at the model’s points to check that they corresponded to the correct locations on the hand. I noticed that the points appeared mirrored relative to the hand on screen, which was a side effect of mirroring the webcam feed.

I resolved this issue by placing the drawing function for the points between the push() and pop() calls I used to mirror the feed, so that the same transformation applies to both the video and the points.
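
The fix can be sketched as below. This is a hedged illustration and not the exact code from the sketch: it is written in p5 instance style, so `p` stands for the p5 instance, and it assumes the video is drawn at the same size as the canvas. The names `drawMirrored` and `mirrorX` are hypothetical.

```javascript
// Draws the webcam feed and the landmarks inside one push()/pop() pair,
// so the horizontal flip applies to both.
function drawMirrored(p, video, landmarks) {
  p.push();
  p.translate(p.width, 0); // move the origin to the right edge
  p.scale(-1, 1);          // flip the x axis
  p.image(video, 0, 0, p.width, p.height);
  p.fill(0, 255, 0);
  p.noStroke();
  for (const [x, y] of landmarks) {
    p.ellipse(x, y, 10, 10); // drawn in the same flipped space as the video
  }
  p.pop();
}

// Alternative: flip a single x coordinate by hand instead of using transforms.
function mirrorX(x, width) {
  return width - x;
}
```

Keeping the points inside the transformed block avoids having to flip each coordinate manually.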

I also discovered that the object returned from the prediction includes a bounding box for the hand. I drew the box to observe how it changed with the hand’s movements. I plan to use the values returned in topLeft and bottomRight to control the volume of the soundtrack I intend to use in the application.
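
One possible mapping from the bounding box to a volume level is sketched below. The mapping itself is an assumption, since the plan above does not fix one: here the box area relative to the canvas area is used, so a larger (closer) hand plays louder. The function name `volumeFromBoundingBox` is hypothetical.

```javascript
// Converts a handpose bounding box into a volume between 0 and 1.
// Assumes topLeft and bottomRight are [x, y] pairs in canvas pixels.
function volumeFromBoundingBox(boundingBox, canvasWidth, canvasHeight) {
  const [x1, y1] = boundingBox.topLeft;
  const [x2, y2] = boundingBox.bottomRight;
  const boxArea = Math.abs(x2 - x1) * Math.abs(y2 - y1);
  const canvasArea = canvasWidth * canvasHeight;
  // Clamp, because the box can extend past the edges of the frame.
  return Math.min(1, Math.max(0, boxArea / canvasArea));
}
```

The result could then be passed to the soundtrack's volume control, for example `setVolume()` if the track is loaded with p5.sound.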

I have also spent time brainstorming how to use the information from the model to create the piece. The relevant information I receive from the model includes landmarks, boundingBox, and handInViewConfidence. I am weighing whether to compute a single point from the model’s points or to use all the points, so I have decided to test both approaches and see which produces the better result.
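
For the single-point approach, one option is to average all the landmarks. This is only a sketch of that idea under the assumption that each landmark is an `[x, y, z]` array; the `centroid` name is hypothetical, and other reductions (for example, using only the palm base) would work just as well.

```javascript
// Averages the landmarks into one [x, y, z] point.
// Returns null when there are no landmarks to average.
function centroid(landmarks) {
  if (!landmarks || landmarks.length === 0) return null;
  const sum = [0, 0, 0];
  for (const [x, y, z] of landmarks) {
    sum[0] += x;
    sum[1] += y;
    sum[2] += z;
  }
  return sum.map((total) => total / landmarks.length);
}
```

The all-points approach would skip this step and pass every landmark on to the drawing code.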

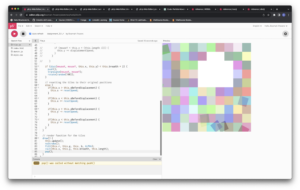

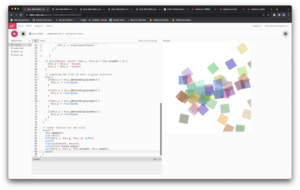

In light of this, I created a new sketch to plan how to utilize the information from the model. In my first attempt, I created a Point class that takes x, y, and z coordinates, along with the handInViewConfidence. The x, y, and z coordinates are mapped to values between 0 and 255, while the handInViewConfidence is mapped to a value between 90 and 200 (these values are arbitrary). All this information is used to create two colors, which are linearly interpolated to generate a final color.
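
The color mapping can be sketched without p5 as plain functions. Several details here are assumptions made for illustration, because the description above leaves them open: the z range of -100 to 100, the way the second color is built (by rotating the channels of the first), the fixed blend amount of 0.5, and the use of the mapped confidence as the alpha value. The names `mapRange`, `lerp`, and `pointColor` are hypothetical.

```javascript
// Maps value from [inMin, inMax] to [outMin, outMax], clamped to the output range.
function mapRange(value, inMin, inMax, outMin, outMax) {
  const t = (value - inMin) / (inMax - inMin);
  const clamped = Math.min(1, Math.max(0, t));
  return outMin + clamped * (outMax - outMin);
}

// Linear interpolation between a and b.
function lerp(a, b, t) {
  return a + (b - a) * t;
}

// Builds an [r, g, b, alpha] color for one landmark.
function pointColor(x, y, z, confidence, width, height) {
  const r = mapRange(x, 0, width, 0, 255);
  const g = mapRange(y, 0, height, 0, 255);
  const b = mapRange(z, -100, 100, 0, 255);        // assumed z range
  const alpha = mapRange(confidence, 0, 1, 90, 200);
  const first = [r, g, b];
  const second = [b, r, g];                         // assumed second color
  const mixed = first.map((channel, i) => lerp(channel, second[i], 0.5));
  return [...mixed, alpha];
}
```

In a p5 sketch the same steps could use the built-in `map()`, `color()`, and `lerpColor()` functions instead.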

After creating the sketch for the Point class, I incorporated it into the existing sketch for drawing the landmarks on the hand. I adjusted the drawKeyPoint() function to create points that were added to an array of points. The point objects were then drawn on the canvas from the array.

    // A function to create points for the detected keypoints
    function loadKeyPoints() {
      for (let i = 0; i < hand.length; i += 1) {
        const prediction = hand[i];
        for (let j = 0; j < prediction.landmarks.length; j += 1) {
          const keypoint = prediction.landmarks[j];
          points.push(new Point(keypoint[0], keypoint[1],
            keypoint[2], prediction.handInViewConfidence));
        }
      }
    }

I also worked on creating different versions of the sketch. For the second version I created, I used curveVertex() instead of point() in the draw function of the Point class to see how the piece would turn out. I liked the outcome, so I decided to include it as a different mode in the program.
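
The curve mode can be sketched as follows. This is an assumed arrangement rather than the actual Point class: it is written in p5 instance style (`p` is the p5 instance), it expects each point to expose `x` and `y`, and the `drawCurveThroughPoints` name is hypothetical. The first and last points are repeated because `curveVertex()` treats the outermost vertices as control points and would otherwise not draw the curve all the way to them.

```javascript
// Connects the points with one smooth curve instead of drawing separate dots.
function drawCurveThroughPoints(p, points) {
  if (points.length < 2) return; // a curve needs at least two points
  const first = points[0];
  const last = points[points.length - 1];
  p.noFill();
  p.beginShape();
  p.curveVertex(first.x, first.y); // leading control point
  for (const pt of points) {
    p.curveVertex(pt.x, pt.y);
  }
  p.curveVertex(last.x, last.y);   // trailing control point
  p.endShape();
}
```

Switching between this function and a `point()`-based one is one way to offer the two versions as separate modes.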

In my efforts to make the sketch more interactive, I have also been trying the SoundClassification model from the ml5 library. I tried both the “SpeechCommands18w” model and my own custom-trained speech commands model. However, neither has been accurate enough: I often have to repeat the same command several times before the model recognizes it. I am exploring alternative solutions and ways to improve the model’s accuracy.
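
One thing worth experimenting with is how strictly results are accepted. The helper below is a sketch under assumptions, not a fix I have verified: it assumes the classifier reports an array of `{ label, confidence }` objects, and the `acceptCommand` name and the 0.75 default cutoff are hypothetical. Lowering the cutoff makes commands easier to trigger at the cost of more false positives.

```javascript
// Returns the recognized command, or null when the top result is either
// below the confidence cutoff or not one of the commands the sketch uses.
function acceptCommand(results, allowed, minConfidence = 0.75) {
  if (!results || results.length === 0) return null;
  // Pick the highest-confidence result without assuming the array is sorted.
  const top = results.reduce((best, r) => (r.confidence > best.confidence ? r : best));
  if (top.confidence < minConfidence) return null;
  return allowed.includes(top.label) ? top.label : null;
}
```

Restricting the accepted labels to the few commands the piece needs also keeps unrelated words from triggering anything.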

Although I am still working on the core of my project, I have begun designing the landing page and other sub-interfaces of my program. Below are sketches for some of the pages.

Summary

The progress I’ve made so far involves shifting my initial plan from creating a game to crafting a digital art piece. This change came after attending a class that provided valuable insights, particularly in selecting a machine learning model. I’ve been working with the handpose model, addressing issues like mirroring points and exploring the use of bounding box data for sound control.

I’m also brainstorming ways to utilize landmarks and handInViewConfidence from the model to create the art piece, testing various approaches to mapping data to colors. Additionally, I’ve been experimenting with the SoundClassification model from the ml5 library, though I’ve encountered accuracy challenges.

While the core of my project is still in progress, I’ve started designing the program’s landing page and sub-interfaces. Overall, I’ve made progress in refining my project idea and addressing technical aspects while exploring creative possibilities.

Below is a screenshot of the rough work I’ve been doing.