Concept:

I chose this prompt because I have always wanted a pet, but my parents won’t let me have one. This project is a sort of make-up for the thing I always wanted but never got.

The concept is simple. The user, as the pet's owner, interacts with the cat by “talking” to her (typing messages to “say” to the cat). After the cat “hears” what the human says, she evaluates whether the human is being mean or not. This evaluation is done through the GPT-3.5-Turbo API. If the cat thinks the human is being mean, she becomes less happy, which is represented by lowering her mood on a scale of 1 to 10; if not, her mood rises.

For now, the user can do two main things with the pet: talk to her and observe her reaction, or try to pet her. When the user talks to her, a buzzer on the physical CyberCat starts beeping as if responding. The happier the cat is, the slower and calmer her beeping; when she is very angry, the beeping becomes fast and frantic. When you try to pet her, she decides what to do based on her mood. If she is happy (mood 5 or above), she comes toward you until she senses you within 20 centimeters. If she is not happy, she backs away from you until you are at least 50 centimeters apart.
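The mood rules above can be written as two small functions. This sketch is in JavaScript, like the p5 side of the project. On CyberCat, the equivalent logic would presumably sit in the Arduino's talkResponse() and petResponse() functions, whose bodies are not shown in this write-up. The function names and the beep timing range (100 ms to 1000 ms between beeps) are hypothetical. The sketch also assumes the 50 in the back-away rule is a distance in centimeters, mirroring the 20 cm approach threshold.

```javascript
// Hypothetical timing range: illustrative values, not taken from CyberCat.
const FASTEST_BEEP_MS = 100;  // mood 1: rapid, agitated beeping
const SLOWEST_BEEP_MS = 1000; // mood 10: slow, calm beeping

// Round and clamp a value onto the 1-10 mood scale.
function clampMood(mood) {
  return Math.min(10, Math.max(1, Math.round(mood)));
}

// Linear map from mood to the pause between beeps: happier means slower.
function beepIntervalMs(mood) {
  const m = clampMood(mood);
  return FASTEST_BEEP_MS + ((m - 1) * (SLOWEST_BEEP_MS - FASTEST_BEEP_MS)) / 9;
}

// One decision per distance reading (distanceCm from the distance sensor).
// Happy (mood >= 5): approach until the user is within 20 cm.
// Unhappy (mood < 5): back away until the user is at least 50 cm away.
function petAction(mood, distanceCm) {
  if (clampMood(mood) >= 5) {
    return distanceCm > 20 ? "approach" : "stop";
  }
  return distanceCm < 50 ? "retreat" : "stop";
}
```

For example, beepIntervalMs(4) gives 400 ms between beeps. Calling petAction() once per distance reading and braking the motors when it returns "stop" produces the approach or back-away behavior. The linear mapping is only one choice; any function that grows with mood would match the description.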

I also put an “easter egg” in the project: if you type “dance” into the message box, the cat does a little “dance”, turning back and forth as if trying to catch her own tail.

Video Introduction to Interaction:

Implementation:

The interaction design is simple. The talk function is a one-way transmission. The user types on the keyboard, the p5 script processes the input and sends a message to the Arduino, and the Arduino responds by making the buzzer beep. The pet function adds a processing step on top of that. After the command reaches the Arduino, the Arduino first checks a condition (the cat's mood and the distance sensor reading) and then acts in response to it.
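The functions below are a rough sketch of the p5 side of this flow. They choose a command from the button the user pressed and format the line sent to the Arduino. The command codes 1 (talk), 2 (pet) and 3 (dance) come from the Arduino loop shown later. The following details are assumptions:

- the button names;
- the case-insensitive “dance” check on the talk button;
- the comma-separated "cmd,mood\n" format.

That format was chosen so the Arduino's two Serial.parseInt() calls, followed by a newline check, can read it.

```javascript
// Command codes as used in the Arduino loop: 1 = talk, 2 = pet, 3 = dance.
const CMD_TALK = 1;
const CMD_PET = 2;
const CMD_DANCE = 3;

// Hypothetical: pick the command for a button press. Typing "dance"
// (the easter egg) replaces the talk command; case and spaces are ignored.
function chooseCommand(button, typedText) {
  if (button === "talk") {
    return typedText.trim().toLowerCase() === "dance" ? CMD_DANCE : CMD_TALK;
  }
  if (button === "pet") return CMD_PET;
  return null; // e.g. the instructions button sends nothing to the Arduino
}

// Hypothetical wire format: two integers and a newline, which the Arduino
// reads with Serial.parseInt() twice and then checks for '\n'.
function formatMessage(cmd, mood) {
  return `${cmd},${mood}\n`;
}
```

For example, pressing talk with “Dance” typed in and a mood of 8 would send "3,8\n".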

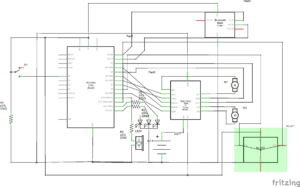

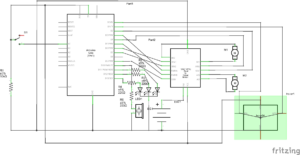

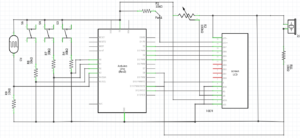

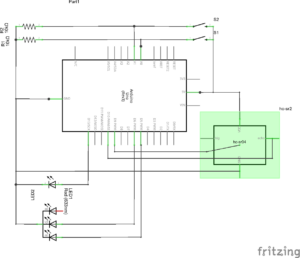

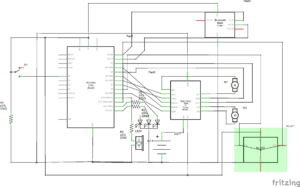

This is the schematic of the project. The pin numbers do not necessarily match the real build, but the wiring idea is the same. It adds 7 parts to the Arduino: an HC-06 Bluetooth module, a motor driver, 2 motors driven by the motor driver, a distance sensor, a buzzer, and an LED. Each part has one job:

- the LED signals that the serial connection is active;
- the Bluetooth module provides the wireless link between the Arduino and the PC;
- the motors move the cat;
- the distance sensor measures how far away you are;
- the buzzer is her “voice”.

Many parts of the Arduino code are worth mentioning, but I will focus on one: the loop logic. The code goes like this:

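```cpp
// Main loop. p5 sends one line per interaction containing two numbers,
// the command and the cat's mood, terminated by '\n'.
// state: 1 = connected and idle, 2 = busy executing a command.
void loop() {
  if (Serial.available() > 0) {
    state = 1;
    analogWrite(greenPin, 255);   // LED on: serial link is active

    cmd = Serial.parseInt();      // first number: command to execute
    mood = Serial.parseInt();     // second number: cat's mood (1-10)

    if (Serial.read() == '\n') {  // act only on a complete line
      if (cmd == 1) {
        // talk: beep according to mood
        state = 2;
        delay(1000);
        talkResponse(mood);
        delay(1000);
        noTone(buzPin);
        state = 1;
        recieved = 1;
        // Serial.println(a, b) treats b as a number base, so send the
        // two values explicitly as "recieved,state".
        Serial.print(recieved);
        Serial.print(',');
        Serial.println(state);
      } else if (cmd == 2) {
        // pet: move according to distance and mood
        state = 2;
        delay(1000);
        petResponse(mood);
        state = 1;
        recieved = 1;
        Serial.print(recieved);
        Serial.print(',');
        Serial.println(state);
      } else if (cmd == 3) {
        // dance: turn one way, then the other, three times
        state = 2;
        for (int i = 0; i < 3; i++) {
          motorDrive(motor1, turnCW, 192);
          motorDrive(motor2, turnCW, 192);
          delay(1000);
          motorDrive(motor1, turnCCW, 192);
          motorDrive(motor2, turnCCW, 192);
          delay(1000);
        }
        motorBrake(motor1);
        motorBrake(motor2);
        state = 1;
        recieved = 1;
        Serial.print(recieved);
        Serial.print(',');
        Serial.println(state);
      } else {
        state = 1;                // unknown command: stay idle
      }
    }
  }
}
```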

The loop above also implements the communication between p5 and Arduino. Whenever an interaction button is pressed, the p5 script sends the Arduino a message. The first number in the message is the command to execute, and the second is the cat's current mood. The Arduino uses the command to pick a branch of the if statement and executes it. It then sends back two values, a “received” flag and its current state, to tell p5 that the command has finished and that more commands can be sent.
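On the p5 side, this handshake can be sketched as a small object that refuses to send a new command until the Arduino reports that the previous one finished. The CommandChannel class is hypothetical. Its write callback stands in for whatever serial library the sketch actually uses. It assumes each reply from the Arduino is a line whose first value is the received flag, such as "1,1".

```javascript
// Hypothetical helper: lets only one command be "in flight" at a time.
class CommandChannel {
  // write: callback that sends a string over the serial link
  // (a stand-in for whatever serial library the sketch uses).
  constructor(write) {
    this.write = write;
    this.busy = false;
  }

  // Send one preformatted command line, e.g. "1,7\n".
  // Returns false (and sends nothing) while the Arduino is still busy.
  send(message) {
    if (this.busy) return false;
    this.busy = true;
    this.write(message);
    return true;
  }

  // Call with every complete line read back from the Arduino, e.g. "1,1".
  // A first value of 1 (the "received" flag) means the command finished.
  handleLine(line) {
    const received = parseInt(line.split(",")[0], 10);
    if (received === 1) this.busy = false;
  }
}
```

For example, calling send("2,7\n") while a talk command is still running returns false, so a button handler can simply ignore that click.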


The p5 sketch recalculates element positions whenever the canvas is resized, so it also displays correctly in full-screen mode. The sketch consists of a start screen followed by the main page. The main page has a text area and three buttons: two send commands and the third shows the instructions. The hardest part was the GPT-3.5-Turbo API. It took me a while to learn how to call it and what prompt to give it so that it responds the way I want. The code is shown below:

```javascript
const gpt3Endpoint = 'https://api.openai.com/v1/chat/completions';

// Send the user's message plus the pet instructions to GPT-3.5-Turbo and
// update chatOutput and currentMood (globals defined elsewhere in the sketch).
async function makeOpenAIRequest(userInput) {
  const messages = [
    {
      role: 'user',
      content: userInput + "\n\nYou are a pet, not an assistant. Evaluate my tone and give a response. Your mood is " + currentMood + ". Your mood fluctuates from a range of 1 to 10 where 1 is unhappy and 10 is happy. If the mood is already 10 or 1, no longer increase or decrease it. If you think I am being mean or unfriendly, decrease your mood. Otherwise, increase it. Respond with a format of 'Your cat felt ... and ..., and she ...(something a cat would do). pet mood: ...'. What comes after 'pet mood: ' should always be a number of your current mood."
    },
  ];

  try {
    const response = await fetch(gpt3Endpoint, {
      method: 'POST',
      headers: {
        'Content-Type': 'application/json',
        'Authorization': `Bearer ${apiKey}`,
      },
      body: JSON.stringify({
        model: 'gpt-3.5-turbo',
        messages: messages,
        max_tokens: 150,
      }),
    });

    const data = await response.json();

    if (data.choices && data.choices.length > 0 && data.choices[0].message && data.choices[0].message.content) {
      chatOutput = data.choices[0].message.content;
      // The mood is the number after the last ':' ("... pet mood: 7").
      // Taking the last piece keeps an extra ':' earlier in the reply from
      // shifting the index; a non-numeric answer leaves the mood unchanged.
      const splitString = split(chatOutput, ':');
      const newMood = int(splitString[splitString.length - 1]);
      if (!isNaN(newMood)) {
        currentMood = constrain(newMood, 1, 10);
      }
    } else {
      console.error('Invalid OpenAI response:', data);
      chatOutput = 'No valid response from OpenAI.';
    }
  } catch (error) {
    console.error('Error:', error);
    chatOutput = 'An error occurred while generating chat.';
  }
}

let isRequesting = false;

// Ignore new submissions while a request is still in flight.
async function generateChat(typedText) {
  if (isRequesting) {
    return; // Don't initiate a new request if one is already ongoing
  }
  isRequesting = true;
  if (typedText.trim() !== "") {
    await makeOpenAIRequest(typedText);
  } else {
    alert('Please enter a prompt before generating chat.');
  }
  isRequesting = false;
}
```

This is also the part I am most proud of, since it is the first time I have included AI in one of my projects (even though I didn’t train the model myself).
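A possible refinement of the request above, sketched under assumptions: the Chat Completions API accepts a "system" message alongside the "user" message. The pet instructions can therefore be kept separate from whatever the user types, instead of being appended to it. The buildMessages() helper is hypothetical, and its wording paraphrases the original prompt. Its result would be passed as the messages field of the same request body.

```javascript
// Hypothetical helper: pet instructions go in a "system" message and the
// user's words are sent unchanged as the "user" message.
function buildMessages(userInput, currentMood) {
  const instructions =
    "You are a pet cat, not an assistant. Your mood is " + currentMood +
    " on a scale from 1 (unhappy) to 10 (happy). Evaluate the tone of the user's message. " +
    "If it is mean or unfriendly, decrease your mood; otherwise increase it, " +
    "never going below 1 or above 10. Respond in the format " +
    "'Your cat felt ... and ..., and she ... pet mood: N', where N is your new mood.";
  return [
    { role: "system", content: instructions },
    { role: "user", content: userInput },
  ];
}
```

Keeping the user's text in its own message means it can no longer run into the instructions, and the prompt can be edited in one place.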

Areas for improvement:

There are many areas for improvement:

- The cat moves on wheels only, so her movements are very different from a real cat's.
- There are very few commands so far. In the future, I could add more.
- The AI model I used does not seem smart enough. Its responses are sometimes invalid or unpredictable, so I may need to revise the prompt so that it does exactly what I ask.
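One way to make invalid replies easier to catch is to change the prompt so the model answers only with a JSON object such as {"reply": "...", "mood": 7}. Every reply can then be validated before use, falling back to the previous mood when validation fails. The parseCatReply() function and the JSON shape are hypothetical. So is the rule that the mood moves at most one step per message, since the original prompt does not say how much the mood should change.

```javascript
// Hypothetical validator for a JSON-only reply such as
// {"reply": "Your cat purrs...", "mood": 7}.
function parseCatReply(text, previousMood) {
  const fallback = { reply: text, mood: previousMood, valid: false };
  let data;
  try {
    data = JSON.parse(text);
  } catch (e) {
    return fallback; // not JSON at all, e.g. the old "pet mood: 7" format
  }
  if (data === null || typeof data.reply !== "string" || !Number.isInteger(data.mood)) {
    return fallback; // missing fields or a non-integer mood
  }
  // Keep the mood on the 1-10 scale and let it move at most one step.
  const clamped = Math.min(10, Math.max(1, data.mood));
  const step = Math.max(-1, Math.min(1, clamped - previousMood));
  return { reply: data.reply, mood: previousMood + step, valid: true };
}
```

When valid is false, the sketch could show the raw text and keep the old mood, so a bad reply never pushes the cat's mood outside the 1 to 10 scale.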