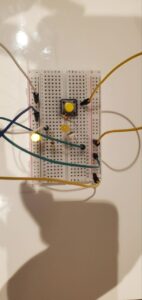

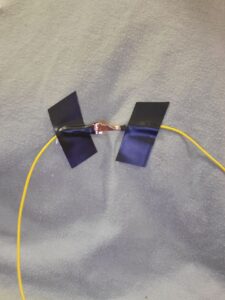

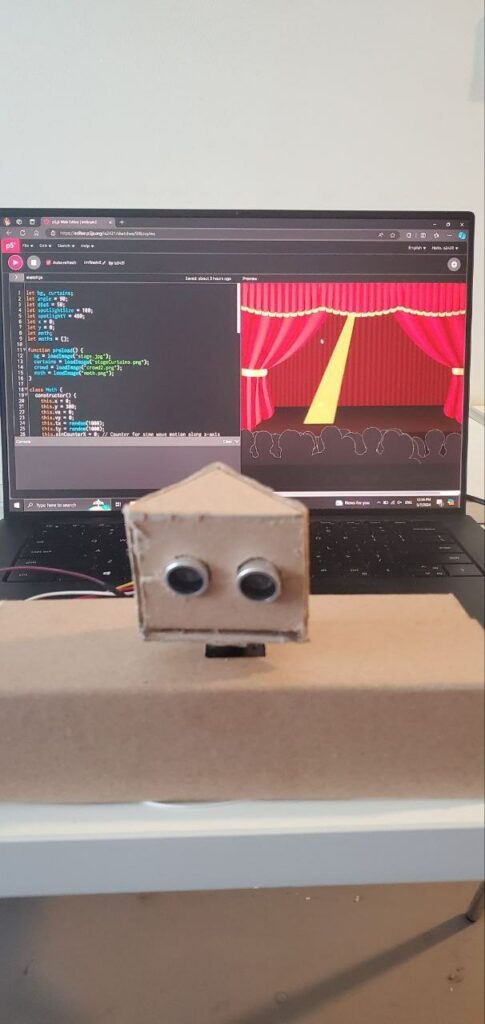

At its core, the Meditative Moth is a physical setup: an ultrasonic sensor mounted on a servo motor, which sweeps back and forth to detect objects within range. The servo angle and measured distance are sent over serial to a p5.js sketch running on a laptop, where a virtual moth mirrors the movements of the physical sensor on screen. Adding another layer to the experience, an on-screen stage light follows the virtual moth's path, creating an immersive and dynamic visual display.

The interaction is simple yet profound. As the sensor detects objects, the virtual moth flutters and dances across the screen, its movements guided by the presence and position of the objects. This interplay between the physical and digital, the real and the virtual, encourages us to reflect on our own attention and how we engage with the world around us.

The question of control in the Meditative Moth project adds a layer of intrigue to its artistic interpretation. Whether your movements directly guide the moth’s flight, with the spotlight following in its wake, or you command the spotlight, drawing the moth towards its illumination, the experience delves into the complexities of attention. The first scenario emphasizes conscious direction, where you actively choose your focus, while the second highlights the subconscious forces that influence our attention, drawing us towards certain stimuli. Ultimately, the ambiguity of control invites contemplation on the intricate interplay between conscious choice and subconscious influence, prompting us to explore the depths of our own attention and its ever-shifting nature.

Arduino and p5 files:

Arduino code:

```cpp
#include <Servo.h>

// Servo and ultrasonic sensor pins
const int servoPin = 9;
const int trigPin = 10;
const int echoPin = 11;

// Scan limits (degrees) and detection threshold (cm)
const int minAngle = 20;
const int maxAngle = 120;
const int minDist = 50;        // a reading closer than this counts as a target

int distance = 999;            // latest reading in cm
int targetAngle = 90;          // initial servo position / last angle a target was seen
int sweepDirection = 1;        // sweep direction: 1 for right, -1 for left

Servo myServo;

void setup() {
  myServo.attach(servoPin);
  pinMode(trigPin, OUTPUT);
  pinMode(echoPin, INPUT);
  Serial.begin(9600);
}

void loop() {
  // Scan the full range for objects
  if (scanForObjects()) {
    // Hold on the target; when it leaves, search nearby before rescanning.
    // Looping here (instead of the functions calling each other) keeps the
    // call stack from growing while a person stays in front of the sensor.
    do {
      trackObject();
    } while (sweepForObjects());
  }
  delay(50);
}

// Move the servo, take one reading, and send "angle,distance" to p5.js.
// Returns true if the reading is within minDist.
bool readAt(int angle) {
  myServo.write(angle);
  delay(50);                   // let the servo settle and old echoes die out
  distance = getDistance();
  Serial.print(angle);
  Serial.print(',');
  Serial.println(distance);
  return distance < minDist;
}

// Sweep the whole range in 2-degree steps; stop at the first target found.
bool scanForObjects() {
  for (int angle = minAngle; angle <= maxAngle; angle += 2) {
    if (readAt(angle)) {
      targetAngle = angle;
      return true;
    }
  }
  return false;
}

// Stay pointed at the target while it remains within range.
void trackObject() {
  myServo.write(targetAngle);
  while (distance < minDist) {
    delay(50);                 // pinging back-to-back can pick up stray echoes
    distance = getDistance();
  }
}

// Look for the target near its last position: step from targetAngle toward
// one end of the range, then from targetAngle toward the other end.
// Returns true if the target was re-acquired.
bool sweepForObjects() {
  int startAngle = targetAngle;
  for (int i = 0; i < 2; i++) {  // sweep left and right
    for (int angle = startAngle; angle >= minAngle && angle <= maxAngle; angle += sweepDirection) {
      if (readAt(angle)) {
        targetAngle = angle;
        return true;
      }
    }
    sweepDirection *= -1;        // next pass goes the other way
  }
  return false;                  // not found: loop() falls back to a full scan
}

// Trigger one ping and convert the echo time to centimetres.
int getDistance() {
  digitalWrite(trigPin, LOW);
  delayMicroseconds(2);
  digitalWrite(trigPin, HIGH);
  delayMicroseconds(10);
  digitalWrite(trigPin, LOW);

  // Give up after 30 ms instead of the default 1 s; pulseIn() returns 0 on timeout
  unsigned long duration = pulseIn(echoPin, HIGH, 30000UL);
  if (duration == 0) {
    return 999;                  // no echo: "nothing in range", not 0 cm
  }
  // Sound travels roughly 1 cm every 29 us; halve for the round trip
  return duration / 29 / 2;
}
```

p5 Project (the project will not display anything without an Arduino):

p5 Project (non-interactive version, no Arduino needed):

Implementation:

Here’s a breakdown of the different components:

- Physical Setup: The ultrasonic sensor and servo motor act as the eyes of the project, constantly scanning for objects and relaying their positions.

- Arduino Code: The Arduino acts as the brain, controlling the servo motor and sending angle and distance data to the p5.js sketch via serial communication.

- p5.js Sketch: The sketch receives the data and translates it into the movements of the virtual moth and spotlight on the screen. The moth’s flight path, as well as the spotlight’s location, directly corresponds to the detected object’s position.


Reflections and Future Directions:

One of the project's highlights is the successful implementation of the tracking algorithm in the Arduino code. Although it does not track as well as I initially wanted, this is more of a hardware issue than a code issue. Tracking is what ties the physical sensor to the virtual moth, and that interplay between the physical and virtual environments forms the foundation of the entire experience, making it captivating and thought-provoking for the audience.

At the end of the project, right before the deadline, my laptop installed an update, after which I ran into a severe error that prevented me from connecting my Arduino to p5. I tried many ways to debug it and eventually even borrowed a new laptop from the library. None of that worked directly; however, when I plugged the Arduino into the new laptop, something in it updated, and the next time I plugged it into my own laptop, the project started working again.

Looking ahead, there are many possibilities to enhance the Meditative Moth:

- Enhanced Visuals: Refining the visual representation of the moth and the stage light effects could create an even more mesmerizing and aesthetically pleasing experience.

- Auditory Expansion: Introducing music and crowd cheering could deepen the audience's engagement and further enrich the meditative aspects of the project.

- Movement Exploration: Experimenting with different movement patterns and behaviors for the virtual moth could evoke a wider range of emotional responses and add another layer of depth to the project.

IM Fest

The moth did not see much time on-stage during the IM Festival, for several reasons. For one, it was not a game, so it struggled to hold people's attention: in a hall full of fun games, a small art project was unlikely to stand out, especially since its message is not immediately apparent.

Additionally, people found the controls unintuitive. Nothing in the project made it clear that there was an optimal distance at which the sensor could track the viewer. Many people, I noticed, tried to activate the sensor by putting their hands in front of it; this never worked. I think I should have put some tape on the floor to mark the optimal range for interacting with the moth.

My monitor would often turn off during the festival, hiding the moth entirely. I tried running a YouTube video in the background to keep it awake, but this did not stop the monitor from turning off.

I would occasionally see the sensor activate when people walked past it. This grabbed their attention, but not enough to make them engage with the whole project. I also saw the sensor sometimes tracking people who were interacting with the adjacent projects. This at least told me that the sensor and servo were doing exactly what I wanted them to do; unfortunately, not many people were paying attention to see it.