Concept

My original idea was to create an interactive world map where users could click on a region to hear the music typical of that area, introducing them to different cultures. I later shifted to a project where users hear the languages spoken in those regions instead. I found this idea exciting because it gives users a chance to become familiar with various dialects and accents. At one point I questioned whether I was just building a translator, something readily available through apps like Google Translate. But then I realized the simplicity of my concept: a straightforward map highlighting regions with less commonly known languages. When users select one of these areas, they hear their own words spoken back to them in the local language. My inspiration came from the installations at Lulu Island, Manarat Abu Dhabi.

How does the implementation work?

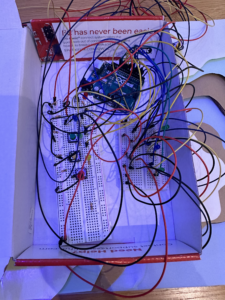

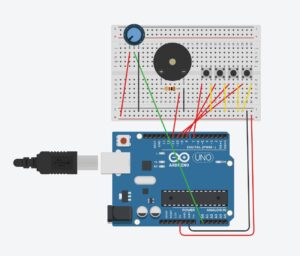

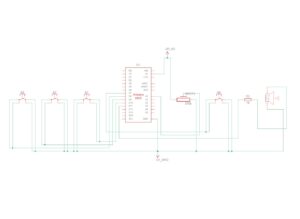

I used switches connected to the Arduino for input handling. Each switch corresponds to a country, and when a user presses a switch, the Arduino records which country was selected. I also integrated a microphone to capture the user’s spoken words, and the Arduino was set up to process this input when the user spoke. For the visual interface I used p5.js, which handled the graphical part of the project: it provided visual feedback when a country was selected and showed the status of voice input and translation. After the audio input is captured, p5.js handles the audio data, possibly sending it to a server for speech recognition and translation, and the translated text is then converted back into audio. An important part of the implementation was the interaction between the Arduino and p5.js, which use serial communication to pass the selected country and the captured audio from the Arduino to the p5.js application. I also had to implement a speech recognition module to transcribe the spoken words into text, and I used a translation API to translate that text into the selected language. Finally, I made sure the speech-to-text, translation, and text-to-speech steps worked properly and in the right order.
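The ordering described above can be sketched as a small chain of three steps. This is only an illustration under stated assumptions: `runPipeline` and the three stage functions (`recognize`, `translate`, `speak`) are hypothetical names introduced here, and the stub stages stand in for the real speech library and translation API.

```javascript
// Minimal sketch of the speech-to-text -> translation -> text-to-speech order.
// The stage functions are hypothetical stand-ins supplied by the caller.
async function runPipeline(stages, audio, targetLang) {
  const heard = await stages.recognize(audio); // 1. speech-to-text
  if (!heard || heard.trim() === "") {
    // Nothing was recognized, so skip translation and speech entirely.
    return { heard: "", translated: "", spoken: false };
  }
  const source = heard.trim();
  const translated = await stages.translate(source, targetLang); // 2. translation
  await stages.speak(translated, targetLang); // 3. text-to-speech
  return { heard: source, translated, spoken: true };
}

// Example with stub stages that record the order in which they run.
const callOrder = [];
const stubStages = {
  recognize: async () => {
    callOrder.push("recognize");
    return "hello";
  },
  translate: async (text, lang) => {
    callOrder.push("translate");
    return `${text} [${lang}]`;
  },
  speak: async () => {
    callOrder.push("speak");
  },
};
const pipelineResult = runPipeline(stubStages, null, "tr-TR");
```

Because each step awaits the previous one, the speech step can never start before the translation has arrived, which is the property the project needed.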

Description of interaction design

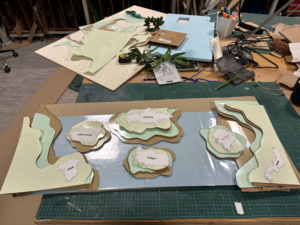

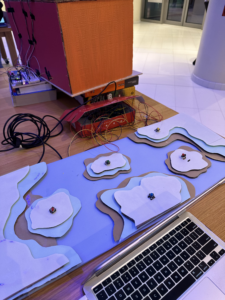

Initially, the design was supposed to be a layered, laser-cut map of the world. However, I was limited in what I could use, and I could only use 6 digital Arduino pins for switches, so I had to choose 6 countries, map them out on the world, and place each switch at its country's location. Because I was not able to use the laser machines, I decided to DIY my map: I used the available contrasting colored papers, laminated them to make the surface strong, and then layered them on top of each other (with holes so that wiring the switches was easier).

Description of Arduino code + code snippets

This Arduino script handles inputs from six switches, each corresponding to a unique country, and controls an LED and serial output for each switch. When a switch is pressed, the associated LED turns on and a specific number is sent via serial to the connected p5.js application, indicating the country selected. Each button press lights the LED and triggers a serial print, and the LED turns off again after a delay, giving clear feedback for each interaction. The code reacts only to the change from released to pressed, and the delays help debounce the switches so that a single press does not cause multiple activations.

#include <Arduino.h>

const int switchPin1 = 2; //turkish

const int switchPin2 = 3; //korean

const int switchPin3 = 4; //arabic

const int switchPin4 = 5; //spanish

const int switchPin5 = 6; //Russian

const int switchPin6 = 7; //Japanese

const int ledPin1 = 8; //turkish

const int ledPin2 = 9; //korean

const int ledPin3 = 10; //arabic

const int ledPin4 = 11; //spanish

const int ledPin5 = 12; //Russian

const int ledPin6 = 13; //Japanese

// Variables to store the last state of each button

bool lastState1 = HIGH; //turkish

bool lastState2 = HIGH; //korean

bool lastState3 = HIGH; //arabic

bool lastState4 = HIGH;

bool lastState5 = HIGH;

bool lastState6 = HIGH;

void setup() {

pinMode(ledPin1, OUTPUT); //turkish

pinMode(ledPin2, OUTPUT); //korean

pinMode(ledPin3, OUTPUT); //arabic

pinMode(ledPin4, OUTPUT);

pinMode(ledPin5, OUTPUT);

pinMode(ledPin6, OUTPUT);

pinMode(switchPin1, INPUT_PULLUP); //turkish

pinMode(switchPin2, INPUT_PULLUP); //korean

pinMode(switchPin3, INPUT_PULLUP); //arabic

pinMode(switchPin4, INPUT_PULLUP);

pinMode(switchPin5, INPUT_PULLUP);

pinMode(switchPin6, INPUT_PULLUP);

Serial.begin(9600);

}

void loop() {

bool currentState1 = digitalRead(switchPin1); //turkish

bool currentState2 = digitalRead(switchPin2); //korean

bool currentState3 = digitalRead(switchPin3); //arabic

bool currentState4 = digitalRead(switchPin4);

bool currentState5 = digitalRead(switchPin5);

bool currentState6 = digitalRead(switchPin6);

// Check if button 1 was pressed (state change from HIGH to LOW)

if (lastState1 == HIGH && currentState1 == LOW) { //turkish

digitalWrite(ledPin1, HIGH); // Turn on LED

Serial.println("1"); // Send "1" to p5.js

delay(3000); // Keep the LED on for 3 seconds (the loop is blocked meanwhile)

digitalWrite(ledPin1, LOW); // Turn off LED

}

// Check if button 2 was pressed (state change from HIGH to LOW)

if (lastState2 == HIGH && currentState2 == LOW) { //korean

digitalWrite(ledPin2, HIGH); // Turn on LED

Serial.println("2"); // Send "2" to p5.js

delay(3000); // Keep the LED on for 3 seconds (the loop is blocked meanwhile)

digitalWrite(ledPin2, LOW); // Turn off LED

}

// Check if button 3 was pressed (state change from HIGH to LOW)

if (lastState3 == HIGH && currentState3 == LOW) { //arabic

digitalWrite(ledPin3, HIGH); // Turn on LED

Serial.println("3"); // Send "3" to p5.js

delay(3000); // Keep the LED on for 3 seconds (the loop is blocked meanwhile)

digitalWrite(ledPin3, LOW); // Turn off LED

}

// Check if button 4 was pressed (state change from HIGH to LOW)

if (lastState4 == HIGH && currentState4 == LOW) {

digitalWrite(ledPin4, HIGH); // Turn on LED

Serial.println("4"); // Send "4" to p5.js

delay(10); // Short debounce delay

digitalWrite(ledPin4, LOW); // Turn off LED

}

// Check if button 5 was pressed (state change from HIGH to LOW)

if (lastState5 == HIGH && currentState5 == LOW) {

digitalWrite(ledPin5, HIGH); // Turn on LED

Serial.println("5"); // Send "5" to p5.js

delay(3000); // Keep the LED on for 3 seconds (the loop is blocked meanwhile)

digitalWrite(ledPin5, LOW); // Turn off LED

}

// Check if button 6 was pressed (state change from HIGH to LOW)

if (lastState6 == HIGH && currentState6 == LOW) {

digitalWrite(ledPin6, HIGH); // Turn on LED

Serial.println("6"); // Send "6" to p5.js

delay(3000); // Keep the LED on for 3 seconds (the loop is blocked meanwhile)

digitalWrite(ledPin6, LOW); // Turn off LED

}

// Update last states

lastState1 = currentState1;

lastState2 = currentState2;

lastState3 = currentState3;

lastState4 = currentState4;

lastState5 = currentState5;

lastState6 = currentState6;

delay(100); // Optional: Additional delay to reduce loop cycling speed

}
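Each `Serial.println` call in the sketch above sends the number followed by `\r\n`. On the receiving side, a stream reader may hand over chunks that do not line up with those line endings, so one way to recover whole messages is to buffer the text until a newline arrives. The following is a minimal sketch of that idea; `createLineBuffer` is a hypothetical helper written for illustration and is not part of p5.js or the browser's serial API.

```javascript
// Minimal sketch: reassemble complete lines from arbitrarily split serial chunks.
// `createLineBuffer` is a hypothetical helper, not a library function.
function createLineBuffer(onLine) {
  let pending = ""; // text received so far that has not ended in "\n"
  return function pushChunk(chunk) {
    pending += chunk;
    const parts = pending.split("\n");
    pending = parts.pop(); // the last piece has no newline yet; keep it
    for (const part of parts) {
      const line = part.trim(); // removes the "\r" that println adds
      if (line !== "") onLine(line);
    }
  };
}

// Example: three chunks that split the messages at awkward points.
const receivedLines = [];
const pushChunk = createLineBuffer((line) => receivedLines.push(line));
pushChunk("1\r");       // incomplete line, nothing delivered yet
pushChunk("\n2\r\n3");  // completes "1", delivers "2", holds "3"
pushChunk("\r\n");      // completes "3"
```

With single-digit messages the difference is rarely visible, but buffering this way avoids acting on half a line if the messages ever grow longer.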

Description of p5.js code + code snippets + embedded sketch

This p5.js code manages a web-based interface that integrates with the Arduino for the language translation project. It handles speech recognition and synthesis, and changes UI states to guide the user through the different stages: from the initial welcome, through the instructions, to active speech input. The script uses serial communication to receive the language selection from the Arduino, updates the UI based on this input, and switches between different background images to reflect the current state. The user's speech is converted to text, translated into the selected language, and spoken back, providing an interactive system.

// Global variables for speech recognition and synthesis

let speechRec, speech, output, fromText, toText, langFrom, langTo, latestData;

let serial; // Serial communication object

let port, reader, inputDone, inputStream; // Web Serial objects used by connectSerial()

let screenState = "welcome";

let languages = {};

let bg_img1, bg_img2, bg_img3, bg_img4;

let prevData="0";

function preload(){

bg_img1 = loadImage('assets/Screen1.png');

bg_img2 = loadImage('assets/Screen2.png');

bg_img3 = loadImage('assets/Screen3.png');

bg_img4 = loadImage('assets/Screen1.png');

}

function setup() {

// bg_img1.loadPixels();

// bg_img2.loadPixels();

// bg_img3.loadPixels();

// bg_img4.loadPixels();

// noCanvas();

createCanvas(windowWidth, windowHeight);

// DOM elements

output = select("#speech");

fromText = select("#from-text");

toText = select("#to-text");

langFrom = select("#lang-from");

langTo = select("#lang-to");

// Populate language dropdowns

// populateLanguageOptions();

// Initialize serial communication

serial = new p5.SerialPort();

// Event handlers for serial communication

serial.on("connected", serverConnected);

serial.on("open", portOpen);

// serial.on("data", serialEvent);

// serial.on("error", serialError);

// serial.on("close", portClose);

// select("#connect").mousePressed(connectSerial);

// Open the serial port to your Arduino

serial.open("/dev/cu.usbmodem1201"); // Adjust to match your Arduino's serial port

}

async function connectSerial() {

// Prompt user to select any serial port.

port = await navigator.serial.requestPort();

// Wait for the serial port to open.

await port.open({ baudRate: 9600 });

let decoder = new TextDecoderStream();

inputDone = port.readable.pipeTo(decoder.writable);

inputStream = decoder.readable;

reader = inputStream.getReader();

readLoop();

loop(); // Start the draw loop again

}

async function readLoop() {

while (true) {

const { value, done } = await reader.read();

if (value) {

console.log(`Received: ${value}`);

latestData = value.trim();

}

if (done) {

console.log("Closed the reader stream");

reader.releaseLock();

break;

}

}

}

function draw() {

// Use the draw function to react to changes in data received from Arduino

if (screenState === "welcome") {

// background(220, 0, 0);

image(bg_img1,0,0,windowWidth, windowHeight);

}

else if (screenState === "instruction") {

// background(0, 0, 255);

image(bg_img2,0,0,windowWidth, windowHeight);

}

else if (screenState === "speak") {

// background(0, 255, 0);

image(bg_img3,0,0,windowWidth, windowHeight);

serialLanguageChange();

text("Please Speak What's on Your Mind", windowWidth/4, (windowHeight/4 - 50));

text(fromText.value(), windowWidth/4, windowHeight/4)

text(languages[langTo.value()], windowWidth/4, (windowHeight/4 + 50))

text(toText.value(), windowWidth/4, (windowHeight/4 + 100))

}

else {

// background(100);

console.log('last_screen');

image(bg_img4,0,0,windowWidth, windowHeight);

}

}

// Populate language selection dropdowns

function populateLanguageOptions() {

languages = {

"en-GB": "English",

"tr-TR": "Turkish",

"ko-KR": "Korean",

"ar-SA": "Arabic",

"ru-RU": "Russian",

"ja-JP": "Japanese",

"es-ES": "Spanish",

};

for (const [code, name] of Object.entries(languages)) {

langFrom.option(name, code);

langTo.option(name, code);

}

langFrom.selected("en-GB"); // Default language from

langTo.selected("en-GB"); // Default language to

}

// Callback for received speech

function gotSpeech() {

if (speechRec.resultValue) {

let said = speechRec.resultString;

// output.html("okay");

fromText.value(said);

}

}

// Serial data event handling

function serialEvent() {

// console.log("In Serial Event");

// let data = serial.readStringUntil("\r\n").trim(); // Read incoming data

// console.log("Received from Arduino:", data); // Debugging log

// if (latestData == "1" && prevData!="1") {

// console.log(data, "in");

// changeLanguage("tr-TR"); // Change to Turkish

// prevData=1;

// } else if (latestData == "2" && prevData!="2") {

// changeLanguage("ko-KR"); // Change to Korean

// prevData=2;

// } else if (latestData === "3" && prevData!="3") {

// changeLanguage("ar-SA"); // Change to Arabic

// prevData=3;

// } else if (latestData === "4" && prevData!="4") {

// changeLanguage("ru-RU"); // Change to Russian

// prevData=4;

// } else if (latestData === "5" && prevData!="5") {

// changeLanguage("ja-JP"); // Change to Japanese

// prevData=5;

// } else if (latestData === "6" && prevData!="6") {

// changeLanguage("uk-UA"); // Change to Ukrainian

// prevData=6;

// }

}

// Change the translation language and translate text

function changeLanguage(langCode) {

console.log("Changing language to:", langCode); // Debugging log

console.log(prevData);

langTo.selected(langCode); // Set translation language

if (fromText.value().trim() !== "") {

translateText(); // Translate the text if non-empty

}

}

// Translate text using an external API

function translateText() {

let text = fromText.value().trim();

let translateFrom = langFrom.value();

let translateTo = langTo.value();

console.log("Translating from", translateFrom, "to", translateTo); // Debugging log

let apiUrl = `https://api.mymemory.translated.net/get?q=${encodeURIComponent(

text

)}&langpair=${encodeURIComponent(translateFrom)}|${encodeURIComponent(

translateTo

)}`;

fetch(apiUrl)

.then((response) => response.json())

.then((data) => {

let translatedText = data.responseData.translatedText;

toText.value(translatedText);

console.log("Translation complete:", translatedText); // Debugging log

speakText(translatedText, translateTo);

})

.catch((err) => console.error("Translation error:", err));

}

// Speak out the translated text

function speakText(text, lang) {

speech.setLang(lang); // Set the speech language

speech.speak(text); // Speak the text

}

// Serial event handlers

function serverConnected() {

console.log("Connected to Serial Server");

}

function portOpen() {

console.log("The serial port is open.");

}

function serialError(err) {

console.log("Serial Error:", err);

}

function portClose() {

console.log("The serial port is closed.");

}

function keyTyped() {

if (screenState === "welcome") {

if (key == "c") {

connectSerial()

}

if (keyCode == ENTER) {

screenState = "instruction";

}

}

else if (screenState === "instruction") {

if (keyCode == ENTER) {

htmlElements();

populateLanguageOptions();

// Initialize speech recognition and synthesis

speechRec = new p5.SpeechRec("en-US", gotSpeech);

speech = new p5.Speech();

speechRec.continuous = true;

speechRec.interimResults = false;

speechRec.start();

serial.on("data", serialEvent);

serial.on("error", serialError);

serial.on("close", portClose);

screenState = "speak";

}

}

else if (screenState === "speak") {

if (key === "r") {

removeHtmlElements();

resetAll();

screenState = "welcome";

}

}

}

function htmlElements() {

fromText = createInput('');

fromText.position(-1000, -30);

fromText.size(160);

fromText.attribute('placeholder', 'Text to translate');

fromText.id('from-text'); // Assign ID for consistency

toText = createInput('');

toText.position(-1000, -60);

toText.size(160);

toText.attribute('placeholder', 'Translated text will appear here');

toText.attribute('disabled', true);

toText.id('to-text'); // Assign ID for consistency

langFrom = createSelect();

langFrom.position(-1000, -90);

langFrom.id('lang-from'); // Assign ID for consistency

langTo = createSelect();

langTo.position(-1000, -120);

langTo.id('lang-to');

}

function removeHtmlElements() {

if (fromText) {

fromText.remove();

fromText = null; // Clear the variable to prevent errors

}

if (toText) {

toText.remove();

toText = null; // Clear the variable to prevent errors

}

if (langFrom) {

langFrom.remove();

langFrom = null; // Clear the variable to prevent errors

}

if (langTo) {

langTo.remove();

langTo = null; // Clear the variable to prevent errors

}

}

function serialLanguageChange(){

if (latestData === "1" && prevData!="1") {

changeLanguage("tr-TR"); // Change to Turkish

prevData="1"

} else if (latestData === "2" && prevData!="2") {

changeLanguage("ko-KR"); // Change to Korean

prevData="2"

} else if (latestData === "3" && prevData!="3") {

changeLanguage("ar-SA"); // Change to Arabic

prevData="3"

} else if (latestData === "4" && prevData!="4") {

changeLanguage("ru-RU"); // Change to Russian

prevData="4"

} else if (latestData === "5" && prevData!="5") {

changeLanguage("ja-JP"); // Change to Japanese

prevData="5"

} else if (latestData === "6" && prevData!="6") {

changeLanguage("es-ES"); // Change to Spanish

prevData="6"

}

}

function resetAll() {

// Remove HTML elements

// removeHtmlElements();

// Reset the speech recognizer and synthesizer

// if (speechRec) {

// speechRec.stop(); // Stop the speech recognizer

// }

if (speech) {

speech.cancel(); // Stop any ongoing speech synthesis

}

// Optionally reset any other state, e.g., clearing input fields or logs

// if (output) {

// output.html(""); // Clear any displayed output

// }

// Reset the serial communication or any other interfaces

if (serial) {

serial.clear(); // Clear the data from the serial port

serial.close(); // Close the serial port

}

// Reset global variables if necessary

latestData = null;

prevData = "0"; // Allow the same country to be selected again after a reset

// if (speechRec) {

// speechRec.stop(); // Stop the speech recognizer if it's running

// }

// Reinitialize components if needed immediately

// setupSpeech();

// setupHtmlElements(); // Assume you have a function to setup HTML elements again if needed immediately

// populateLanguageOptions();

}
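The chain of `if`/`else if` branches in `serialLanguageChange()` repeats the same pattern six times, which makes it easy for a code and its comment to drift apart. A possible alternative, shown here only as a sketch, is a lookup table. `LANGUAGE_BY_CODE` and `nextLanguage` are hypothetical names introduced for this example; the codes and language tags are copied from the branches above.

```javascript
// Hypothetical lookup table: serial code -> language tag, mirroring the
// branches of serialLanguageChange() in the sketch above.
const LANGUAGE_BY_CODE = {
  "1": "tr-TR", // Turkish
  "2": "ko-KR", // Korean
  "3": "ar-SA", // Arabic
  "4": "ru-RU", // Russian
  "5": "ja-JP", // Japanese
  "6": "es-ES", // Spanish
};

// Returns the language tag to switch to, or null when nothing should change
// (unknown code, or the same code as the previous selection).
function nextLanguage(latest, previous) {
  if (latest === previous) return null;
  return Object.prototype.hasOwnProperty.call(LANGUAGE_BY_CODE, latest)
    ? LANGUAGE_BY_CODE[latest]
    : null;
}

// Possible use inside the sketch (changeLanguage, latestData and prevData
// are the names already defined in the p5.js code above):
//
//   const lang = nextLanguage(latestData, prevData);
//   if (lang) {
//     changeLanguage(lang);
//     prevData = latestData;
//   }
```

Adding a seventh country would then mean adding one line to the table rather than another branch.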

Since it is a full-screen sketch, here is the link:

https://editor.p5js.org/ib2419/full/ChiVj1B9N

Links to resources used

https://mymemory.translated.net/doc/spec.php

https://github.com/cmooredev/LibreTranslate/blob/main/README.md

Challenges faced and how you tried to overcome them

I faced challenges in three main phases. The first was ideation: deciding whether the project should be a music system presenting different regions or a language translator. The second was figuring out the Arduino: I planned for each country's button to have an LED that glows when the user presses it. The third was integrating the API and making it work alongside the serial communication with the Arduino. The API was giving me the translation, but connecting that to the p5.js speech library was another task that I had a really hard time figuring out. When I made the text-to-speech run after the translation, I also had to make sure that the translated text was spoken in that particular region’s language. At first the translated text was spoken with an English accent, like a foreigner trying to speak your native language, and fixing this took more time than any other error. Apart from that, integrating the images for the different screens gave me a hard time again when I added the last screen, but playing around with the code eventually helped me fix the error.
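One plausible way to approach the accent problem is to choose a synthesis voice whose language matches the translation target, rather than relying on the default voice. The sketch below is an assumption about how that could be done, not a record of the fix used in this project. `pickVoice` is a hypothetical helper, and the voice entries are made up; in a browser the list would come from `speechSynthesis.getVoices()`, whose entries expose `name` and `lang` fields.

```javascript
// Minimal sketch: pick a synthesis voice whose language matches the target.
// `pickVoice` is a hypothetical helper; `voices` is any array of objects
// with `name` and `lang` fields.
function pickVoice(voices, langTag) {
  // Some platforms report tags like "en_US", so normalize before comparing.
  const normalize = (tag) => String(tag).replace("_", "-").toLowerCase();
  const wanted = normalize(langTag);
  const base = wanted.split("-")[0];
  // Prefer an exact match ("tr-tr"), then any voice of the same base language ("tr").
  return (
    voices.find((v) => normalize(v.lang) === wanted) ||
    voices.find((v) => normalize(v.lang).split("-")[0] === base) ||
    null
  );
}

// Example with made-up voice entries (real lists differ per browser and OS).
const sampleVoices = [
  { name: "Voice A", lang: "en-US" },
  { name: "Voice B", lang: "es-MX" },
  { name: "Voice C", lang: "tr-TR" },
];
const turkishVoice = pickVoice(sampleVoices, "tr-TR"); // exact match
const spanishVoice = pickVoice(sampleVoices, "es-ES"); // same base language
const koreanVoice = pickVoice(sampleVoices, "ko-KR");  // no suitable voice
```

A `null` result means the device has no voice installed for that language, in which case the text would likely still be read by a default voice with the wrong accent.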

What are some areas for future improvement?

The API I used could not translate many languages; it handled mainly the common ones. I would also like a better physical interface that fits the Arduino inside it and hides all of the wiring and connections. I could also look into making more pins available so that I can add more countries.

IM Showcase documentation